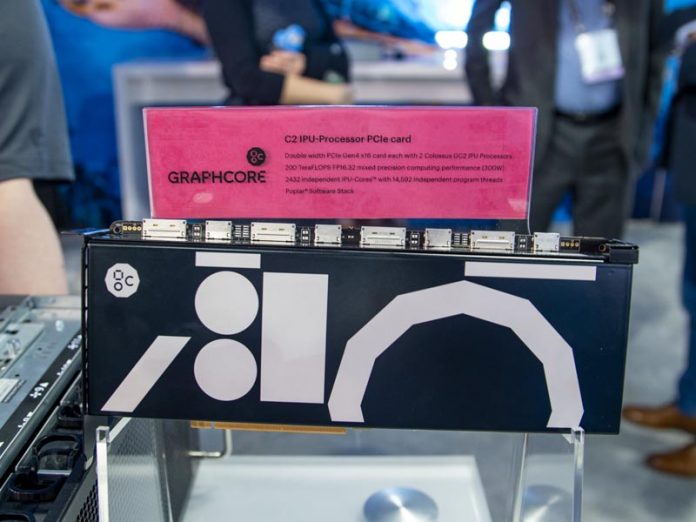

If you were to make a list of the top three AI hardware startups with products on the market today, Graphcore would easily make the cut. At Dell Tech World 2019, STH got Hands-on With a Graphcore C2 IPU PCIe Card. It was apparently just sitting at a booth, and most attendees had no idea what it was. At Supercomputing 2019 (SC19), STH saw not just a card but multiple Graphcore C2 IPU systems. We also found out that the Graphcore cards dropped one of our favorite features.

Graphcore C2 IPU at SC19

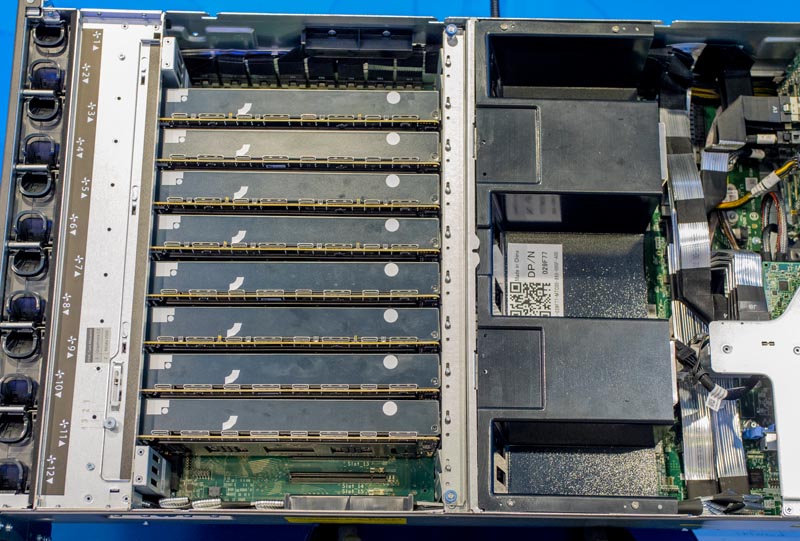

At SC19, there were several Graphcore C2 IPU PCIe systems with eight of the PCIe cards installed. Here is a Dell EMC DSS8440 with eight cards installed in a partner’s booth:

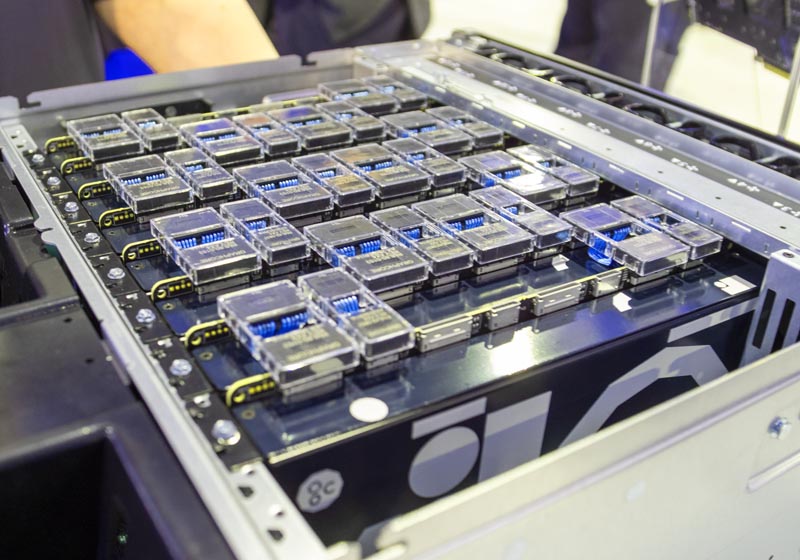

The Dell booth had a similar setup, except with the PCIe Gen4-based bridges installed. The DSS8440 can handle 10x NVIDIA Tesla V100s or other GPUs, similar to what we did in our DeepLearning11 10x GPU Server, but in that configuration inter-GPU communication happens over PCIe. With Graphcore’s PCIe solution, the top-of-card links create a fabric, which means inter-IPU traffic does not have to go through the underlying PCIe host. Instead, PCIe is used for communication between the IPUs and the host CPU, memory, and peripherals. This topology limits the Dell EMC DSS8440 with Graphcore IPUs to 8 cards.
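To make the traffic split concrete, here is a toy Python model of which interconnect carries a given transfer in a DSS8440-style chassis. It is a simplified sketch, not a description of Graphcore's or Dell's actual routing logic: the `Endpoint` enum and the `interconnect()` function are hypothetical names, and "host" lumps together the CPU, system memory, and peripherals.

```python
from enum import Enum, auto


class Endpoint(Enum):
    """Hypothetical endpoint types for this toy model."""
    ACCELERATOR = auto()  # an IPU or a GPU
    HOST = auto()         # host CPU, system memory, or peripherals


def interconnect(accelerator: str, src: Endpoint, dst: Endpoint) -> str:
    """Return which link carries a transfer in this simplified model."""
    if src is Endpoint.ACCELERATOR and dst is Endpoint.ACCELERATOR:
        if accelerator == "ipu":
            # Graphcore C2 cards: the top-of-card links form a fabric,
            # so accelerator-to-accelerator traffic skips the PCIe host.
            return "IPU-Link"
        # PCIe GPUs in this chassis talk to each other over PCIe.
        return "PCIe"
    # Any transfer that involves the host side goes over PCIe.
    return "PCIe"


if __name__ == "__main__":
    print(interconnect("ipu", Endpoint.ACCELERATOR, Endpoint.ACCELERATOR))
    print(interconnect("gpu", Endpoint.ACCELERATOR, Endpoint.ACCELERATOR))
    print(interconnect("ipu", Endpoint.ACCELERATOR, Endpoint.HOST))
```

The only case where the two platforms differ in this model is accelerator-to-accelerator traffic, which is exactly the distinction the paragraph above draws.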

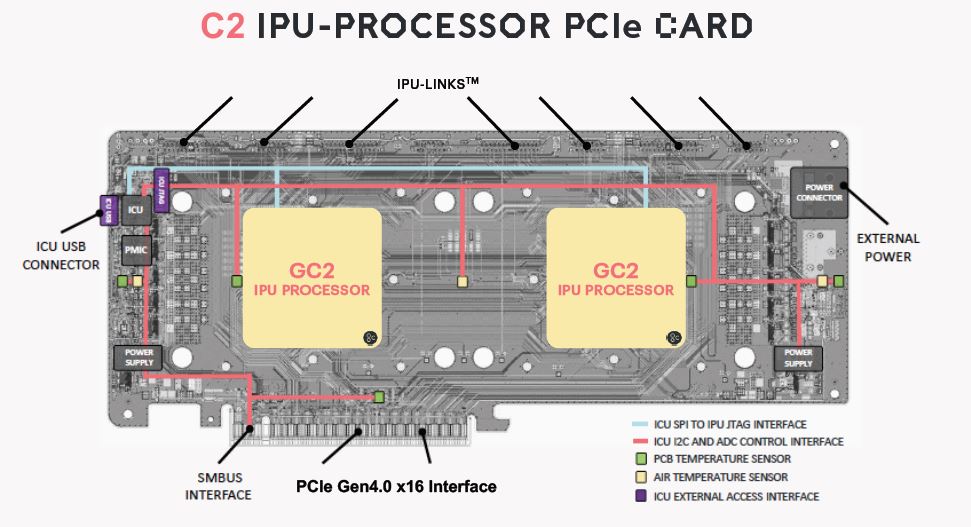

Each card has two Colossus GC2 processors onboard. You can see the basic diagram of the C2 cards including the two chips and top IPU-Links below:

Graphcore claims that the 300W C2 cards can deliver 200 TFLOPS of mixed-precision compute using both GC2 chips. Each of the two onboard GC2 IPUs has 1,216 IPU cores and 300MB of on-chip memory. Keeping that memory on-chip, next to the cores, is key to how Graphcore gets high performance from its solutions.
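A quick back-of-the-envelope calculation shows what those per-chip figures add up to per card and across an eight-card DSS8440. This sketch simply multiplies Graphcore's claimed numbers as quoted above; the constant and function names are our own, the TFLOPS figure is a claimed peak rather than a measured result, and the wattage counts only the cards, not the rest of the server.

```python
# Figures quoted in the article (Graphcore's claims, not STH measurements).
CHIPS_PER_CARD = 2
CORES_PER_CHIP = 1216
MEMORY_MB_PER_CHIP = 300
CARD_TFLOPS = 200       # mixed precision, both GC2 chips combined
CARD_WATTS = 300
CARDS_PER_SERVER = 8    # the DSS8440 configuration shown at SC19


def per_card() -> dict:
    """Derived figures for a single C2 card."""
    return {
        "cores": CHIPS_PER_CARD * CORES_PER_CHIP,
        "memory_mb": CHIPS_PER_CARD * MEMORY_MB_PER_CHIP,
        "tflops_per_chip": CARD_TFLOPS / CHIPS_PER_CARD,
        "tflops_per_watt": CARD_TFLOPS / CARD_WATTS,
    }


def per_server(cards: int = CARDS_PER_SERVER) -> dict:
    """Aggregate figures for a server populated with `cards` C2 cards."""
    return {
        "cores": cards * CHIPS_PER_CARD * CORES_PER_CHIP,
        "memory_mb": cards * CHIPS_PER_CARD * MEMORY_MB_PER_CHIP,
        "tflops": cards * CARD_TFLOPS,
        "card_watts": cards * CARD_WATTS,  # accelerator cards only
    }


if __name__ == "__main__":
    print(per_card())
    print(per_server())
```

Per card, that works out to 2,432 cores and 600MB of on-chip memory. An eight-card DSS8440 totals 19,456 cores, 4,800MB of on-chip memory, a claimed 1.6 PFLOPS of mixed-precision peak, and 2,400W of card power.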

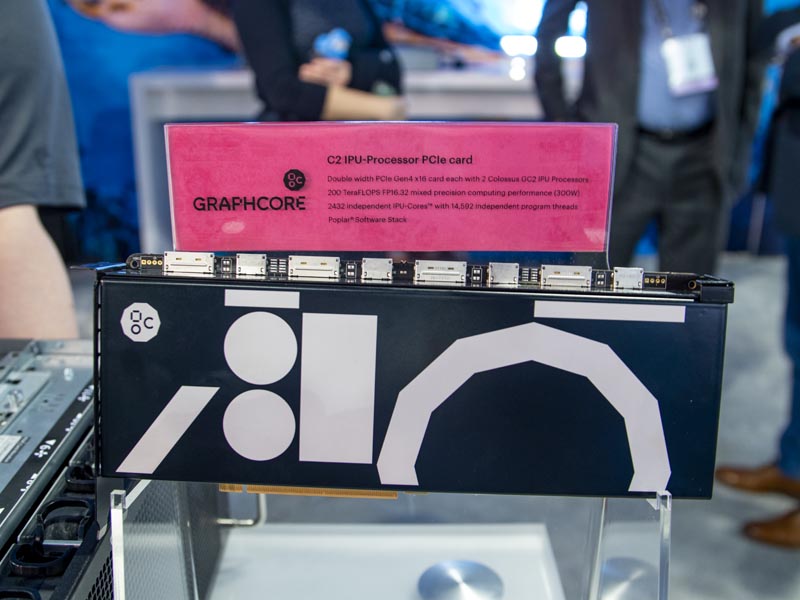

Many of our readers will have noticed by now a major visual change to the cards. At Dell Tech World 2019, the cards had a striking design on their airflow guides.

The production PCIe cards are more mundane, with a black and white image. Our Editor-in-Chief, Patrick, asked Graphcore about the change and relayed the answer: “Graphcore made this change because the more colorful design was harder to produce in a form that resists heat during operation. They simplified the cards to make them easier to manufacture, which makes sense since few will be seen outside of show floors.”

While the PCIe cards are interesting, we think Graphcore is looking toward higher-power parts. The company has been associated with Facebook’s OAM form factor from the start. As the Open Compute Project’s OAI gets ready to kick into full gear with platforms like the Inspur OAM UBB, we expect accelerators in the OAM form factor to gain popularity. Graphcore is specifically calling the product at SC19 the “C2 IPU-Processor PCIe card,” likely because the company is planning to offer an OAM solution in the future.

Final Words

These cards are designed to sit in 4U chassis in data centers where, if all goes well, nobody will ever see them. Still, the old air shroud design was striking and clearly differentiated the cards from the NVIDIA Tesla V100s you can see in our Inspur Systems NF5468M5 Review (PCIe) or our DeepLearning12 (SXM2) build. Since the paint was detrimental to the performance of the product, Graphcore made the right decision to drop the design. Until next time, here is a video with the old design.

The bigger story, of course, is that we are seeing not just production Graphcore C2 IPUs but also more Dell EMC DSS8440 servers with them at industry events, including in partner booths.

Interesting to see where this is headed. Will this hardware be for sale, or only available through cloud instances?

Hi k8s – I believe you can get them in the DSS8440 for on-prem.

There is still no clear understanding of how easy it is to develop for these cards.

There is still no sound, fundamental review of how they stack up against well-established competition.

> Each of the two onboard GC2 IPUs has 1,216 IPU cores and 300MB of memory.

300MB of on-SoC memory, with no other onboard memory.

The biggest issue with training models and other machine learning workloads is the size of the model.
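To put the 300MB-per-chip figure in perspective, here is a rough Python estimate of how many model parameters could fit in that memory if it held nothing else. The assumptions are ours, not Graphcore's: 1MB is taken as 10**6 bytes, activations and program code are ignored, and the 16-bytes-per-parameter figure is a common rule of thumb for mixed-precision training with the Adam optimizer (fp16 weights and gradients plus fp32 master weights and two fp32 optimizer moments). Real limits would be lower.

```python
# Assumption: 1 MB = 10**6 bytes; the vendor's exact capacity may differ.
ON_CHIP_BYTES = 300 * 10**6

# Assumed bytes per parameter; activations and program code are ignored.
BYTES_PER_PARAM = {
    "fp16 weights only (inference)": 2,
    "fp32 weights only": 4,
    # fp16 weights + fp16 grads + fp32 master weights + two fp32 Adam moments
    "mixed-precision training with Adam": 2 + 2 + 4 + 4 + 4,
}


def max_params(bytes_available: int, bytes_per_param: int) -> int:
    """Upper bound on parameters that fit if memory held nothing else."""
    return bytes_available // bytes_per_param


if __name__ == "__main__":
    for label, per_param in BYTES_PER_PARAM.items():
        count = max_params(ON_CHIP_BYTES, per_param)
        print(f"{label}: ~{count / 1e6:.2f}M parameters per chip")
```

Under these assumptions, a single chip tops out at roughly 150M parameters for fp16 inference, 75M for fp32 weights, and 18.75M for mixed-precision Adam training, before accounting for activations.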

So, clearly Graphcore has gone off the reservation with a potentially one-off architecture that severely limits which kinds of workflows it is optimized for, and there are types of workloads that would bring it to its knees. Again, there is still no disclosure of the development environment.

High-speed interconnects between cards that bypass PCIe are nothing new:

https://www.nvidia.com/en-us/data-center/dgx-2/

Intel just disclosed:

https://www.servethehome.com/intel-xe-hpc-gpu-is-something-to-get-excited-about/

https://www.servethehome.com/great-2021-intel-xeon-sapphire-rapids-with-xe-ponte-vecchio-gpu-and-oneapi-at-sc19/

Intel is at home in this market.

AMD is seeking a home here.

ARM is seeking a home. Big data providers already have their own TPU hardware.

NVIDIA is already at home here and is rapidly advancing its offering with a fully formed dev stack.

What list are these guys on? There still have been no real-world disclosures or demos that establish their product as more than vaporware. Please correct me if I’m wrong. Are they just hoping to be acqui-hired by Dell or something?

I’d like to know how much they cost. Is it $8K like a V100? More? Less?

NVIDIA Tesla V100s can be connected via NVLink too. I agree: compelling hardware and benchmark results. Impressive team, doing lots of cool stuff architecturally. It will be interesting to see NVIDIA’s Ampere chip next year. I keep thinking real competition will kick in with 7nm silicon.

Graphcore Benchmarks:

https://www.graphcore.ai/posts/new-graphcore-ipu-benchmarks