Our review of the Gigabyte R181-NA0 is going to cover one of our favorite types of platforms. With the Intel Xeon Scalable generation, we saw more than a simple processor transition. At the same time as the new platforms rolled out, we saw a shift to NVMe SSDs in a significant way. While previous generations utilized SATA SSDs in 10x 2.5″ arrays, with the Intel Xeon Scalable generation, the market transitioned to NVMe-first.

Why 10x NVMe 1U Systems Matter

Before delving into this review, we wanted to discuss why the 1U 10x NVMe form factor is unmistakably the form factor we like right now. Case in point, we have had an entire cluster of 1U 10x NVMe systems running as part of our DemoEval service for a software-defined storage client for over a year.

Utilizing 10x NVMe drives means a system will need to use 40x PCIe 3.0 lanes. Technically, this was possible from a single-socket Intel Xeon E5-2600 series CPU (V1-V4); however, it is not practical. The Intel Xeon E5 series was limited to 40x PCIe lanes per CPU, so that left other connectivity, such as NICs, connected to the PCH with very limited performance. That has essentially made dual-socket Intel platforms, like the Gigabyte R181-NA0, the go-to NVMe architecture. The AMD EPYC “P” series parts change this equation. However, when utilizing 100GbE, the four NUMA node design can still present some tuning challenges. With the Intel Xeon Scalable generation, we now have 48x PCIe 3.0 lanes per CPU, which has made 10x NVMe 1U systems even more exciting.
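The lane arithmetic above can be sketched in a few lines of Python. This is an illustrative tally only: the per-CPU lane counts and the x4 link per drive come from the text, while the function name `lanes_left` is made up for this example.

```python
# Rough PCIe lane budget for a 10x NVMe system.
# Lane counts per CPU are taken from the article; `lanes_left` is a
# hypothetical helper name used only for this illustration.
LANES_PER_NVME = 4  # each NVMe SSD uses a PCIe 3.0 x4 link


def lanes_left(cpu_lanes: int, sockets: int, drives: int = 10) -> int:
    """Return the CPU PCIe lanes remaining after attaching the drives."""
    return cpu_lanes * sockets - drives * LANES_PER_NVME


platforms = {
    "1x Xeon E5-2600 (40 lanes)": lanes_left(40, 1),
    "2x Xeon E5-2600 (40 lanes each)": lanes_left(40, 2),
    "1x Xeon Scalable (48 lanes)": lanes_left(48, 1),
    "2x Xeon Scalable (48 lanes each)": lanes_left(48, 2),
}

for name, spare in platforms.items():
    print(f"{name}: {spare} lanes left for NICs and other devices")
```

A single Xeon E5 ends up with nothing left over for networking, which is why the dual-socket layout became the default for this form factor.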

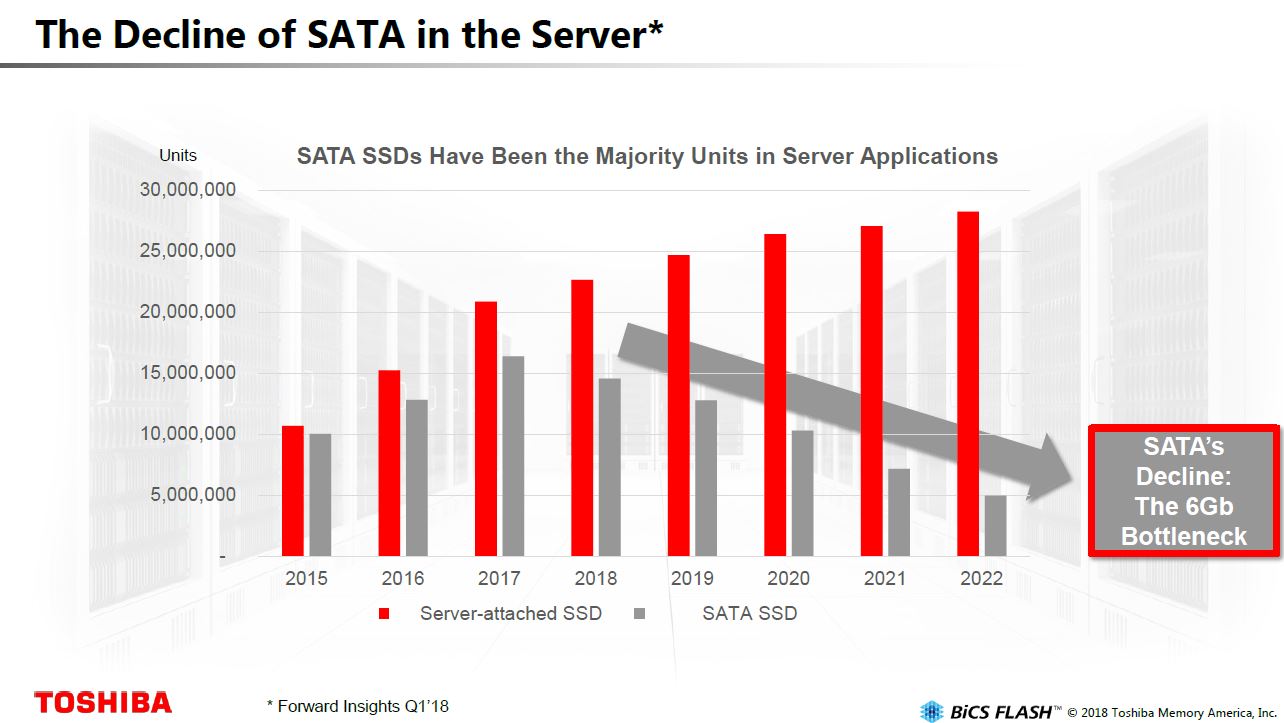

Beyond the processor architecture changes, Intel Xeon Scalable launched at the long-predicted changeover from SATA SSDs to NVMe SSDs. Over the past few quarters, we have seen a gradual decline of SATA SSDs in servers, and by most accounts that will continue. Here is a forecast slide we highlighted from Toshiba, a maker of SSDs, that shows this trend.

SATA has no roadmap past SATA III 6.0Gbps, yet today’s controllers and NAND can push a PCIe 3.0 x4 bus, which is around five or six times faster, to its limit.
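For a rough sense of the gap, here is a small sketch that compares the theoretical link rates. It uses the published signaling rates and line encodings (8b/10b for SATA III, 128b/130b for PCIe 3.0) and ignores protocol overhead, so real-world throughput on both interfaces will be lower. The helper name `usable_mb_per_s` is hypothetical.

```python
# Theoretical link bandwidth after line encoding, ignoring protocol overhead.
# `usable_mb_per_s` is a hypothetical helper written for this illustration.
def usable_mb_per_s(giga_per_s: float, payload_bits: int,
                    total_bits: int, lanes: int = 1) -> float:
    """Convert a raw signaling rate (Gbit/s or GT/s) into usable MB/s."""
    bits_per_s = giga_per_s * 1e9 * payload_bits / total_bits
    return bits_per_s / 8 / 1e6 * lanes


sata3 = usable_mb_per_s(6.0, 8, 10)                # SATA III, 8b/10b encoding
pcie3_x4 = usable_mb_per_s(8.0, 128, 130, lanes=4)  # PCIe 3.0 x4, 128b/130b

print(f"SATA III:    {sata3:.0f} MB/s")
print(f"PCIe 3.0 x4: {pcie3_x4:.0f} MB/s")
print(f"Ratio:       {pcie3_x4 / sata3:.1f}x")
```

On paper the ratio comes out a little above six. Once protocol overhead and drive limits are included, it lands in the five to six times range quoted above.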

That brings us to why the 1U 10x NVMe server is so important. One can utilize 10x NVMe SSDs, a large amount of RAM, and 25/40/50/100GbE networking to build out high throughput software-defined storage and hyper-converged clusters. 2U 24-bay Intel designs use PCIe switches. As we saw with the How Intel Xeon Changes Impacted Single Root Deep Learning Servers piece, the new Skylake-SP CPUs (and AMD EPYC for that matter) can only handle a PCIe 3.0 x16 backhaul from PCIe switch to CPU. In a 2U 24 bay chassis that means one has either 16 or 32 PCIe lanes to the drives. In effect, one adds the latency and power consumption of PCIe switches and loses bandwidth. The 1U 10x NVMe solutions do not need PCIe switches and thus provide full bandwidth to the drives.

With potential storage bandwidth hitting 30GB/s, these are simply awesome hyper-converged and software-defined storage building blocks. The 1U form factor also yields higher memory and compute density than the 2U designs.
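To put numbers on the switch trade-off, the sketch below compares drive-side lanes against host-side lanes for the layouts discussed above. It assumes roughly 0.985 GB/s of theoretical bandwidth per PCIe 3.0 lane and an x4 link per drive; `host_bandwidth` is a hypothetical helper, and the figures are ceilings rather than measured results.

```python
# Host-side bandwidth ceiling and oversubscription for NVMe backplanes.
# Assumes ~0.985 GB/s per PCIe 3.0 lane (8 GT/s, 128b/130b) and x4 drives;
# `host_bandwidth` is a hypothetical helper for illustration only.
PCIE3_GB_PER_LANE = 8e9 * 128 / 130 / 8 / 1e9


def host_bandwidth(drives, uplink_lanes=None):
    """Return (ceiling in GB/s, oversubscription ratio) for a drive array.

    uplink_lanes=None models drives wired directly to CPU lanes.
    """
    drive_lanes = drives * 4
    host_lanes = drive_lanes if uplink_lanes is None else min(uplink_lanes, drive_lanes)
    return host_lanes * PCIE3_GB_PER_LANE, drive_lanes / host_lanes


configs = {
    "1U 10-bay, direct attach": host_bandwidth(10),
    "2U 24-bay, one x16 uplink": host_bandwidth(24, 16),
    "2U 24-bay, two x16 uplinks": host_bandwidth(24, 32),
}

for name, (gb_s, ratio) in configs.items():
    print(f"{name}: {gb_s:.1f} GB/s ceiling, {ratio:.0f}:1 oversubscription")
```

The direct-attach 1U layout has a theoretical ceiling of about 39GB/s with no oversubscription, which leaves headroom above the 30GB/s figure. The switched 2U designs are oversubscribed 6:1 or 3:1.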

In this review, we are going to focus on how the Gigabyte R181-NA0 can fulfill these roles.

Gigabyte R181-NA0 Test Configuration Overview

Gigabyte sent us the base server for review, and we outfitted it with a few different configurations.

- Server: Gigabyte R181-NA0 1U server

- CPUs: 2x Intel Xeon Platinum 8180, 2x Intel Xeon Gold 6152, 2x Intel Xeon Gold 6138, 2x Intel Xeon Gold 5119T, 2x Intel Xeon Silver 4116, 2x Intel Xeon Bronze 3106

- RAM: 192GB in 12x 16GB DDR4-2666MHz RDIMMs

- SATA III Storage: 2x 128GB SATA DOMs

- 25GbE NIC: Broadcom 25GbE OCP Mezzanine

- 40GbE NIC: Mellanox ConnectX-3 EN Pro

- 100GbE NIC: Mellanox ConnectX-4

- NVMe SSDs: 10x Intel DC P3320 2TB, 10x Intel DC P3520 2TB, 10x Intel P3700 400GB

Given the focus of the review, we wanted to show NVMe performance. One realization we had was that we have many different types of SSDs. We also did plug and operational testing using Intel Optane 900p 280GB/480GB, Samsung XS1715 400GB, XM1725 800GB, and Intel DC P4510 2TB and 8TB drives. We only had three sets of 10x drives available in the lab, but they range from extreme value to high endurance SSDs.

As we go through the server hardware, we are going to show how networking and expansion options abound in the platform. The server has 1GbE networking built-in but we see the vast majority of servers based on this platform being deployed with 25/40/50/100GbE so we wanted to be sure to work with that. Unlike the Gigabyte R281-G30 we reviewed, the Gigabyte R181-NA0 only has one accessible OCP slot because the other OCP mezzanine slot is used for NVMe connectivity. We also had to swap out the 100GbE and 40GbE NICs for our tests.

On the RAM side, the system supports up to 24x RDIMMs. We used a single DIMM per channel configuration, but there is room for significant expansion here. We also used a variety of CPUs; however, we think that if you are buying this server you will move up the Xeon CPU stack well beyond the Xeon Bronze series. We still wanted to show a broad set of performance scenarios so you can get a sense of different options.

Next, we are going to delve into the hardware details. We will then look at the management interface and a block diagram of the platform. We are then going to look at performance, power consumption, and then give our final thoughts on the platform.

Only 2 UPI links? If true, it’s not really made for the Gold 6 series or Platinum, and to be honest, why would you need such an amount of compute power in a storage server?

I’ll stick to a brand that can handle 24 NVMe-drives with just one CPU.

@Misha,

You are obviously referring to a different CPU brand, since there is no single Intel CPU which can support 10x NVMe drives.

@BinkyTO,

10 NVMe’s is only 40 PCIe lanes, so it should be possible with Xeon Scalable; you just don’t have many lanes left for other equipment.

@misha hyperconverged is one of the fastest-growing sectors and a multi-billion dollar hardware market. You’d need two CPUs since you can’t do even a single 100G link on an x8.

I’d say this looks nice

@Tommy F

Only two 10.4 UPI links? You are easily satisfied.

24×4=96 PCIe lanes, so there are 32 left. Taking away 4 for chipsets etc. and a boot drive leaves you with 28 PCIe 3.0 lanes.

28PCIe-lanes x 985MB/s x 8bit = 220 Gbit/s, good enough for 2018.

And let’s not forget octa-channel memory (DDR4-2666) on 1 CPU (7551P) for $2,200 vs. 2x 5119T ($1555 each) with only 6-channel DDR4-2400.

In 2019 EPYC 2 will be released with PCIe-4, which has double the speed of PCIe-3.

Not taking into account Spectre, Meltdown, Foreshadow, etc.

@Patrick there’s a small error in the legend of the storage performance results. Both colors are labeled with read performance where I expect the black bars to represent write performance instead.

What I don’t see is the audience for this solution. With an effective raw capacity of 20TB maximum (and probably a 1:10 ratio between disk and platform cost), why would anyone buy this platform instead of a dedicated JBOF or other ruler format based platforms? The cost per TB as well as storage density of the server reviewed here seems to be significantly worse.

David – thanks for the catch. It is fixed. We also noted that we tried 8TB drives; we just did not have a set of 10 for the review. 2TB is now on the lower end of the capacity scale for new enterprise drives. There is a large market for these 10x NVMe 1U systems, which is why the form factor is so prevalent.

Misha – although I may personally like EPYC, and we have deployed some EPYC nodes into our hosting cluster, this review was not focused on that as an alternative. Most EPYC systems still crash if you try to hot-swap an NVMe SSD, while that feature just works on Intel systems. We actually use mostly NVMe AICs to avoid remote hands trying to remove/insert NVMe drives on EPYC systems.

Also, your assumption that you will be able to put an EPYC 2nd generation in an existing system and have it run PCIe Gen4 to all of the devices is incorrect. You are then using both CPUs and systems that do not currently exist to compare to a shipping product.

Few things:

1. Such a system with a single EPYC processor would save money for a customer who needs such a system, since you can do everything with a single CPU.

2. 10 NVMe drives with those fans – if you heavily use those CPUs (let’s say 60-90%), then the speed of those NVMe drives will drop rapidly, since those fans will run faster, sucking in more air from outside, and will cool the drives too much, which reduces the SSD read speed. I didn’t see anything about this mentioned in the article.

@Patrick – You can also buy EPYC systems that do NOT crash when hot-swapping an NVMe SSD; you even mentioned it in an earlier thread on STH.

I did not assume that you can swap out the EPYC1 with an EPYC2 and get PCIe-4. When it is just for more compute speed, it should work (same socket), as promised many times by AMD. When you want to make use of PCIe-4, you will need a new motherboard. When you want to upgrade from Xeon to Xeon Scalable, you have no choice: you have to upgrade both the MB and the CPU.

Hetz, we have a few hundred dual Xeon E5 and Scalable 10x NVMe 1U’s and have never seen read speeds drop due to fans.

@Patrick don’t feed the troll. I don’t envy that part of your job.

dell-emc-poweredge-r7415-review 2U 24 U2

aic-fb127-ag-innovative-nf1-amd-epyc-storage-solution 1U 36 NF1

Yes, too bad that the Dell R7415 which STH reviewed earlier this year was not used for comparison of NVMe performance with Epyc.

Also STH themselves reported that the R7415 would support hot-swap without crashing. So I don’t quite understand why crashes are used as an argument to not benchmark against Epyc.

https://www.servethehome.com/dell-emc-poweredge-r7415-nvme-hot-swap-amd-epyc-in-action/