Open Networking Software

By default, many of these switches shipped with Dell OS10. We are going to leave OS10 and the switch documentation to our readers for specific features, since compiling a complete list is difficult when there are hundreds of features our readers care about. One advantage here is that, in addition to OS10 and unlike the S5100F-ON series, this switch's support extends well beyond Dell's own NOS. Instead, we wanted to focus on some of the options out there for those who do not want to run Dell OS10. After all, this is a “-ON” open networking switch.

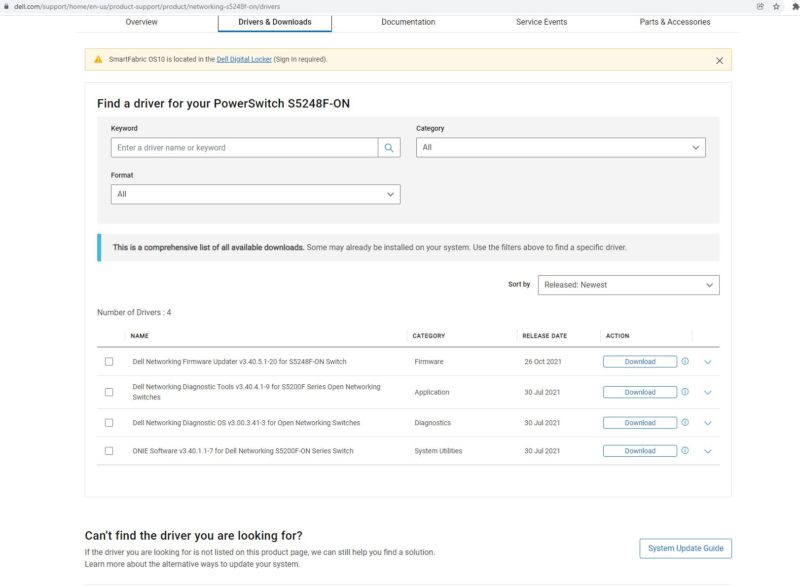

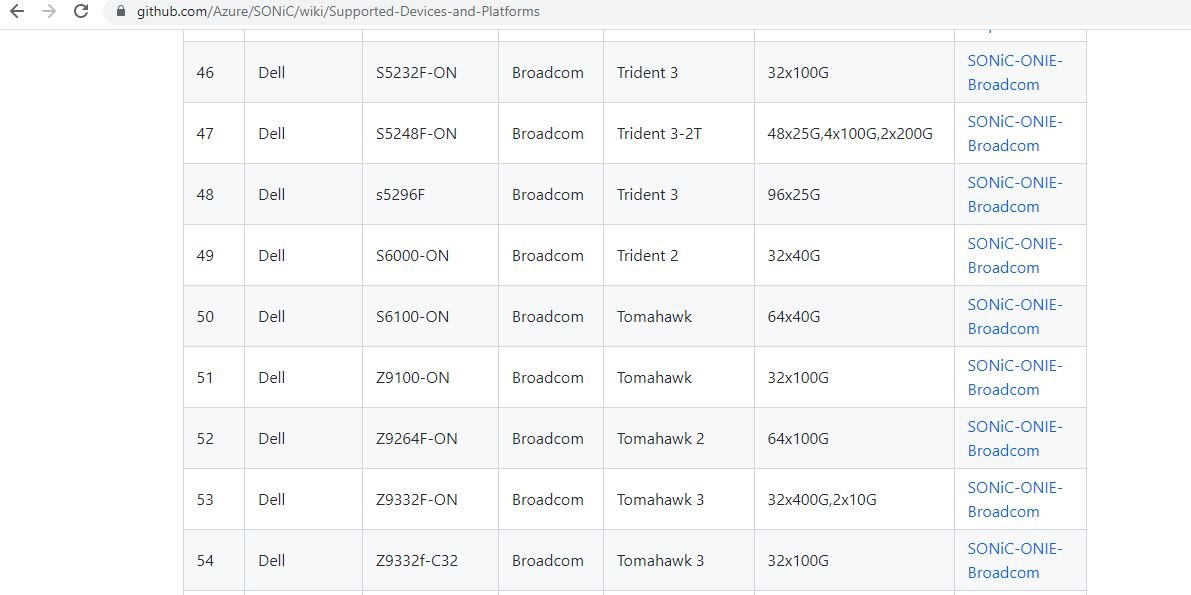

First, we need to start with SONiC, which is perhaps the biggest player in the space. If you want an open networking solution, this is probably what we would recommend. Indeed, we have had guides on SONiC since around the time this switch was released, such as “Get started with 40GbE SDN with Microsoft Azure SONiC.” When we look for Dell switches on the SONiC hardware support page, the S5248F-ON is presently supported by the SONiC-ONIE-Broadcom line.

We will quickly note here that the Dell options on this page are all based on Broadcom Trident or Tomahawk chips, not the XPliant chips the previous generation was based on. Marvell just announced that it is acquiring Innovium for its high-end switch ASICs. Innovium focused on selling to hyperscalers and, as a result, built a core competency around SONiC, including an offering catering specifically to it. You can see “Inside an Innovium Teralynx 7-based 32x 400GbE Switch” on STH for more.

Another big one is Cumulus. NVIDIA acquired Cumulus Networks, and looking for Dell switches on its hardware compatibility list gives very similar results: we again have Broadcom options, with the S5248F-ON listed.

Something we will note here is that NVIDIA Cumulus Linux does not support the QSFP28-DD ports at 200GbE; it only runs them as QSFP28 100GbE ports. NVIDIA is pushing Cumulus more for its Mellanox-derived line of switches these days, so we see SONiC as the main open networking OS for this switch.

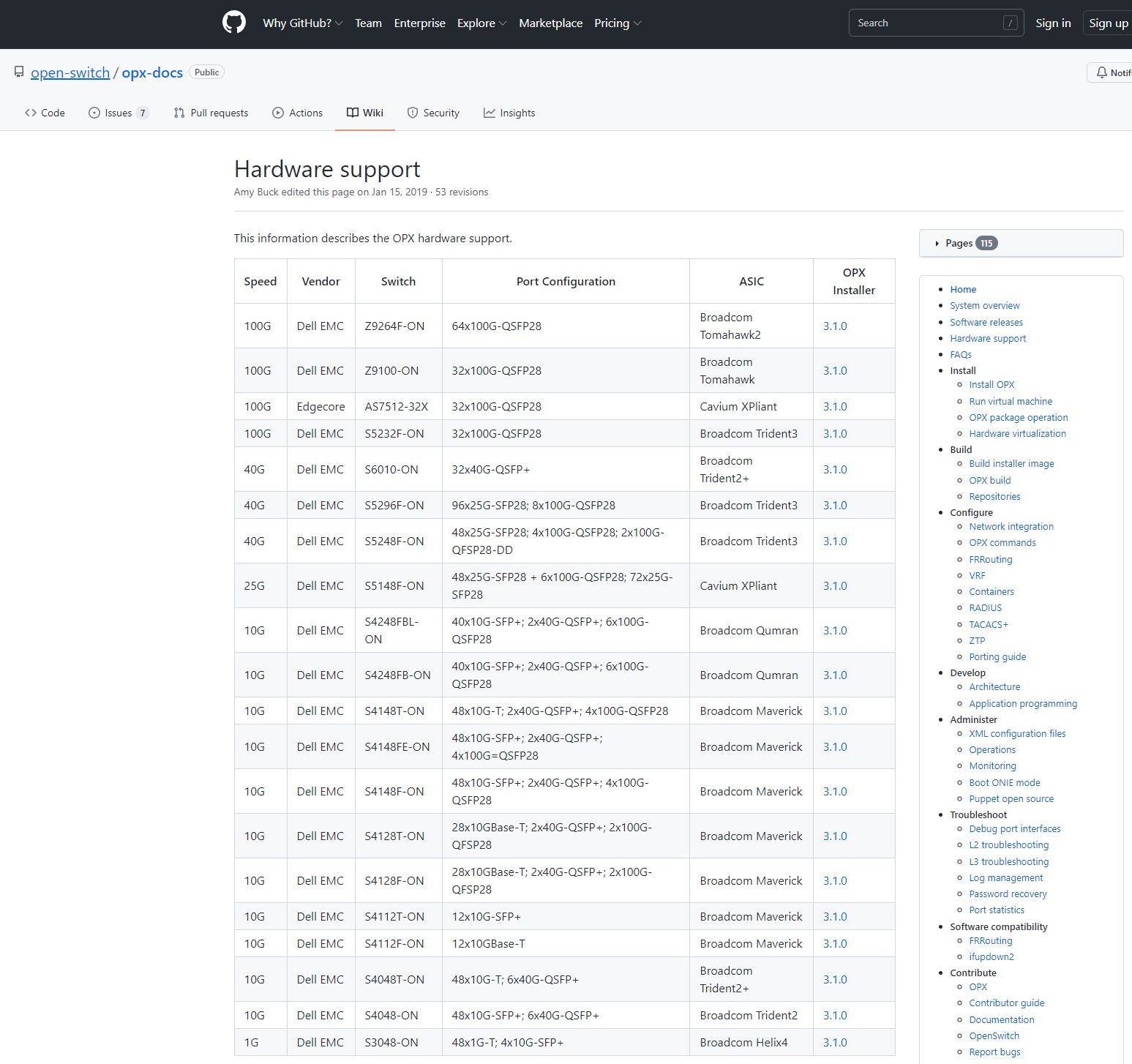

We tend not to use Open Switch, given the scale and momentum of SONiC. Still, we will quickly note that we saw the S5248F-ON on its HCL.

As with Cumulus, the QSFP28-DD ports become 100GbE ports, so with these solutions we lose 200Gbps, or 10% of the switch's front-panel bandwidth.
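To make the 10% figure concrete, here is a small Python sketch of the bandwidth arithmetic. It assumes the S5248F-ON's front-panel layout of 48x 25GbE SFP28, 4x 100GbE QSFP28, and 2x 200GbE QSFP28-DD ports, and that a NOS without 200GbE support runs each QSFP28-DD port as a single 100GbE port. The `total_gbps` helper is purely illustrative.

```python
# Assumed S5248F-ON front-panel layout: port type -> (count, native speed in Gbps).
PORTS = {
    "SFP28": (48, 25),       # server-facing 25GbE ports
    "QSFP28": (4, 100),      # 100GbE uplinks
    "QSFP28-DD": (2, 200),   # 200GbE uplinks when the NOS supports them
}


def total_gbps(ports, dd_speed_gbps=200):
    """Sum front-panel bandwidth in Gbps, overriding the QSFP28-DD port speed."""
    total = 0
    for name, (count, speed) in ports.items():
        if name == "QSFP28-DD":
            speed = dd_speed_gbps  # e.g. 100 when the NOS caps these ports
        total += count * speed
    return total


full = total_gbps(PORTS)                        # QSFP28-DD at 200GbE
capped = total_gbps(PORTS, dd_speed_gbps=100)   # QSFP28-DD run as 100GbE
lost = full - capped

print(f"full: {full} Gbps, capped: {capped} Gbps, lost: {lost} Gbps ({lost / full:.0%})")
```

With all ports at native speed the total is 2.0Tbps (1.2Tbps of 25GbE server ports plus 800Gbps of uplinks), so capping the two QSFP28-DD ports at 100GbE removes 200Gbps, which is 10% of the total.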

To us, this is a better-value switch than the S5100F-ON series because it is supported by more of the common third-party distributions. If one really wants open networking, then support for open networking distributions seems to be an important characteristic.

Next, we are going to look at power consumption and noise before getting to our final words.

A Small Typo:

Next to those we have four QSFP28 100GbE ports. That gives us 800Gbps of uplink bandwidth and 1.2Gbps of 25GbE server connectivity.

Should be more like 1.2Tbps.

I found an Easter Egg in this article! It says the power supplies are 750 kW, so I imagine with two of them it would power around 50,000 PoE devices at 30 W each :)

What is the purpose of switches like these? We use 32-port 100G Arista 7060s with 4x25G breakout cables and 4x 100G uplinks per ToR, giving 800G to the rack itself. We have additional ports for uplinks if necessary, although we haven't needed them. When I evaluated these switches, I wasn't sure what the use case was, because they don't provide the flexibility of straight 100G ports.

I would love to see what a DC designer would have to do to deal with a system that has dual 750,000 W PSUs. lol

I just have to say thank you for your review of this switch, especially your coverage of Azure SONiC. It is on my road map for a parallel test installation that we have set our hearts on rolling out when we move to our new software infrastructure. That infrastructure is the result of years of development aimed at breaking up our monoliths, seeding what we hope will become our next, only more populous, monolith installation of expanded service clusters that made more sense to partition into more clearly defined, homologated workloads. We're entirely vanilla in networking terms, having watched and waited for the maturity phase of adoption to begin, which I think is definitely under way now. Being able to deploy parallel vanilla and SDN (SONiC) switchgear on identical hardware is precisely the trigger and encouragement we've been looking for. Thanks to STH for bringing the news to us, yet again.