Power Consumption and Noise

The switch itself comes with redundant 750W PSUs that are high-efficiency 80Plus Platinum units.

Dell lists typical power consumption at 310W, which is a bit higher than what we normally see on ours. Our lab unit usually runs in the 200-250W range, but we are not saturating every port, and some ports are left unconnected as machines move in and out of the lab. Higher-power optics can also be installed, and Dell says the switch has a 647W maximum when loaded with them. Power draw varies widely with the pluggable components installed, so hopefully this range is helpful.
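To put those figures in context, here is a minimal sketch that expresses each quoted power number as a share of a single 750W PSU's rating. It assumes (our assumption, not something Dell states here) that after a PSU failure the remaining supply carries the whole load. The function name `psu_load_pct` and the labels are purely illustrative.

```python
# Power figures quoted in this review, in watts.
PSU_RATING_W = 750      # each of the two redundant PSUs
DELL_TYPICAL_W = 310    # Dell's listed typical consumption
DELL_MAX_W = 647        # Dell's listed maximum with higher-power optics
LAB_LOW_W, LAB_HIGH_W = 200, 250  # range observed on our lab unit


def psu_load_pct(draw_w, psu_w=PSU_RATING_W):
    """Percent of one PSU's rating used, assuming a single PSU
    carries the entire load (e.g. after its partner fails)."""
    return 100.0 * draw_w / psu_w


if __name__ == "__main__":
    figures = [
        ("Lab low", LAB_LOW_W),
        ("Lab high", LAB_HIGH_W),
        ("Dell typical", DELL_TYPICAL_W),
        ("Dell max", DELL_MAX_W),
    ]
    for label, watts in figures:
        print(f"{label}: {watts} W -> {psu_load_pct(watts):.1f}% of one PSU")
```

Under that single-PSU assumption, even Dell's 647W maximum stays below the 750W rating of one supply, which is what one would expect from a redundant pair.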

In terms of noise, this is certainly not a quiet switch. Without optics installed, it idles just above 39dBA. This was actually similar to the S5232F-ON and lower than the S5296F-ON.

Fans ramp up from there based on the traffic and optics connected. We would not recommend it even for a lightly sound-isolated cabinet in an otherwise quiet office. This is a switch designed for data center racks, not necessarily edge deployments where racks may be close to quiet spaces.

Final Words

Getting to see inside these switches is something that we wish more vendors assisted the market with. If, as an industry, we are going to call switches “open” then the hardware, usually made by an ODM, should not be some sort of closely guarded secret. We hope that Dell opens up more on its hardware in the future.

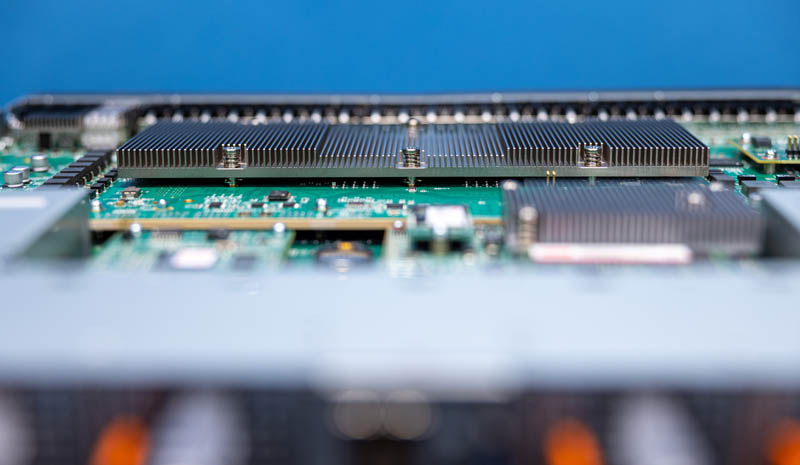

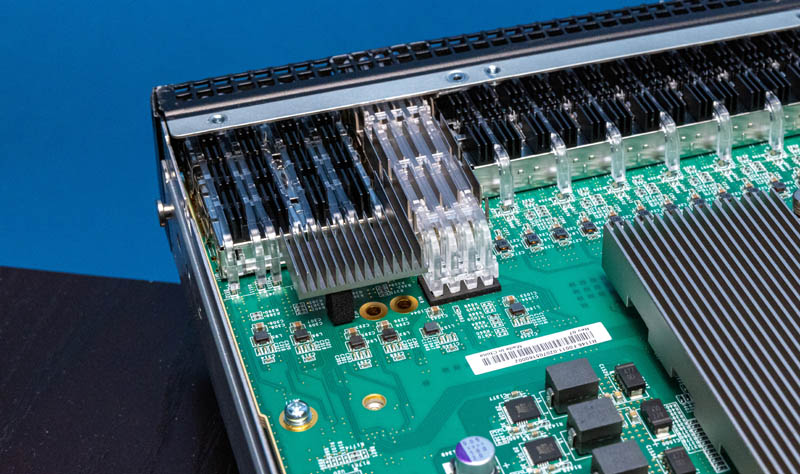

This switch is several generations into the 48-port 25GbE switch era, and as such, that is perhaps the least exciting feature. The QSFP28-DD port is interesting since that provides more performance per square millimeter of faceplate space. The port also offers something a little bit different in terms of cooling.

The common rear management, fans, and power supplies, laid out as we see on the S5232F-ON series, are a nice touch. That simplifies deployment since we would expect to see the 32-port 100GbE switch as more of an aggregation layer, although we are increasingly seeing 32-port 400GbE switches perform that function due to the associated radix impact.

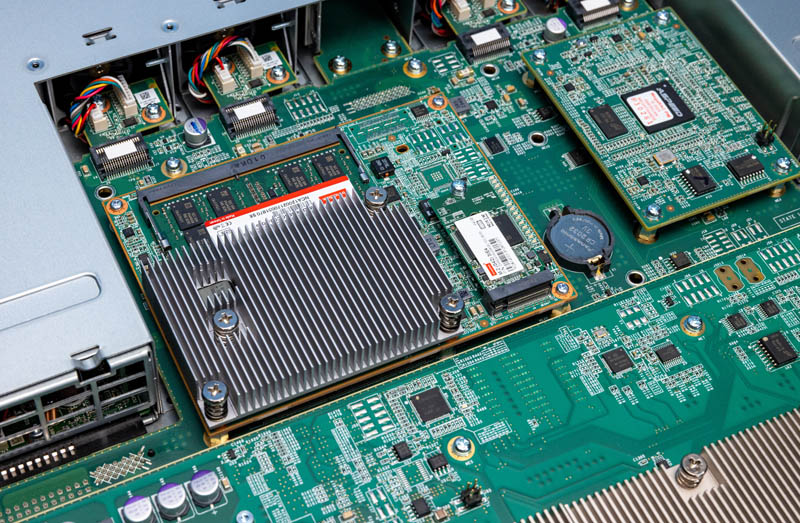

Upgrading to the Atom C3000 series from the C2000 series is a huge generational improvement, just like moving to Broadcom. This is clearly a switch made to be more aligned with open networking software such as SONiC. For some, the AMI label will be off-putting, but Dell and AMI have documentation saying these labels are OK.

At STH, we have been mostly transitioning to 32-port 100GbE switches in our racks, then using breakout DACs to reach the various nodes we have installed. That provides more switching throughput and also makes it easier to mix 25GbE, 50GbE, and 100GbE machines in racks.

Overall, this is a very nice generational upgrade over the S5148F-ON we reviewed previously, and that hardware upgrade means that we can use the S5248F-ON with more open networking solutions than its predecessor.

A Small Typo:

Next to those we have four QSFP28 100GbE ports. That gives us 800Gbps of uplink bandwidth and 1.2Gbps of 25GbE server connectivity.

Should be more like 1.2Tbps.

I found an Easter Egg in this article! It says the power supplies are 750 kW, so I imagine with two of them it would power around 50,000 PoE devices at 30 W each :)

What is the purpose of switches like these? We use 32-port 100G Arista 7060s with 4×25 breakout cables and 4x 100G uplinks per ToR, giving 800G to the rack itself. We have additional ports for uplinks if necessary, but so far it hasn’t been. When I evaluated these switches I wasn’t sure what the usage was, because it doesn’t provide the flexibility of straight 100G ports.

I would love to see what a DC designer would have to do to deal with a system that has dual 750,000w PSUs. lol

I just have to say thank you for your review of this switch – especially your coverage of Azure SONiC, which is on my road map for a parallel test installation we set our hearts on being able to roll out when we’re moving to the new software infrastructure that’s the result of years of development in order to reduce our monoliths to provide the seeds for what we hope to become our next only more populous monolith installation of expanded service clusters that made more sense to partition into more defined homologated workloads. We’re entirely vanilla in networking terms, having watched and waited for the beginning of the maturity phases of adoption, which I think is definitely under way now. To be able to deploy parallel vanilla and SDN (SONIC) switchgear on identical hardware, is precisely the trigger and encouragement that we’ve been looking out for – thanks to STH for bringing the news to us, yet again.