Dell EMC PowerEdge R7525 Test Configuration

In our PowerEdge R7525 test configuration, we had a very nice system setup:

- System: Dell EMC PowerEdge R7525 24x NVMe Backplane

- CPUs: 2x AMD EPYC 7H12

- Memory: 1TB (16x 64GB DDR4-3200 dual-rank ECC RDIMMs)

- Storage: 24x 1.6TB Intel DC P4610 NVMe SSDs

- Networking: Intel XL710 dual SFP+ 10GbE OCP NIC 3.0

While our unit has the highest-end CPUs and a high-end storage array, there are many additional configuration options. For example, one can get 32x 64GB DIMMs for 2TB of memory. Although it is not on the standard configurator, the CPUs themselves support up to 256GB LRDIMMs and 4TB of memory per socket (8TB in a dual-socket configuration like this one). Dell lists 128GB DDR4 LRDIMMs on its spec sheet, but not in its online configurator.
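The capacity figures above follow from simple DIMM-count arithmetic. Here is a minimal Python sketch, assuming 8 DDR4 channels per socket and 2 DIMMs per channel (32 slots in total); the constants and the `total_memory_gb` helper are illustrative, not taken from Dell's documentation.

```python
# DIMM-population arithmetic for a dual-socket AMD EPYC 7002 server.
# Assumptions for illustration: 8 DDR4 channels per socket, 2 DIMMs per channel.

SOCKETS = 2
CHANNELS_PER_SOCKET = 8  # assumption
DIMMS_PER_CHANNEL = 2    # assumption: 32 DIMM slots in total
MAX_SLOTS = SOCKETS * CHANNELS_PER_SOCKET * DIMMS_PER_CHANNEL

def total_memory_gb(dimm_count: int, dimm_size_gb: int) -> int:
    """Return installed memory in GB, rejecting populations that exceed the slots."""
    if not 0 < dimm_count <= MAX_SLOTS:
        raise ValueError(f"DIMM count must be between 1 and {MAX_SLOTS}")
    return dimm_count * dimm_size_gb

configs = {
    "as tested (16x 64GB)": total_memory_gb(16, 64),
    "all slots, 64GB RDIMMs": total_memory_gb(32, 64),
    "all slots, 128GB LRDIMMs": total_memory_gb(32, 128),
    "all slots, 256GB LRDIMMs": total_memory_gb(32, 256),
}
for name, gb in configs.items():
    # 1TB = 1024GB here, matching how memory capacity is usually quoted
    print(f"{name}: {gb} GB = {gb / 1024:g} TB ({gb / SOCKETS / 1024:g} TB per socket)")
```

Filling all 32 slots with 256GB LRDIMMs gives 8192GB, or 4TB per socket, which matches the CPU limit cited above.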

Dell also offers PCIe Gen4 NVMe SSDs with the system, while the Intel units here are PCIe Gen3 drives. We tested a Kioxia CM6 in the system, and it linked at PCIe Gen4 speeds. Dell also has a number of networking upgrades available for a system like this.

For those wondering, here is a quick view of the system’s topology.

We want to briefly note that Dell EMC vendor-locks AMD EPYC CPUs to its systems. We covered this extensively in our piece: AMD PSB Vendor Locks EPYC CPUs for Enhanced Security at a Cost.

One consequence is that CPUs used in Dell systems with this security feature enabled cannot be reused in servers from other vendors, which can contribute to e-waste after a server's initial deployment lifecycle. That is the trade-off Dell's security feature brings.

Dell EMC PowerEdge R7525 Management

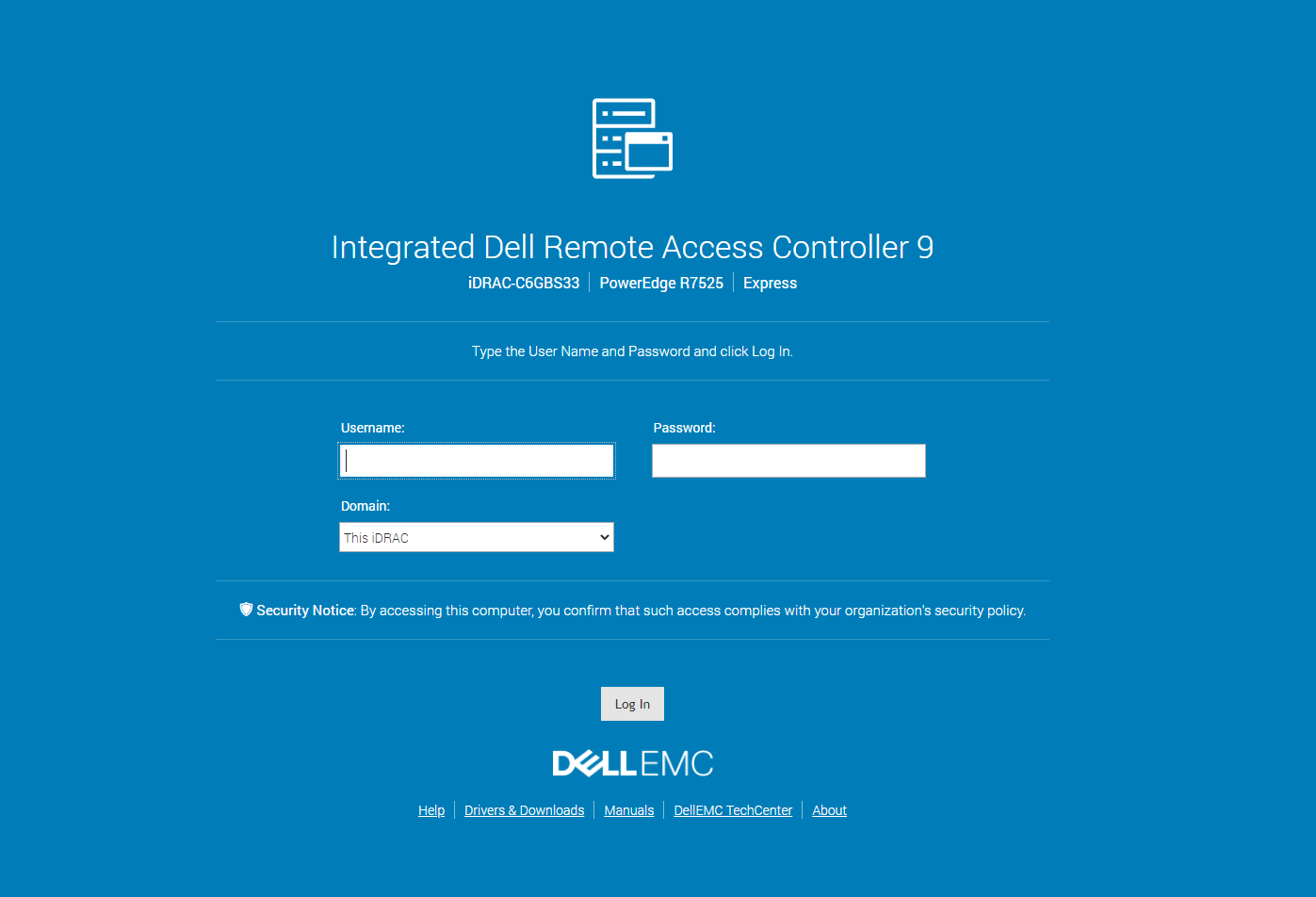

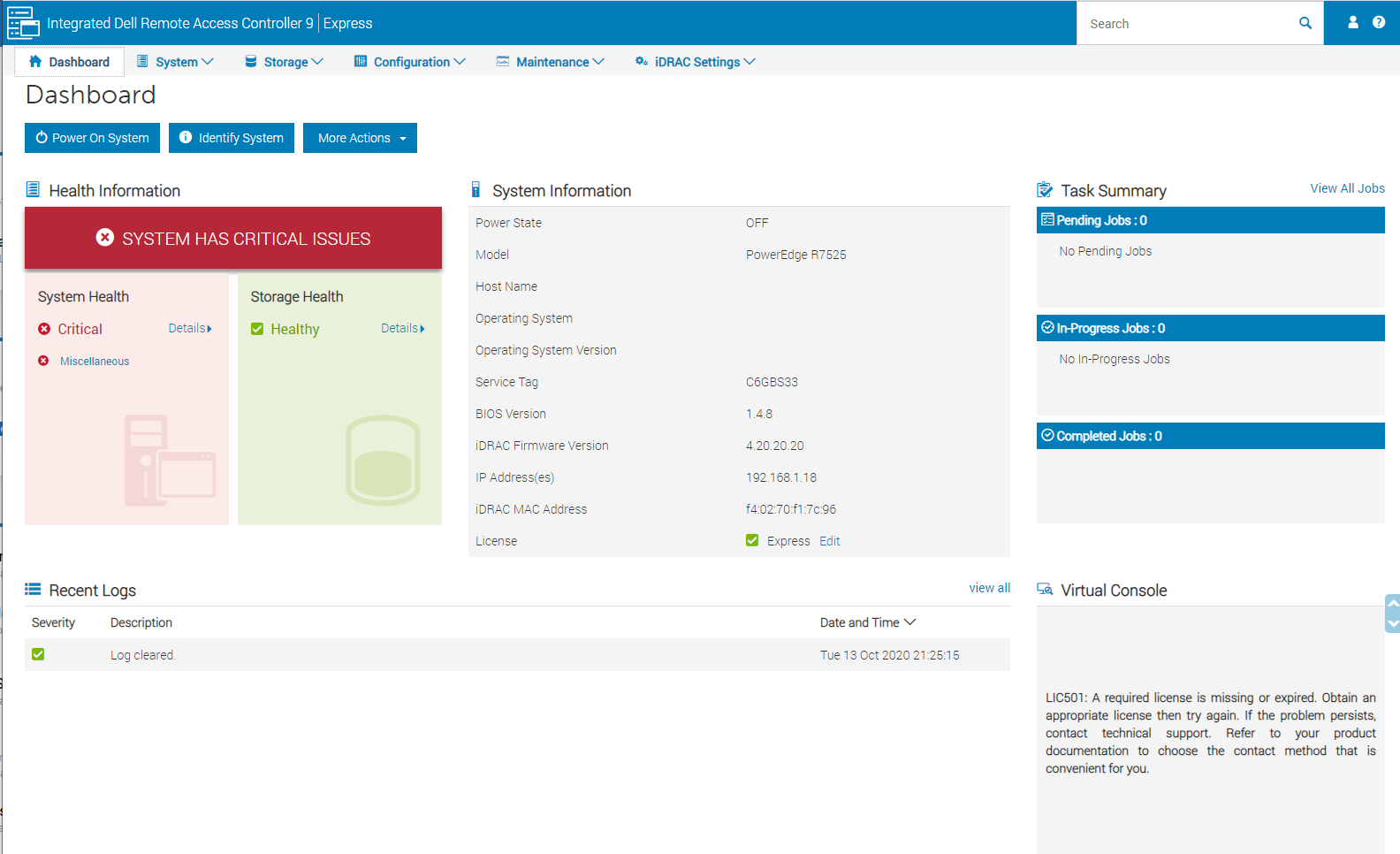

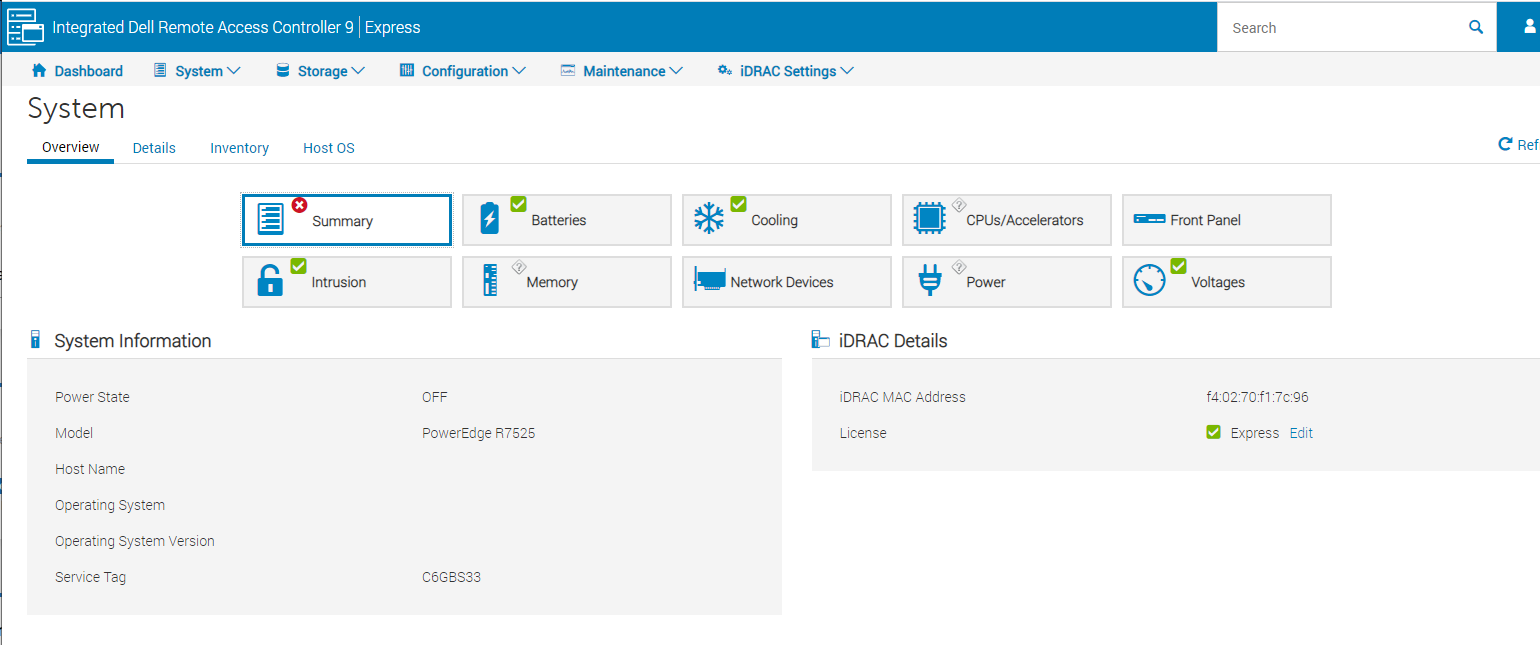

The Dell EMC PowerEdge R7525 offers Dell iDRAC 9. Our test server came with iDRAC 9 Express, which is the base option. Normally, our PowerEdge review units come with iDRAC 9 Enterprise, which enables features such as iKVM. As a result, we are going to show a few screenshots from our recent Dell EMC PowerEdge C6525 Review to demonstrate what the Enterprise level looks like. Logging into the web interface, one is greeted with a familiar login screen.

An important aspect of this system for many potential buyers is its integration with existing Dell management tools. Since this is iDRAC 9, which Dell has been refining for several years, including on AMD platforms, it works just as one would expect on an Intel Xeon server. If you have existing Xeon infrastructure, Dell makes adding AMD nodes transparent.

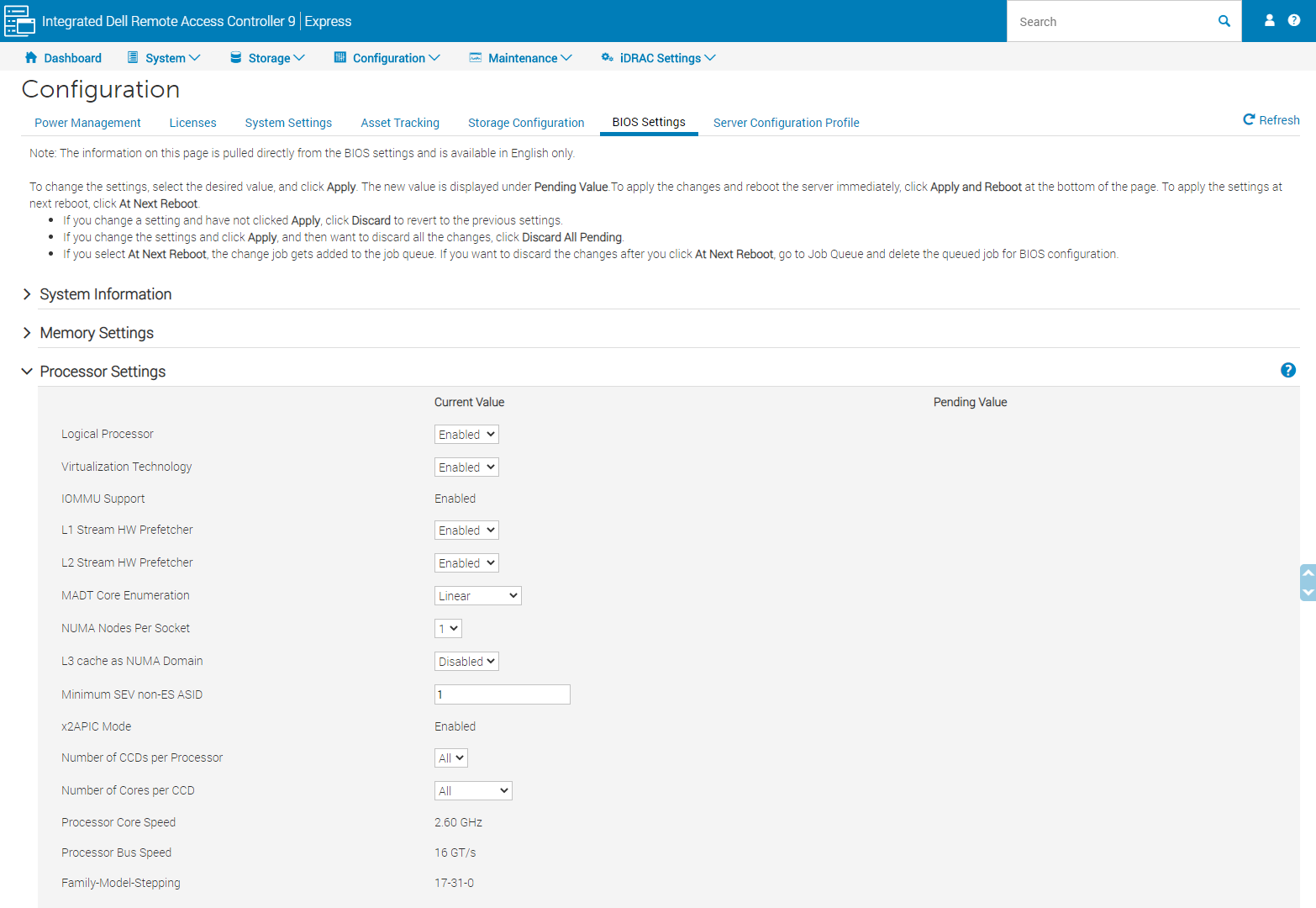

Another feature worth noting is the ability to change BIOS settings via the web interface. That is a feature we see in solutions from top-tier vendors like Dell EMC, HPE, and Lenovo, but one that many other vendors in the market lack.

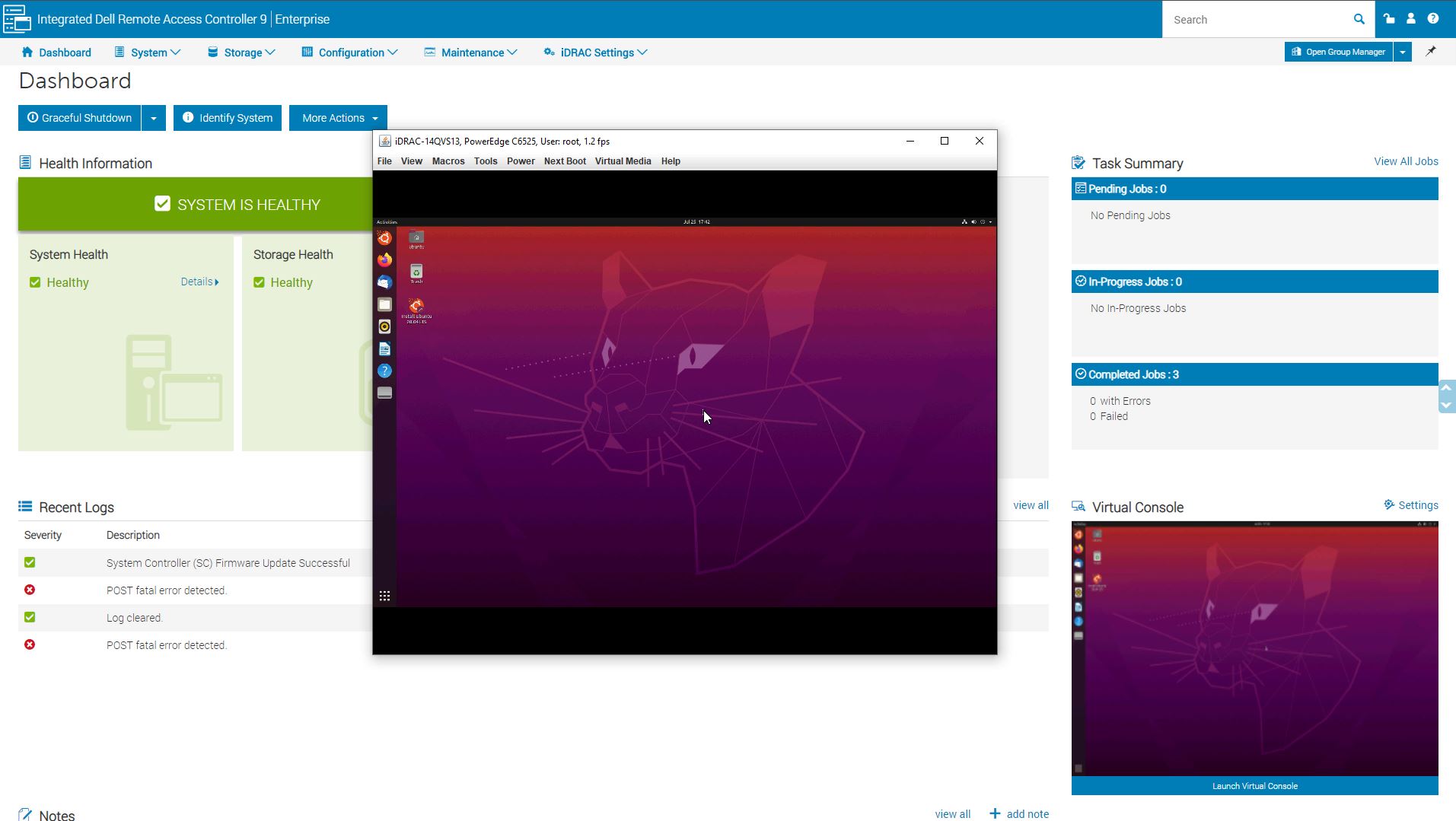

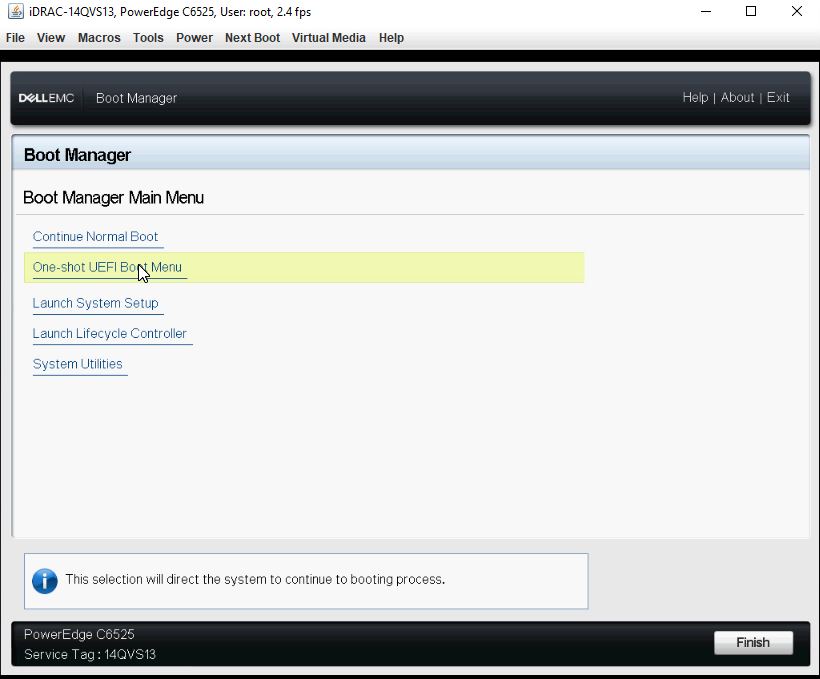

iKVM is a must-have feature for any server today, since it offers one of the best ROIs for troubleshooting. iDRAC 9 features several iKVM console modes, including Java, ActiveX, and HTML5. Here we are pulling a view of the legacy Java version from our C6525 review:

Modern server management solutions such as iDRAC are essentially embedded IoT systems dedicated to managing bigger systems. As such, iDRAC has a number of configuration settings for the service module, for example, to set up proper networking. This even includes features such as detailed asset tracking to monitor what hardware is in clusters. Over time, servicing clusters often causes configurations to drift, and Dell has tools such as asset tracking to help the business side of IT.

We probably do not cover this enough, but Dell has other small features as well. One example is selecting the boot device for the next boot, which is important if you want to boot from iKVM virtual media for servicing. Dell has an extremely well-developed solution that is a step above white box providers in breadth of features. Many providers offer the basic set, but Dell goes well beyond it.
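The same one-time boot selection can also be scripted. iDRAC 9 implements the DMTF Redfish API, where a one-time boot override is a `PATCH` to the system resource's `Boot` object. The Python sketch below only builds the request and does not send it. It assumes the iDRAC 9 system resource path `System.Embedded.1`, uses a hypothetical hostname, and leaves out the authentication and TLS handling a real call would need. Whether the `Cd` target picks up an attached iKVM virtual media image on a given firmware version is also an assumption worth checking against Dell's Redfish documentation.

```python
import json
import urllib.request

# A few standard Redfish BootSourceOverrideTarget values (not exhaustive).
ALLOWED_TARGETS = {"None", "Pxe", "Cd", "Usb", "Hdd", "BiosSetup", "UefiShell"}

def build_one_time_boot_request(host: str, target: str = "Cd") -> urllib.request.Request:
    """Build, but do not send, a Redfish PATCH that overrides the boot device once."""
    if target not in ALLOWED_TARGETS:
        raise ValueError(f"unsupported boot target: {target}")
    # Assumption: iDRAC 9 exposes the host system as System.Embedded.1.
    url = f"https://{host}/redfish/v1/Systems/System.Embedded.1"
    body = {
        "Boot": {
            "BootSourceOverrideEnabled": "Once",  # override applies to the next boot only
            "BootSourceOverrideTarget": target,
        }
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PATCH",
    )

# Hypothetical iDRAC hostname; sending would also need credentials and TLS setup.
req = build_one_time_boot_request("idrac-r7525.example.com")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Building the request separately from sending it keeps the payload easy to inspect before pointing it at a production BMC.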

Something we did not like is that iDRAC 9 Enterprise is an upgrade on our server. With a configuration that lists at well over $100,000, the extra $300 option to enable iKVM feels like a "nickel and diming" move. Even after an instant discount to $73,000, there is still an enormous amount of margin in this product. In 2020 and beyond, iKVM needs to be a standard feature on servers, especially with COVID restrictions limiting access to data centers. This has become a workplace safety feature.
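To put the $300 upgrade in perspective, here is the arithmetic as a short Python sketch. It treats $100,000 as a lower bound for the list price (the configuration lists at "well over" that), so the first percentage is an upper bound on the upgrade's share.

```python
LIST_PRICE = 100_000  # lower bound: the configuration lists at well over this
DISCOUNTED = 73_000   # price after the instant discount
IKVM_UPGRADE = 300    # iDRAC 9 Enterprise upgrade that enables iKVM

def share_pct(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole

print(f"{share_pct(IKVM_UPGRADE, LIST_PRICE):.2f}% of list price")
print(f"{share_pct(IKVM_UPGRADE, DISCOUNTED):.2f}% of discounted price")
```

Even against the discounted price, the upgrade is well under half a percent of the system cost.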

Next, we are going to take a look at performance, followed by power consumption and our closing thoughts.

Speaking, of course, as an analytic database nut, I wonder: has anyone done Iometer-style I/O saturation testing of AMD EPYC CPUs? I really wonder how many PCIe Gen4 NVMe drives a pair of EPYC CPUs can push to full read throughput.

I should have first said this: I want a few of these!!!

Patrick, when do you think we'll start seeing U.3 commonly available across systems and storage manufacturers? Are we wasting money buying NVMe backplanes if U.3 is just around the corner? Perhaps it's farther off than I think? Or will U.3 be more niche and geared toward tiered storage?

I see all these fantastic NVMe systems and wonder if two years from now I’ll wish I waited for U.3 backplanes.

The only thing I don't like about the R7525s (and we have about 20 in our lab) is the riser configuration with 24x NVMe drives. The only x16 slots are the two half-height slots on the bottom. I'd prefer two full-height x16 slots, especially now that we're seeing more full-height cards like the NVIDIA BlueField adapters.

We're looking at these for work. Thanks for the review. This is very helpful. I'll send it to our procurement team.

This is the prelude to next-generation ultra-high-density platforms with E1.S and E1.L and their PCIe Gen4 successors. AMD will really shine in this sphere as well.

We would set up two pNICs in this configuration:

- Mellanox/NVIDIA ConnectX-6 100GbE Dual-Port OCPv3

- Mellanox/NVIDIA ConnectX-6 100GbE Dual-Port PCIe Gen4 x16

These would connect to dual Mellanox/NVIDIA 100GbE switches (two data planes) configured for RoCEv2.

With 400GbE of aggregate bandwidth in an HCI platform, we'd see huge IOPS and throughput with ultra-low latency across the board.

The 160 peripheral-facing PCIe Gen4 lanes are one of the smartest and most innovative moves AMD made. Having 24 switchless NVMe drives with room for 400GbE of redundant, ultra-low-latency network bandwidth is nothing short of awesome.
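The lane math behind that comment can be checked in a few lines. This Python sketch is an idealized budget, not Dell's actual lane routing: it assumes each NVMe drive attaches at x4, that both NICs from the configuration above run at PCIe Gen4 x16, and it uses the PCIe Gen4 rate of 16 GT/s per lane with 128b/130b encoding. The real R7525 allocation depends on its backplane and riser configuration.

```python
# Idealized PCIe Gen4 lane budget for a 24x NVMe, dual 100GbE NIC build.
# Assumptions (not Dell's published routing): x4 per NVMe drive, x16 per NIC.

TOTAL_LANES = 160           # peripheral-facing PCIe Gen4 lanes cited above
NVME_DRIVES = 24
LANES_PER_NVME = 4          # assumption: each NVMe drive linked at x4
NIC_LINK_WIDTHS = [16, 16]  # assumption: OCPv3 NIC and PCIe NIC each at x16
PORTS_PER_NIC = 2
GBPS_PER_PORT = 100

def pcie_gen4_gbps(lanes: int) -> float:
    """One-direction link rate in Gb/s: 16 GT/s per lane, 128b/130b encoding."""
    return 16 * 128 / 130 * lanes

nvme_lanes = NVME_DRIVES * LANES_PER_NVME           # 96 lanes
nic_lanes = sum(NIC_LINK_WIDTHS)                    # 32 lanes
spare_lanes = TOTAL_LANES - nvme_lanes - nic_lanes  # 32 lanes left over
aggregate_gbps = len(NIC_LINK_WIDTHS) * PORTS_PER_NIC * GBPS_PER_PORT  # 400 Gb/s

# Each dual-port 100GbE NIC needs 200 Gb/s; check that it fits in its link.
per_nic_demand = PORTS_PER_NIC * GBPS_PER_PORT
for width in NIC_LINK_WIDTHS:
    assert per_nic_demand <= pcie_gen4_gbps(width), "NIC link would be a bottleneck"

print(f"NVMe: {nvme_lanes} lanes, NICs: {nic_lanes} lanes, spare: {spare_lanes}")
print(f"Aggregate network: {aggregate_gbps} Gb/s, x16 Gen4 link: {pcie_gen4_gbps(16):.1f} Gb/s")
```

Under these assumptions, the drives and both NICs fit within the 160 lanes without a PCIe switch, and a Gen4 x16 link has headroom over a dual-port 100GbE NIC.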

Excellent article Patrick!

Happy New Year to everyone at Serve The Home! 🙂

> This is a forward-looking feature since we are planning for higher TDP processors in the near future.

*cough* Them thar’s a hint. *cough*

Dear Wes,

This system is focused on data I/O for NAS, databases, and websites. If you want full-height x16 slots for AI, GPGPU, or VMs with vGPU, then you'll have to look elsewhere for larger chassis. You could still use the R7525 to host the storage and non-GPU apps.

@tygrus

There are full-height storage cards (think computational storage devices) and full-height SmartNICs (like the NVIDIA BlueField-2 dual-port 100Gb NIC) that are extremely useful in systems with 24x NVMe devices. These are still single-slot cards, not dual-slot like GPUs and accelerators. I'm also only discussing half-length cards, not full-length ones like GPUs and some other accelerators.

The 7525 today supports 2x HHHL x16 slots and 4x FHHL x8 slots. I think the lanes should have been shuffled on the risers a bit so that the x16 slots are FHHL and the HHHL slots are x8.

@Wes

Actually, the R7525 can also be configured with a riser configuration that allows full-length cards (in my configurator, Riser Config 3 allows that). I see this both with SAS/SATA/NVMe and with NVMe-only configurations. However, on the Dell US configurator, the NVMe-only configuration does not allow Riser Config 3. The Technical manual says such a configuration is not allowed, while the Installation and Service Manual lists it in several places. Take a look.

I configured mine with the 16x NVMe backplane for my lab: 3x Optane and 12x NVMe drives, with 1 cache + 4 capacity drives per disk group and 3 disk groups per server. That leaves me 5 x16 full-length slots. I use 2x Mellanox 100GbE adapters plus the OCP NIC, and 2x Quadro RTX 8000 across 4 servers. Can't wait to put them into service.

Hey there, what brand is the chipset?

Hi, may I ask what the operational wattage of the Dell R7525 is?

I can't seem to find any way to make the Dell configurator let me build an R7525 with 24x NVMe on their website. Does anyone happen to know how or where I could build a machine with the configurations mentioned in this article?