Dell EMC PowerEdge C6525 Node Overview

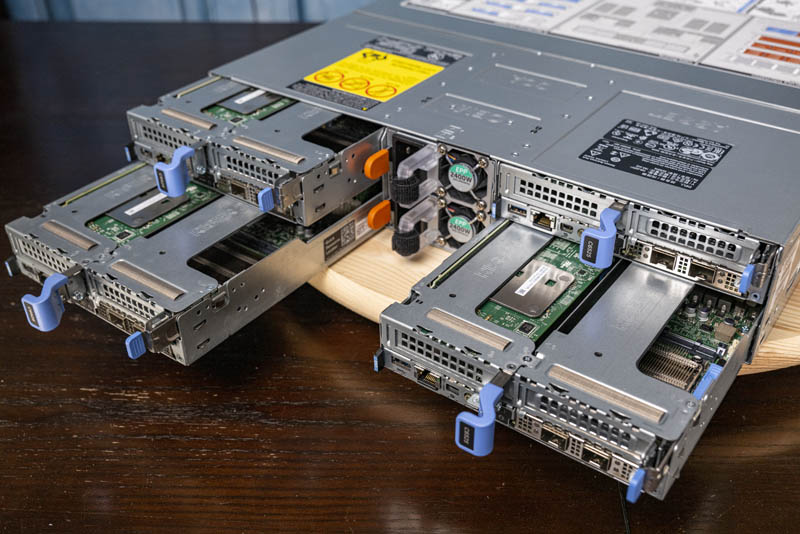

The PowerEdge C6525 is aimed at higher-density applications such as high-performance computing (HPC) and hyper-converged deployments. The main value proposition of a 2U4N solution is the ability to house four dual-socket nodes in 2U.

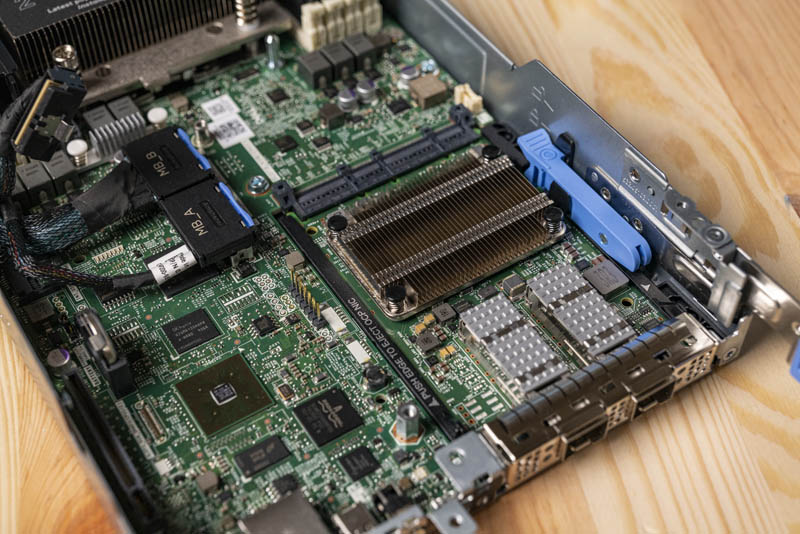

There are two sockets. Each can handle an AMD EPYC 7002 series CPU. The nodes themselves follow a standard layout with the chassis connectors at the right side, the CPUs and memory next, and the rear dedicated to PCIe cards and I/O.

The connectors Dell is using are high-density connectors that make servicing easier than with older generations of large gold finger PCBs. They also are being used here to direct airflow towards the CPUs.

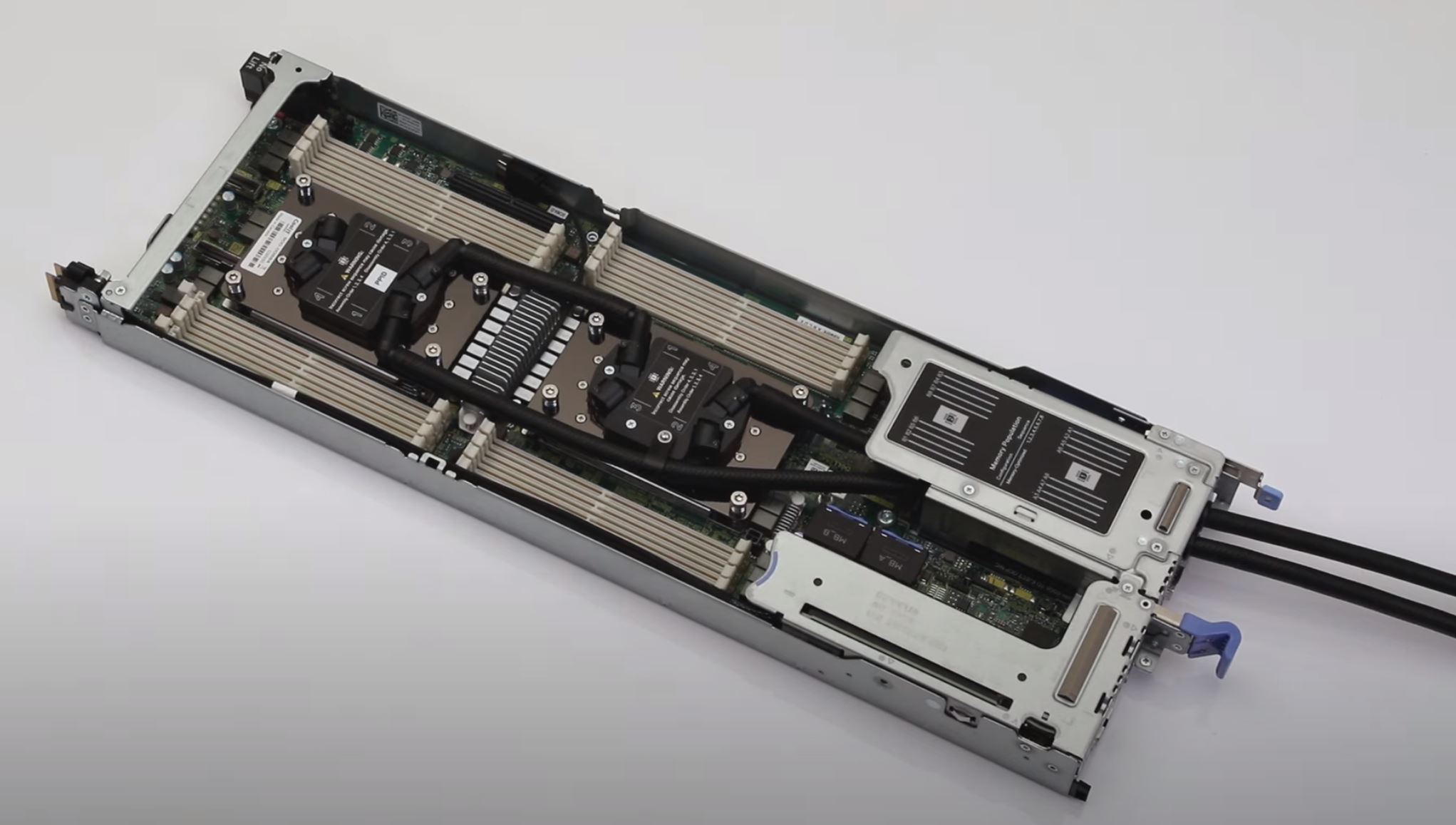

The CPUs are dual AMD EPYC 7002 series “Rome” processors. They can offer up to 64 cores and 128 threads per socket. That means each system can hit 128 cores and 256 threads. With four systems, that is how we get to a kilo-thread or 1024 threads.
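As a quick sanity check on that kilo-thread figure, the arithmetic can be sketched in a few lines of Python. The core, socket, and node counts come from the text above; the function name `chassis_totals` is our own and purely illustrative.

```python
# Figures from the text: up to 64 cores per socket, 2 threads per core (SMT),
# two sockets per node, and four nodes in one 2U chassis.
def chassis_totals(cores_per_socket=64, threads_per_core=2,
                   sockets_per_node=2, nodes_per_chassis=4):
    """Return (total_cores, total_threads) for one 2U4N chassis."""
    cores = cores_per_socket * sockets_per_node * nodes_per_chassis
    threads = cores * threads_per_core
    return cores, threads


cores, threads = chassis_totals()
print(f"{cores} cores, {threads} threads")  # prints "512 cores, 1024 threads"
```

Passing `cores_per_socket=32`, which matches the 32-core EPYC 7452 parts in our test system, gives 256 cores and 512 threads per chassis.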

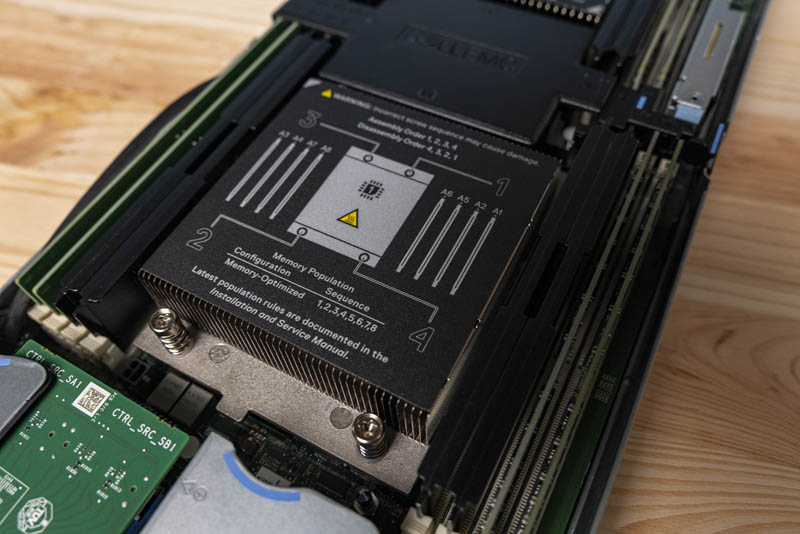

These CPUs have 8-channel memory controllers so Dell has 8x DDR4 DIMM slots onboard the C6525. The EPYC 7002 series supports two DIMMs per channel (2DPC) configurations on some of Dell’s larger servers (e.g. the PowerEdge R7415), which means one gets 16 DIMMs per socket. There is simply no space for that in the C6525 due to width constraints. Instead, Dell gives one access to the full memory bandwidth of the platform with eight DIMM slots, albeit at half of the maximum capacity for the processors. Eight DIMMs are still a lot. For the HPC market, the 8-channel memory is the big feature since current Cascade Lake Intel Xeon platforms only use 6-channel memory. The C6420 has eight DIMMs but only 6-channel memory and lower clock speed memory.
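To put the 8-channel versus 6-channel difference into rough numbers, here is a minimal sketch of theoretical peak memory bandwidth per socket. It assumes a 64-bit (8-byte) data path per DDR4 channel and the top supported speeds of DDR4-3200 for EPYC 7002 and DDR4-2933 for Cascade Lake. Those speeds are our assumptions rather than figures from this review, and measured bandwidth will be lower than these peaks.

```python
def peak_bandwidth_gb_s(channels, mega_transfers_per_s, bytes_per_transfer=8):
    """Theoretical peak bandwidth in decimal GB/s for one socket.

    channels * MT/s * bytes per transfer gives MB/s; divide by 1000 for GB/s.
    """
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


# Assumed memory speeds (not stated in the review): DDR4-3200 and DDR4-2933.
epyc_7002 = peak_bandwidth_gb_s(channels=8, mega_transfers_per_s=3200)
cascade_lake = peak_bandwidth_gb_s(channels=6, mega_transfers_per_s=2933)

print(f"EPYC 7002:    {epyc_7002:.1f} GB/s per socket")
print(f"Cascade Lake: {cascade_lake:.1f} GB/s per socket")
print(f"Ratio:        {epyc_7002 / cascade_lake:.2f}x")
```

Under these assumptions the gap is roughly 204.8 GB/s against 140.8 GB/s per socket, or about 1.45x, which is why the extra two channels matter so much for bandwidth-bound HPC codes.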

Our system is air-cooled with AMD EPYC 7452 processors. Dell has two large heatsinks and there is a small airflow guide between them. A small detail, but one that will resonate with many who have used older and competitive systems, is that this airflow guide is tool-less and is slightly recessed. It is lower than the heatsinks. A common area of frustration in 2U4N deployments comes from airflow guides that get snagged and potentially damaged when inserting nodes. This is a very small design detail, but if you have ever had that happen, you will understand its importance.

The PowerEdge C6525 can handle lower-TDP CPUs as we have, but there are other options as well. Dell partners with CoolIT Systems to provide liquid cooling to the CPU sockets. As TDPs rise across CPUs, GPUs, and other accelerators, liquid cooling will become more common. Dell already has that option. In this generation, it allows the AMD EPYC 7H12 to be used in the system. This is a 280W TDP 64-core CPU designed for the HPC market. With this method, the kilo-thread or half kilo-core 2U solution can be deployed with high clock speeds for HPC.

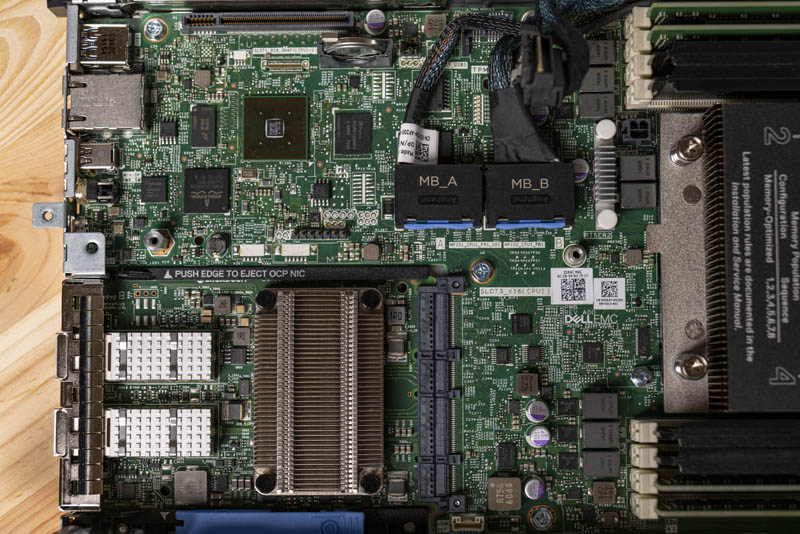

Moving to the rear of the system where the I/O is located, we can see a fairly strong layout. There is the iDRAC 9 BMC along with associated components. One can also see the OCP NIC 3.0 slot that we will discuss shortly. AMD EPYC CPUs do not have platform controller hubs. As a result, this motherboard PCB does not have a big chip with a large heatsink on it.

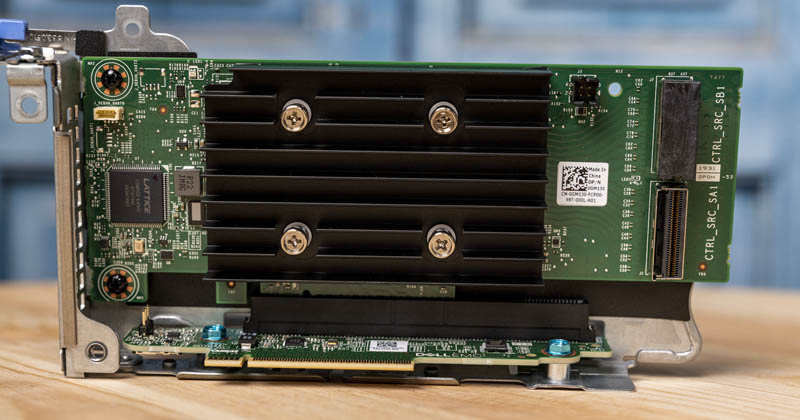

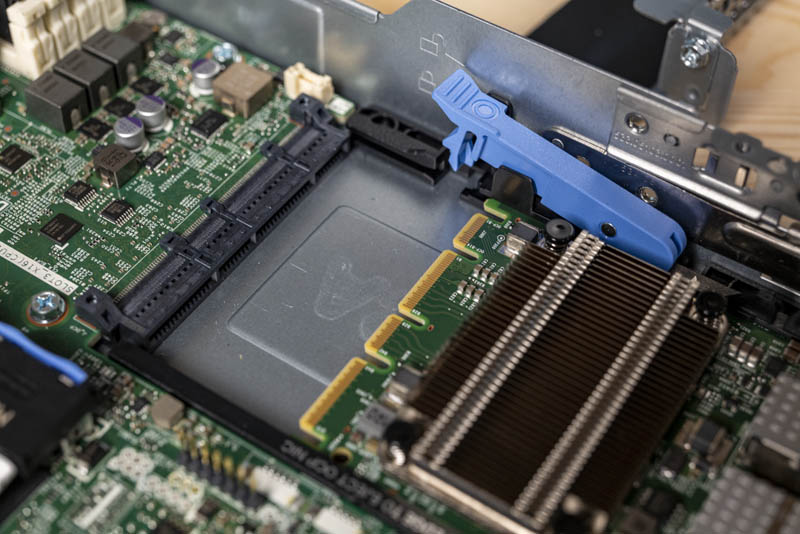

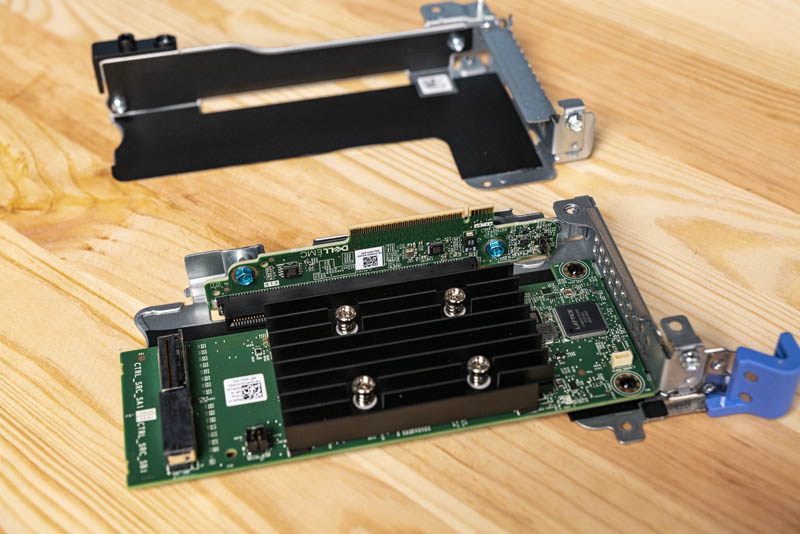

Our system had two PCIe risers. Only one had the riser PCB included but the second one can support a riser. The system supports PCIe Gen4 x16 which is a big differentiator. Many of the competitive 2U4N solutions are PCIe Gen3 only. We are still seeing PCIe Gen4 capable devices trickle into the market, but they will be more common a few months after publishing this review. Adding PCIe Gen4 support means Dell needs to use higher-quality PCB which adds costs. The benefit is that the system gets twice the PCIe bandwidth. In a size-constrained 2U4N platform, that effectively doubles the PCIe bandwidth that Dell is making available to I/O for the C6525 over the C6420.
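For a sense of scale on that doubling, here is a rough sketch of per-direction link bandwidth for a x16 slot. It uses the per-lane signaling rates of 8 GT/s for Gen3 and 16 GT/s for Gen4 with 128b/130b encoding; packet and protocol overhead are ignored, so treat the results as upper bounds rather than measured throughput. The function name is ours.

```python
# Per-lane raw signaling rate in GT/s for each PCIe generation.
GT_PER_S = {"gen3": 8, "gen4": 16}

# Both generations use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(generation, lanes=16):
    """Approximate one-direction bandwidth in GB/s, before protocol overhead."""
    gigabits_per_s = GT_PER_S[generation] * lanes * ENCODING_EFFICIENCY
    return gigabits_per_s / 8  # bits to bytes


gen3 = link_bandwidth_gb_s("gen3")
gen4 = link_bandwidth_gb_s("gen4")
print(f"Gen3 x16: {gen3:.2f} GB/s per direction")
print(f"Gen4 x16: {gen4:.2f} GB/s per direction")
```

That works out to roughly 15.75 GB/s for a Gen3 x16 slot and 31.5 GB/s for a Gen4 x16 slot in each direction.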

As with many PowerEdge systems these days, Dell has a BOSS solution. There is a small dual M.2 riser nestled between DIMM slots and the edge of the chassis. If you want to add boot devices here, you can do that while saving front-panel 2.5″ drive bays for higher-value application and data storage.

The rear I/O of the node is focused on the left side. Here we can find a USB 3.0 Type-A port, a 1GbE NIC for management, a mini DisplayPort, and a USB port for iDRAC service. Typically one would expect to find either a VGA port or a high-density connector here to connect to legacy KVMs that are usually VGA based. Here, we do not get a standard VGA port due to space constraints.

Above this I/O block, we find the first PCIe x16 riser slot. Our system was using the slot for front-panel storage, but Dell has a lot of configuration options here.

On the other side of the node, we have the second PCIe Gen4 x16 HHHL riser slot. Ours was just a blank but it is the spot where the second slot would go. It is also the riser slot that is occupied by the liquid cooling tubes when used in conjunction with the CoolIT liquid cooling solution.

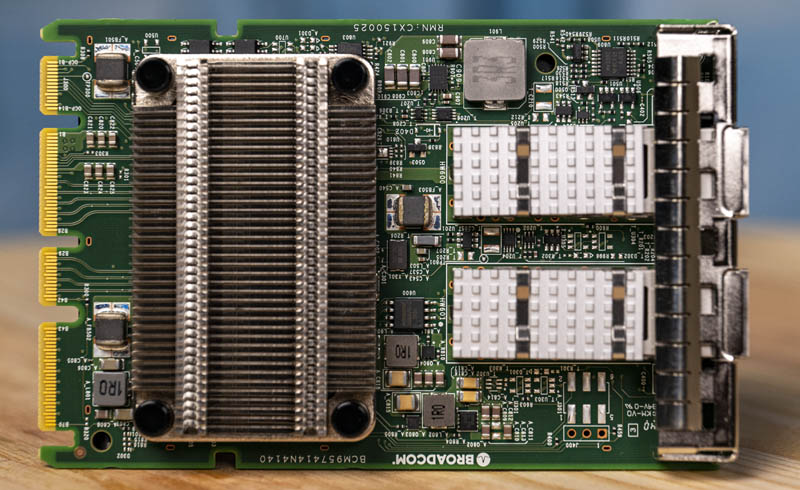

Below this riser slot, we get one of the most interesting slots on the entire server. This is the OCP NIC 3.0 slot. When we first reviewed the Dell C6100, the system was designed for, and used by, companies such as Facebook and Twitter, and it was consumed on a massive scale. In the intervening decade, the hyper-scalers decided to focus on making their own systems with lower-margin white box providers, with Facebook, and soon after Microsoft, joining the Open Compute Project.

While it would be hard to use a modern Facebook OCP server in a standard 19″ enterprise rack today, the impact of OCP goes well beyond servers. Perhaps the most influential impact on the market will be the OCP NIC 3.0 form factor. The industry is effectively abandoning proprietary form factors and is standardizing on this NIC, especially as we move to the PCIe Gen4 era. Coming full circle, the PowerEdge C6525 incorporates the OCP NIC 3.0 that was designed by a former Dell C6xxx series customer.

There is one area that was amazingly frustrating. To replace the NIC, one needs to open the chassis and pull a latch that acts as a lock. This appeared to be a very simple solution. The OCP NIC 3.0 is designed for this type of serviceability as a big upgrade over the OCP NIC 2.0 designs.

Our test system required us to actually move the riser above the NIC as it included sheet metal to secure the OCP NIC. Removing that “dummy” riser in our system meant that we also needed to remove screws that held the first riser down. As a result, we ended up having to remove risers and several screws which made that nice blue latch almost redundant.

We are going to just note here that the PCIe riser design on the PowerEdge C6525 feels like a design that is several years old. There are many screws that one has to access from different directions just to remove the risers, and the risers are not completely independent of one another. Companies like Cisco with the competitive Cisco UCS C4200 and Inspur with the i24 have riser designs with much better serviceability. Usually, Dell EMC is at the forefront of mechanical design, which we saw with small features such as the recessed airflow guide. This is an area where the Dell solution is behind even some white box competition.

Next, we are going to take a look at the management aspects of this solution. We will then test these servers to see how well they perform.

“We did not get to test higher-end CPUs since Dell’s iDRAC can pop field-programmable fuses in AMD EPYC CPUs that locks them to Dell systems. Normally we would test with different CPUs, but we cannot do that with our lab CPUs given Dell’s firmware behavior.”

I am astonished by just how much of a gargantuan dick move this is from Dell.

Could you elaborate here or in a future article how blowing some OTP fuses in the EPYC CPU so it will only work on Dell motherboards improves security? As far as I can tell, anyone stealing the CPUs out of a server simply has to steal some Dell motherboards to plug them into as well. Maybe there will also be third party motherboards not for sale in the US that take these CPUs.

I’m just curious to know how this improves security.

This is an UNREAL review. Compared to the principle tech junk Dell pushes all over. I’m loving the amount of depth on competitive and even just the use. That’s insane.

Cool system too!

“Dell’s iDRAC can pop field-programmable fuses in AMD EPYC CPUs that locks them to Dell systems”?? i’m not quickly finding any information on that? please do point to that or even better do an article on that, sounds horrible.

Ya’ll are crazy on the depth of these reviews.

Patrick,

It won’t be long before the lab could be augmented with those Dell bound AMD EPYC Rome CPUs that come at a bargain eh? 😉

I’m digging this system. I’ll also agree with the earlier commenters that STH is on another level of depth and insights. Praise Jesus that Dell still does this kind of marketing. Every time my Dell rep sends me a principled tech paper I delete and look if STH has done a system yet. It’s good you guys are great at this because you’re the only ones doing this.

You’ve got great insights in this review.

To whom it may concern, Dell’s explanation:

Layer 1: AMD EPYC-based System Security for Processor, Memory and VMs on PowerEdge

The first generation of the AMD EPYC processors have the AMD Secure Processor – an independent processor core integrated in the CPU package alongside the main CPU cores. On system power-on or reset, the AMD Secure Processor executes its firmware while the main CPU cores are held in reset. One of the AMD Secure Processor’s tasks is to provide a secure hardware root-of-trust by authenticating the initial PowerEdge BIOS firmware. If the initial PowerEdge BIOS is corrupted or compromised, the AMD Secure Processor will halt the system and prevent OS boot. If no corruption, the AMD Secure Processor starts the main CPU cores, and initial BIOS execution begins.

The very first time a CPU is powered on (typically in the Dell EMC factory) the AMD Secure Processor permanently stores a unique Dell EMC ID inside the CPU. This is also the case when a new off-the-shelf CPU is installed in a Dell EMC server. The unique Dell EMC ID inside the CPU binds the CPU to the Dell EMC server. Consequently, the AMD Secure Processor may not allow a PowerEdge server to boot if a CPU is transferred from a non-Dell EMC server (and a CPU transferred from a Dell EMC server to a non-Dell EMC server may not boot).

Source: “Defense in-depth: Comprehensive Security on PowerEdge AMD EPYC Generation 2 (Rome) Servers” – security_poweredge_amd_epyc_gen2.pdf

PS: I don’t work for Dell, and also don’t purchase their hardware – they have some great features, and some unwanted gotchas from time to time.

What would be nice is some pricing on this

Wish the 1Gb management NIC would just go away. There is no need to have this per blade. It would be simple for Dell to route the connections to a dumb unmanaged switch chip in that center compartment and then run a single port for the chassis. Wiring up lots of cables to each blade is messy. Better yet, place 2 ports allowing daisy chaining of every chassis in a rack and eliminate the management switch entirely.

Holy mother of deer… Dell has once again pushed vendor lock and DRM way too far! Unbelievable!

It’s part of AMD’s Secure Processor, and it allows the CPU to verify that the BIOS isn’t modified or corrupted, and if it is, it refuses to POST. It’s not exactly an efuse and more of a cryptographic signing thing going on where the Secure Processor validates that the computer is running a trusted BIOS image. The iDRAC 9 can even validate the BIOS image while the system is running. The iDRAC can also reflash the BIOS back to a non-corrupt, trusted image. On the first boot of an EPYC processor in a Dell EMC system, it gets a key to verify with; this is what can stop the processor from working in other systems as well.

Honestly, there is no reason Dell can’t have it both ways with iDRAC. iDRAC is mostly software, and could be virtualized with each VM having access to one set of the hardware interfaces. This would cut their costs by three, roughly, while giving them their current management solution. After all, how often do you access all four at once?

What is the price for this PowerEdge C6525 server?