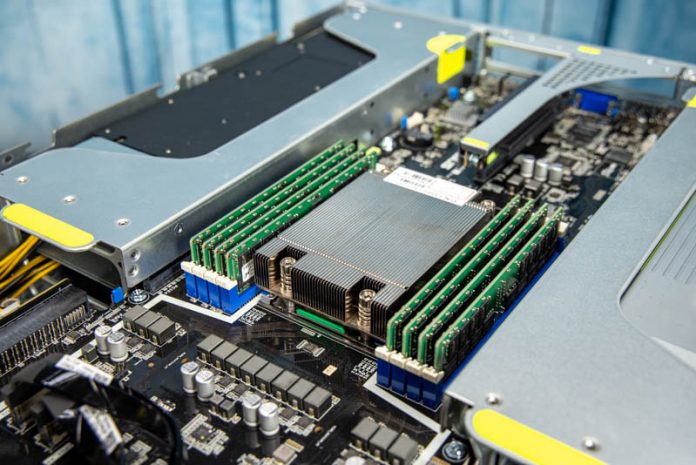

Something that AMD has been pushing lately is the ratio of four GPUs to a single CPU. Many of the next-generation Exascale supercomputers are built on that premise. For those who are looking to deploy that ratio in a single system, the ASUS ESC4000A-E10 offers a single socket AMD EPYC GPU server solution. One can fit four double-width training GPUs or eight single-width inferencing GPUs. In our review, we are going to see how ASUS designed this 2U package.

ASUS ESC4000A-E10 Hardware Overview

Something that we have been doing lately is splitting our hardware overview section into two distinct parts. We are first going to cover the external portion of the chassis. We are then going to cover the internal portion.

ASUS ESC4000A-E10 External Overview

We are going to start our hardware overview with the front of the system. One can see that the ESC4000A-E10 is a 2U system that looks a bit different than a standard server. Instead of using all 2U of the front panel’s space for drives, there is more going on here.

First, there is a bit of ASUS’s brand identity making its way into the front panel. Here we can see four USB 3 Type-A front panel headers along with LED indicator lights and chassis identification and power buttons. Then there is a feature that is less common on servers: an LCD display that shows the POST status. This is a hallmark of ASUS servers and we have seen it in the past. For example, we saw it on the ASUS RS500A-E9-RS4-U, and it even formed the cover image for our ASUS RS720Q-E8-RS8-P 2U 4-Node Xeon E5-2600 V3/ V4 Server Review years ago.

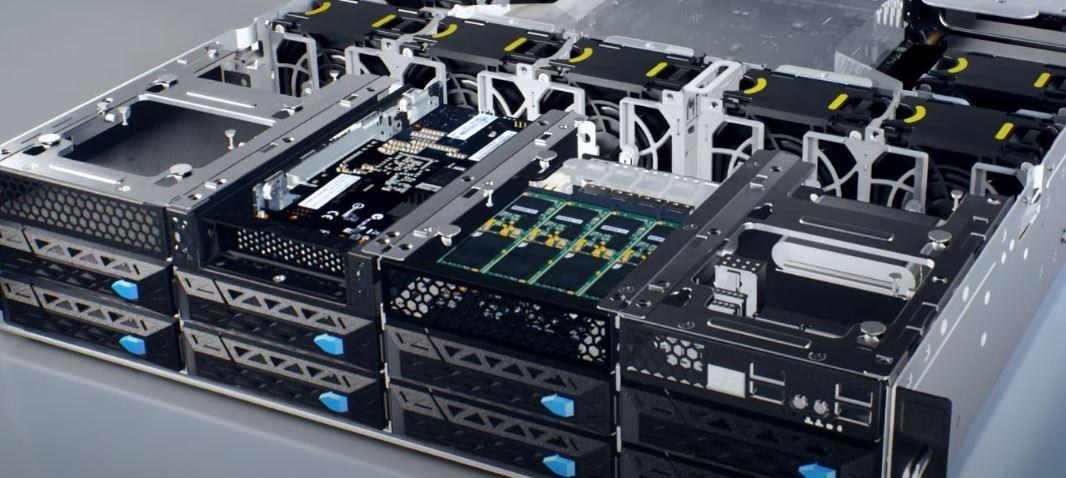

The 8x 3.5″ drive bays are quite interesting as well. The trays themselves are tool-less designs for 3.5″ drives. If you are using 2.5″ drives, like the Samsung PM1733 PCIe Gen4 NVMe SSD pictured below, you will need to screw them into the bottom of the tray. By default, the eight bays support 4 x SATA/SAS + 4 x SATA/SAS/NVMe devices. Two of the four NVMe-capable bays are enabled for NVMe by default, and one can optionally enable all four.

By reducing the number of drive bays in the front, and using 3.5″ bays where 2.5″ NVMe drives are likely to be used, ASUS is able to increase airflow and thus cooling to the system. The other impact is that ASUS has a customizable front I/O design. We already looked at the right-side USB and BIOS LED panel. On the left side, we see a blank spot but this is clearly designed to have some flexible I/O added. In the middle, we find two spaces that we wanted to focus on.

First, one can see the PCIe Gen4 x8 riser. This has a cabled PCIe Gen4 connection, a feature we are starting to see on more modern systems. It also has power for the PCIe device. This slot is designed for RAID controllers for the front panel drives in the event one wants to configure a system that way. In theory, one can also use a PCIe NIC or SSD here.

The other slot is a customizable slot. One can see the power cable on the zoom-out above. That power cable is used to provide power to options installed in this bay. ASUS offers customization such as the ability to have a 4x M.2 NVMe SSD array here. There is even an option to add an OCP NIC 3.0 slot (PCIe Gen4 x8). We did not have these options in our chassis, but here is a screenshot of the option.

Moving to the rear of the unit, we can see a common layout for this type of 2U GPU system. There are pods for the GPUs on either side. In the middle, we get the power supply and motherboard I/O.

Looking at the middle section we see the server I/O above the redundant 1.6kW power supplies. The I/O is fairly basic with two USB 3 Type-A ports, a VGA port, and three network ports. The first port is a management port while the next two ports are 1GbE network ports. ASUS is using the Intel i350 NIC for these ports. There are two low-profile PCIe expansion slots that we will discuss more in our internal overview.

Something that we wish ASUS had done with the system is invest a bit more in labeling. For example, looking at the three 1GbE ports, it can be difficult to figure out which is the management port and which are the Intel i350 ports. Likewise, our system did not have field service guide stickers, and there are no external labels on the GPU expansion slots that may aid in service without opening the system.

Next, we are going to take a look inside the server to see how this system is constructed.

Patrick – Nvidia GPUs seem to like std 256MB BAR windows. AMD Radeon VII and MI50/60 can map the entire 16GB VRAM address space, if the UEFI cooperates (so-called large BAR).

Any idea if this system will map large BARs for AMD GPUs?

The sidepod airflow view is quite

…… Nice

Fantastic review. I am considering this for a home server rack in the basement to go with some of my quiet Dell Precision R5500 units. Didn’t see anything in regard to noise level, at idle and during high utilization. Can you speak to this?

This is a really nice server. We use it for FPGA cards, where we need to connect network cables to the cards. This is not possible in most GPU servers (e.g. Gigabyte). It just works :-)

But don’t get disappointed if your fans are not yellow, ASUS changed the color for the production version.

We’ve bought one of these servers just now, but when we build in 8x RTX4000’s, only one gets detected by our hypervisor (Xenserver).

Is there any option in the BIOS we need to address in order for the other 7 to be recognized?

Update on my reply @ 24 feb 2022:

It seemed we received a faulty server. Another unit DID present us with all 8 GPUs in Xenserver without any special settings to be configured in the BIOS at all (Default settings used)
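For anyone chasing a similar "only one GPU shows up" problem, one quick sanity check on a Linux-based host is to count the display-class PCI devices that `lspci` reports before digging into BIOS settings. The snippet below is only a minimal sketch of that idea: the `count_gpus` helper and the `SAMPLE` text are hypothetical and written for illustration, and the device names in the sample are placeholders rather than output captured from this server.

```python
# Minimal sketch: count GPUs from the text output of `lspci`.
# Assumption: each line has the form "<address> <class>: <vendor and device>".

GPU_CLASSES = (
    "VGA compatible controller",
    "3D controller",
    "Display controller",
)


def count_gpus(lspci_text, vendor="NVIDIA"):
    """Count display-class PCI devices whose description mentions `vendor`."""
    count = 0
    for line in lspci_text.splitlines():
        # Drop the PCI address, then split the class from the description.
        _, _, rest = line.partition(" ")
        device_class, _, description = rest.partition(": ")
        if device_class in GPU_CLASSES and vendor.lower() in description.lower():
            count += 1
    return count


# Hypothetical sample text, made up for illustration only.
SAMPLE = """\
00:00.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Example Root Complex
01:00.0 VGA compatible controller: NVIDIA Corporation Example GPU (rev a1)
01:00.1 Audio device: NVIDIA Corporation Example Audio Controller (rev a1)
41:00.0 VGA compatible controller: NVIDIA Corporation Example GPU (rev a1)
c1:00.0 VGA compatible controller: ASPEED Technology, Inc. ASPEED Graphics Family
"""

if __name__ == "__main__":
    # The GPU audio function and the BMC's VGA device are not counted.
    print(count_gpus(SAMPLE))
```

On a real host, one could feed it live data with something like `subprocess.run(["lspci"], capture_output=True, text=True).stdout` and compare the result against the number of cards physically installed. If the count is already short at the PCI level, the problem sits below the hypervisor, which matches the faulty-unit outcome described above.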