ASUS ESC4000A-E10 Performance

For this exercise, we are using our legacy Linux-Bench scripts, which give us the cross-platform “least common denominator” results we have been using for years, as well as several results from our updated Linux-Bench2 scripts.

At this point, our benchmarking sessions take days to run and we are generating well over a thousand data points. We are also running workloads for software companies that want to see how their software works on the latest hardware. As a result, this is a small sample of the data we are collecting and can share publicly. Our position is always that we are happy to provide some free data but we also have services to let companies run their own workloads in our lab, such as with our DemoEval service. What we do provide is an extremely controlled environment where we know every step is exactly the same and each run is done in a real-world data center, not a test bench.

We are going to show off a few results and highlight a number of interesting data points in this article.

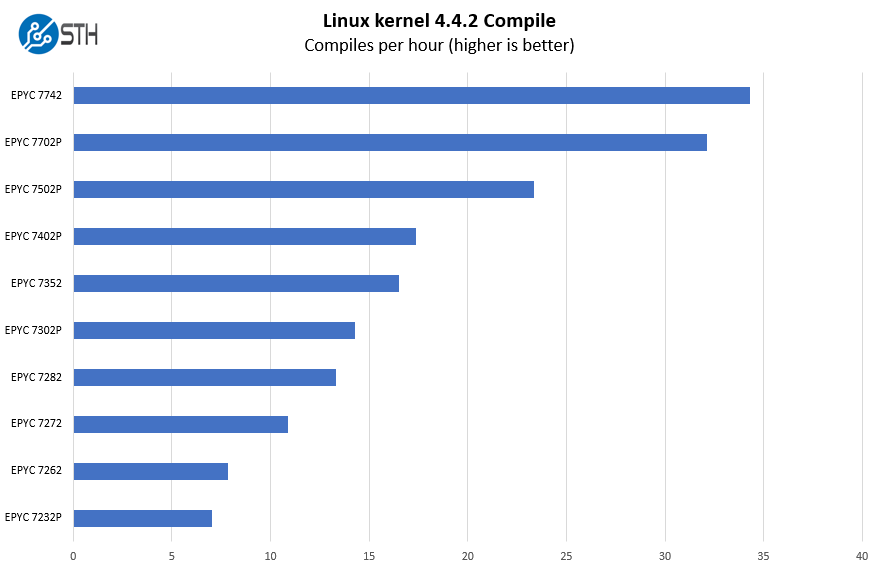

Python Linux 4.4.2 Kernel Compile Benchmark

This is one of the most requested benchmarks for STH over the past few years. The task is simple: we take the Linux 4.4.2 kernel from kernel.org and a standard auto-generated configuration file, then run make using every thread in the system. We are expressing results in terms of compiles per hour to make the results easier to read:

We are specifically using a few SKUs here to demonstrate some of the in-socket scaling one gets with AMD EPYC 7002. We cover 8 to 64 cores here. Although we did not get to test a 48-core part, we suspect that, given the top-end EPYC 7742 works, the 48-core parts work as well.
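As a rough illustration of the metric, compiles per hour is just a conversion from the wall-clock time of one full build. The sketch below is not the Linux-Bench script itself; the function names and the `make -jN` invocation are assumptions made for illustration.

```python
import subprocess
import time


def build_command(threads: int) -> list:
    """Assumed invocation: plain `make` with one job per hardware thread."""
    return ["make", f"-j{threads}"]


def time_command(cmd: list, cwd: str = None) -> float:
    """Run a command to completion and return elapsed wall-clock seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, cwd=cwd, check=True)
    return time.perf_counter() - start


def compiles_per_hour(elapsed_seconds: float) -> float:
    """Convert the duration of one kernel build into compiles per hour."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return 3600.0 / elapsed_seconds
```

For example, a build that finishes in 120 seconds works out to 30 compiles per hour. To time a real build, one could pass `build_command(os.cpu_count())` to `time_command` with `cwd` pointing at an already configured kernel source tree.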

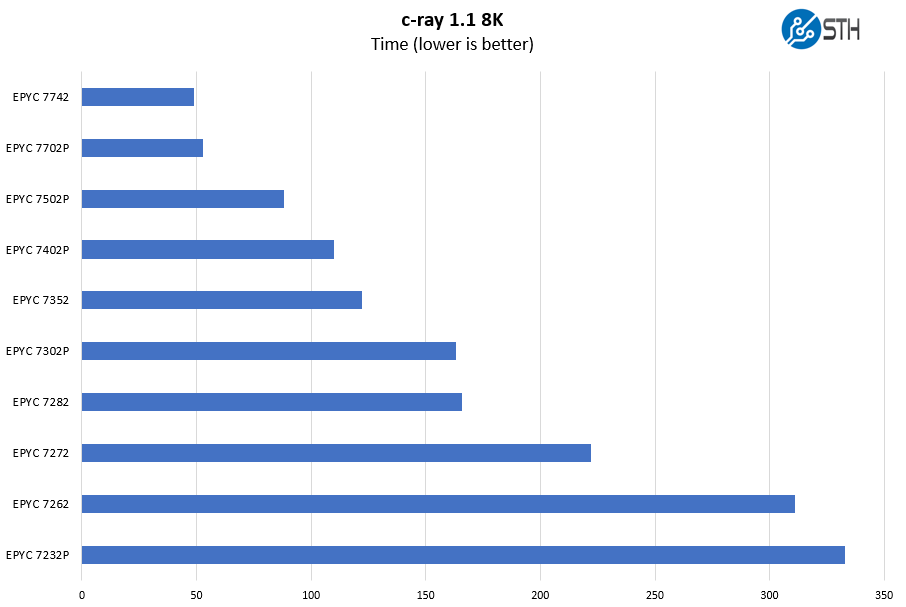

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular to show differences in processors under multi-threaded workloads. We are going to use our 8K results which work well at this end of the performance spectrum.

ASUS supports changing cTDP to 240W in this platform on parts such as the AMD EPYC 7742. This adds some marginal amount of performance that we discussed in our AMD EPYC 7H12 Review.

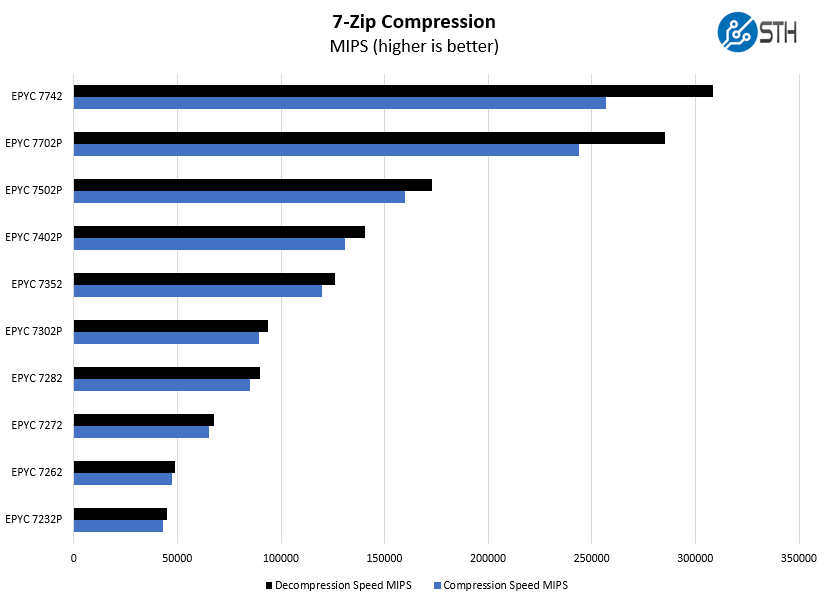

7-zip Compression Performance

7-zip is a widely used compression/decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

To us, the “P” series SKUs which only operate in single-socket configurations, but have much lower prices, are probably the best bets for a system like the ESC4000A-E10. They are lower cost than their non-P siblings and since this is a single-socket platform, that makes sense.

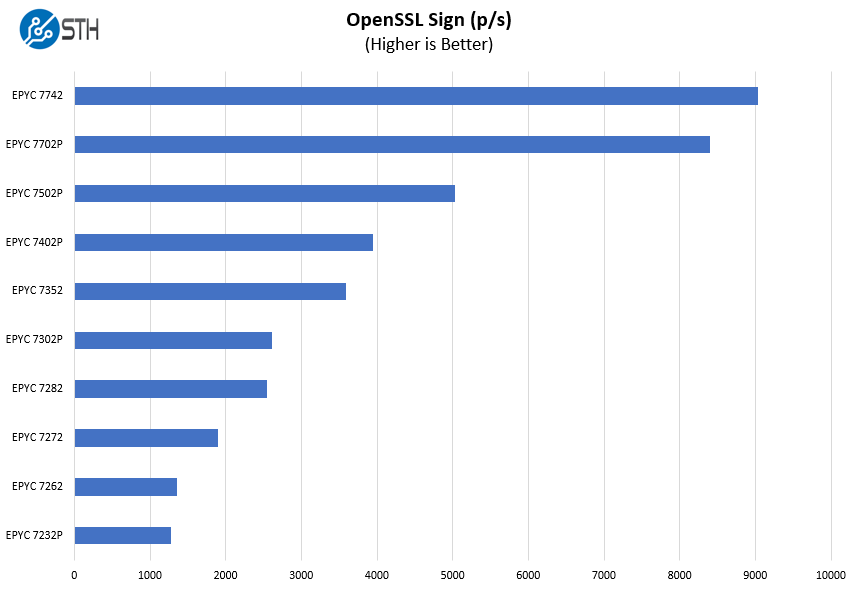

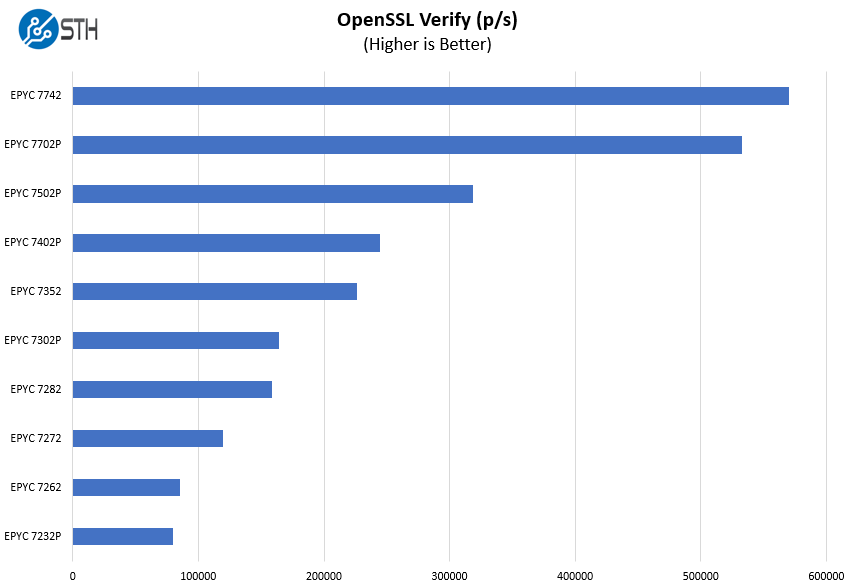

OpenSSL Performance

OpenSSL is widely used to secure communications between servers. This is an important component in many server stacks. We first look at our sign tests:

Here are the verify results:

Again, part of the appeal here is that AMD has these single socket “P” series parts that allow one to not just consolidate from two sockets to one in the ASUS platform, but then one can also lower costs beyond that 2:1 consolidation ratio.

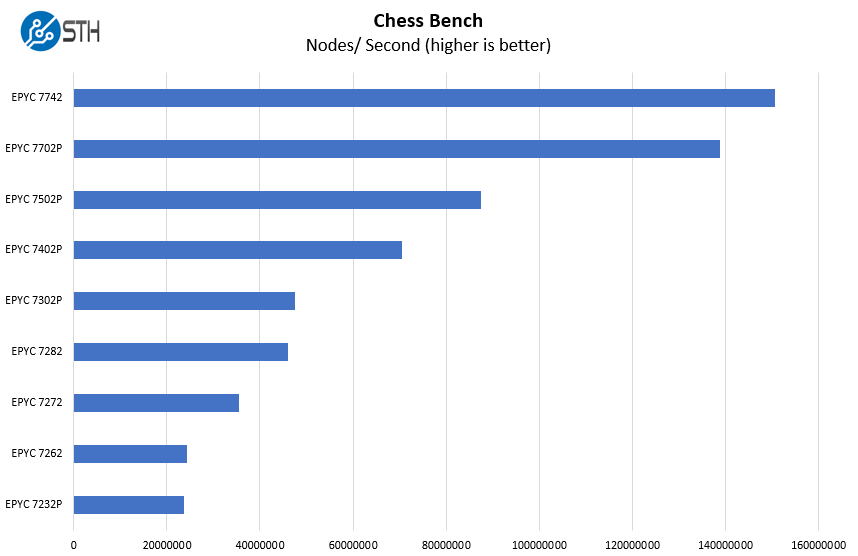

Chess Benchmarking

Chess is an interesting use case since it has almost unlimited complexity. Over the years, we have received a number of requests to bring back chess benchmarking. We have been profiling systems and are ready to start sharing results:

Overall, the system was able to perform within about 1.5% of our typical 2U 1P AMD EPYC performance, which is effectively within test variation. Those massive fans and ductwork that we saw in the hardware overview are doing a lot of cooling.
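For readers who want to apply the same kind of check to their own runs, here is a minimal sketch of comparing a result against a baseline with a tolerance band. The 1.5% default simply mirrors the figure quoted above; the function names are hypothetical and no values from our charts are used.

```python
def percent_difference(result: float, baseline: float) -> float:
    """Signed difference of result versus baseline, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (result - baseline) / baseline * 100.0


def within_variation(result: float, baseline: float, tolerance_pct: float = 1.5) -> bool:
    """True when the result sits inside the +/- tolerance band around the baseline."""
    return abs(percent_difference(result, baseline)) <= tolerance_pct
```

With a hypothetical baseline score of 100, a result of 99 would count as run-to-run variation, while a result of 97 would not.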

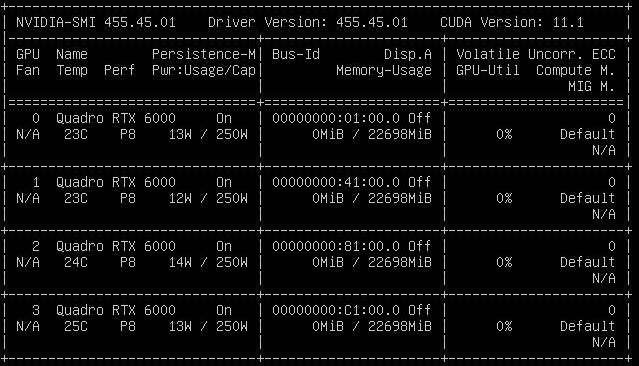

ASUS ESC4000A-E10 GPU Compute Performance

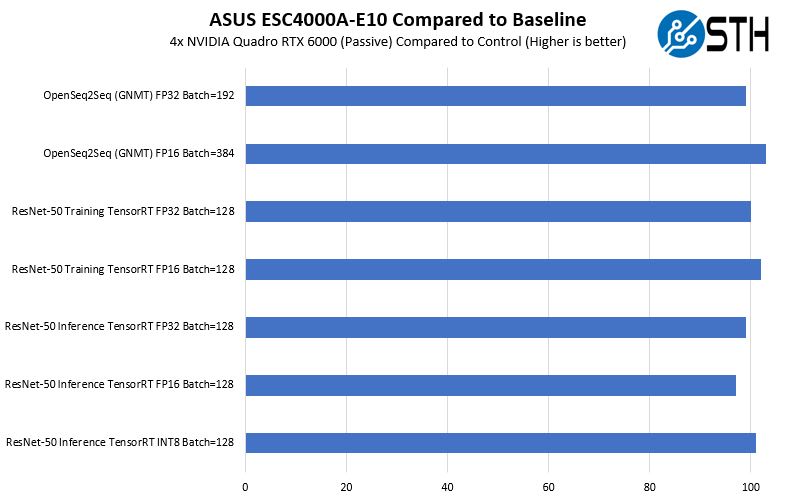

We wanted to validate that this type of system delivers per-GPU performance comparable to what we see in more traditional 8-10 GPU 4U servers. Here we are using four passively cooled NVIDIA Quadro RTX 6000 cards.

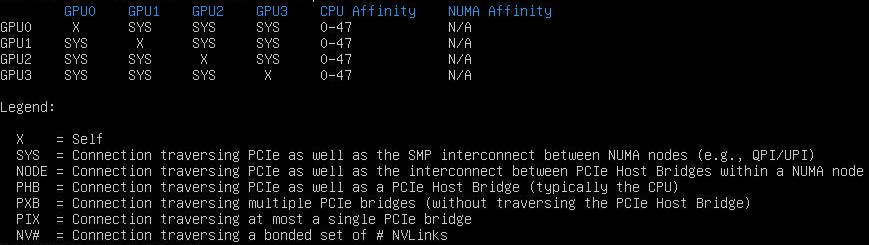

In the system, there are no PCIe switches. As a result, we can still get P2P transfers; however, the topology goes back to the AMD EPYC IO Die to traverse paths from one GPU to another. This would, of course, look different if we had NVLink bridges installed in each GPU side pod. As with any GPU system, you are likely going to have to make some BIOS tweaks to ensure high performance of your setup. That is something ASUS could streamline with options such as setting BIOS via the IPMI web interface that we discussed in the management section.

As such, we ran these four GPUs through a number of benchmarks to test that capability:

Overall, the performance was very close to what we were seeing in the larger systems when we use 4x GPU sets. Generally, GPU compute is limited mostly by topology and cooling so this is about what we would expect. Hopefully, we will get to publish a review of the 8x GPU server soon.
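To check the GPU-to-GPU paths discussed above on your own system, `nvidia-smi topo -m` prints a matrix of link types between devices. The sketch below parses text in that layout and reports whether any pair is linked over NVLink. The sample matrix is hypothetical and was not captured from this ESC4000A-E10; the labels a real system reports (for example `NODE`, `PHB`, or `SYS`) depend on the platform and BIOS settings.

```python
# Hypothetical sample in the layout of `nvidia-smi topo -m` (not from the review system).
SAMPLE_TOPOLOGY = """
        GPU0    GPU1    GPU2    GPU3    CPU Affinity
GPU0     X      NODE    NODE    NODE    0-63
GPU1    NODE     X      NODE    NODE    0-63
GPU2    NODE    NODE     X      NODE    0-63
GPU3    NODE    NODE    NODE     X      0-63
"""


def parse_topology(matrix_text: str) -> dict:
    """Return {(row_gpu, col_gpu): link_label} for every distinct GPU pair."""
    lines = [ln.split() for ln in matrix_text.strip().splitlines() if ln.strip()]
    # The first line is the header; keep only the GPU column names.
    header = [tok for tok in lines[0] if tok.startswith("GPU")]
    links = {}
    for tokens in lines[1:]:
        if not tokens[0].startswith("GPU"):
            continue  # skip NIC rows and legend lines
        row_gpu = tokens[0]
        for col_gpu, label in zip(header, tokens[1:]):
            if col_gpu != row_gpu:
                links[(row_gpu, col_gpu)] = label
    return links


def has_nvlink(links: dict) -> bool:
    """NVLink connections are labeled NV followed by a link count, e.g. NV2."""
    return any(label.startswith("NV") for label in links.values())
```

On the sample above, every pair resolves to a PCIe path through the CPU and `has_nvlink` returns `False`, which is what one would expect without NVLink bridges installed.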

Next, we are going to wrap up with our power consumption, STH Server Spider, and our final words.

Patrick – Nvidia GPUs seem to like std 256MB BAR windows. AMD Radeon VII and MI50/60 can map the entire 16GB VRAM address space, if the UEFI cooperates (so-called large BAR).

Any idea if this system will map large BARs for AMD GPUs?

The sidepod airflow view is quite

…… Nice

Fantastic review. I am considering this for a home server rack in the basement to go with some of my quiet Dell Precision R5500 units. Didn’t see anything in regard to noise level, at idle and during high utilization. Can you speak to this?

This is a really nice server. We use it for FPGA cards, where we need to connect network cables to the cards. This is not possible in most GPU servers (e.g. Gigabyte). It just works :-)

But don’t get disappointed if your fans are not yellow, ASUS changed the color for the production version.

We’ve bought one of these servers just now, but when we build in 8x RTX4000’s, only one gets detected by our hypervisor (Xenserver).

Is there any option in the BIOS we need to address in order for the other 7 to be recognized?

Update on my reply @ 24 feb 2022:

It seemed we received a faulty server. Another unit DID present us with all 8 GPUs in Xenserver without any special settings to be configured in the BIOS at all (Default settings used)