Today, AMD is launching the AMD Instinct MI300 series. This is an entire family that is, in many ways, similar to Intel’s original vision for 2025 Falcon Shores (although that is now GPU-only) and NVIDIA’s Hopper series. The difference is that AMD is launching in mid-2023 and already delivering parts for an enormous supercomputer. Make no mistake, if something is going to chip into NVIDIA’s market share in AI during 2023, this is one of the few solutions that have a legitimate chance.

We are writing this live at AMD’s data center event so please excuse typos. Note: We did a quick update adding images we found of the MI300.

AMD Instinct MI300 is THE Chance to Chip into NVIDIA AI Share

Just to be clear, there are only a few AI companies that have a realistic chance to put a dent in NVIDIA’s AI share in 2023. NVIDIA is facing very long lead times for its H100 and now A100 GPUs. As a result, if you want NVIDIA for AI and do not have an order in today, we would not expect to deploy before 2024.

We covered the Intel Gaudi2 AI Accelerator Super Cluster as one effort to offer an alternative. Beyond a large company like Intel, there are only a few companies that have a clear path this year to make a dent. One is Cerebras and its CS-2 and Wafer Scale Engine-2. Cerebras removes a huge amount of overhead by keeping wafers intact and using silicon interconnects versus PCB and cables. AMD has Instinct MI300.

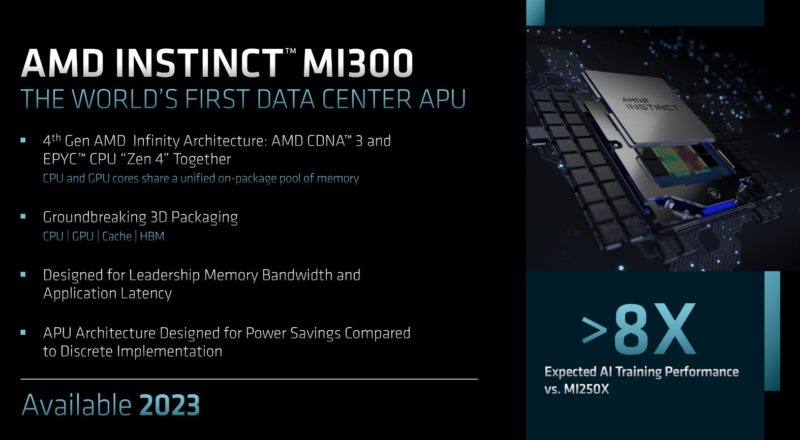

The MI300 is a family of very large processors, and it is modular. AMD can utilize either CDNA3 GPU IP blocks or AMD Zen 4 CPU IP blocks along with high-bandwidth memory (HBM.) The key here is that AMD is thinking large scale with the MI300.

The base MI300 is a large silicon interposer that provides connectivity for up to eight HBM stacks and four sets of GPU or CPU tiles.

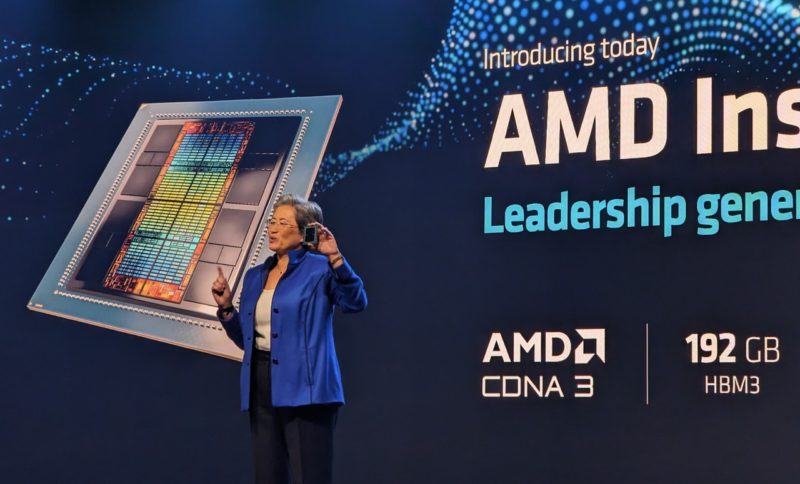

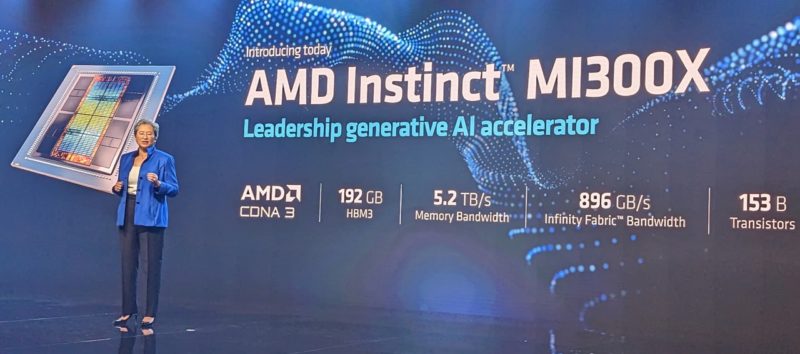

For a traditional GPU, the MI300 will be a GPU-only part. All four center tiles are GPU. With so much HBM onboard (192GB) AMD can simply fit more onto a single GPU than NVIDIA can. The NVIDIA H100 tops out currently at 96GB per H100 in the NVIDIA H100 NVL for High-End AI Inference.

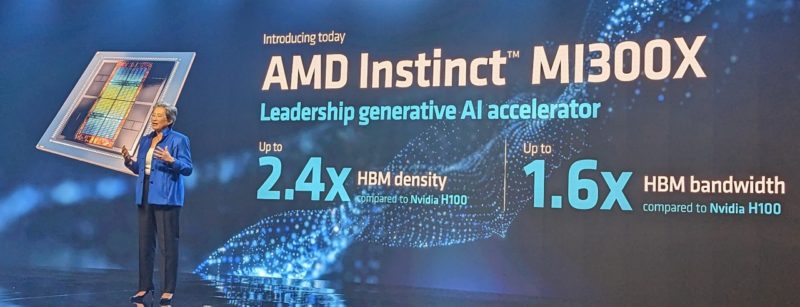

The AMD Instinct MI300X has 192GB of HBM3, 5.2TB/s of memory bandwidth 896GB/s of Infinity Fabric bandwidth. This is a 153B transistor part.

AMD is going directly at NVIDIA H100 with this. Other AI vendors are focused on comparing to the A100, but AMD is going directly at the super popular NVIDIA H100.

The advantage of having a huge amount of onboard memory is that AMD needs fewer GPUs to run models in memory, and can run larger models in memory without having to go over NVLink to other GPUs or a CPU link. There is a huge opportunity in the market for running large AI inference models and with more GPU memory, larger more accurate models can be run entirely in memory without the power and hardware costs of spanning across multiple GPUs.

For a traditional CPU, the MI300 can have a CPU-only part. This gives us the familiar Zen 4 cores that we find in Genoa but with up to 96 cores lots of HBM3 memory. We are about to do our Intel Xeon Max review with up to 56 cores and 64GB of onboard HBM for some sense of scale. AMD is not talking about this part at the event today.

For next-gen parts, AMD can utilize three GPU tiles and one CPU tile. That is a tightly coupled solution where the CPU and GPU are on the same silicon interposer. It is more similar to a NVIDIA Grace Hopper being a CPU and GPU combination. With both onboard, AMD avoids a PCIe connection over PCB between the CPU and GPU. AMD also does not need external DDR5 memory (more on that in a bit.) This is important because it lowers power consumption and provides higher memory bandwidth. One way to think about this is AMD has a CPU dedicated to quickly processing data and feeding GPUs.

The AMD Instinct MI300A has 24 Zen 4 cores, CDNA3 GPU cores, and 128GB HBM3. This is the CPU that is being deployed for the El Capitan 2+ Exaflop supercomputer.

AMD can also populate these in different ratios including potentially not populating everything. At the same time, AMD may not productize all of the combinations one can imagine. Still, AMD’s modular approach also means it has a platform to potentially update tiles asynchronously in the future.

AMD Instinct MI300 OAM and a CXL Bonus

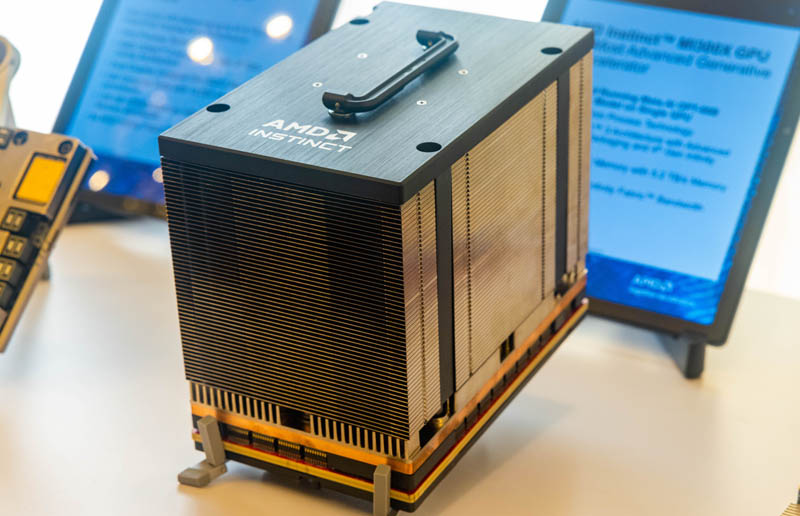

AMD announced today a 1.5TB of HBM3 memory solution that takes 8x AMD Instinct MI300 OAM modules and places them onto an OCP UBB.

We have also seen 4x OAM boards with PCIe slots and MCIO connectors for directly attaching NICs, storage, and even memory.

Something that AMD is not focusing on, but that we have seen folks talk about when we have seen MI300 platforms live over the last few weeks, is CXL. AMD supports CXL Type-3 devices with its parts. There is a path to getting more memory, and that path uses CXL memory expansion modules. That is huge.

Final Words

The big takeaway here is AMD just launched a massive GPU. AMD knows that a big part of the journey is through AI software. That is where NVIDIA Has a massive lead. AMD showed it is committed to working with PyTorch, Hugging Face, and more.

Make no mistake, the AMD MI300 is more than just a massive GPU. This is AMD’s vision for a next generation of high-performance compute.

Uhm, the MI300X has 192GB as shown on the slides… I know it was expected to be 128 GB.. ;)

Not exactly thrilled by the inferencing speed on the 40B model. Considering that ChatGPT is 175B, it is doing a model with 1/4 the size, and tokens/s is not that great.

Technically you could do llama.cpp inferencing of the 4bit GGML CPU models with GPU CLBlast, CuBlast for either Stable-Vicuna 13B, or the 65B https://huggingface.co/TheBloke/guanaco-65B-GGML, get much better ELO on the CPU.

In your sweep through alternatives you made no mention of Graphcore IPU hardware and software support for AI.

(I work for Graphcore, but this isn’t sanctioned by them – I am speaking for myself).

I know it is very much not meant for this and probably wouldn’t work, but I’d love to try the MI300A as an APU to see if it can run Crysis. And yes, I can hear people yelling that it probably doesn’t even have a display engine, but still, it would be fun if it worked. I kinda want AMD to make something like that on a much smaller scale. It would be fun to have a highly integrated but socketed SoC with a powerful discrete-level GPU and all IO circuitry on a mostly passive ITX board that only carries the VR circuitry and perhaps some RAM, if there is no HBM onboard.

Who needs a mobo? Take a 1 CPU + 3 GPU part, bolt on a couple USB, a power jack and a bunch of x8 cabled PCIe5 jacks.

@Robert Zsolt – as you noted it has no render engine so it cannot run Crysis. However it can SIMULATE 100 monkeys playing Crysis at 100 FPS.

Where are the MI300 benchmarks?

Note, MI300X has 13 chiplets (base interposer, 4 NOC w/Infinity fabric and cache, 8 GPU chiplets), while MI300A is 14 (remove 2 GPU chiplets, add 3 Zen4 chiplets). MI300C would be 17 (remove 8 GPU chiplets, add 12 CPU chiplets). These counts don’t include HBM memory or structural silicon.

No performance numbers? Why is there only focus on the memory size? No TDP, no FLOPs, no benchmarks? Interesting…

No stats on performance? Why does the memory size get all the attention? There no TDP, FLOPs, or benchmarks? Interesting