Today we are finishing up a weekend trifecta of GPU server reviews with the Tyan Thunder HX FT83A-B7129. This is a 4U server that can take up to ten double-width GPUs plus some additional PCIe expansion. Based around 3rd generation Intel Xeon Scalable processors, codenamed “Ice Lake”, this system also supports PCIe Gen4. In this review, we are going to get into the system and see what makes it different.

Tyan Thunder HX FT83A-B7129 Hardware Overview

For this review, we are going to take a look at the exterior of the server, then the interior. As a quick note, the specific model we are looking at is the B7129F83AV8E4HR-N. Tyan sells a few variants of this server as well. We also have a video you can check out here:

As always, we suggest opening this in a YouTube tab, browser, or app for a better viewing experience. The video has quite a bit of context around the design decisions made in this system.

Tyan Thunder HX FT83A-B7129 External Hardware Overview

The system itself is a 4U chassis measuring 32.68 x 17.26 x 6.9 inches or 830 x 438.4 x 176mm. It is far from the deepest GPU server we have tested, yet it still offers a ton of PCIe expandability.

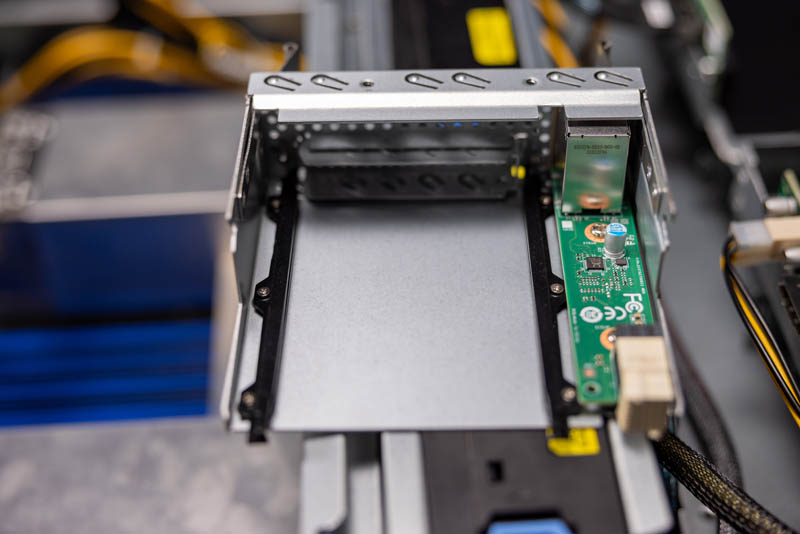

In terms of storage, we get 12x 3.5″ bays that are SATA / SAS (SAS with a RAID controller or HBA) capable. Four of the bays are NVMe capable as well, so long as the system is configured for it. One will notice that above the 3.5″ bays there is 1U of space with four dividers. This is for the B7129F83AV14E8HR-N variant that adds 10x 2.5″ drive bays. For many, the 12x 3.5″ configuration will be sufficient and provides more airflow. We will quickly note that Tyan also has its tool-less 3.5″ trays in this system with an easy latching mechanism. If one wants, 2.5″ drives can be added.

On the front left side of the system, we have two USB 3 ports and a VGA port.

On the right, we have a serial console port, power/reset buttons, and status LEDs. One of the cool features here is that Tyan has made all of the KVM ports cold aisle serviceable. Instead of having to get a KVM cart in the data center and hook up to the rear of a GPU rack, which is often very hot with a ton of air moving, one can instead go to the cold aisle. A small but nice feature is that the IPMI out-of-band management port is on the rear, not the front, so one does not end up in the situation we see on some GPU servers where the management port needs to be wired at the front of the system.

Moving to the rear, we get a fairly common design for these 4U 10x GPU servers. The top is all dedicated to I/O expansion for GPUs. The bottom is for power supplies and I/O.

The I/O is handled by a module that has two main functions. First, there are the out-of-band management port and USB ports. Second, there is an OCP NIC 3.0 slot here.

This module can be removed and easily serviced.

In terms of power supplies, there are four 1.6kW 80Plus Platinum PSUs. The 1.6kW rating applies at 200V+, while the power supplies drop to 1kW each at lower voltages like 110/120V. In a system like this, we get 3+1 redundant power, which means up to 4.8kW usable at higher voltages but only 3kW usable at lower voltages. Our test configuration drew over 3kW, so this is an important distinction.
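The redundancy arithmetic above can be sketched in a few lines of Python. This is only an illustration of the 3+1 math using the PSU ratings quoted in the review. The function names and the 3,200W example load are hypothetical, since the review only says the test configuration was "over 3kW".

```python
def usable_power_w(psu_count: int, psu_rating_w: int, redundant: int = 1) -> int:
    """Usable capacity in watts when `redundant` PSUs are held in reserve."""
    if not 0 <= redundant < psu_count:
        raise ValueError("redundant must be >= 0 and less than psu_count")
    return (psu_count - redundant) * psu_rating_w


def fits_budget(load_w: int, budget_w: int) -> bool:
    """True if the load stays within the redundant power budget."""
    return load_w <= budget_w


# Ratings from the review: 1.6kW per PSU at 200V+, 1kW per PSU at 110/120V.
HIGH_LINE_W = usable_power_w(psu_count=4, psu_rating_w=1600)  # 3+1 redundancy
LOW_LINE_W = usable_power_w(psu_count=4, psu_rating_w=1000)   # 3+1 redundancy

# Hypothetical load: the review gives no exact figure, only "over 3kW".
EXAMPLE_LOAD_W = 3200

if __name__ == "__main__":
    print(f"Usable at 200V+:    {HIGH_LINE_W} W")
    print(f"Usable at 110/120V: {LOW_LINE_W} W")
    print(f"Load fits at 200V+:    {fits_budget(EXAMPLE_LOAD_W, HIGH_LINE_W)}")
    print(f"Load fits at 110/120V: {fits_budget(EXAMPLE_LOAD_W, LOW_LINE_W)}")
```

With those assumed numbers, the load fits the redundant budget on a 200V+ circuit but not on 110/120V, which is why the input voltage matters for a configuration like ours.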

Next, let us get inside the chassis to see what makes this system unique.

So many GPU servers lately

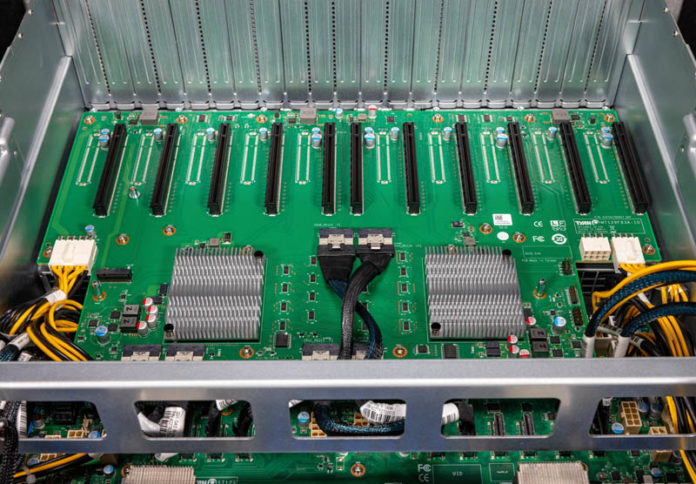

Is multi-root host supported on this platform? That is a huge and flexible benefit of using the Broadcom PCIe switches and cabling them to the motherboard: each of the PCIe switches can be configured to link to each of the CPU sockets. If the motherboard and GPU platforms support this multi-host topology, it cuts out a hop between the CPU sockets to access memory, since each card can then go directly to the appropriate socket, which normalizes memory access latency. That is very helpful for some applications. The downside is that per-socket PCIe bandwidth is halved. As a flexible solution using PCIe switches and cables, this is configurable to optimize for your use case.

There is also the obvious option of swapping the CPU board for an AMD EPYC one while reusing the GPU I/O board. Good engineering on Tyan’s part. While tied to PCIe 4.0 due to the switches, it would permit rapid deployment of dual-socket Intel Sapphire Rapids and AMD Genoa platforms next year, with the need to replace only part of the motherboard.

It’d be nice to see a few more options in the front for those two x16 PCIe 4.0 slots. In this system it is clear that they’re targeted at HBA/RAID controller cards, but I feel they’d be better off as OCP NIC 3.0 slots or dedicated to some NVMe drive bays. Everything being cabled does provide tons of options though.

I do think it is a bit of a shortcoming that there is no onboard networking besides the IPMI port. Granted, anyone with this server would likely opt for some higher-speed networking (100 Gbit Ethernet etc.), but having a tried and true pair of 1 Gbit ports that are accessible at the OS and application level for tasks like cluster management is nice. Being able to PXE boot without the hassle of going through a 3rd party NIC is advantageous. Granted, it is possible to boot the system through the IPMI interface using a remotely mounted drive, but in my prior experience that scheme doesn’t lend itself well to automation.

Can we please put an end to junky drive trays? There has to be a better option.
