Tyan Thunder HX FT83A-B7129 Internal Hardware Overview

For this overview, we are going to start at the front of the system and then move to the rear.

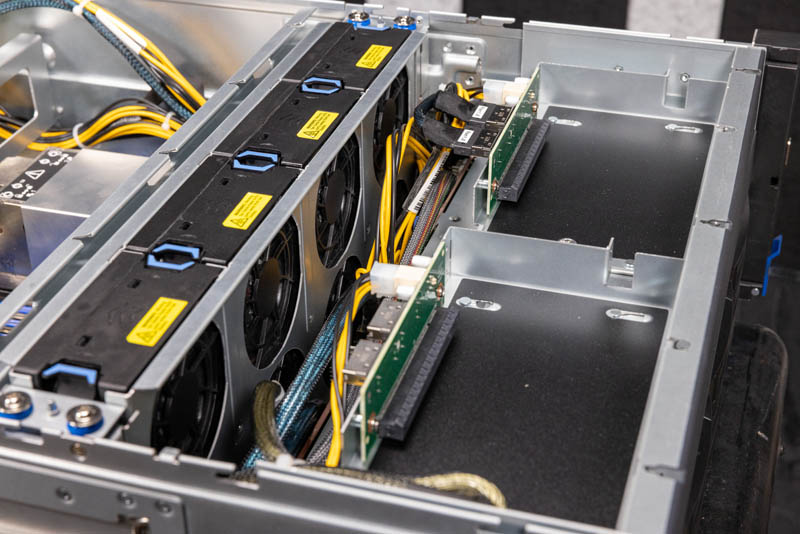

First, there are two PCIe x16 slots that sit in the front of the chassis on the top U of the machine.

These are designed for applications like RAID controllers and HBAs, especially if one wants to use either a SAS controller or a tri-mode controller.

As a quick note, because the system utilizes cables, the second slot (on the right in the image below) is not hooked up to data in our test configuration. Cabled PCIe connections are nice because one can, for example, use them to directly connect NVMe drives or use them to activate this slot. One can also choose to route either eight or sixteen lanes of PCIe to each slot, so x8 / x8 is a possible configuration as well.

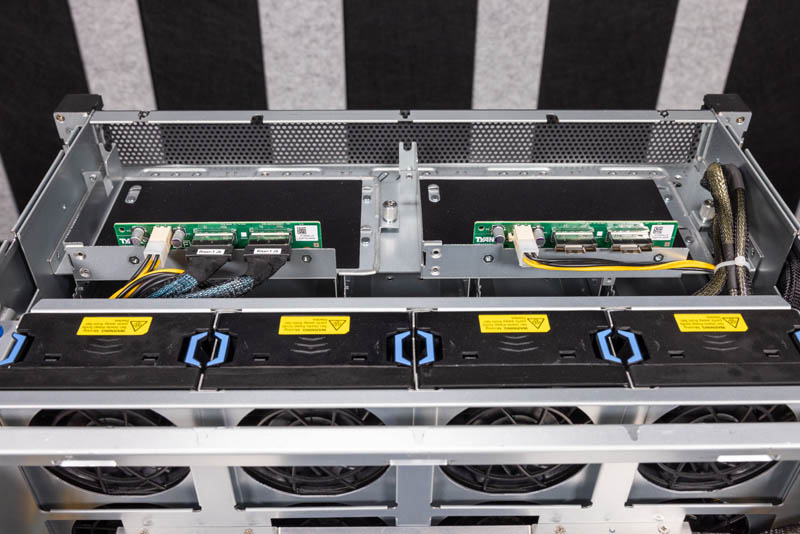

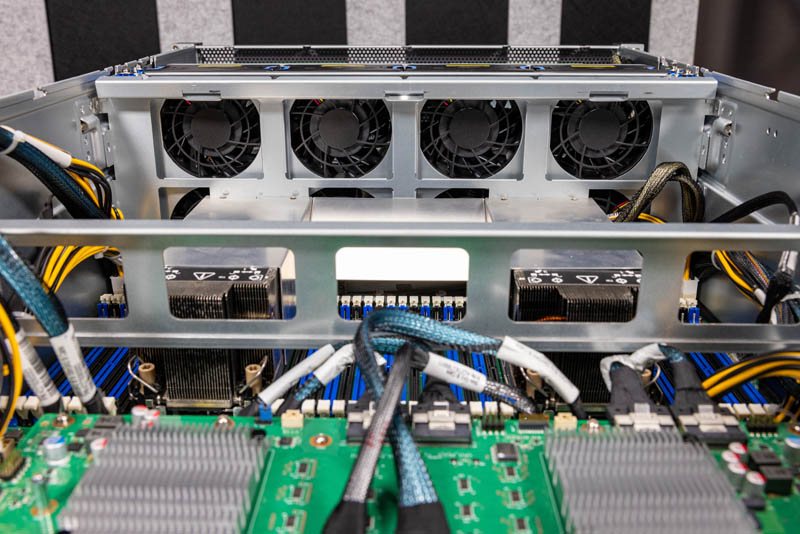

Behind this area, we have the fan partition. The fans are counter-rotating fan modules that are stacked two high to extend from the bottom to the top of the chassis. Each of these fan modules, therefore, has four sets of fan blades spinning. The hot-swap mechanism that Tyan uses is surprisingly easy to service. It felt absolutely great to swap the fans.

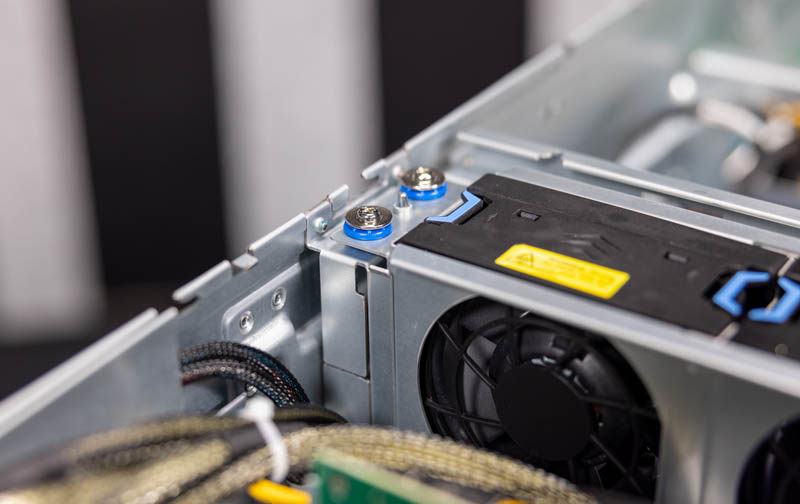

One small item that we see here is the rubber grommets on the screws securing the fan partition to the chassis. This may seem like a small feature, but it shows that Tyan has been doing this for generations. These systems have very high-speed fans, and vibration is certainly a challenge. It is nice to see these little enhancements in the latest designs versus some of the early GPU systems we tested many years ago.

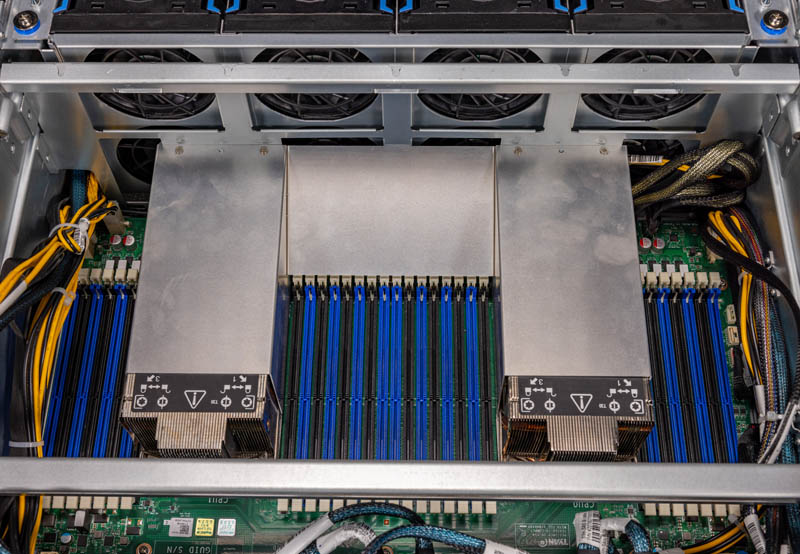

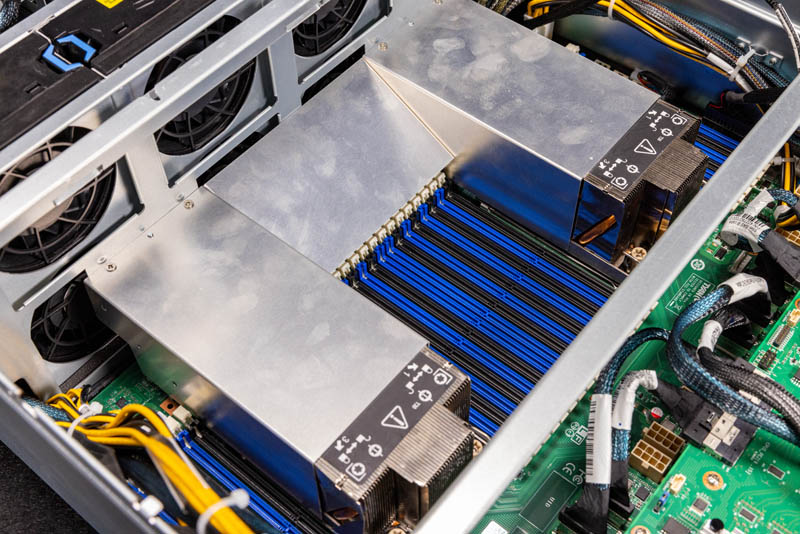

One other cooling-related feature is the fan shroud. Years ago, many systems had a flimsy air shroud around the CPUs. For this system, Tyan is not even using a nicer hard plastic shroud. Instead, Tyan has a full metal shroud to direct airflow from the fans to the CPUs. One will see that each CPU is being serviced by two fans to help with redundancy in this design.

The CPUs in this system are 3rd generation Intel Xeon Scalable CPUs. That means that we get features such as AVX-512, VNNI, PCIe Gen4, 8-channel DDR4-3200 memory, and support for Intel Optane PMem 200. That metal cooling shroud along with the 2U heatsinks means that Tyan can handle up to 270W TDP CPUs.
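To put the 8-channel DDR4-3200 figure in context, here is a minimal sketch of the theoretical peak memory bandwidth arithmetic. It assumes the standard 64-bit (8-byte) data path per DDR4 channel and that both sockets have every channel populated. The function name is ours for illustration, and real-world throughput will be lower than this ceiling.

```python
def ddr_peak_bandwidth_gbs(transfers_mt_s: int, channels: int, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (decimal).

    transfers_mt_s: memory speed in mega-transfers per second (3200 for DDR4-3200)
    channels:       populated memory channels per socket
    bus_bytes:      data width of one channel in bytes (assumed 8, i.e. 64-bit)
    """
    return transfers_mt_s * 1e6 * bus_bytes * channels / 1e9


per_socket = ddr_peak_bandwidth_gbs(3200, 8)
dual_socket = 2 * per_socket  # assumes two populated sockets
print(f"{per_socket:.1f} GB/s per socket, {dual_socket:.1f} GB/s across two sockets")
```

This works out to roughly 204.8 GB/s per socket as a ceiling, before any efficiency losses.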

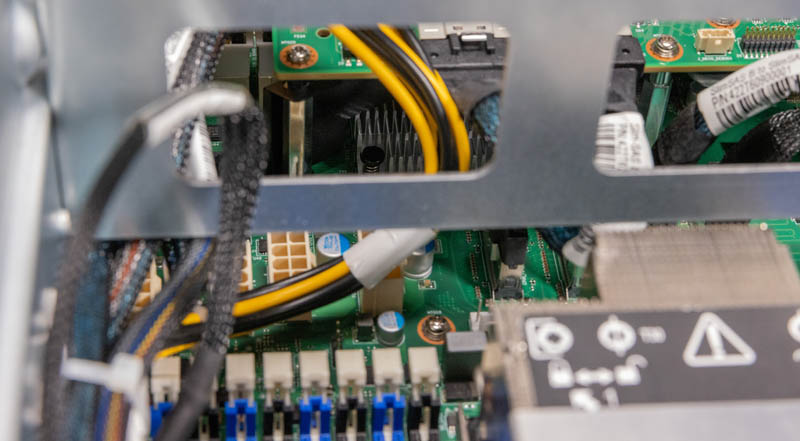

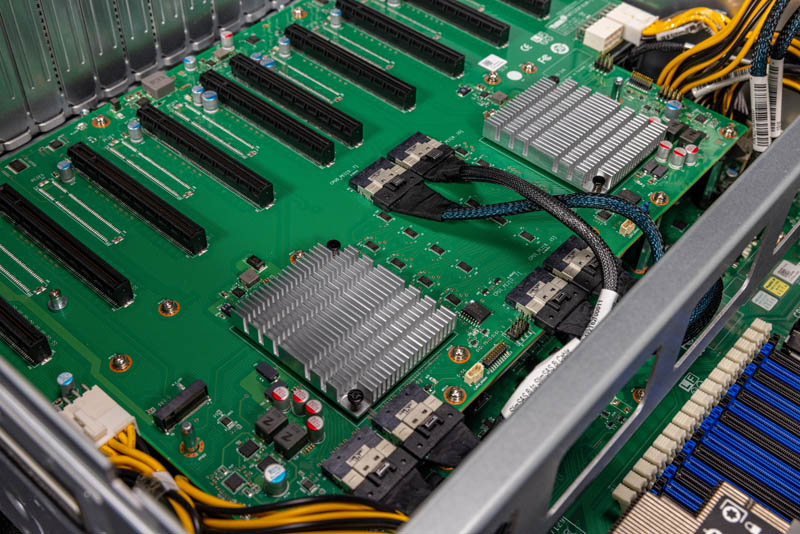

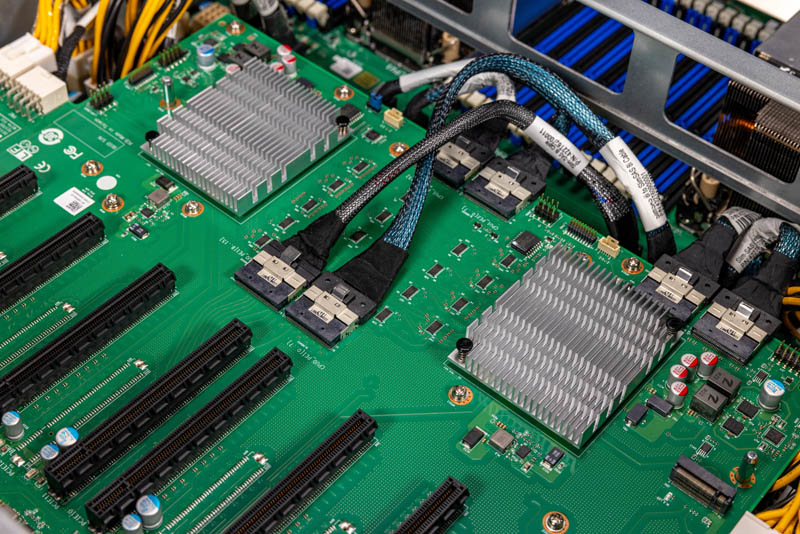

Here is another view looking from the GPU/accelerator area back to the front of the chassis.

The system tucks its PCH below the main GPU board. We can see it as the small silver heatsink under the GPU board in this photo.

All of the GPU power and PCIe connectivity is handled via cabled connections. In the video (we missed a photo of it) we show that Tyan has both data center as well as consumer-style GPU power options for the server. If you have ever purchased a GPU server only to find you had the wrong cables, you will know how important that can be.

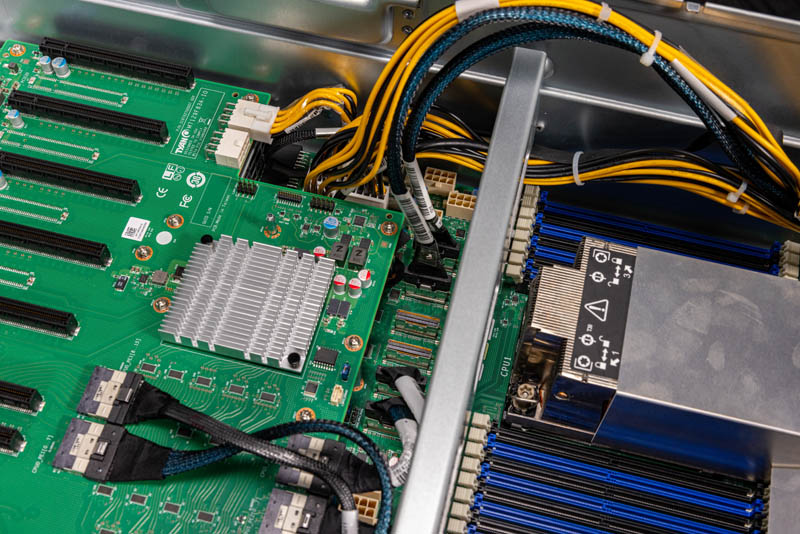

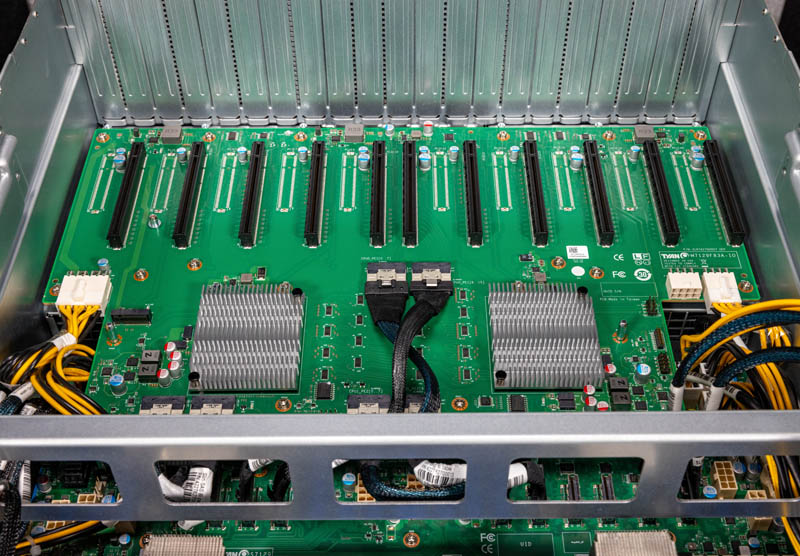

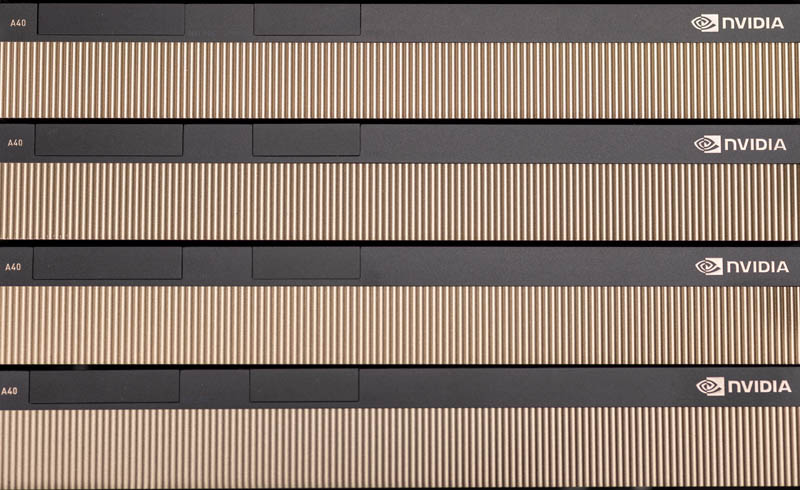

The main feature of the system, however, is the 11x PCIe GPU baseboard. This is designed to handle either 8x GPU or 10x GPU configurations while still leaving a center slot for networking.

With the GPUs installed, the slot spacing is correct to allow NVLink bridges between the PCIe cards.

By now, many will have seen the two silver heatsinks. These cover PLX 88096 / PEX88096 96-port PCIe switches. Technically they are 98-port switches with two x1 management lanes. These Broadcom switches add roughly 100ns of latency and are PCIe Gen4 capable, making them a fairly significant bandwidth upgrade over the older PCIe Gen3 switches we saw on previous systems.

You will also note that there is an M.2 slot on this PCB. That M.2 slot is very hard to service with the GPUs installed, so while one may want to use it for a boot device, our sense is that most will not use this slot since it requires removing GPUs to service.
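As a rough illustration of the Gen3 to Gen4 bandwidth jump, the sketch below computes raw per-direction link bandwidth from the published signaling rates (8 GT/s for Gen3, 16 GT/s for Gen4) and the 128b/130b line encoding both generations use. The helper name is ours, and the result ignores packet and protocol overhead, so achievable throughput is lower.

```python
# Signaling rate per lane in GT/s for each generation.
GT_PER_S = {"gen3": 8.0, "gen4": 16.0}

# Gen3 and Gen4 both use 128b/130b encoding: 128 payload bits per 130 line bits.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gbs(gen: str, lanes: int) -> float:
    """Raw one-direction bandwidth in GB/s after line encoding."""
    return GT_PER_S[gen] * ENCODING_EFFICIENCY / 8 * lanes


for gen in ("gen3", "gen4"):
    print(f"{gen} x16: {pcie_bandwidth_gbs(gen, 16):.2f} GB/s per direction")
```

An x16 link goes from about 15.75 GB/s to about 31.51 GB/s per direction, so each GPU slot behind the switches has twice the raw link bandwidth of a Gen3 design.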

Overall, this is a really interesting hardware platform.

Let us next get to the management as well as some of the performance testing.

So many GPU servers lately.

Is multi-root host supported on this platform? That is a huge and flexible feature of leveraging both the Broadcom PCIe switches and cabling those to the motherboard: each of the PCIe switches can be configured to link to each of the CPU sockets. If the motherboard and GPU platforms support this multi-host topology, it will cut out a hop between the CPU sockets to access memory, as each card can then go directly to the appropriate socket and normalize memory access latency. Very helpful for some applications. The downside is that per-socket PCIe bandwidth is halved. As a flexible solution using PCIe switches and cables, this is configurable to optimize for your use case.

There is also the obvious option of being able to swap the CPU board with AMD EPYC while re-using the GPU IO board. Good engineering on Tyan’s part. While tied to PCIe 4.0 due to the switches, it would permit rapid deployment of dual socket Intel Sapphire Rapids and AMD Genoa platforms next year with the need to only replace part of the motherboard.

It’d be nice to see a few more options in the front for those two x16 PCIe 4.0 slots. In this system it is clear that they’re targeted for HBA/RAID controller cards, but I feel they’d be better off as some OCP NIC 3.0 cards or dedicated to some NVMe drive bays. Everything being cabled does provide tons of options though.

I do think it is a bit of a shortcoming that there is no onboard networking besides the IPMI port. Granted, anyone with this server would likely opt for some higher speed networking (100 Gbit Ethernet etc.), but having a tried and true pair of 1 Gbit ports that are accessible at the OS and application level for tasks like cluster management is nice. Being able to PXE boot without the hassle of going through a 3rd party NIC is advantageous. Granted, it is possible to boot the system through the IPMI interface using a remotely mounted drive, but that is a scheme that doesn’t lend itself well to automation, from my prior experience.

Can we please put an end to junky drive trays? There has to be a better option.