At STH, we have been looking at the masses of 128GB LPDDR5X machines focused on AI inference for several quarters now. Something we did recently was super-cool and had an impact on the business side of STH that is worth sharing. We used the Dell Pro Max with GB10 to automate a partial role we were looking to hire for. This is not a doom-and-gloom AI is taking jobs story. Instead, we were able to roll funding into hiring a new managing editor at STH. Another way to look at it is that we were able to use a Dell Pro Max with GB10 to keep our business data local, and utilizing AI, it had a 12-month (or less) payback period while having plenty of capacity to use the box way more. When we have done NVIDIA GB10 system reviews previously, those have often resulted in folks saying they were “expensive,” albeit pre-DRAM price increases. This is instead an example of how you can think about getting an almost crazy amount of value from a system.

As a quick note, Dell sent the two Dell Pro Max with GB10 systems, and so we need to say this is sponsored. In practice, having the additional systems enabled our side-by-side testing of multiple models in our workflows on historical data simultaneously. That yielded perhaps the most important finding in this exercise around speed versus accuracy.

Step 1: Understanding the Reporting Problem at STH

As STH has grown, we now have six owned distribution channels with over 100,000 views/month, and all are targeted at different audiences. That does not include social media channels. Something we need to look at on a weekly basis is the performance of articles. For example, we might want to know about the performance of an article and a short video on STH labs versus a full-length video on the main YouTube channel. We may want to analyze the time spent engaging with the content. We may want to pull in newsletter views and so forth. Further, sometimes we will get requests from external parties about the performance of certain pieces. For example, Dell might say, “Hey, how many views did that Dell AI Factory tour get?” Or ask how many it got in the first 30 days or 90 days.

We have a decent number of data sources, some reaching 16 years old at this point. As with just about every business, reporting is necessary. While we are discussing how we use AI for reporting at STH, the approach is something that just about every business can use.

With that, it was time to get into the process.

Step 2: Building the Process Flow with n8n

While folks are getting very excited about Moltbot / Clawdebot these days, we are using n8n for this workflow since we wanted to run this locally on our data, and there were existing integrations to data sources like Google Analytics. That may not seem like a big deal, but we had something working in 4-5 hours of work (plus many hours of testing). Having pre-built templates to get to data sources made life very easy. Also, I tend to prefer re-using existing tools like this over asking an agent to re-build custom tools. Some may disagree, but again, I wanted to make something widely applicable.

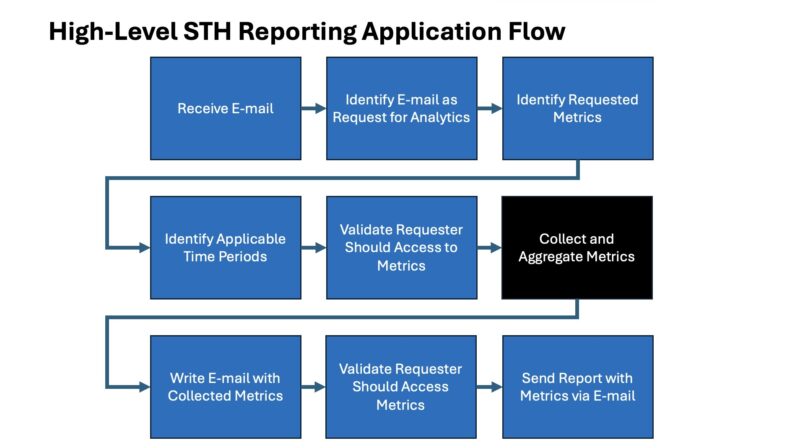

Trying to balance making this super specific to STH and making it more broadly applicable to those reading, here is a high-level sense of the process steps in the flow.

While the details behind each step are a bit more unique to STH, I think the high-level flow will feel familiar to many. Perhaps the biggest change is that today we can use an LLM to do things like ingest any text and extract the data points that are required. I remember doing a project just over a decade ago where folks had to write e-mails with specific formatting and structure to get an Oracle ERP to properly ingest data into a process.

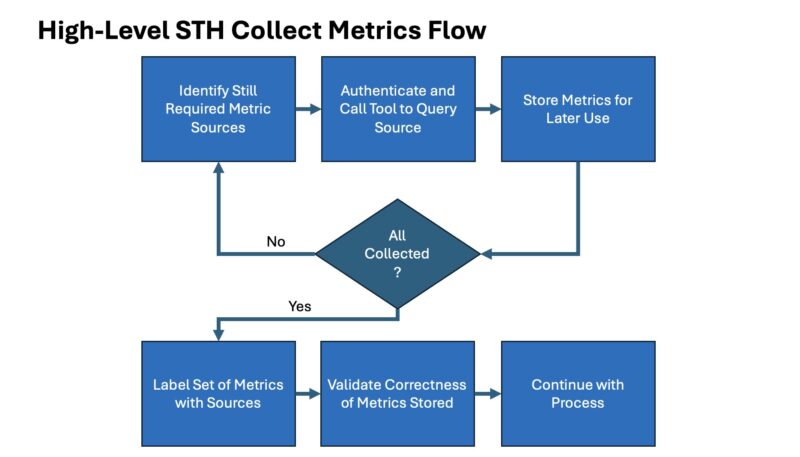

That Collect and Aggregate Metrics flow is what goes out to the actual data sources and queries for information. Luckily, n8n had pre-built templates for almost all of what we needed.

My major tip here is that, because this is something we do regularly, we were not just trying to get views. We were also trying to capture other parameters, like limiting the time periods for each request. I know that sounds like a minor point, but jotting this all out on a notepad before starting with the workflow probably saved a few hours.
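To make the notepad exercise concrete, here is a minimal sketch of how a single metrics request with a time window might be written down before building the flow. This is not the actual n8n configuration. The `MetricRequest` class, its field names, and the choice to treat "first 30 days" as the publish date plus 30 days are all assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional, Tuple


@dataclass(frozen=True)
class MetricRequest:
    """Hypothetical spec for one reporting request (names are illustrative)."""
    content_title: str
    published: date
    metrics: Tuple[str, ...]            # e.g. ("views", "engagement_time")
    window_days: Optional[int] = None   # None means lifetime-to-date

    def date_range(self, today: date) -> Tuple[date, date]:
        """Return the (start, end) dates to query for this request."""
        if self.window_days is None:
            return self.published, today
        # Assumption: "first N days" ends N days after publication,
        # capped at today for content younger than the window.
        end = min(today, self.published + timedelta(days=self.window_days))
        return self.published, end


# Example: views for the first 30 days of a piece published on 2025-01-01.
first_30 = MetricRequest("Example tour video", date(2025, 1, 1), ("views",), 30)
print(first_30.date_range(today=date(2025, 12, 31)))
```

Writing the window into the request up front means each data source query can receive the same start and end dates, rather than each step guessing at what "first 90 days" means.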

Then came the big testing points. I do not want to share the data we used, but one advantage we had was that we had both the input query and the validated output for roughly 100 requests a year going back over a decade. That gave us the test data to try gpt-oss-120b FP8 and MXFP4 versus gpt-oss-20b FP8 for accuracy. Just to make our lives easier, we stopped at the last 1,000 requests, so from Q1 2015 to Q4 2025. You will note that is not exactly 100/year, but we felt a sample size of 1,000 was decent. Also, since each workflow takes over a minute to run because it goes through the collect metrics loop sequentially rather than in parallel (oops!), it naturally takes quite a bit of time to get through that many iterations.
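For those who want to try something similar, back-testing against validated outputs can be sketched as a simple loop. This is a minimal sketch and not the actual n8n flow. `backtest`, `run_workflow`, and the toy data are hypothetical names, and it assumes each historical request can be reduced to a (query, validated answer) pair that is compared for exact equality.

```python
from typing import Callable, Iterable, List, Tuple


def backtest(
    cases: Iterable[Tuple[str, str]],
    run_workflow: Callable[[str], str],
) -> Tuple[float, List[int]]:
    """Run each historical query and compare against its validated output.

    Returns the fraction of matching results and the 1-based positions of
    any mismatches. Cases run one after another, mirroring a sequential test.
    """
    failures: List[int] = []
    total = 0
    for position, (query, expected) in enumerate(cases, start=1):
        total += 1
        if run_workflow(query) != expected:
            failures.append(position)
    accuracy = (total - len(failures)) / total if total else 0.0
    return accuracy, failures


# Toy stand-in for a workflow; a real run would call the model-backed flow.
history = [("views for A", "1200"), ("views for B", "340"), ("views for C", "87")]
canned = {"views for A": "1200", "views for B": "999", "views for C": "87"}

accuracy, mismatches = backtest(history, lambda query: canned[query])
print(f"accuracy={accuracy:.3f}, mismatched cases={mismatches}")
```

Recording the positions of mismatches, not just the accuracy, makes it easy to go back and inspect the specific requests that failed.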

A very fair critique of this is that, instead of using a reasoning model, there are other small models that might be better, and that using a mixture of models is likely the best approach. It really came down to parsing the incoming e-mails and figuring out which data we needed to pull, and which source that would come from. Oftentimes the request for metrics comes in a small novel of an e-mail with multiple tangential points and asks. The reasoning models did better at parsing through those. It was also Q4 2025 when we set this up, so naturally as we are publishing this the world will change and new models and tools will come out. That is part of the difference between experimenting and getting something you put into production.

What we found was really astounding. The gpt-oss-20b model aced the first 20 and so we were originally thinking that we did not need a larger model. Then, #27 came back and it did not match. We realized something was amiss, but the stranger part was that we had so many working perfectly before we got a non-matching result. So we let both Dell Pro Max with GB10 boxes run through the back testing. We did this sequentially so it took some time.

What we realized should make sense to a lot of folks. When we hear a model is 97% accurate, especially on a long response, that sounds great. What happens here is that we can have over a dozen calls per firing of a workflow, and the errors compound. If each call were only 97% accurate, the reliability of the overall workflow would be more like 69-70%. It turns out our simple requests did way better than that. With gpt-oss-20b, we were actually getting closer to 99.4-99.6% accuracy per step in our sample runs. Still, across all of the calls, that led to only ~95% accuracy for the overall workflow. Using the larger gpt-oss-120b got us to 99.96-99.99% accuracy for steps, or an extra nine in overall system reliability. Roughly 95% versus 99.5-99.9% accuracy of the workflow is the difference between finding a defect every week and seeing low single-digit defects per year.
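The compounding math above can be checked with a few lines of Python. This is a sketch under two assumptions that are not stated exactly in the text: that a workflow run makes 12 calls (the article only says "over a dozen"), and that errors on each call are independent, so the workflow succeeds only when every call succeeds. The function name `workflow_reliability` is just for illustration.

```python
def workflow_reliability(step_accuracy: float, calls: int = 12) -> float:
    """Chance that every call in one workflow run is correct.

    Assumes independent errors per call, so per-step accuracy is
    multiplied together once for each call.
    """
    return step_accuracy ** calls


# Per-step accuracy figures quoted in the article.
scenarios = [
    ("97% per step", 0.97),
    ("99.4% per step", 0.994),
    ("99.6% per step", 0.996),
    ("99.96% per step", 0.9996),
    ("99.99% per step", 0.9999),
]

for label, accuracy in scenarios:
    overall = workflow_reliability(accuracy)
    print(f"{label:>16}: {overall:.2%} of workflow runs fully correct")
```

With 12 calls, 97% per step lands at roughly 69%, the 99.4-99.6% range lands at roughly 93-95%, and the 99.96-99.99% range lands at roughly 99.5-99.9%, which lines up with the figures above. More calls per run would push each of these lower.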

Next, let us get into how the math works on having this pay for itself.