This week at STH, we are doing something different. Instead of publishing a mountain of reviews, we are going to run a series all week. We sent questions about deep learning and AI market trends to the top server vendors in the industry. Each day on STH this week, you will hear a different perspective on the industry and where it is headed.

I have the good fortune of speaking with folks in the industry on a daily basis. A common thread I find is that individuals at different organizations hold different views on technology and on where the market is moving. Some of this is due to their backgrounds, but viewpoints are also shaped by customer and partner interactions as well as corporate direction. The STH Deep Learning and AI Q3 2019 Interview Series is designed to bring together a number of these perspectives over the course of the week so our readers can get a feel for the direction of each company and of the market as a whole.

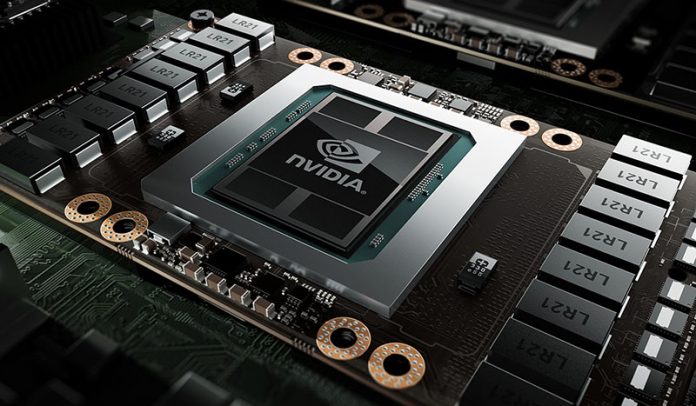

Deep learning and AI are hot topics. While deep learning training remains important, inferencing and the 5G edge are rapidly gaining mindshare. This has a real impact on server vendors. The recent IDC 1Q19 Quarterly Server Tracker shows unit volumes falling but average selling prices (ASPs) rising. One component of that trend is the addition of accelerators, mostly GPUs, to systems in order to meet customer demand for deep learning and AI. Systems with GPUs, FPGAs, and other accelerators tend to have higher ASPs.

STH Q3 2019 Server AI and Deep Learning Focus Q&A

The following are the questions we asked Dell EMC, HPE, Inspur, Lenovo, and Supermicro. We wanted to survey a range of topic areas rather than go too deep into any one of them. The questions are the same for each vendor, but the answers show unique perspectives among the respondents.

Training

- Who are the hot companies for training applications that you are seeing in the market? What challenges are they facing to take over NVIDIA’s dominance in the space?

- How are form factors going to change for training clusters? Are you looking at OCP’s OAM form factor for future designs or something different?

- What kind of storage back-ends are you seeing as popular for your deep learning training customers? What are some of the lessons learned from your customers that STH readers can take advantage of?

- What storage and networking solutions are you seeing as the predominant trends for your AI customers? What will the next generation AI storage and networking infrastructure look like?

- Over the next 2-3 years, what are trends in power delivery and cooling that your customers demand?

- What should STH readers keep in mind as they plan their 2019 AI clusters?

Inferencing

- Are you seeing a lot of demand for new inferencing solutions based on chips like the NVIDIA Tesla T4?

- Are your customers demanding more FPGAs in their infrastructures?

- Who are the big accelerator companies that you are working with in the AI inferencing space?

- Are there certain form factors that you are focusing on to enable in your server portfolio? For example, Facebook is leaning heavily on M.2 for inferencing designs.

- What percentage of your customers today are looking to deploy inferencing in their server clusters? Are they doing so with dedicated hardware or are they looking at technologies like 2nd Generation Intel Xeon Scalable VNNI as “good enough” solutions?

- What should STH readers keep in mind as they plan their 2019 server purchases when it comes to AI inferencing?

Application

- How are you using AI and ML to make your servers and storage solutions better?

- Where and when do you expect an IT admin will see AI-based automation take over a task that is now so big that they will have a “wow” moment?

Final Words

Check back every morning on STH this week to get a different perspective on the above questions. All five companies did a great job getting us responses in a timely manner, and each has a unique perspective informed by its customers, experiences, and company direction. There are certainly other questions we wanted to ask and areas to explore, but we wanted to hit a few key areas and keep our ask “doable” for the executives responding.

We are going to publish the STH Deep Learning and AI Q3 2019 Interview Series on the following schedule:

- Monday 22 July 2019 – Dell EMC Talks Deep Learning and AI Q3 2019

- Tuesday 23 July 2019 – HPE Delves Into Deep Learning and AI Q3 2019

- Wednesday 24 July 2019 – Inspur Focus on Deep Learning and AI Q3 2019

- Thursday 25 July 2019 – Lenovo Discusses Deep Learning and AI Q3 2019

- Friday 26 July 2019 – Supermicro on Deep Learning and AI Q3 2019

We hope you enjoy this series on STH.
