Dell EMC is the largest server vendor in the world by unit shipments and volume. Where Dell goes, the market follows, and every component vendor wants to be in the Dell EMC PowerEdge ecosystem. Robert Hormuth, Dell EMC’s CTO and VP/Fellow, Server & Infrastructure Systems, was featured on STH several months ago sharing his 2019 predictions and his insight into the ecosystem. Now he is back, this time taking part in the STH Deep Learning and AI Q3 2019 Interview Series.

Dell EMC Talks Deep Learning and AI Q3 2019

In this series, we sent major server vendors several questions and gave them carte blanche to interpret and answer them as they saw fit. Our goal is simple: provide our readers with unique perspectives from the industry. Each person in this series is shaped by their background, company, customer interactions, and unique experiences. The value of the series lies both in the individual answers and in what they collectively say about how the industry views its future.

Training

Who are the hot companies for training applications that you are seeing in the market? What challenges do they face in overcoming NVIDIA’s dominance in the space?

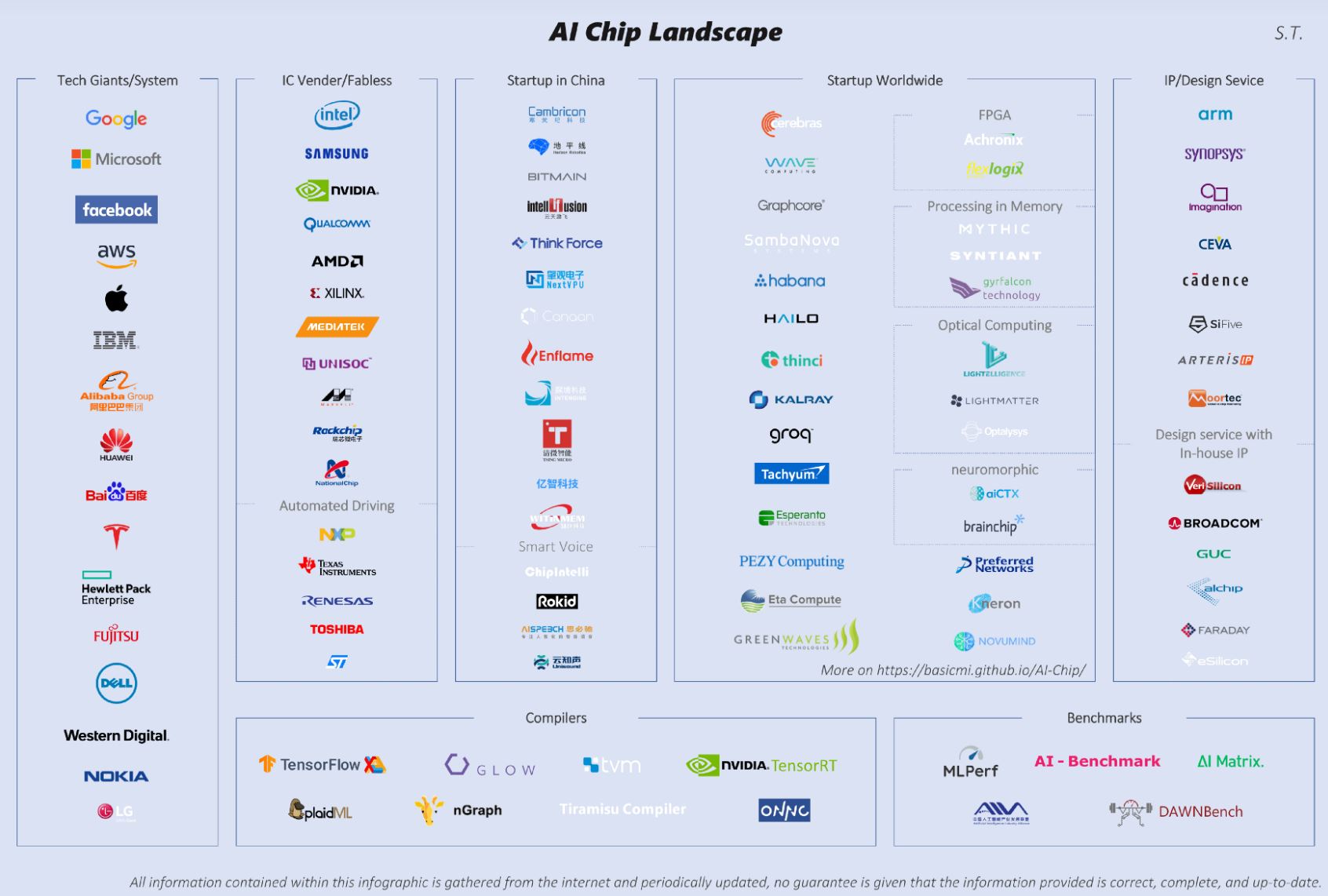

There are many companies vying to enter this space. NVIDIA has certainly done an outstanding job at the hardware level, but more importantly at the software and ecosystem level. NVIDIA has been on a journey of more than 10 years to bring heterogeneous compute to the data center, and it is certainly paying off right now. The AI silicon landscape is rather large, as seen here:

(Source: Shan Tang via Medium)

The landscape is filled with traditional vendors trying to expand their markets and with startups around the world, but I think many folks are getting distracted by the buzz of AI and shiny new silicon. AI is a subset of the bigger picture, data science: turning data into insights for better business, product, and market outcomes. When you step back, the AI computational part is important, since you must be able to do the math in a reasonable amount of time, but there is so much more: data collection, data prep, data normalization, feature extraction, data verification, analysis tools, process management tools, data and model archiving, and server infrastructure. Once they get past the shiny new ML code, customers are realizing the bigger picture. In the end, software support and libraries will dictate the winners and losers, assuming your hardware is good enough, which is no easy feat.

How are form factors going to change for training clusters? Are you looking at OCP’s OAM form factor for future designs or something different?

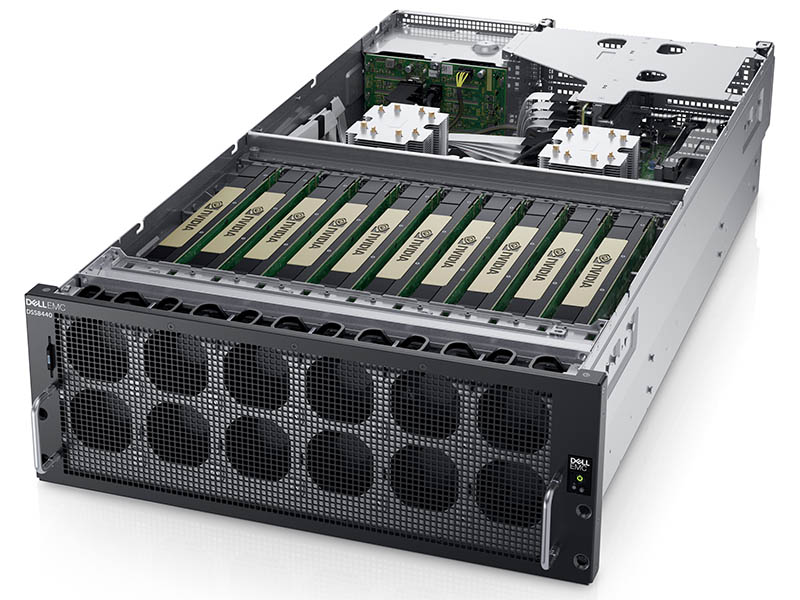

OCP’s OAM will provide direction for the industry to standardize and commoditize. It all depends on whether the top accelerator vendors agree. Given the pace of technology, it could be a long time before such a form factor takes off. At Dell Technologies, we are optimizing our infrastructure for the two predominant form factors today: PCIe and NVLink. The PowerEdge C4140 is a 1U server offered with either 4x PCIe accelerators or a 4x NVLink configuration, and the DSS8440 is a 4U server with up to 10x PCIe accelerators. In the wild-wild west of AI silicon (see above), having a platform for all the emerging silicon startups is very important. We also have a ‘BYP’ (Bring Your PCIe) adapter strategy, under which several other platforms support these emerging accelerators from a power, thermal, and form-factor standpoint.

What kind of storage back-ends are you seeing as popular for your deep learning training customers? What are some of the lessons learned from your customers that STH readers can take advantage of?

This depends on how far you scale out and how many accelerators are used to train the model. For single-node testing, NFS over 10GbE appears to be sufficient. As you scale to larger clusters, you will need 25 to 50GbE or more. We have a lot of customers using Isilon, and we have reference architectures that pair our accelerator servers with Isilon for different vertical markets.
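Why storage needs grow with scale-out can be shown with back-of-envelope arithmetic. The Python sketch below multiplies the number of accelerators by a per-accelerator sample rate and sample size to estimate the aggregate bandwidth the storage back end must deliver. Every workload figure in it (4 GPUs per node, 1,000 samples/s per GPU, about 110 KB per sample) and the 70% usable-link headroom are hypothetical assumptions, not Dell EMC guidance.

```python
# Back-of-envelope estimate of the aggregate read bandwidth a training cluster
# asks of its storage back end. All workload numbers are hypothetical.
from typing import Optional, Sequence


def required_gbps(nodes: int, gpus_per_node: int,
                  samples_per_gpu_per_s: float, bytes_per_sample: float) -> float:
    """Aggregate bandwidth in Gbit/s needed to keep every accelerator fed."""
    bits_per_s = nodes * gpus_per_node * samples_per_gpu_per_s * bytes_per_sample * 8
    return bits_per_s / 1e9


def smallest_link(demand_gbps: float,
                  links: Sequence[int] = (10, 25, 50, 100),
                  headroom: float = 0.7) -> Optional[int]:
    """Smallest Ethernet speed (GbE) whose assumed usable fraction covers demand."""
    for link in links:
        if demand_gbps <= link * headroom:
            return link
    return None  # demand exceeds every listed link speed


# Hypothetical image workload: 4 GPUs per node, each consuming
# 1,000 samples/s at roughly 110 KB per sample.
for nodes in (1, 8):
    demand = required_gbps(nodes, 4, 1000, 110_000)
    print(f"{nodes} node(s): {demand:.2f} Gbit/s -> {smallest_link(demand)}GbE")
```

With these made-up numbers, a single node needs about 3.5 Gbit/s and fits comfortably on 10GbE, while eight nodes need about 28 Gbit/s and push the back end to 50GbE, which mirrors the pattern described in the answer.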

Over the next 2-3 years, what trends in power delivery and cooling do your customers demand?

All I can say is, “Scotty, we need more power!” Right now, the power and cooling problem in the data center is being overlooked, and facilities are often the last group engaged. CPU power is blowing past 250W per socket and GPU power beyond 400W. Couple this with several 25W NVMe drives and a decent amount of DRAM, and we have a problem. Unfortunately, most racks are in the 10-14kW range today, heading toward 14-17kW in the future, and even some of the big players stay in the 10-14kW range because they need to land the same PODs in colocation facilities. We have some customers that can and will push 100kW per rack today, but that’s not the norm. On the HPC side, we are seeing an increase in liquid cooling due to the focus on size and performance. For example, the Frontera supercomputer at TACC, ranked No. 5 on the Top500, uses Dell EMC liquid-cooled servers. In the next three to five years, hitting 100kW or 125kW per rack won’t be too hard, and that’s pretty scary.
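To make the arithmetic behind “we have a problem” concrete, here is a rough Python sketch. The 250W CPU, 400W GPU, and 25W NVMe figures come from the answer above; the node layout (two sockets, four GPUs, eight drives) and the 24 DIMMs at 5W each are hypothetical assumptions, and the total counts component power only, ignoring fans, networking, and PSU losses.

```python
# Rough rack power budget. CPU, GPU and NVMe wattages come from the interview;
# the node layout and DRAM figures are hypothetical assumptions.
from dataclasses import dataclass


@dataclass
class NodeConfig:
    cpus: int
    cpu_watts: float
    gpus: int
    gpu_watts: float
    nvme_drives: int
    nvme_watts: float
    dimms: int
    dimm_watts: float  # hypothetical per-DIMM draw

    def component_watts(self) -> float:
        """Sum of component power only; ignores fans, NICs and PSU losses."""
        return (self.cpus * self.cpu_watts
                + self.gpus * self.gpu_watts
                + self.nvme_drives * self.nvme_watts
                + self.dimms * self.dimm_watts)


def nodes_per_rack(rack_kw: float, node: NodeConfig) -> int:
    """Whole nodes that fit under a rack power budget given in kW."""
    return int(rack_kw * 1000 // node.component_watts())


# Hypothetical dense GPU node: 2 x 250 W CPUs, 4 x 400 W GPUs,
# 8 x 25 W NVMe drives and 24 DIMMs at an assumed 5 W each.
node = NodeConfig(cpus=2, cpu_watts=250, gpus=4, gpu_watts=400,
                  nvme_drives=8, nvme_watts=25, dimms=24, dimm_watts=5)

print(f"Per-node component power: {node.component_watts():.0f} W")
for rack_kw in (10, 14, 17):
    print(f"{rack_kw} kW rack -> {nodes_per_rack(rack_kw, node)} nodes")
```

Under these assumptions, one dense GPU node draws roughly 2.4kW before overheads, so a 10-14kW rack holds only four or five such nodes, leaving most of the rack’s physical space unused.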

What should STH readers keep in mind as they plan their 2019 AI clusters?

Don’t wait until the end to think about two things: (1) power and cooling, so invite facilities to the party early; and (2) all the other elements of data science beyond the shiny AI silicon, including data collection, data prep, data normalization, feature extraction, data verification, analysis tools, process management tools, data/model archiving, and server infrastructure.

Inferencing

Are you seeing a lot of demand for new inferencing solutions based on chips like the NVIDIA Tesla T4?

Yes. In imaging and retail environments, for example, the T4 is becoming quite popular. The AMD EPYC-based PowerEdge R7425 is very popular in retail video analytics, with up to 6x T4s today. As CPUs continue to increase their PCIe lane counts, we’ll look to add more.

Are your customers demanding more FPGAs in their infrastructures?

We are not yet seeing substantial demand for FPGAs for AI inferencing in typical data center or large edge environments, but interest in FPGAs doing inference at the far edge is certainly picking up.

Who are the big accelerator companies that you are working with in the AI inferencing space?

As the top server vendor, we are talking to them all. Dell Technologies customers expect us to be trusted advisors on the technology front and guide them to the best solution for their problem – and that’s exactly what we work hard to do.

Are there certain form factors that you are focusing on to enable in your server portfolio? For example, Facebook is leaning heavily on M.2 for inferencing designs.

We have designs that can accommodate M.2 and U.2, but today’s offerings for the general market are still based on the PCIe form factor.

What percentage of your customers today are looking to deploy inferencing in their server clusters? Are they doing so with dedicated hardware or are they looking at technologies like 2nd Generation Intel Xeon Scalable VNNI as “good enough” solutions?

Depending on the workload, we see both. Generally speaking, though, most customers want their training and inference to run on the same architecture, for example V100 for training and T4 for inference.

What should STH readers keep in mind as they plan their 2019 server purchases when it comes to AI inferencing?

Well, first they should buy Dell EMC PowerEdge, of course. 😊 Customers need to make sure they have flexible and scalable architectures. Security is another top concern and should not be ignored in the AI space. When you start to think about edge and inferencing, security and distributed system management will matter a lot.

Application

How are you using AI and ML to make your servers and storage solutions better?

We have many uses for AI and ML inside of Dell Technologies, from improving product quality and predicting failures to enhancing storage caching algorithms and optimizing our supply chain. In servers, we are bringing compute closer to where data is ingested (e.g., the DSS8440).

Where and when do you expect an IT admin will see AI-based automation take over a task that is now so big that they will have a “wow” moment?

We are already using, and will continue to use, AI in some of our HCI products, such as VxRail ACE, for updates and other tasks based on existing knowledge. Over time, IT organizations will look back and notice they simply aren’t having to do as much as in the past.

Final Words

As always, I wanted to thank Robert for taking the time to answer our questions. I know he has a busy schedule. His willingness to share his perspective is important because it helps our readers understand where Dell EMC sees the market heading. One thread you will notice in Robert’s responses is that, by helping customers through their deep learning and AI journeys, large server companies like Dell EMC are building the kind of customer and industry implementation know-how that, in years past, we would typically have sought from large IT organizations.

One of my key takeaways after reading through Robert’s responses is that Dell recognized the value of joining customers on the journey early. Dell is now transitioning to helping onboard the next generations of customers, even if that happens behind the scenes by building the algorithms it developed into its products.