Recently we were at OCP Summit 2017 and had the opportunity to see more progress on the AMD Naples platform firsthand. AMD Naples is a highly anticipated server platform that is set to challenge Intel Xeon's dominance in the x86 2P market later this year. As far as we are aware, only two AMD Naples platforms have been publicly shown to date. We have pictures of both, plus a block diagram showing how the 128 lanes of high-speed I/O are implemented in an example Naples platform. While headlines touting 128 PCIe 3.0 lanes for the platform are attention grabbing, in practice a portion of those lanes will be consumed by other system I/O. The block diagram shows how these platform I/O needs can be met while still leaving 112 PCIe lanes available for PCIe cards, NVMe drives, and other devices.

Publicly Shown AMD Naples Server Platforms to Date

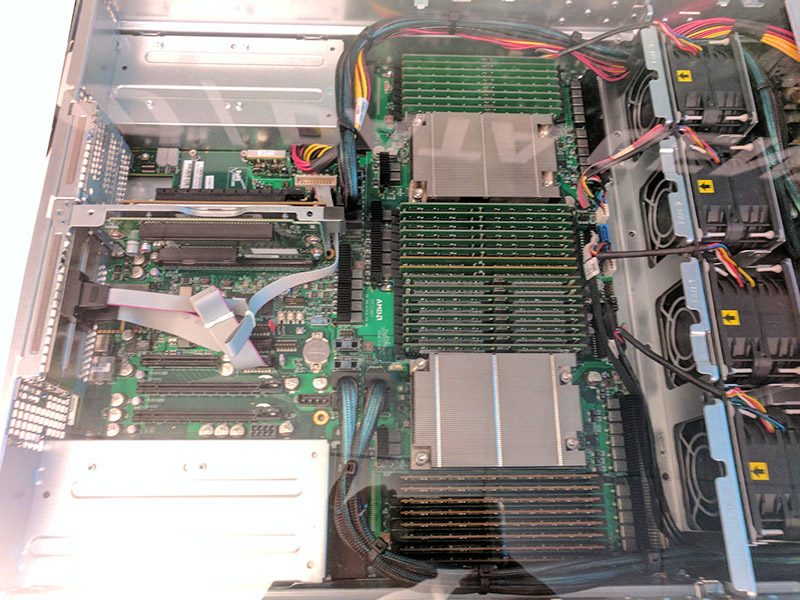

The first publicly shown AMD Naples platform was the AMD Speedway system. Here is a snapshot we took earlier at the AMD Tech Day in San Francisco.

You can read some of our analysis of the platform in our earlier AMD Naples piece.

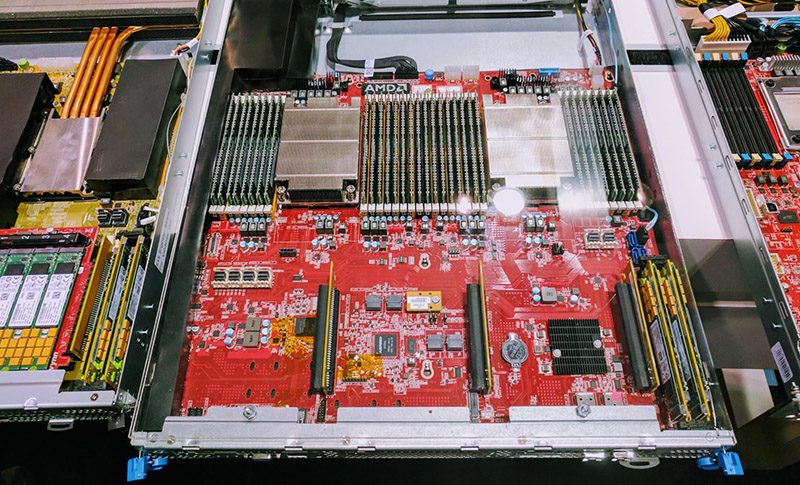

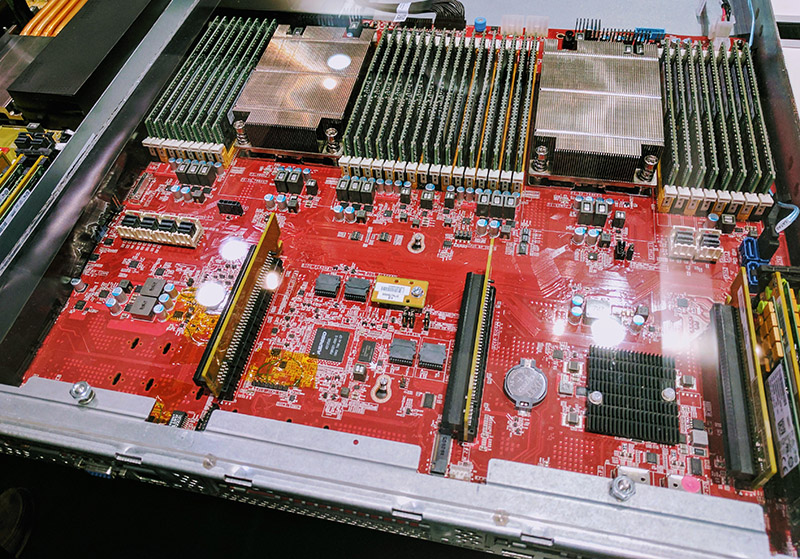

At OCP Summit 2017, Microsoft and AMD showed off a Project Olympus – AMD Naples server. For reference, in the photo below, the system on the right edge is a Qualcomm Centriq 2400 system and the system on the left uses a not-yet-formally-announced Intel CPU (i.e. an OCP Project Olympus Skylake-EP / Purley server).

You can see that the platform has several PCIe x16 slots, SFF PCIe connectors, and plenty of room for M.2 NVMe SSDs.

The platform to the right has the CPU socket covered (here is essentially what it will look like), but you can see that the 32 DIMM slots of the Naples platform push the limits of the chassis width more than the upcoming Intel platform does.

We also have a clear picture of an ASPEED BMC in the center of the PCB. Our initial analysis of the AMD Speedway platform showed that although AMD describes Naples as an SoC design, an external BMC will still be part of the platform. A block diagram of the server platform gives us a clearer view of what is going on.

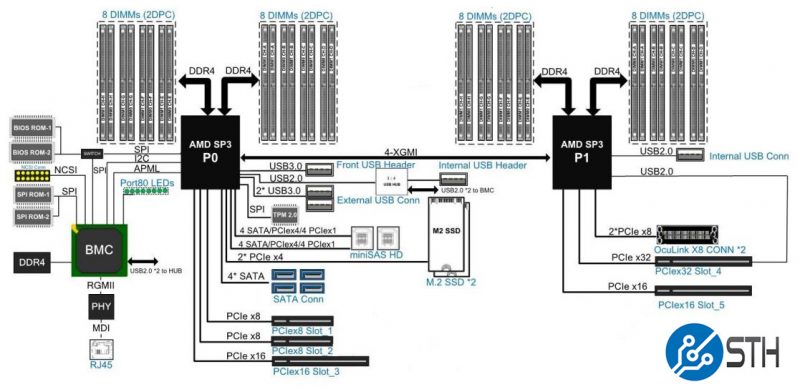

AMD Naples 2P Server Block Diagram with 112 PCIe Lanes

We also have a full AMD Naples dual-socket block diagram from the company. Note that different servers will use different implementations, but this is an example of how Naples can be implemented. It shows 16 DIMMs per CPU, or 32 DDR4 DIMMs across the platform, using two DIMMs per channel.
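The DIMM figures also imply how many memory channels each socket exposes. A minimal Python sketch of that arithmetic, assuming the example diagram's 16 DIMMs per CPU and two DIMMs per channel; the eight-channel result is derived from those two numbers, not quoted from an AMD specification:

```python
# Memory population implied by the example Naples 2P block diagram.
SOCKETS = 2
DIMMS_PER_CPU = 16      # from the block diagram
DIMMS_PER_CHANNEL = 2   # two DIMMs per channel (2DPC), also from the diagram

# Derived values: channels per socket and total DIMM slots on the board.
channels_per_cpu = DIMMS_PER_CPU // DIMMS_PER_CHANNEL
total_dimms = SOCKETS * DIMMS_PER_CPU

print(f"{channels_per_cpu} channels per CPU, {total_dimms} DIMMs total")
```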

Perhaps the most interesting piece of the block diagram is that the dual socket server still has 112 lanes of PCIe 3.0 available even after using high-speed I/O for USB ports, the BMC and other system components.

The block diagram also shows four xGMI links between the two CPUs. Each CPU has 128 lanes of high-speed I/O, 64 of which are dedicated to chip-to-chip communication in dual-processor configurations.
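Putting the lane figures together gives a rough I/O budget for this example platform. This is a back-of-the-envelope sketch: the 16 lanes attributed to system I/O are simply what remains after subtracting the 112 available PCIe lanes, and how those lanes split among USB, the BMC, SATA and other components is not something these numbers let us break down.

```python
# Rough high-speed I/O lane budget for the example 2P Naples platform.
SOCKETS = 2
LANES_PER_CPU = 128        # high-speed I/O lanes per CPU
XGMI_LANES_PER_CPU = 64    # spent on socket-to-socket links in a 2P system
AVAILABLE_PCIE = 112       # lanes left for PCIe cards/NVMe per the block diagram

raw_lanes = SOCKETS * LANES_PER_CPU                     # all lanes on both CPUs
platform_io = raw_lanes - SOCKETS * XGMI_LANES_PER_CPU  # lanes exposed to the board
system_io = platform_io - AVAILABLE_PCIE                # USB, BMC, SATA, etc. (derived)

print(f"platform I/O lanes: {platform_io}")
print(f"lanes consumed by system I/O: {system_io}")
print(f"lanes for PCIe cards and NVMe: {AVAILABLE_PCIE}")
```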

Final Words

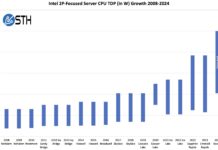

The impact of having 112 PCIe 3.0 lanes available cannot be overstated. Current-generation Intel Xeon E5-2600 V4 dual-socket platforms have only 80 PCIe lanes available. While AMD may market 128 v. 80, 128 is not a realistic figure. Based on the platforms and block diagrams we have seen, 112 PCIe lanes per system is a more reasonable number. On the Intel side, Xeon E5 V4 platforms normally do not spend their CPU PCIe 3.0 lanes on the BMC, USB, SATA ports, and so on, because those connect through the PCH. As a result, the comparison we will use for now is 112 v. 80, or 40% more usable CPU PCIe lanes for AMD Naples versus Intel Xeon E5-2600 V4.
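For reference, here is the percentage math behind both comparisons. `lane_advantage` is just an illustrative helper; the inputs are the headline (128) and usable (112) Naples figures versus the 80 lanes of a dual-socket Xeon E5-2600 V4 platform discussed above:

```python
def lane_advantage(amd_lanes: int, intel_lanes: int) -> float:
    """Percent more PCIe lanes amd_lanes offers relative to intel_lanes."""
    # Multiply before dividing so these integer inputs yield exact results.
    return (amd_lanes - intel_lanes) * 100 / intel_lanes

print(f"112 v. 80: {lane_advantage(112, 80):.0f}% more")  # usable-lane comparison
print(f"128 v. 80: {lane_advantage(128, 80):.0f}% more")  # headline comparison
```

The headline figure would suggest a 60% advantage, while the usable-lane comparison gives the 40% quoted above.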

I am a little confused about the Ryzen/Naples difference.

Currently I am running a Xeon 1225v3 (4/4), which I’d like to upgrade to a 6/12 or 8/16 CPU. Obviously, moving to a recent 6+ core Xeon would be very expensive.

The Ryzen 1700 (8/16) would be a perfect CPU for me. However, most posts about the Ryzen lineup and server usage tell people to wait for Naples. But every time I read something about Naples, it’s all very high end.

Will there be a “home server” (Xeon 12xx-class) lineup of Naples? Or should I wait for ESXi etc. to fix the Ryzen bugs?

Hi Steve –

Naples is the high-end Xeon E5 competitor line.

Try the DIY forum on the STH forums.

Folks there will usually help you find something within your budget that is (much) faster. Extremely resourceful members.

Patrick, in the first picture of the “AMD Naples Project Olympus OCP Server” there is a small part of another board visible on the left, with what are probably several M.2 PCIe NVMe cards attached. Do you have any more pictures of this board, or know anything about it? Currently there is a scarce supply of PCIe boards that allow more than two M.2 PCIe x2 NVMe cards to be installed in one PCIe x16 slot. There are numerous solutions for two M.2 PCIe x4 NVMe cards in one PCIe x8 slot, but not for four in an x16 slot :( I am looking for an economical way to connect as many M.2 PCIe x4 NVMe cards as possible to one board and use the PCIe lanes to their full extent.

Ted – that is the Skylake-EP Project Olympus platform.

You could try something like this? https://www.servethehome.com/the-dell-4x-m-2-pcie-x16-version-of-the-hp-z-turbo-quad-pro/

The big issue is PCIe bifurcation. Not all motherboards/BIOSes support bifurcating an x16 slot into four x4 links.

Thank you for the information and suggestion. I saw your post about this when I first researched the availability of such solutions. These Dell/HP cards are expensive compared to dual M.2 cards. There is one more supplier, Amfeltec, but it is expensive as well. http://amfeltec.com/products/pci-express-gen-3-carrier-board-for-4-m-2-ssd-modules/

Most modern motherboards offer a BIOS setting for PCIe bifurcation. The Supermicro X10DRi-T board has three PCIe 3.0 x16 slots (both CPUs need to be installed) that support bifurcation as 16, 8/4/4, or 4/4/4/4. I have already populated four slots (including an x8) with dual M.2 PCIe x8 Supermicro cards, but I am looking for more power :-)
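As a rough model of the comment above, the sketch below counts how many x4 M.2 NVMe drives a passive, switchless carrier card could expose per slot. Without a PCIe switch, each drive needs its own bifurcated link, so the best supported bifurcation mode sets the limit. The `Slot` class and `max_x4_drives` helper are hypothetical, and the x8 slot's 4/4 option is an assumption (suggested by the dual M.2 x8 cards already in use), not something taken from Supermicro documentation.

```python
from dataclasses import dataclass


@dataclass
class Slot:
    name: str
    width: int         # electrical PCIe lanes wired to the slot
    modes: list[str]   # BIOS bifurcation options, e.g. "4/4/4/4"


def max_x4_drives(slot: Slot) -> int:
    """Most M.2 x4 drives a passive (switchless) carrier can expose in this slot.

    Each bifurcated link of at least x4 can host one drive, so the limit is
    the link count of the best supported mode.
    """
    best = 0
    for mode in slot.modes:
        links = [int(w) for w in mode.split("/")]
        if sum(links) != slot.width:
            raise ValueError(f"mode {mode} does not add up to x{slot.width}")
        best = max(best, sum(1 for w in links if w >= 4))
    return best


# Hypothetical layout loosely following the comment: three x16 slots
# (which need both CPUs installed) and one x8 slot assumed to allow 4/4.
slots = [
    Slot("x16 #1", 16, ["16", "8/4/4", "4/4/4/4"]),
    Slot("x16 #2", 16, ["16", "8/4/4", "4/4/4/4"]),
    Slot("x16 #3", 16, ["16", "8/4/4", "4/4/4/4"]),
    Slot("x8 #1", 8, ["8", "4/4"]),
]

for s in slots:
    print(f"{s.name}: up to {max_x4_drives(s)} M.2 x4 drives")
print("total:", sum(max_x4_drives(s) for s in slots))
```

Under those assumptions, replacing the dual cards in the three x16 slots with quad cards would take this four-slot layout from eight drives to 14.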

To the best of my knowledge, since this is bifurcation and the M.2 cards are connected directly to the PCIe lanes, there is no need for a PCIe switch. I cannot find any justification for these high prices, especially when a dual M.2 PCIe 3.0 x8 card from Supermicro costs 60-80 USD.