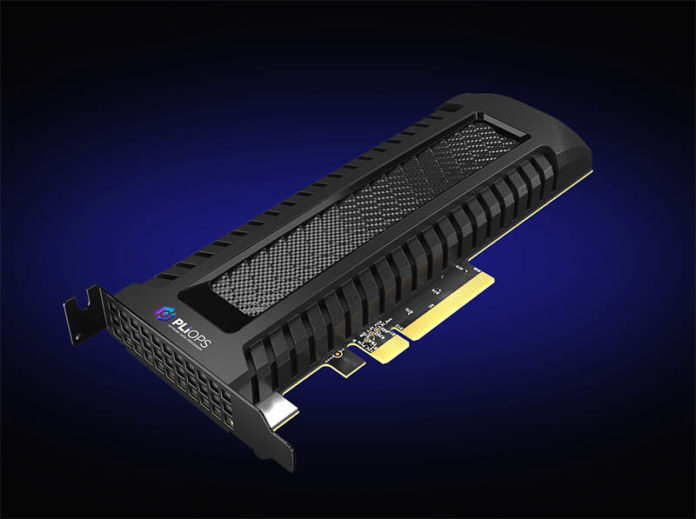

Today we are covering the launch of perhaps one of the more interesting pieces of hardware this summer. The Pliops XDP, short for Extreme Data Processor, is designed to accelerate storage in all-flash systems. This Xilinx FPGA-based solution (we are told an ASIC is on the roadmap) is perhaps the more modern way to look at a RAID controller. That is not exactly Pliops’ marketing, but effectively that is what the XDP aims to replace. Just to be clear, Pliops is a well-funded startup backed by companies like Intel, NVIDIA, and Xilinx, so it has quite a bit of support compared to other efforts. Let us get into the XDP details.

Pliops XDP Extreme Data Processor

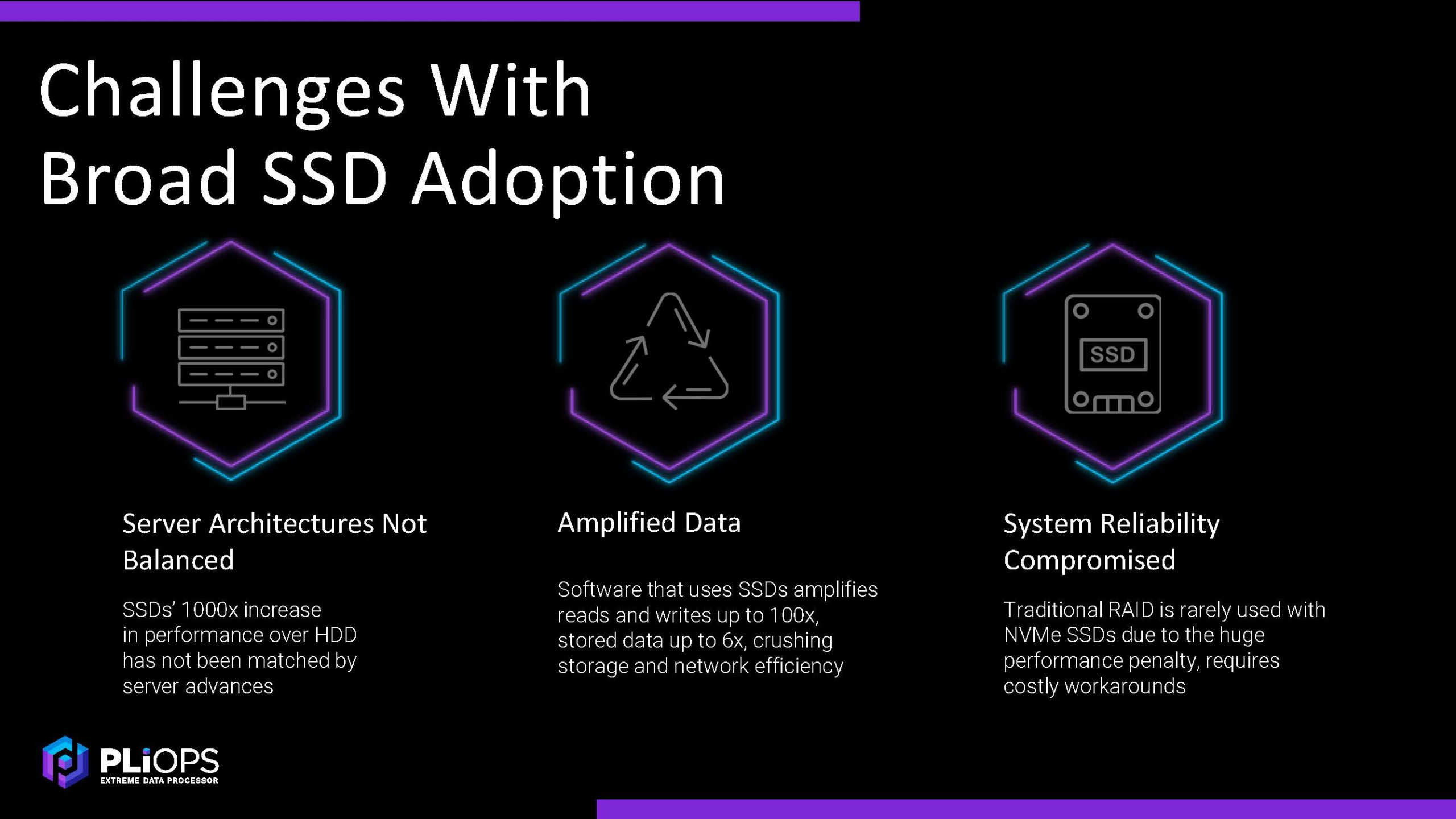

Recently we covered the Microchip Adaptec 24G SAS Tri-Mode RAID and HBA Launch. That is an example of trying to service an existing market of both flash and disks. Pliops is going after a different market: the all-flash server.

The company says that it can hit higher performance with more effective capacity and potentially lower latency than existing implementations.

We are going to get into this in more detail later, but effectively, by using resources more efficiently, Pliops is able to deliver better TCO.

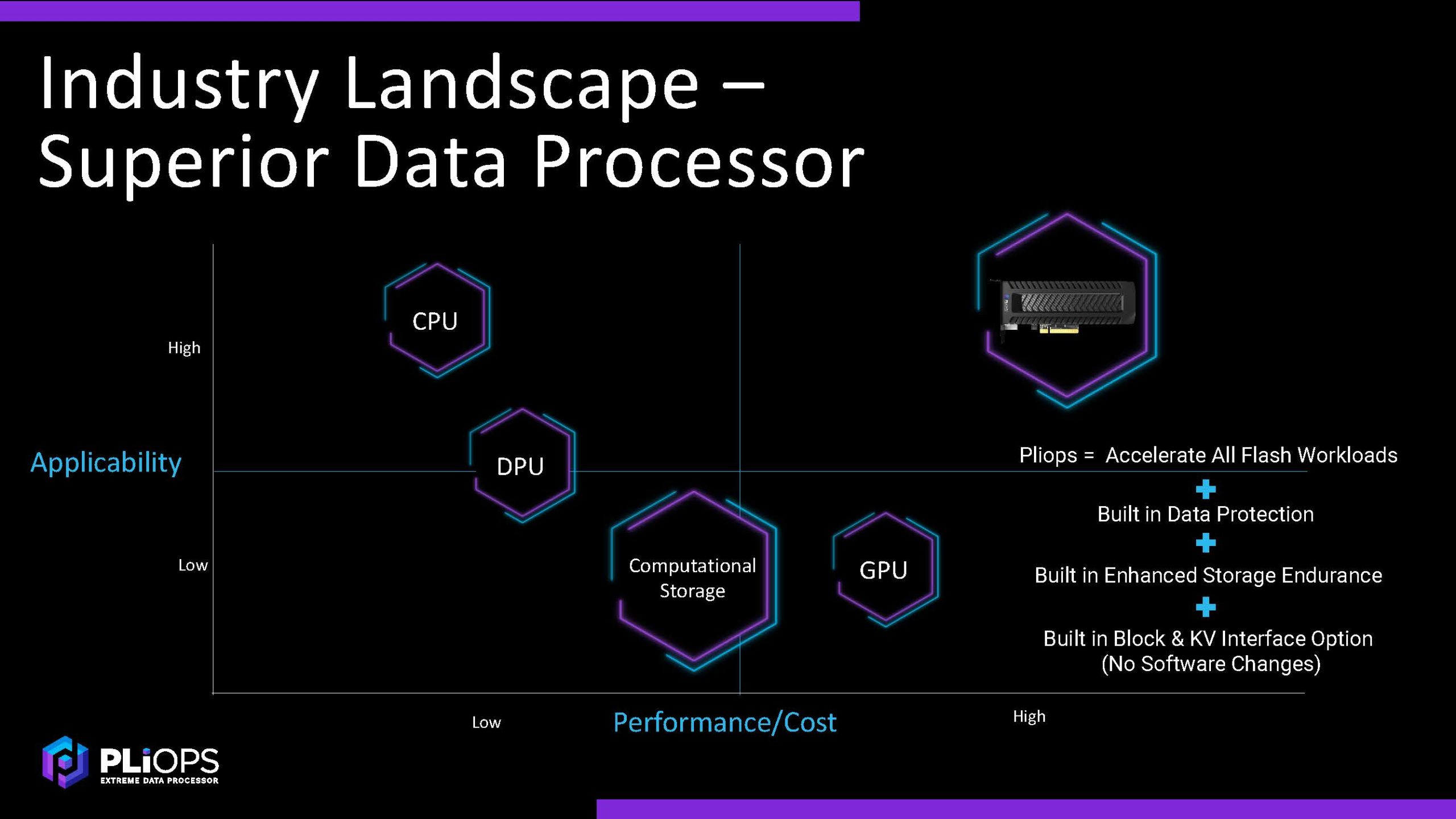

At STH, we have been covering the DPU with pieces such as What is a DPU, DPU vs SmartNIC, and the STH NIC Continuum Framework. The Pliops XDP is not a DPU because it does not have onboard networking. Instead, it is designed to accelerate a few common applications in servers and increase storage efficiency.

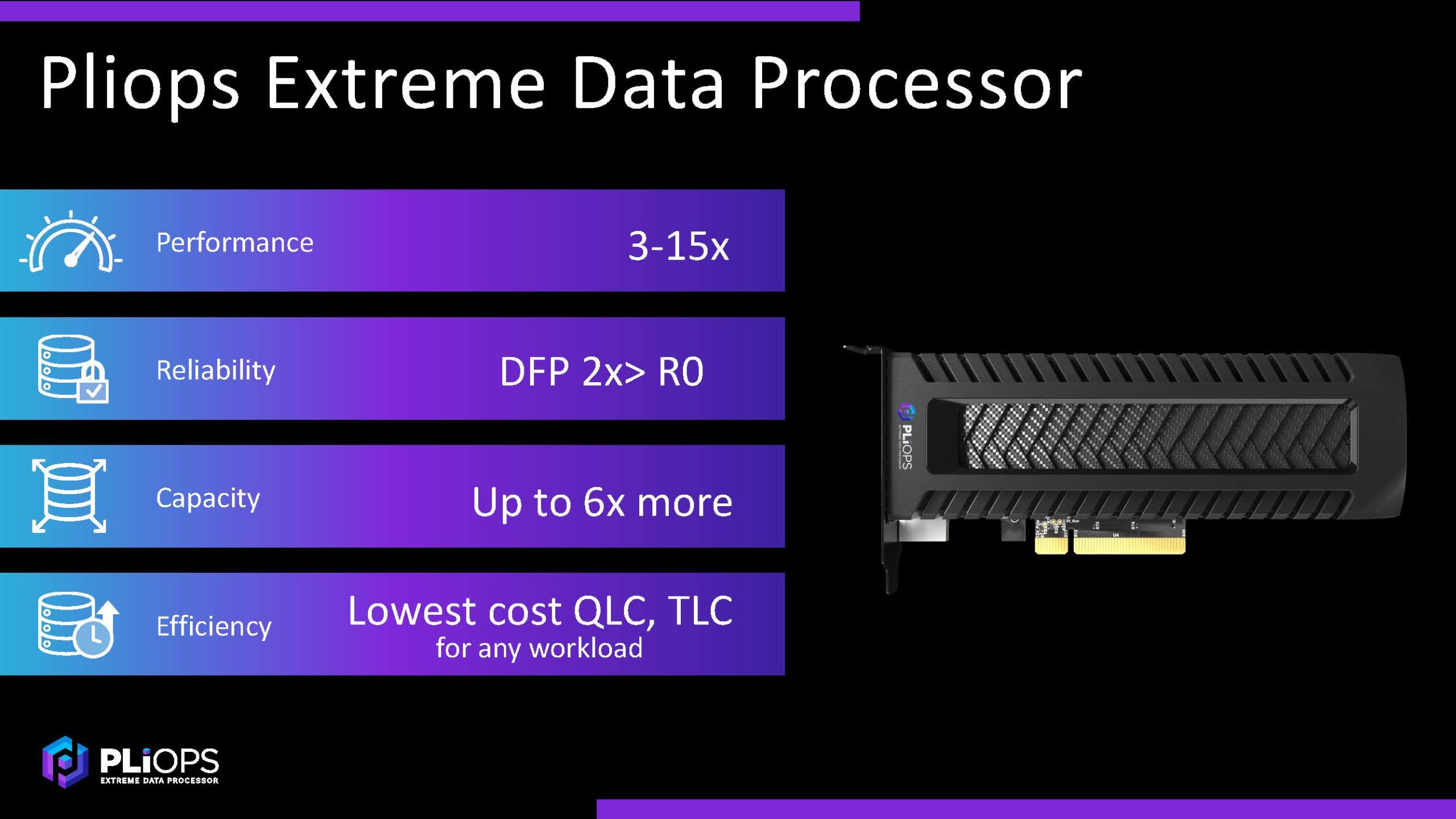

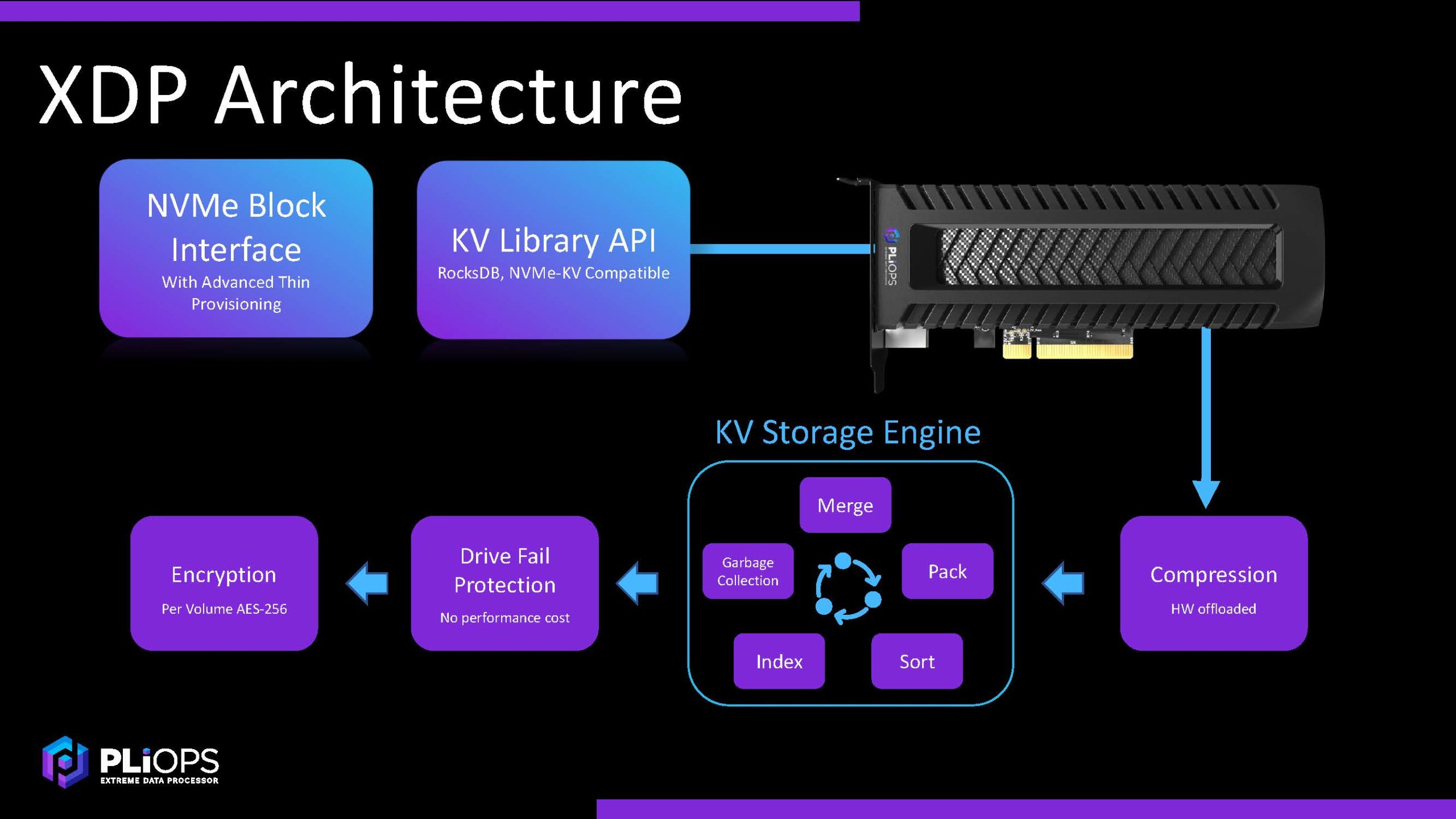

There are basically two ways that the XDP can be accessed: as a standard NVMe block interface or through a key-value (KV) library API. Our readers should be fairly familiar with NVMe since it is a common consumer SSD technology these days. Perhaps the more exciting option, then, is the KV library API. For applications that use key-value stores, Pliops is looking to provide acceleration so that the work is done on the XDP rather than on the CPU.
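To make the difference between the two access models concrete, here is a toy Python sketch. It is not Pliops’ library: the names `BlockDevice`, `put_via_blocks`, `get_via_blocks`, and `KVStore` are hypothetical, and in-memory lists and dictionaries stand in for a device. The point is where the work lands. With a block interface, the host CPU has to track where each key lives and read-modify-write a whole block to store a small value; with a KV interface, the host simply hands over the key and value.

```python
from __future__ import annotations

BLOCK_SIZE = 4096  # assumed logical block size in bytes


class BlockDevice:
    """Toy block interface: the host reads and writes whole fixed-size blocks by LBA."""

    def __init__(self, num_blocks: int) -> None:
        self.blocks = [bytes(BLOCK_SIZE) for _ in range(num_blocks)]

    def read(self, lba: int) -> bytes:
        return self.blocks[lba]

    def write(self, lba: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("block writes must be exactly BLOCK_SIZE bytes")
        self.blocks[lba] = data


def put_via_blocks(dev: BlockDevice, index: dict, key: str, value: bytes,
                   lba: int, offset: int) -> None:
    """Host-side work a KV store must do on top of a block device:
    read-modify-write the block and maintain its own key -> location index."""
    block = bytearray(dev.read(lba))
    block[offset:offset + len(value)] = value
    dev.write(lba, bytes(block))
    index[key] = (lba, offset, len(value))


def get_via_blocks(dev: BlockDevice, index: dict, key: str) -> bytes:
    lba, offset, length = index[key]
    return dev.read(lba)[offset:offset + length]


class KVStore:
    """Toy KV interface: placement and indexing happen behind the API (on the device)."""

    def __init__(self) -> None:
        self._data: dict[str, bytes] = {}

    def put(self, key: str, value: bytes) -> None:
        self._data[key] = value

    def get(self, key: str) -> bytes | None:
        return self._data.get(key)
```

Storing a five-byte value through `put_via_blocks` still rewrites a full 4 KiB block and updates a host-side index, whereas `KVStore.put` passes only the key and value across the interface.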

Beyond the KV engine, Pliops is doing a few things that will be interesting to many of our readers. First, it does compression and encryption. Second, it can run an erasure-coding-style algorithm across drive sets to offer redundancy without sacrificing performance.
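Pliops has not said which erasure code it uses, so the following is only the textbook RAID-6-style P+Q scheme in Python, which survives the loss of any two drives. P is a plain XOR across the data blocks, and Q weights each block by a distinct power of 2 in the Galois field GF(2^8), using the polynomial 0x11d that Linux RAID-6 also uses. The sketch only handles the case where two data blocks are lost, and the function names (`encode`, `recover_two_data`) are illustrative.

```python
# GF(2^8) exponent/log tables for the primitive polynomial x^8+x^4+x^3+x^2+1 (0x11d)
EXP = [0] * 512
LOG = [0] * 256
_x = 1
for _i in range(255):
    EXP[_i] = _x
    LOG[_x] = _i
    _x <<= 1
    if _x & 0x100:
        _x ^= 0x11D
for _i in range(255, 512):
    EXP[_i] = EXP[_i - 255]  # duplicate so EXP[LOG[a] + LOG[b]] never needs a modulo


def gf_mul(a: int, b: int) -> int:
    if a == 0 or b == 0:
        return 0
    return EXP[LOG[a] + LOG[b]]


def gf_inv(a: int) -> int:
    return EXP[255 - LOG[a]]  # a must be nonzero


def _syndromes(blocks, size):
    """XOR (P) and generator-weighted (Q) sums over the blocks that are present."""
    p, q = bytearray(size), bytearray(size)
    for i, blk in enumerate(blocks):
        if blk is None:
            continue
        coef = EXP[i]  # g^i with generator g = 2
        for j, byte in enumerate(blk):
            p[j] ^= byte
            q[j] ^= gf_mul(coef, byte)
    return p, q


def encode(blocks):
    """Compute the P and Q parity blocks for equal-length data blocks (at most 255)."""
    p, q = _syndromes(blocks, len(blocks[0]))
    return bytes(p), bytes(q)


def recover_two_data(blocks, p, q):
    """Rebuild two missing data blocks (marked None) from the survivors plus P and Q."""
    x, y = [i for i, blk in enumerate(blocks) if blk is None]
    ps, qs = _syndromes(blocks, len(p))
    # Fold in the stored parity: ps = D_x ^ D_y and qs = g^x*D_x ^ g^y*D_y
    for j in range(len(p)):
        ps[j] ^= p[j]
        qs[j] ^= q[j]
    gy = EXP[y]
    inv = gf_inv(EXP[x] ^ gy)
    # D_x = (qs ^ g^y*ps) / (g^x ^ g^y), then D_y = ps ^ D_x
    dx = bytes(gf_mul(qs[j] ^ gf_mul(gy, ps[j]), inv) for j in range(len(p)))
    dy = bytes(ps[j] ^ dx[j] for j in range(len(p)))
    return dx, dy
```

Because P is a cheap XOR and Q is a table lookup per byte, this kind of math maps well onto FPGA or ASIC logic, which is the general argument for taking it off the CPU.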

While we will get to performance later, let us discuss why this is a big part of the magic. By compressing, encrypting, and then distributing data across sets of NVMe SSDs, Pliops is able to take advantage of some easy ways to get better performance. If incoming data can be compressed, then less data needs to be written to the array of drives (and subsequently read back). That decreases writes to the media and increases effective storage capacity. It also increases performance, because fewer bytes have to be written to the drives for the same amount of user data.
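A quick way to see both points (compression saves bytes on the media, and it has to happen before encryption) is the Python sketch below. It uses the standard-library `zlib` as a stand-in for whatever compressor the XDP implements, and random bytes from `os.urandom` as a stand-in for ciphertext, since the output of a good cipher is indistinguishable from random data. The sample record is deliberately repetitive, so real-world ratios will be far less dramatic.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower is better)."""
    return len(zlib.compress(data)) / len(data)


# Repetitive, log-like records compress very well (illustrative data only).
plaintext = b'{"user_id": 12345, "status": "active", "region": "us-west"}\n' * 1000

# Stand-in for encrypted data: ciphertext from a good cipher looks random.
ciphertext_like = os.urandom(len(plaintext))

compress_first = compression_ratio(plaintext)       # compress, then encrypt
encrypt_first = compression_ratio(ciphertext_like)  # encrypt, then try to compress

print(f"compress, then encrypt: {compress_first:.3f} of the original bytes reach the SSDs")
print(f"encrypt, then compress: {encrypt_first:.3f} of the original bytes reach the SSDs")
```

Effective capacity scales roughly as raw capacity divided by this ratio, which is why the compressibility of the actual data set matters so much to any capacity or performance claim.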

Pliops says its KV engine can run a more computationally expensive algorithm in FPGA hardware rather than on expensive CPU cores, which further drives efficiency.

When it comes to write amplification on SSDs, we asked and were told that we can think of what is underneath the heat spreader as an NVDIMM-like setup, where DRAM can be dumped to NAND on a power loss. This DRAM serves as a cache and allows the XDP to buffer data destined for the SSDs. That, in turn, lets it issue larger sequential writes to the SSDs, again improving performance.
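The buffering idea can be sketched as a generic log-structured write buffer: small writes accumulate in power-loss-protected DRAM and are flushed to the SSDs as one large sequential segment, with an index recording where each key ended up. This Python sketch is an illustration under that assumption, not Pliops’ design; the `WriteCoalescer` class is hypothetical, a list stands in for the SSD, and a real device would use far larger segments and persist its index.

```python
class WriteCoalescer:
    """Buffer small writes in DRAM and flush them as large sequential segments."""

    def __init__(self, segment_size: int) -> None:
        self.segment_size = segment_size
        self.buffer = bytearray()  # DRAM staging area for the next segment
        self.pending = []          # (key, offset_in_buffer, length) not yet flushed
        self.index = {}            # key -> (segment_no, offset, length) on "flash"
        self.segments = []         # each entry stands in for one large sequential write

    def put(self, key: str, value: bytes) -> None:
        # Flush first if this value would overflow the current segment.
        if self.buffer and len(self.buffer) + len(value) > self.segment_size:
            self.flush()
        self.pending.append((key, len(self.buffer), len(value)))
        self.buffer += value

    def flush(self) -> None:
        if not self.buffer:
            return
        seg_no = len(self.segments)
        self.segments.append(bytes(self.buffer))  # one big write instead of many small ones
        for key, off, length in self.pending:     # later writes to a key overwrite earlier ones
            self.index[key] = (seg_no, off, length)
        self.buffer = bytearray()
        self.pending = []

    def get(self, key: str) -> bytes:
        # Check unflushed data first; the newest write for a key wins.
        for k, off, length in reversed(self.pending):
            if k == key:
                return bytes(self.buffer[off:off + length])
        seg_no, off, length = self.index[key]
        return self.segments[seg_no][off:off + length]
```

With a 4 KiB segment, one hundred 100-byte writes reach the simulated SSD as three segment writes rather than one hundred small ones, which is the effect the DRAM cache is meant to have on the real drives.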

At this point, I asked Steven Fingerhut, the company’s President and Chief Business Officer, something akin to “this sounds like what SandForce did on early SSDs, just scaled up to the server level,” and found out that several folks had worked with SandForce over the years. Still, the concepts employed are fundamental to modern storage: data is compressed, cached, and distributed. Pliops takes that work off the CPU and offloads it to an FPGA-based accelerator. It is also focused on SSD solutions rather than legacy disk, which Microchip and Broadcom still address with their RAID controller businesses.

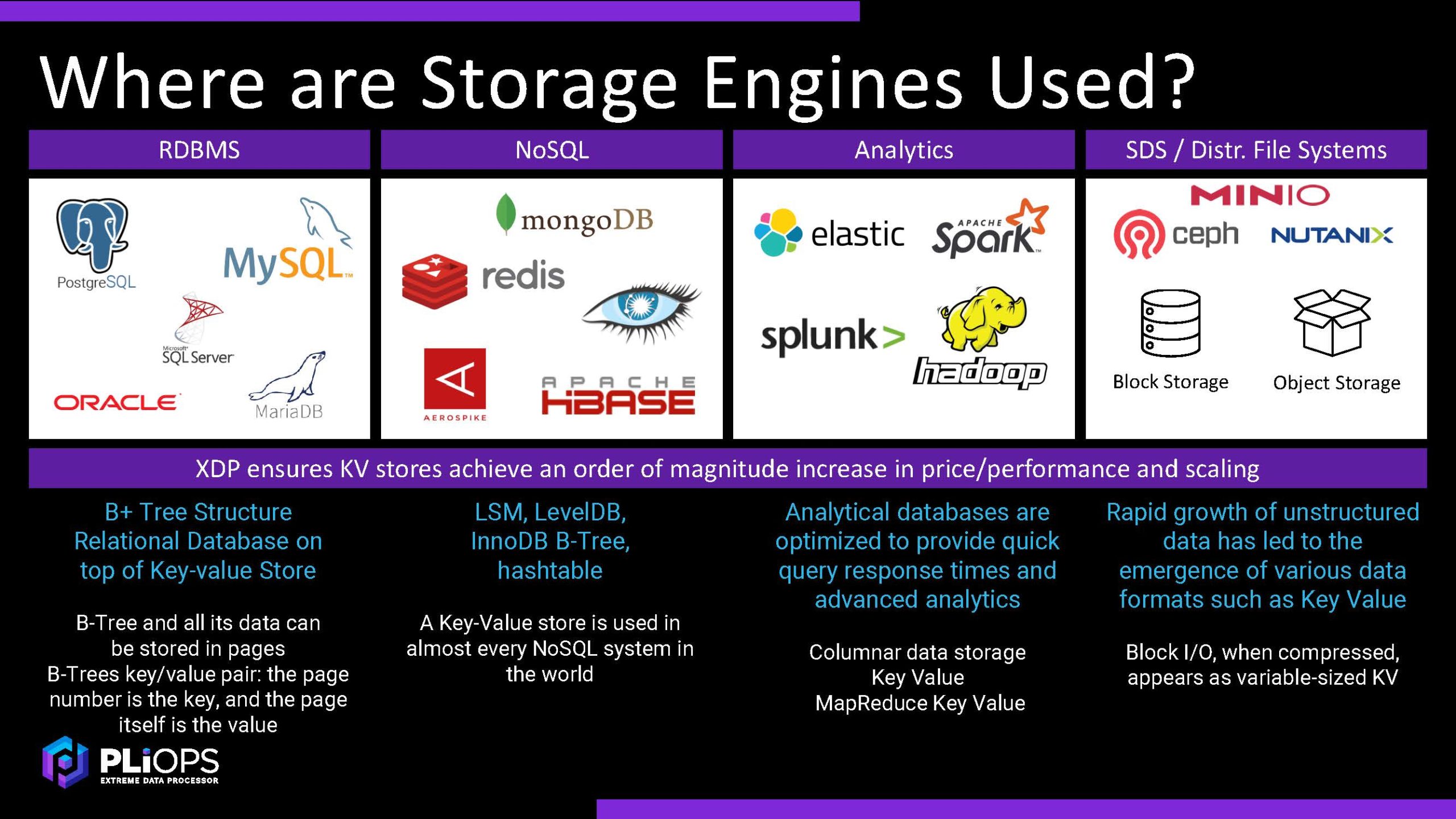

Beyond the basic case for running compression, encryption, and redundancy on an accelerator instead of on CPUs, there is the KV engine, and KV engines are used all over. Here is the company’s slide on that.

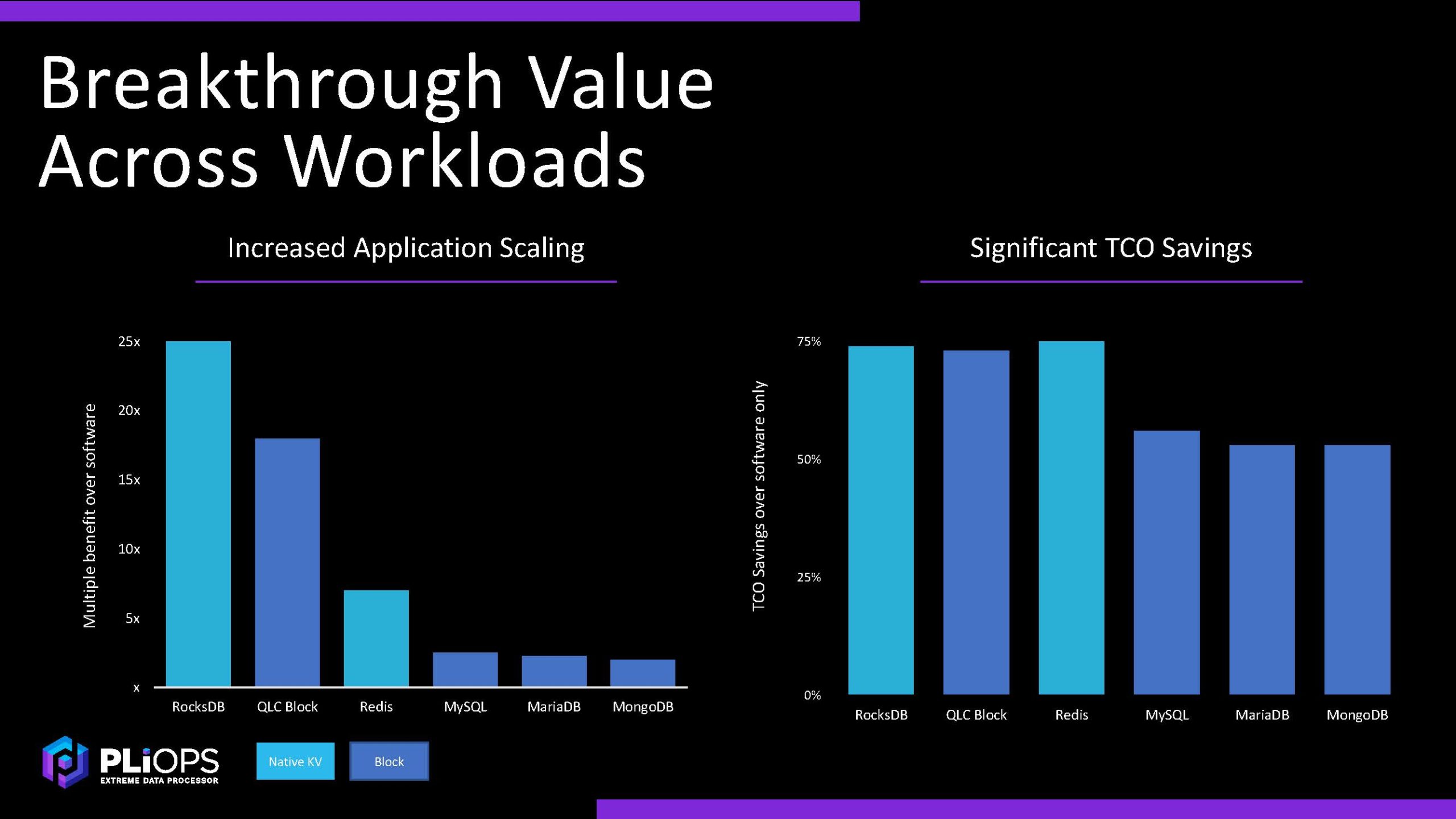

This is good, but let us get next to the impact of adding the accelerator into a stack.

Pliops XDP Extreme Data Processor Performance and TCO

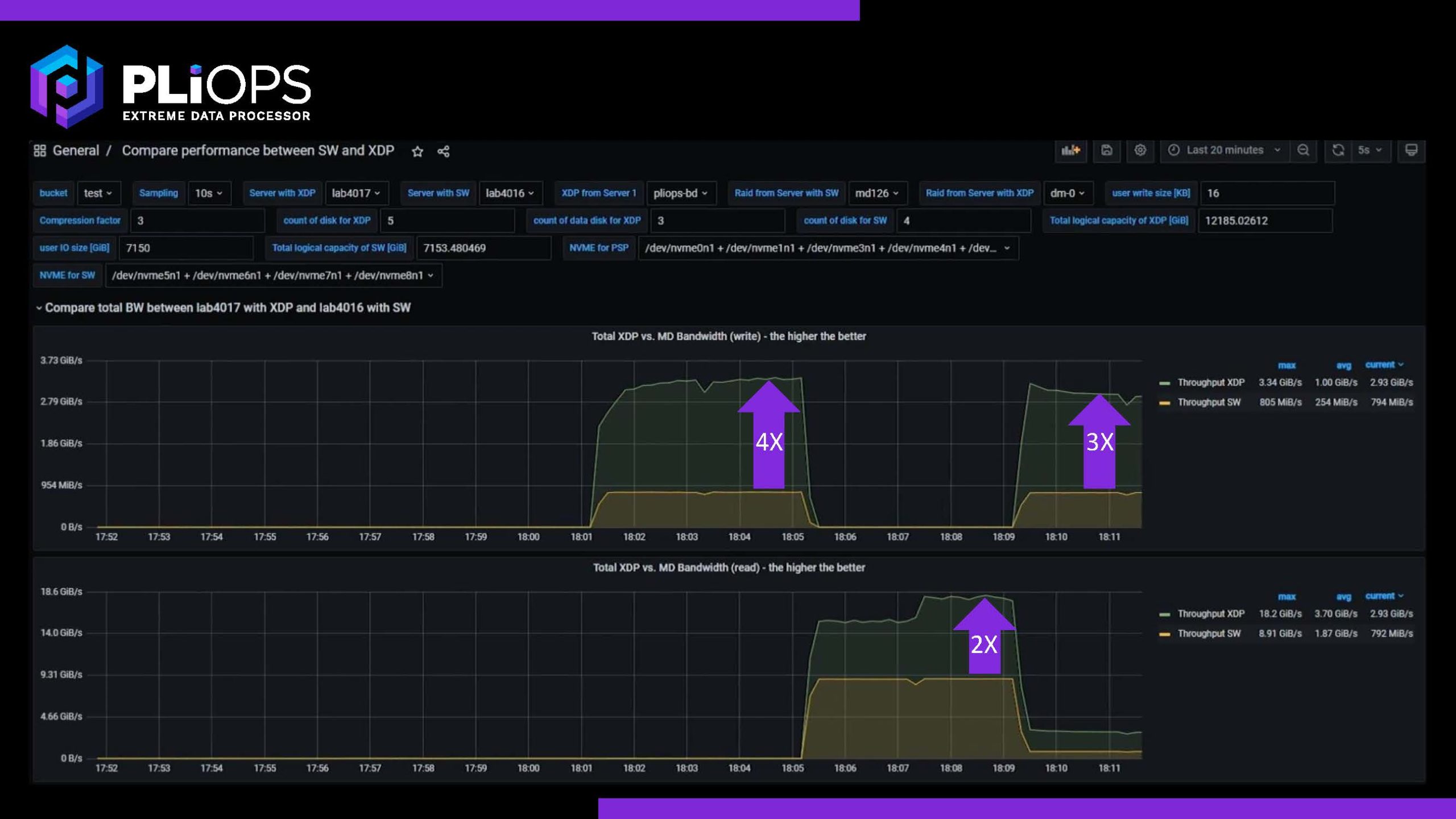

Pliops has several of these charts, but one shows the read/write performance advantages over software solutions.

Because of how its engine works, it can provide better performance than RAID 0.

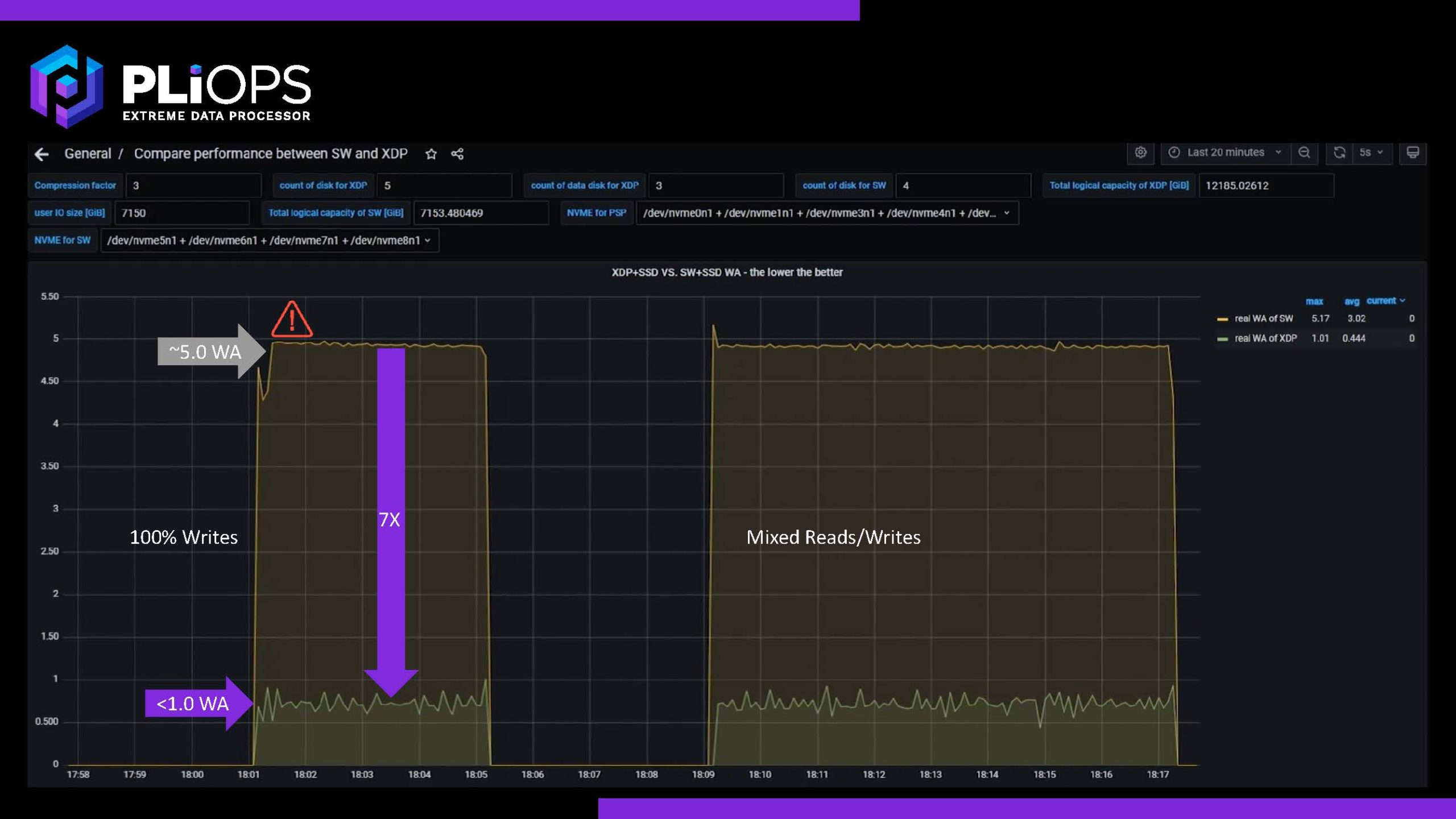

Here is an example of write amplification, where the company puts the reduction at around 7x. Lower write amplification allows one to use QLC instead of TLC, which also increases capacity, since endurance becomes less of a concern.
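For reference, the usual definitions behind that argument are below, as a back-of-the-envelope approximation rather than Pliops’ own model. The write amplification factor (WAF) compares the bytes physically written to NAND with the bytes the host asked to write, and the host data a drive can absorb over its life scales inversely with it. Here C is the raw NAND capacity and N_P/E is the rated program/erase cycle count.

```latex
\mathrm{WAF} = \frac{\text{bytes written to NAND}}{\text{bytes written by the host}},
\qquad
\text{lifetime host writes} \approx \frac{C \cdot N_{\mathrm{P/E}}}{\mathrm{WAF}}
```

Cutting WAF by a factor k therefore stretches host-visible endurance by roughly k, which is what makes QLC’s lower P/E rating relative to TLC easier to live with.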

Pliops says it can get to DRAM-like performance but at a lower cost. We heard the solution also works very well with Intel Optane SSDs.

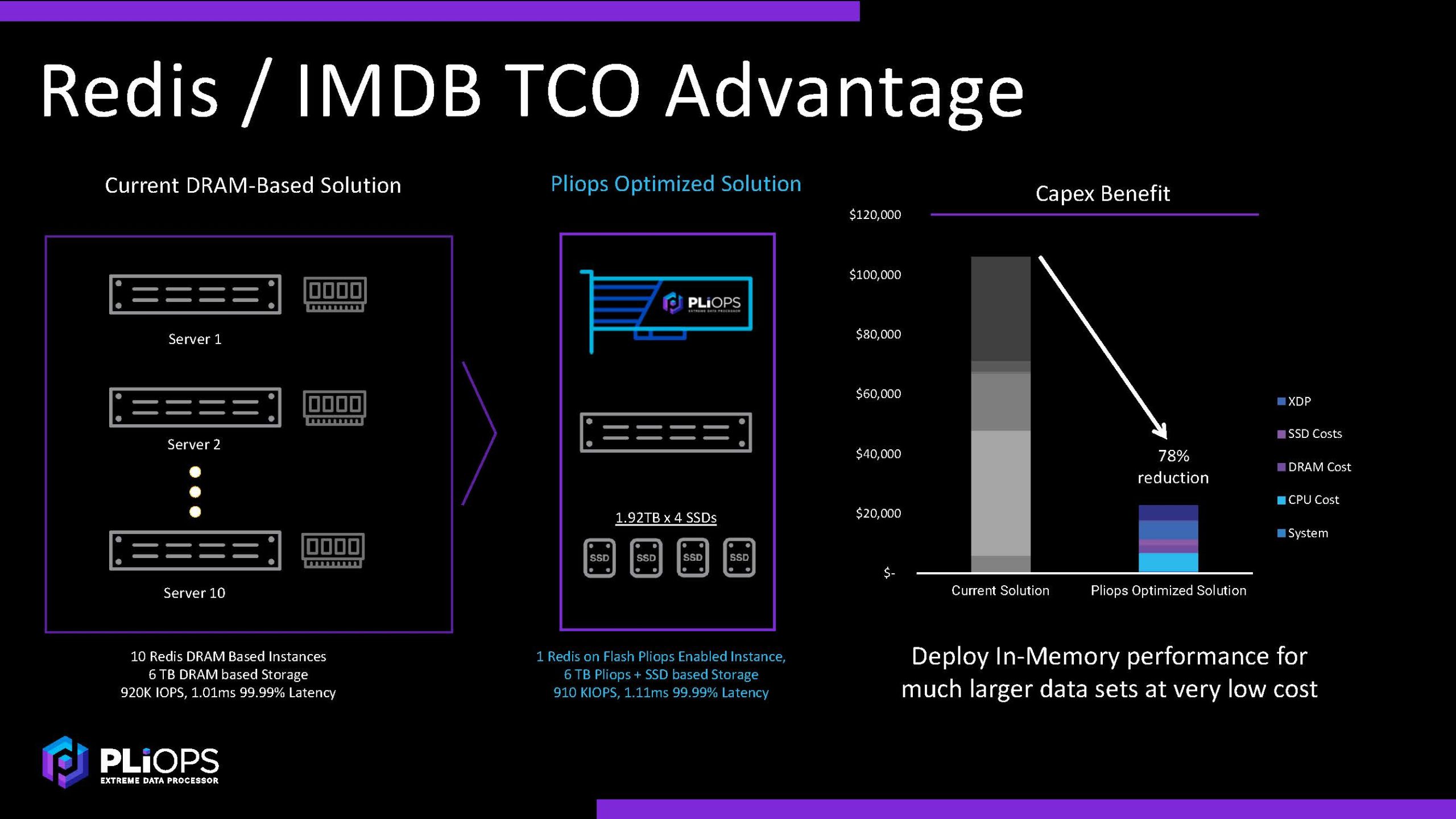

On the TCO side, running Redis/IMDB with the XDP has about 10% higher four-nines (99.99th percentile) latency and slightly lower IOPS than running on DRAM, but at a lower cost since it is using NAND.

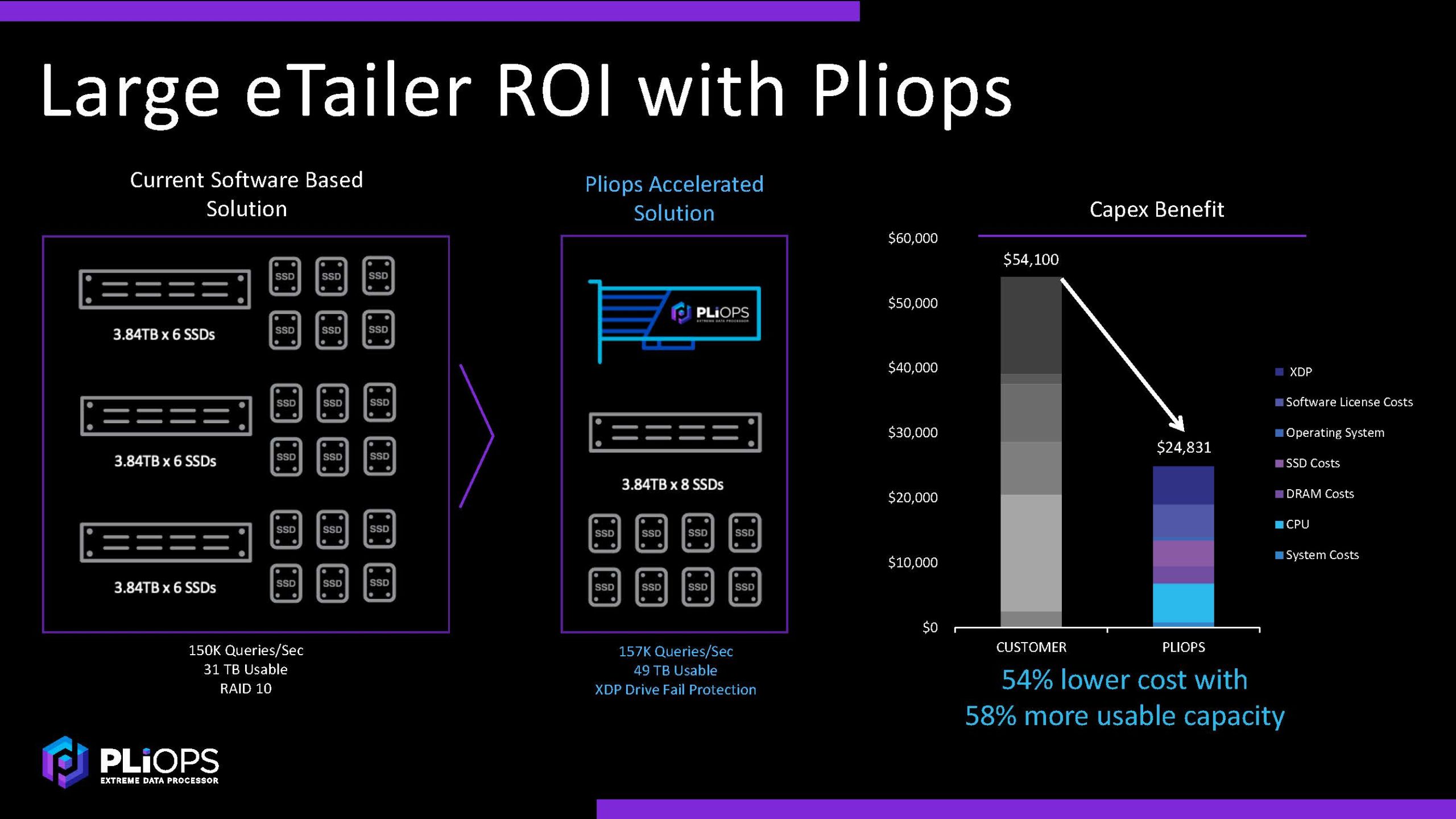

Here is another example, where the Pliops XDP offers double drive failure protection versus RAID 10, and Pliops uses fewer servers and SSDs to achieve a similar level of performance (and greater capacity).
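The capacity side of that comparison is simple arithmetic, sketched here in Python with a hypothetical set of 8 drives at 4 TB each. RAID 10 spends half the drives on mirror copies and is only guaranteed to survive one failure (a second failure in the same mirror pair loses data), while a double-parity layout spends two drives’ worth of capacity and survives any two failures. The function name and scheme labels are illustrative, and compression is ignored.

```python
def usable_capacity_tb(drives: int, drive_tb: float, scheme: str) -> float:
    """Usable capacity of a single drive set, before any compression."""
    if scheme == "raid10":
        return (drives // 2) * drive_tb  # every block is stored twice
    if scheme == "double_parity":
        return (drives - 2) * drive_tb  # two drives' worth of parity
    raise ValueError(f"unknown scheme: {scheme}")


GUARANTEED_FAILURES_TOLERATED = {
    "raid10": 1,         # a second failure in the same mirror pair loses data
    "double_parity": 2,  # any two drives can fail
}

for scheme in ("raid10", "double_parity"):
    print(scheme, usable_capacity_tb(8, 4.0, scheme), "TB usable,",
          GUARANTEED_FAILURES_TOLERATED[scheme], "guaranteed failure(s) tolerated")
```

Compression, where the data allows it, would then multiply the larger double-parity figure further.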

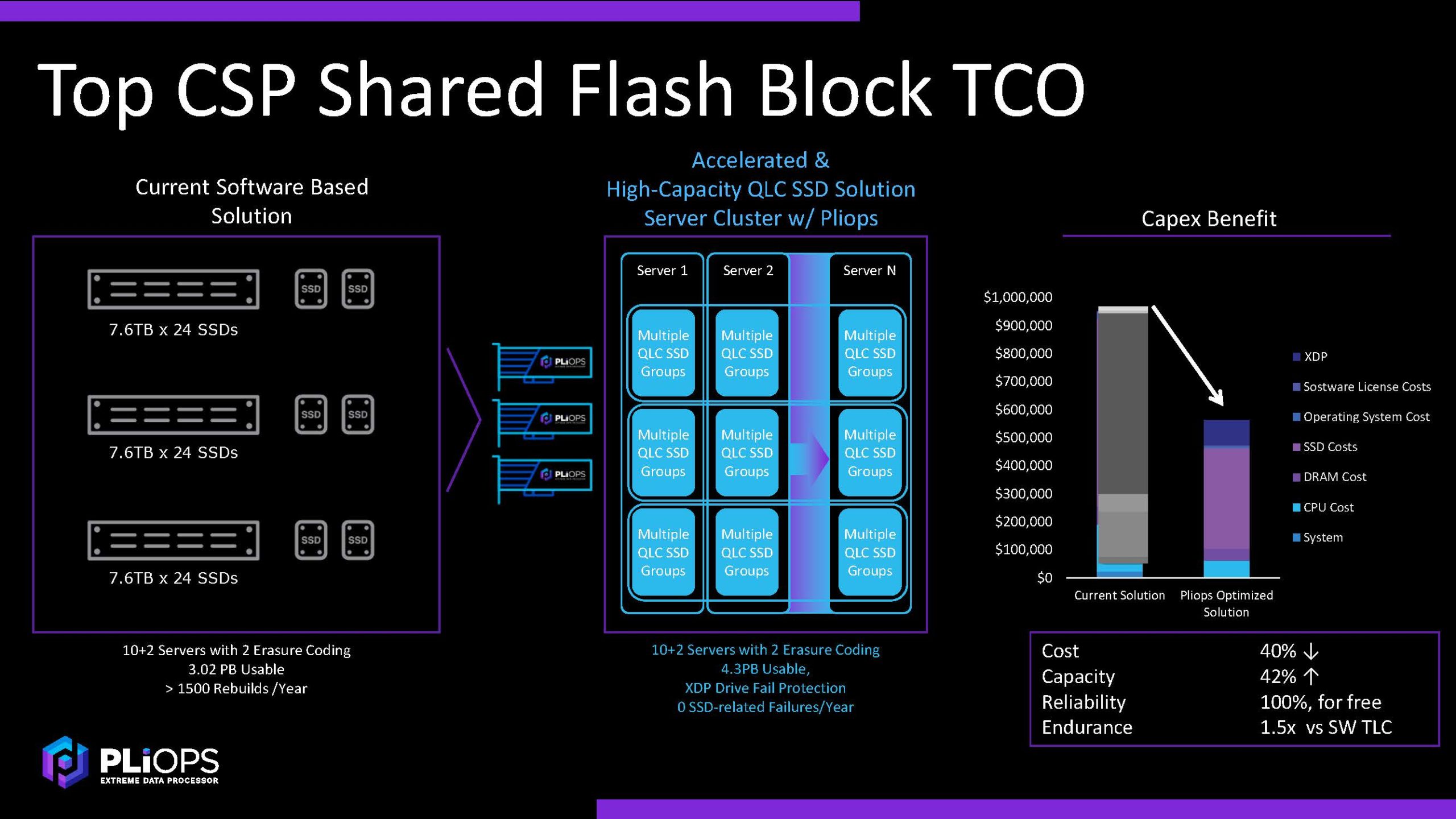

In this example, a cloud service provider is using Pliops to utilize QLC while still having drive failure protection in its servers.

Here is Pliops’ summary before we get to ours.

Although it is being announced today, Pliops is already shipping for revenue.

Final Words

Running STH, I often get asked about RAID controllers and NVMe. The challenge is that often NVMe performance suffers on RAID controllers that were built for an era of disks. To me, the Pliops solution makes a lot more sense. Frankly, it would probably be something we could use in our own hosting servers.

Personally, I hear many DPU vendors discussing their products in terms of data-center-scale storage acceleration, as we saw in our Fungible DPU-based Storage Cluster Hands-on Look. Still, there is a segment of the market that is looking for a more local solution. That local solution is very interesting today, but in 2022 and beyond, once Compute Express Link (CXL) starts finding its way into products, an accelerator like a future Pliops XDP can provide real value and work in that operating model, where a traditional RAID controller will become even more marginalized.

Hopefully, in the coming weeks or months, we will be able to show off more of the Pliops solution if we get a chance to bring it into the lab.

Not sure, but can it beat the reliability of whatever RAID level ZFS offers on an array of NVMe drives? I highly doubt that…

This is the type of solution that would make more sense on the backplane of an NVMe server, literally sitting between the processors and the NVMe storage. While everything is PCIe and this card can communicate with the drives directly, data still has to pass through the processor’s root complex, which incurs a small latency penalty. Without the drives attached directly to the card, data has to travel from the processor to the Pliops card, back to the processor, and then to the NVMe drives. There is also an opportunity for bandwidth expansion by putting this chip between the two, since the redundancy/parity data generated by the chip does not have to be sent through the processor complex. Thus an x16 PCIe interface could feed 24 lanes of NVMe drives while handling two drive failures (assuming x4 lanes per NVMe drive). Integrating this technology into, say, a 72+ lane PCIe bridge chip would be advantageous. This would also let the Pliops technology venture into DPU territory by simply leveraging existing SmartNIC chips hanging off its own PCIe lanes (or by adding an E3.L form factor NIC card).

Without direct access to the drives, I find their claims of “DRAM-like performance” hard to believe. Faster than a software-based solution using flash? That seems achievable, and I can see performance per watt improving with this card. On paper, there seem to be some real benefits to offloading these operations. However, I don’t see this living up to some of the marketing hype. If they get an ASIC with direct drive access, as I allude to above, then it will see a further jump in performance.

I think this could be a piece of the puzzle, considering that a modern CPU can’t really keep up with NVMe/PCIe 4.0 bus speeds when encrypting and compressing. I do wonder, however, whether this works with common open-source software (a lot is mentioned in the slides) or whether it requires running software modified by the vendor. I assume the latter. It would be great to have a drop-in replacement for common libraries (zlib and OpenSSL come to mind) that can use the acceleration; it would be even better to have it integrated in some way so you can get upgrades.

It seems like a good solution for running really big NoSQL datastores.

Wouldn’t this be a good use case for 3D XPoint?

Swap out the RAM for 3D XPoint and you have a large non-volatile buffer to cache writes.