Perfect Project TinyMiniMicro: Tier 2 Upgrades

Next, let us go “big” on this platform. In our Tier 2 upgrade package, we add more memory, more storage, and more networking.

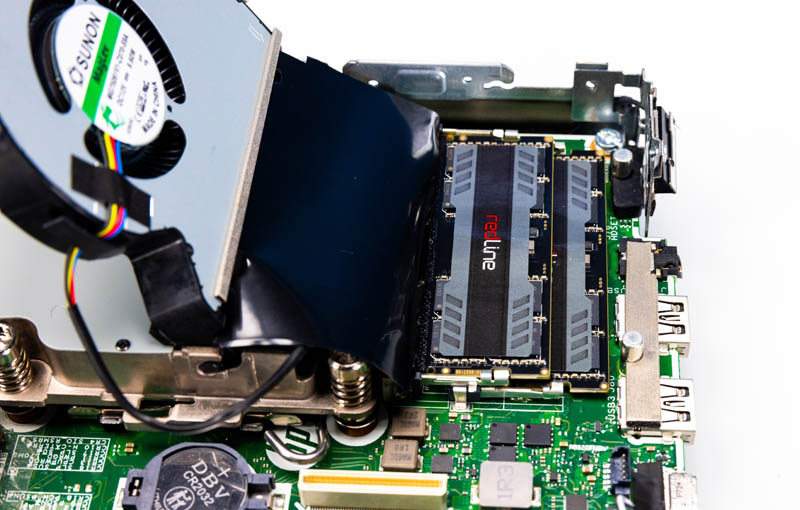

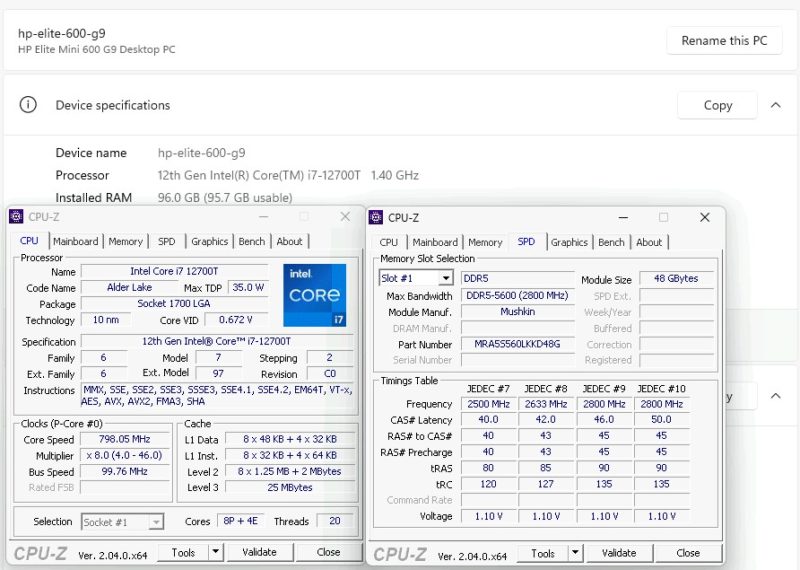

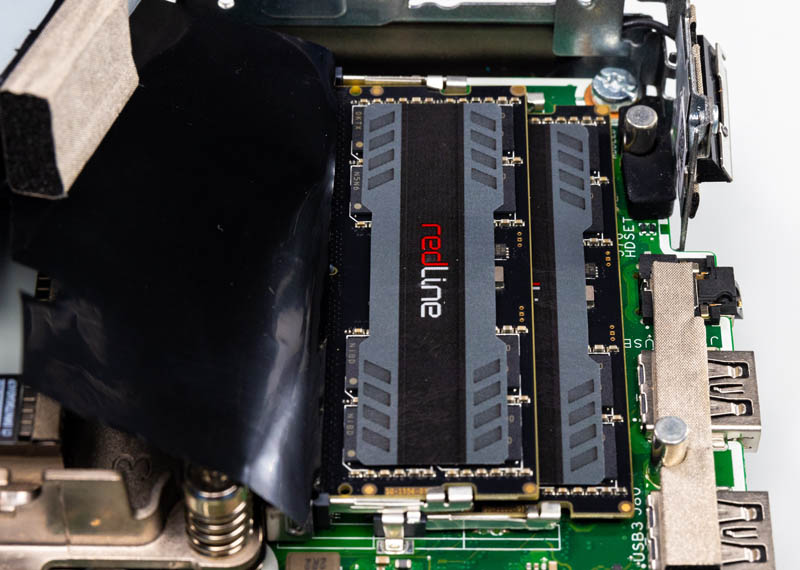

In our all-out upgrade, we are not stuck with the traditional 64GB limit of this system's two SODIMM slots. Instead, we are adding the Mushkin Redline 96GB (2x 48GB) DDR5-5600 SODIMM kit to the system. While the DDR5-5600 SODIMMs are pictured here, we also used DDR5-4800 SODIMMs, which are a better match for the system's memory speed. The DDR5-4800 kits just happened to be installed elsewhere when we took these photos, but those are what we would recommend.

This is the first generation where we have been able to get 96GB of memory, so that is a huge update. It was great to see HP’s BIOS work with this configuration even if it was not officially supported by HP.

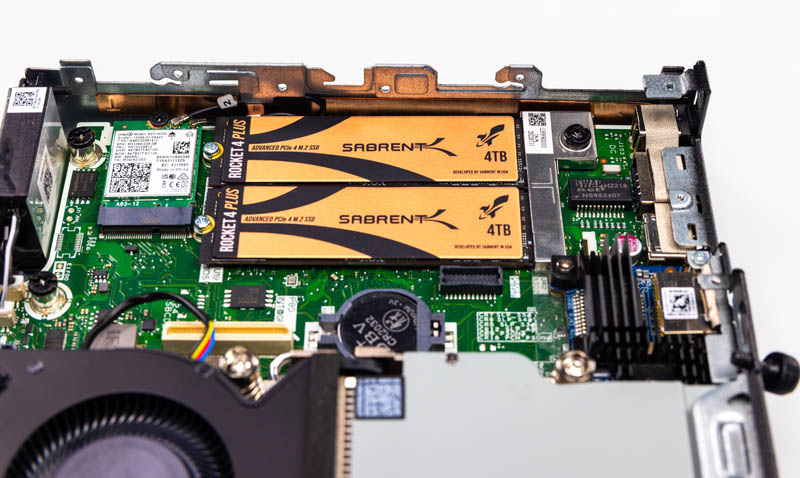

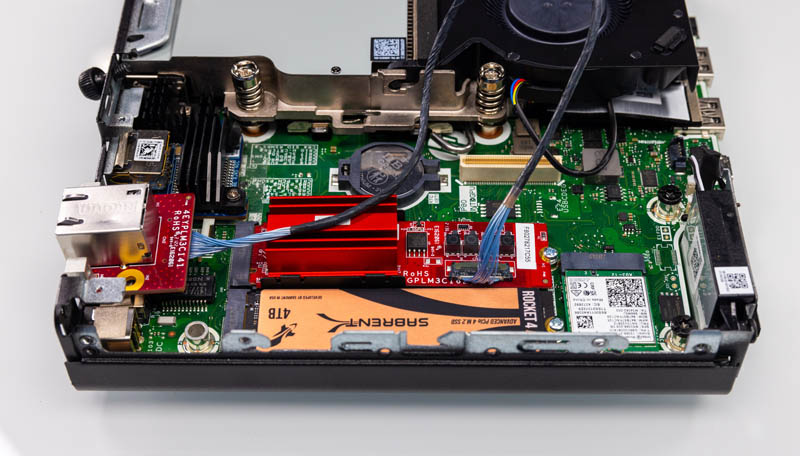

On the storage side, we are using two 4TB Sabrent Rocket 4 Plus PCIe Gen4 NVMe SSDs for 8TB of raw capacity. Some users may prefer to stripe across those drives, but we are mirroring them for redundancy, which leaves 4TB usable.

These are very high-performance PCIe Gen4 drives, but some users, especially those with warmer ambient temperatures, may prefer lower-power, lower-performance drives for better thermals (although this configuration has been working for us). We could also have used 8TB drives in this system, but those cost considerably more, and we wanted this build to remain somewhat reasonably priced. It is the same reasoning behind using the Core i7 here instead of the Core i9.
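To make the stripe-versus-mirror trade-off concrete, here is a minimal Python sketch comparing usable space for the two 4TB drives in each layout. The function name is hypothetical, and the numbers deliberately ignore ZFS metadata/overhead and the TB vs. TiB difference, so treat them as rough upper bounds.

```python
def usable_capacity_tb(drive_sizes_tb, layout):
    """Rough usable capacity in TB; ignores ZFS overhead and TB vs. TiB."""
    if not drive_sizes_tb:
        raise ValueError("need at least one drive")
    if layout == "stripe":
        # Striping pools every drive's capacity but offers no redundancy:
        # losing any one drive loses the whole pool.
        return sum(drive_sizes_tb)
    if layout == "mirror":
        # A mirror keeps a full copy on each drive, so usable space is
        # limited by the smallest member; it survives losing all but one drive.
        return min(drive_sizes_tb)
    raise ValueError(f"unknown layout: {layout!r}")


drives = [4, 4]  # two 4TB Sabrent Rocket 4 Plus SSDs
for layout in ("stripe", "mirror"):
    print(f"{layout}: {usable_capacity_tb(drives, layout)} TB usable")
```

The mirror gives up half of the raw 8TB in exchange for surviving a single SSD failure, which is the trade we chose for this build.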

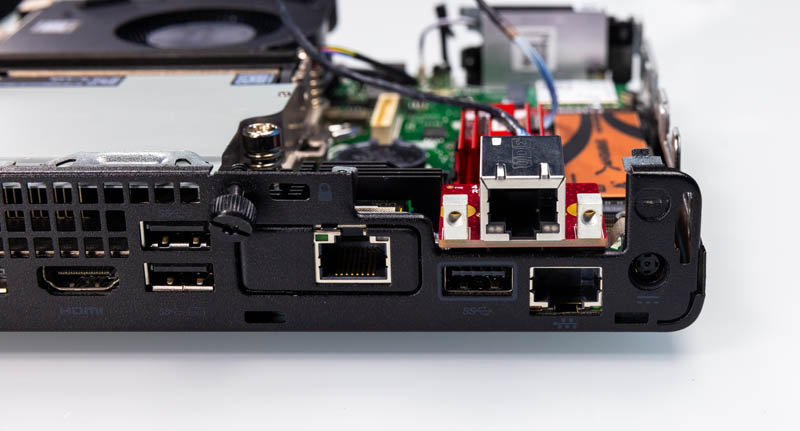

For networking, we are using the HP Flex IO V2 10Gbase-T adapter. This is a Marvell Aquantia NIC on a custom HP module. Adding this NIC lets us use the onboard Intel 1GbE for management and the 10GbE NIC for storage and VMs.

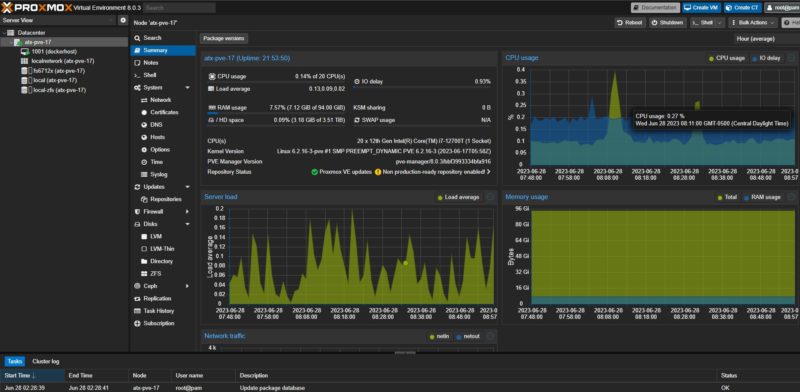

While the system ships with Windows 11 Pro as standard, we installed Proxmox VE 8 when we swapped out the SSDs. We go through the setup in more detail in the video.

In the end, we have a 12-core, 96GB system with 8TB of raw storage (two 4TB drives in a ZFS mirror, so 4TB usable), plus 1GbE, 10GbE, and even WiFi 6E. In terms of power, we stayed well under 80W at maximum, but idle crept up into the 11-12W range. We also saw slightly more noise than from the super-quiet unit we looked at before, but the fan was only adding 1-3dBA to handle the increased heat load. So far, this upgrade package has been online for two weeks without issue. The bigger challenge was that Proxmox VE 8 was released mid-project, so we had to redo all of the demos.

Bonus: Tier 2 Upgrade

For those who do not need mirrored storage, perhaps because you have great backups and trust your SSDs' reliability, we also built this configuration. Here Bryan installed not just the HP Flex IO V2 10Gbase-T NIC, but also the Innodisk EGPL-T101 M.2 to 10Gbase-T adapter that one of our STH readers kindly sent us (thank you!). The adapter takes one of the M.2 slots, which is why this setup gives up the mirrored SSD pair.

We are not using this dual 10GbE solution for our main Tier 2 upgrade package because the Innodisk adapter costs more than 3x as much as the HP module, and it also requires a 3D-printed bracket to work. It just felt less clean.

Still, for those who love 1L PCs and want more networking, this is an option.

Final Words

This was a fun project where we just wanted to show what is possible with Project TinyMiniMicro 1L PCs. What is “perfect” to one person may not get the same label from another, so we wanted to show options. One caveat is that this solution is best done on the HP Elite Minis rather than the Lenovo and Dell PCs. We have a few kits, such as AliExpress 4x 2.5GbE kits for the Lenovo Tiny units, but they also felt less clean.

For STH readers wondering whether this applies only to TinyMiniMicro systems, that is not necessarily the case. We also discussed how one would do this in other mini PCs like the Beelink GTR7 (although we used a GTR7 Pro on set). There, getting 10GbE networking requires a Thunderbolt 3 adapter. It works, but it also means the network connection is only as physically secure as the USB Type-C plug. Those adapters also often have cooling challenges.

Still, adding non-binary 48GB DDR5 SODIMMs is a monumental upgrade. For many, virtualization hosts are RAM-limited, not CPU-limited. In the DDR4 generation, we had been limited to 2x 32GB for 64GB total. Now there is an option, albeit at a price premium, to get 96GB, or 8GB per core on this 12-core CPU.
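The per-core figure is simple division, but it is useful to see it next to the old ceiling. This minimal Python sketch assumes, purely for comparison, that the DDR4-era 64GB maximum is spread over the same 12 cores; the earlier DDR4 systems actually used different CPUs, and the helper name is hypothetical.

```python
def gb_per_core(total_gb, cores):
    """Memory available per physical core, in GB."""
    return total_gb / cores


CORES = 12  # the 12-core Core i7 used in this build

configs = {
    "DDR4-era max (2x 32GB)": 2 * 32,
    "DDR5 non-binary (2x 48GB)": 2 * 48,
}
for name, total in configs.items():
    # Hypothetical comparison: both capacities applied to the same 12 cores.
    print(f"{name}: {total}GB -> {gb_per_core(total, CORES):.2f} GB/core")
```

Going from roughly 5.3GB to 8GB per core is what makes the 96GB kit matter for RAM-limited virtualization hosts.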

Hopefully, our readers found this one exciting, as it took a lot of work, especially with the Proxmox VE 8 release arriving just as we were about to publish this video.

Where to Buy

Since there are a lot of components here, we are going to give you a few links. These may be affiliate links where we can potentially earn a small commission (we have to pay for this hardware somehow):

- Crucial DDR5-4800 64GB (2x 32GB) kit – Amazon

- Mushkin DDR5-4800 96GB (2x 48GB) kit – Newegg

- HP Flex IO V2 10GbE – Our fastest source for these has been B&H Photo. They tend to have one of the best prices around, if not the best, and they drop-ship them.

- HP Flex IO V2 2.5GbE – Newegg

- Sabrent Rocket 4 Plus 4TB – Amazon

- Lower-Power Crucial P3 Plus 4TB – Amazon

- Innodisk EGPL-T101 – Amazon

- HP Elite Mini 600 G9 – Craigslist, eBay, or others.

Reader Comments

The Lenovo M90q gen 3 is still a better option in my opinion :)

It can take the same 96GB of RAM and two NVMe drives, and it has a half-height PCIe slot, so you can run a rock-solid dual 10GbE network card. Or, because it’s PCIe, you have the flexibility to add whatever you feel like running in there.

It’s an interesting comment that VMware does not handle CPUs containing both performance and efficiency cores well. We run VMware here on repurposed hardware from an HPC cluster. I didn’t set it up and have interacted only with the user-level interface, but it works well.

I know Linux put a lot of effort into scheduling tasks on heterogeneous CPUs. I’d have thought provisioning virtual machines was easier. Thus, VMware having trouble with performance and efficiency cores seems like a surprising limitation to me.

Peak STH here. A real pleasure.

There’s a workaround for the PSOD you’re getting, as you’ve mentioned. What you don’t mention is that even with the workaround, the performance is pig filth. You’ll get it to run, but VMware’s scheduler is not Intel Thread Director-aware, so it isn’t really able to cope with the hybrid design. Plus, you’ve got cores with diverging instruction sets, so that’s a no-go.

I don’t normally watch the videos, but this one’s really good.

I’ve been trying similar things, except that I used an Erying Mini-ITX board with a “35 Watt” i7-12700H as a base. Since the board quite willingly accepts 120 Watts of PL1 and PL2, I’ve been using liquid metal paste on the ‘naturally delidded’ die and an NH9 fan to get fantastic sustained performance out of this setup.

The downside was that I had to move it out of the original Pico-PSU chassis, because 120 Watts were simply not enough to run this ‘35 Watt’ SoC, even when I really tightened the PLx limits down. It’s now in a Mini-ITX cube with the same footprint; the additional height doesn’t matter where it sits, and the case easily accommodates an ordinary AQC107 in the “GPU slot”. It was mostly about low idle power and noise, but it does have the 96EU Xe iGPU for rather competent 4K desktop punch, if needed.

It’s using full-sized DDR4 DIMMs, which seemed an advantage when I ordered, but RAM prices are astonishingly flat these days, even for ECC DDR5. That only makes it worse that none of the Intel chips will run ECC without W680 ‘dongles’ that can’t be had.

The mobile SoC means the PCIe v4 x16 slot only has 8 lanes connected, but you get two M.2 x4 v4 slots from the SoC and another M.2 x4 v3 slot from the chipset: plenty of storage space, now that NVMe storage is getting really economical, too.

I still hate to waste four PCIe lanes on a single AQC107 or AQC113 10Gbase-T NIC when one v4 lane should be sufficient, at least on the AQC113. I’d much rather have those Aquantia NICs on board than any set of Intel or Realtek NICs, because they just work.
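The claim that a single Gen4 lane should be enough for 10GbE can be checked with back-of-the-envelope arithmetic. This minimal Python sketch assumes the standard PCIe per-lane rates of 8 GT/s (Gen3) and 16 GT/s (Gen4) with 128b/130b encoding, and deliberately ignores packet and protocol overhead, which lowers real-world throughput somewhat. The function name is hypothetical; the link widths checked include the ones mentioned in the comments above (Gen4 x8 slot, Gen4 and Gen3 x4 M.2 slots).

```python
# Per-lane transfer rates in GT/s; both generations use 128b/130b encoding.
GT_PER_LANE = {3: 8.0, 4: 16.0}
TEN_GBE_GBPS = 10.0


def pcie_link_gbps(gen, lanes):
    """Data rate after 128b/130b encoding, before protocol (TLP/DLLP) overhead."""
    return GT_PER_LANE[gen] * lanes * 128 / 130


for gen, lanes in [(3, 1), (4, 1), (3, 4), (4, 4), (4, 8)]:
    bw = pcie_link_gbps(gen, lanes)
    verdict = "enough" if bw > TEN_GBE_GBPS else "not enough"
    print(f"PCIe Gen{gen} x{lanes}: {bw:.2f} Gbps, {verdict} for one 10GbE port")
```

A Gen3 x1 link lands just under 8Gbps, so a Gen3 10GbE NIC needs at least two lanes for full speed, while a Gen4 x1 link at roughly 15.75Gbps leaves headroom for one port.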

To deal with P/E issues, I’ve been using numactl to essentially partition the system into two domains: one running the six P-cores with Hyper-Threading, and another using the E-cores to act as an add-in i3-N305 system for lesser containers or VMs.
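The commenter used numactl for this. As a rough illustration of the same idea (keeping work on either the P-cores or the E-cores), here is a minimal Python sketch that uses `os.sched_setaffinity` instead; it is a swapped-in technique, not the commenter's setup. It assumes a Linux kernel that exposes the hybrid topology in `/sys/devices/cpu_core/cpus` and `/sys/devices/cpu_atom/cpus`, and the helper names are hypothetical. Pinning a process (and the children it starts) to a CPU set is similar in spirit to `numactl --physcpubind`; it does not create real NUMA nodes.

```python
import os


def parse_cpulist(text):
    """Parse a Linux CPU list such as '0-11,16' into a set of CPU ids."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return cpus


def read_cpulist(path):
    with open(path) as f:
        return parse_cpulist(f.read())


def hybrid_split():
    # Assumption: on Intel hybrid CPUs, recent Linux kernels list the P-core and
    # E-core logical CPUs in these sysfs files; they are absent on non-hybrid systems.
    p_cores = read_cpulist("/sys/devices/cpu_core/cpus")
    e_cores = read_cpulist("/sys/devices/cpu_atom/cpus")
    return p_cores, e_cores


if __name__ == "__main__":
    try:
        p, e = hybrid_split()
    except FileNotFoundError:
        print("No hybrid P/E topology exposed in sysfs on this machine")
    else:
        print("P-core CPUs:", sorted(p))
        print("E-core CPUs:", sorted(e))
        # Restrict this process (and children it spawns later) to the E-cores only.
        os.sched_setaffinity(0, e)
```

Whether guest VMs behave well when pinned this way is a separate question, as the next comment points out.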

The current Proxmox kernel might actually be modern enough to understand P/E, but I doubt KVM/QEMU really does, nor would guest VMs properly work across the P/E divide.

Could be another bonus point for the container faction…

abufrejoval, what’s the power usage on that board? I’d seen a lot of issues mentioned with the BIOS not supporting low-power states.

What are these w680 dongles you mentioned? I’ve never heard of that before. I’ve seen some W680 boards from ASRock, but those have desktop sockets, and the boards seem impossible to source.

I’ve had my eye on the Erying boards, but have been a bit hesitant to pull the trigger. It seems like you’re liking it; are there any behaviors you’d warn people about?

ZFS without ECC RAM is a dangerous combination :/

Varun, I think abufrejoval’s point is that the memory controller is on the CPU, not the chipset, but the CPU will only enable ECC if you pair it with a suitable server chipset. The W680 chipset is acting as an old-fashioned licensing dongle, letting the CPU know it’s allowed to do something it’s quite capable of doing by itself.

Can you remove the WiFi 6E module and add NVMe storage instead? Think mini NAS with 10Gb Ethernet. Does Unraid support NVMe SSDs yet?

What was the total cost for your unit and upgrades?

Need one

Hello, great Video/Article!

Does anyone know if ECC memory is supported on this machine?

Thanks

I would really like to get a TinyMiniMicro system with iLO or something like that.

Any advice?

@olson, look for one with Intel vPro or AMD DASH support. These should provide some of the remote management features you’re looking for, such as remote power cycling and a remote console. I have never tried remote media mounting. Note that there would be no physically separate management network.

The Sabrent Rocket NVMe drives are shown without the silvery thermal strips that come with them. Should those be removed, or should we use them? If they should be removed, how is that done?

FYI, the Lenovo M90q gen 3 can’t use the Mushkin Redline DDR5-4800 kit. I just tried it, and it won’t start; it just beeps.

Those HP Flex 10GbE adapters cost crazy money over here in Europe.

I see a lot of US retailers selling them for $130; here in the UK they seem to be around £200 (or $240, give or take a few dollars), and in mainland Europe, around 230 euros. No wonder I’m starting to spend more on AliExpress than I do on eBay!

It’d be great if STH could tell us in their reviews of TinyMiniMicro systems whether they take ECC RAM. So far I haven’t been able to find one TMM that actually takes ECC memory.