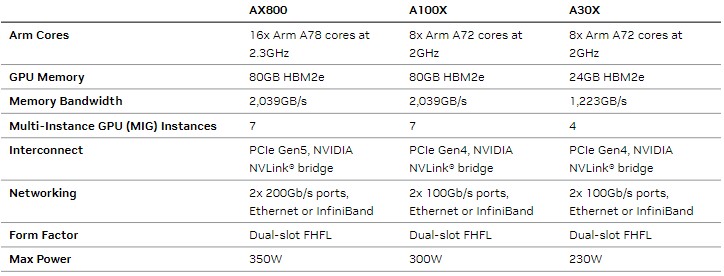

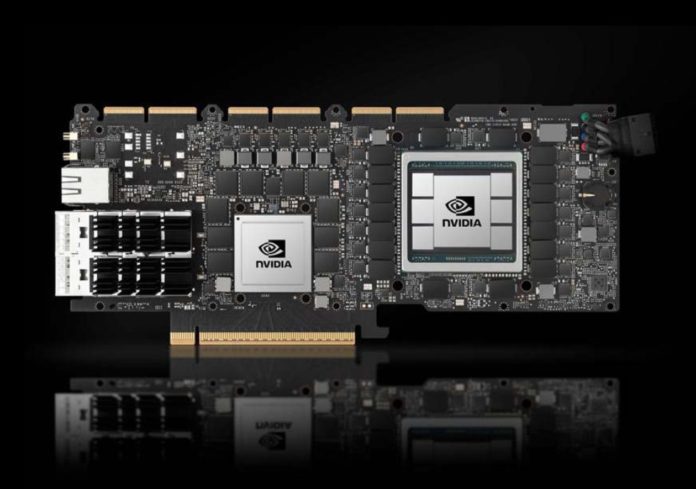

This is perhaps one of the biggest PCIe cards out there: NVIDIA has paired a BlueField-3 DPU with an NVIDIA A100 GPU on a single massive card. One way to look at the card is that it is "simply" an accelerator card. The other way to look at the NVIDIA AX800 is as a server with 16 Arm cores, 32GB of memory, 40GB of storage, an ASPEED BMC, an A100 GPU, over 400Gbps of total networking, and NVLink, all on a single card.

NVIDIA AX800 High-End Arm Server on a PCIe Card

The new NVIDIA AX800 combines two main components:

- An NVIDIA BlueField-3 DPU

- An NVIDIA A100 80GB

Both are combined into a single dual-slot card with a 350W maximum TDP and a 150W minimum TDP. The maximum is up from 300W on the previous-generation NVIDIA A100X. The GPU remains the same, but the DPU is much faster (BlueField-3 instead of BlueField-2).

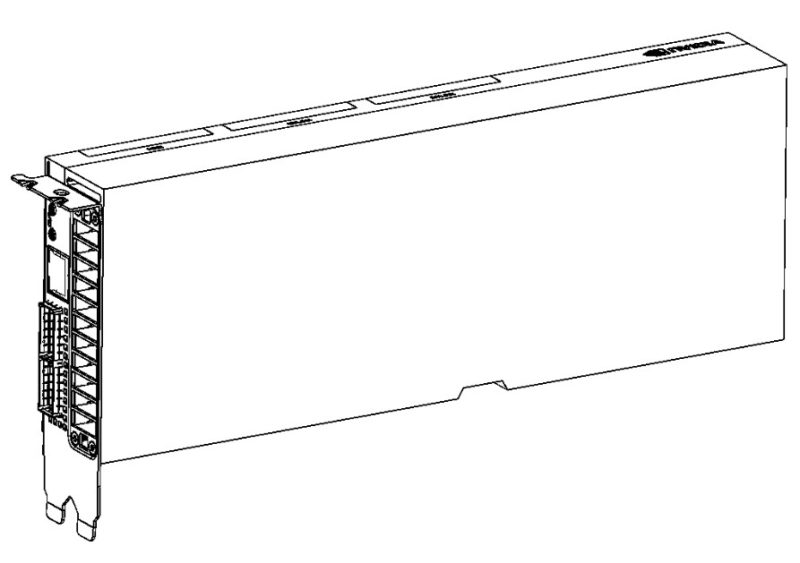

The fun part about this card is that the I/O panel looks like a NIC, with two QSFP56 ports and an out-of-band management port that provides a 1GbE connection to the ASPEED AST2600 BMC. We will quickly note that NVIDIA is using two QSFP56 cages, each at 200Gbps VPI, so they can run in either Ethernet or InfiniBand mode. We recently published our NVIDIA ConnectX-7 400GbE and NDR InfiniBand Adapter Review, and that card used OSFP cages, which were less than exciting to work with.
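The "over 400Gbps of total networking" figure from the introduction follows directly from the port list. The short sketch below just adds up nominal line rates; the dictionary keys are hypothetical labels chosen for illustration, not NVIDIA port names, and the GB/s conversion ignores encoding and protocol overhead, so real-world throughput will be lower.

```python
# Front-panel ports as described in the article. The keys are
# hypothetical labels for this sketch, not official NVIDIA port names.
PORT_LINE_RATES_GBPS = {
    "qsfp56_port_a": 200,  # 200Gbps VPI (Ethernet or InfiniBand)
    "qsfp56_port_b": 200,  # 200Gbps VPI (Ethernet or InfiniBand)
    "oob_bmc_port": 1,     # 1GbE out-of-band link to the AST2600 BMC
}

# Data-plane bandwidth: only the two QSFP56 cages.
data_plane_gbps = sum(
    rate for name, rate in PORT_LINE_RATES_GBPS.items() if name.startswith("qsfp56")
)

# Every port, including the management link.
total_gbps = sum(PORT_LINE_RATES_GBPS.values())

# Nominal line rate in gigabytes per second (8 bits per byte).
# Encoding and protocol overhead are ignored here.
data_plane_gbytes_s = data_plane_gbps / 8

print(f"Data plane: {data_plane_gbps} Gbps ({data_plane_gbytes_s:.0f} GB/s)")
print(f"All ports:  {total_gbps} Gbps")
```

The two data ports alone account for 400Gbps. The 1GbE management port is what nudges the total just over that.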

As much as the rear looks like a NIC or a DPU, the rest of the card looks like a professional NVIDIA GPU, complete with NVLink connectors on top. The NVLink on this card is meant to link directly to one other card, not to multiple GPUs simultaneously.

Still, the card has some impressive specs. From what we can tell, this is not a DPU and GPU integrated at the die level, since we can see two separate packages on the board. Instead, this is a PCIe card that combines a GPU and a DPU on a single board. Here are some of the highlights:

Key NVIDIA A100 GPU Specs

- GPU generation: NVIDIA A100 “Ampere”

- GPU base clock: 840MHz

- GPU maximum boost clock: 1.41GHz

- GPU memory clock: 1.593GHz

- GPU memory type: HBM2e

- GPU memory size: 80GB

- GPU memory bus width: 5,120-bit

- GPU peak memory bandwidth: Up to 2,039GB/s
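The listed peak bandwidth can be reproduced from the bus width and memory clock. The calculation below assumes HBM2e transfers data on both clock edges, so the 1.593GHz memory clock corresponds to 3.186GT/s per pin, and it uses decimal units (1GB = 10^9 bytes). It is a back-of-the-envelope check, not an NVIDIA formula.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, transfers_per_second: float) -> float:
    """Theoretical peak bandwidth in decimal GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_second / 1e9


# Figures taken from the A100 spec list.
HBM2E_BUS_WIDTH_BITS = 5120
HBM2E_MEMORY_CLOCK_HZ = 1.593e9

# Assumption: double data rate, i.e. two transfers per clock per pin.
hbm2e_transfers_per_second = 2 * HBM2E_MEMORY_CLOCK_HZ

a100_peak_gb_s = peak_bandwidth_gb_s(HBM2E_BUS_WIDTH_BITS, hbm2e_transfers_per_second)
print(f"A100 80GB peak memory bandwidth: {a100_peak_gb_s:,.0f} GB/s")
```

The result is 640 bytes per transfer × 3.186GT/s ≈ 2,039GB/s, which matches the spec sheet figure.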

Key NVIDIA BlueField-3 DPU Specs

- DPU generation: NVIDIA BlueField-3

- DPU core generation: Armv8.2 Cortex-A78

- DPU core quantity: 16

- DPU memory speed: 5,600MT/s (DDR5-5600)

- DPU memory type: DDR5

- DPU memory size: 32GB

- DPU memory bus width: 128-bit

- DPU peak memory bandwidth: Up to 89.6GB/s

- DPU storage: 40GB eMMC

- DPU accelerators:

  - Hardware root of trust

  - RegEx

  - IPsec/TLS data-in-motion encryption

  - AES-XTS 256/512-bit data-at-rest encryption

  - SHA 256-bit hardware acceleration

  - Hardware public key accelerator

  - True random number generator

- DPU BMC: ASPEED AST2600
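The same arithmetic explains the DPU's 89.6GB/s figure. This sketch reads the "5,600" memory figure as the DDR5-5600 data rate, which is already counted in transfers per second, so unlike the HBM2e case no extra factor of two is applied. Units are decimal GB/s.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, transfers_per_second: float) -> float:
    """Theoretical peak bandwidth in decimal GB/s (1 GB = 1e9 bytes)."""
    return (bus_width_bits / 8) * transfers_per_second / 1e9


# Assumption: 5,600 is the DDR5-5600 data rate in million transfers/s,
# so it is used directly without doubling.
DDR5_DATA_RATE_TPS = 5600e6
DDR5_BUS_WIDTH_BITS = 128

bluefield3_peak_gb_s = peak_bandwidth_gb_s(DDR5_BUS_WIDTH_BITS, DDR5_DATA_RATE_TPS)
print(f"BlueField-3 peak memory bandwidth: {bluefield3_peak_gb_s:.1f} GB/s")
```

A 128-bit bus moves 16 bytes per transfer, and 16 bytes × 5.6GT/s gives 89.6GB/s, which matches the listed peak.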

Final Words

To recap, this card has:

- A 16-core Arm CPU with its own 32GB of memory and a 40GB eMMC boot drive

- Dual 200Gbps Ethernet/InfiniBand networking

- An NVIDIA A100 80GB GPU

- NVLink for a high-speed interconnect

- A BMC for out-of-band management

- A PCIe connector

In most cases, we would consider that a complete server, and a fairly high-end one at that. This is one of the most unusual DPUs or GPUs out there, and it really does not fit neatly into a segment. NVIDIA is targeting these at the telco market, but this is one of those products we wish had broader availability, because it feels like something that could have a PCIe switch chip hung off of it to make amazing AI-enabled edge server nodes.

As a fun aside, we are covering this card in June 2023, but we have had this draft in progress since Computex 2023 in May. Somehow, the card was released without getting any coverage. Hopefully, this helps it get a bit more exposure.

Does it run its own OS image separate from the host server?

At some point nV has to just give up on the PCIe appendage and admit these things are servers outright. What am I missing?

The lack of coverage is likely due to nVidia being mainly focused on its Hopper and Grace generations of parts. I would expect a refresh of this using the newer-generation parts to arrive in 12-18 months. The real question is whether that next generation arrives before Hopper's replacement.

What is interesting is that this card has three NVLink interfaces, which presumably can link to three other cards like this one or to three A100 PCIe cards. Also, while BlueField has direct access to the A100, mixing this card with a normal A100 would bring the host system into the mix as well. That leaves room for some interesting heterogeneous computing and memory-mapping topologies.

@Kevin G If I recall correctly, all three bridges must be connected to the same sibling. Using one card’s three NVLink bridges to connect to more than one sibling isn’t a supported configuration. Standard A100s also have three NVLink bridges.

emerth brings up a good point: does the card run its own operating system? The specs list a "40GB eMMC boot drive," which seems to imply that it can run an operating system. If it were just scratch storage, it would not be called a boot drive.

I guess these are basically designed for passive PCI[e] backplane systems, which hark back to the S-100 or VME bus systems used in industrial settings, or which simply predate "motherboards," a microcomputer invention.

I guess the main disadvantage is that you can't use these to build your own home-brew DGX, and that they are really designed for the most advanced edge AI surveillance inference systems they seem to run in Mellanox's home country.

There have been plenty of CPU plug-in boards for PCs over the decades, even including a small IBM mainframe at one point. But just putting one of these in a server might not get you very far as the motherboard and this “add-in” card may not agree on who is the PCIe root.