At SC23, we saw the Neuchips RecAccel N3000 AI inference accelerator. This startup is looking to add dedicated AI inference accelerators to servers and workstations over the next 18 months. It has already submitted some MLPerf results as it builds its software and hardware stack.

Neuchips RecAccel N3000 AI Inference Accelerator at SC23

With NVIDIA’s recent data center revenue numbers, everyone wants to be in the data center with AI inference. Instead of building a large GPU and trying to compete directly with the NVIDIA H100/H200, Neuchips is looking to use smaller chips with larger memory capacities.

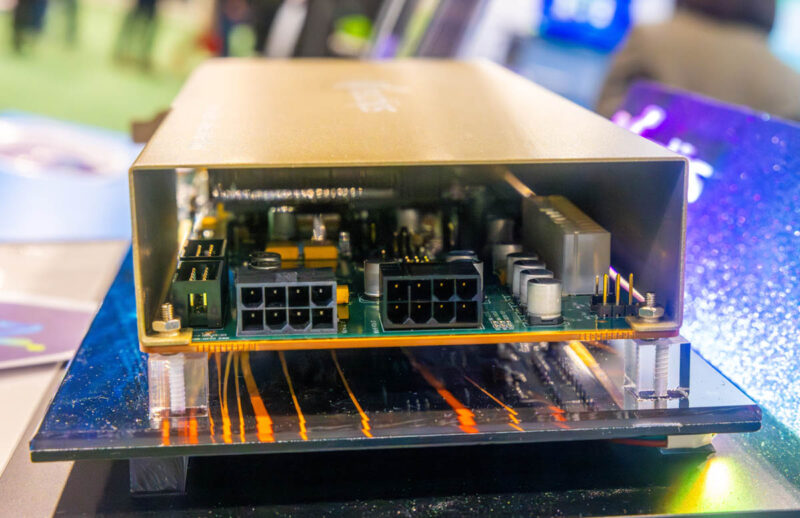

This is the double-slot card that was shown at SC23.

One can see the main chip is passively cooled. There is LPDDR5 memory around it and then power is at the rear of the card. Our sense is this is an early card since there appears to be an ATX power input onboard.

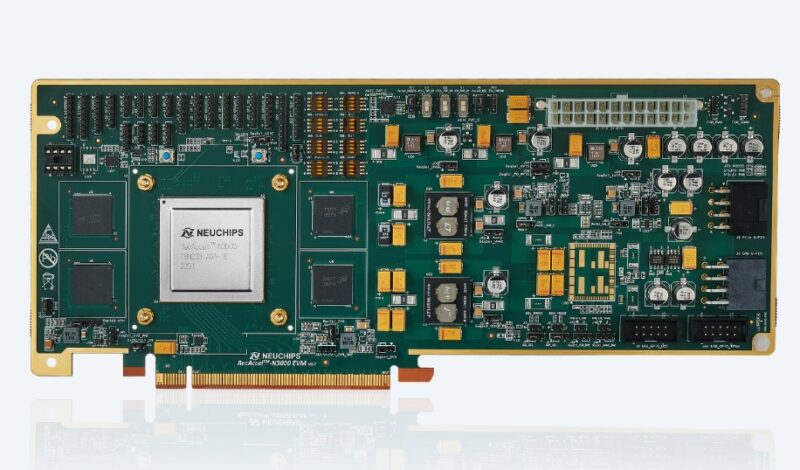

Here is a look at the card without all of the cooling. The main RecAccel N3000 chip has four LPDDR5 packages around it. Currently, this is a 32GB design, but with denser packages, that capacity can go up. All told, Neuchips says it is feeding the N3000 with around 200GB/s of memory bandwidth using low-cost commodity memory.
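To put the capacity and bandwidth figures in proportion, here is a rough back-of-envelope sketch. It assumes the quoted "around 200GB/s" is achievable peak bandwidth, which real workloads rarely hit, and the function names are purely illustrative.

```python
# Figures quoted for the current N3000 card.
CAPACITY_GB = 32
BANDWIDTH_GB_S = 200  # "around 200GB/s", so results are approximate


def sweep_seconds(capacity_gb: float, bandwidth_gb_s: float) -> float:
    """Time to read the entire memory once at the quoted bandwidth."""
    return capacity_gb / bandwidth_gb_s


def max_sweeps_per_second(capacity_gb: float, bandwidth_gb_s: float) -> float:
    """Upper bound on full passes over memory per second."""
    return bandwidth_gb_s / capacity_gb


print(f"One full pass over {CAPACITY_GB}GB: "
      f"{sweep_seconds(CAPACITY_GB, BANDWIDTH_GB_S):.2f} s")
print(f"Full passes per second (upper bound): "
      f"{max_sweeps_per_second(CAPACITY_GB, BANDWIDTH_GB_S):.2f}")
```

For a workload that has to touch every byte of a model filling the card for each output step, that pass rate is a ceiling on throughput, which is why the capacity-to-bandwidth ratio matters as much as either number alone.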

We were told the card supports 32 TFLOPS of bfloat16 performance, 206 TOPS at INT8, and also has special FP8 support going beyond what NVIDIA does with its transformer engine. The PCIe Gen5 x16 card can be powered from the PCIe slot alone, as the company showed in an RGB-laden demo.

Here is another look at the system on the show floor.

The company also had a cheap power meter on the show floor, which showed the entire system running at under 70W at the wall.

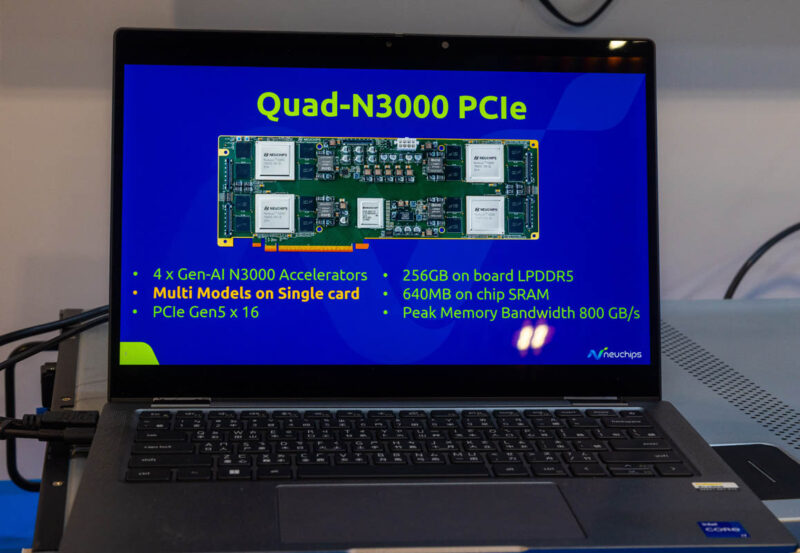

Looking ahead, we can see the next-generation idea for this card. Here we have four N3000 chips with a total of 640MB of on-chip SRAM. Neuchips uses a large SRAM (~160MB per chip) to help feed its execution paths.

The more interesting figure is perhaps the 256GB of onboard LPDDR5. 256GB / 4 = 64GB, hinting that the company is already working on 64GB N3000 versions.
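The per-chip numbers fall straight out of the quad-card totals. A minimal sketch of that arithmetic, assuming the SRAM and LPDDR5 are split evenly across the four chips (the variable names are illustrative):

```python
# Quad-card totals as shown at SC23.
CHIPS = 4
TOTAL_SRAM_MB = 640
TOTAL_LPDDR5_GB = 256

# Assumption: resources are divided evenly between the chips.
sram_per_chip_mb = TOTAL_SRAM_MB / CHIPS
lpddr5_per_chip_gb = TOTAL_LPDDR5_GB / CHIPS

# The card shown today pairs one N3000 with 32GB.
CURRENT_LPDDR5_PER_CHIP_GB = 32
capacity_ratio = lpddr5_per_chip_gb / CURRENT_LPDDR5_PER_CHIP_GB

print(f"SRAM per chip:   {sram_per_chip_mb:.0f}MB")
print(f"LPDDR5 per chip: {lpddr5_per_chip_gb:.0f}GB "
      f"({capacity_ratio:.0f}x the current 32GB design)")
```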

Final Words

It is always cool to get to see some of the new hardware at SC23. Neuchips is another Los Altos company. Los Altos was also home to Cerebras and STH was actually started when Patrick was living in Los Altos.

Hopefully, we will see these cards transition from lower-volume prototypes to higher-volume cards so we can start trying them out in the servers we review. The other aspect of these parts is the workstation use case. At 32GB or 64GB per N3000 (or four times that for the quad card), doing local inferencing on larger models in a workstation would be possible. Even cards like the NVIDIA RTX 6000 Ada and NVIDIA L40S top out at 48GB of memory, so it is an interesting play by the company.
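As a rough way to see what those capacities mean for model size, here is a small sketch that checks whether a model's weights alone would fit. The parameter counts are hypothetical examples, not models Neuchips has named, and the estimate ignores KV cache, activations, and runtime overhead, so real headroom would be smaller.

```python
# Bytes per parameter for the data types the card is said to support.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1, "int8": 1}

# Capacities from the article: single chip today, single chip implied,
# and the quad card at each per-chip capacity.
CAPACITIES_GB = {"N3000 32GB": 32, "N3000 64GB": 64,
                 "Quad 4x32GB": 128, "Quad 4x64GB": 256}


def weights_gb(params_billions: float, dtype: str) -> float:
    """Weight footprint in GB (1e9 params * bytes/param / 1e9 bytes per GB)."""
    return params_billions * BYTES_PER_PARAM[dtype]


def fits(params_billions: float, dtype: str, capacity_gb: float) -> bool:
    """True if the weights alone fit; ignores KV cache and activations."""
    return weights_gb(params_billions, dtype) <= capacity_gb


# Hypothetical model sizes, in billions of parameters.
for params in (13, 30, 70):
    for dtype in ("bf16", "int8"):
        ok = [name for name, cap in CAPACITIES_GB.items()
              if fits(params, dtype, cap)]
        print(f"{params}B @ {dtype}: {weights_gb(params, dtype):.0f}GB "
              f"-> fits on: {', '.join(ok) or 'none'}")
```

Under these assumptions, a 70B-parameter model at 8 bits needs about 70GB for weights, which is out of reach for a 48GB card but would fit on either quad configuration.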

It all comes down to the software support, but if they manage to deliver a 64GB card with decent software support, this could be a good competitor to the A100/H100. In most cases, you primarily need those for the extra memory capacity anyway and can accept the actual compute taking longer.