At SC18, processors and co-processors held a commanding presence, but there was another star of the show: Mellanox ConnectX-6. Cray showed up with Shasta and its Slingshot interconnect, but let us face it, Mellanox has a commanding share here. At the show, Mellanox showed off its newest generation of 200GbE and HDR InfiniBand adapters: the Mellanox ConnectX-6.

Mellanox in the New November 2018 Top500 Systems

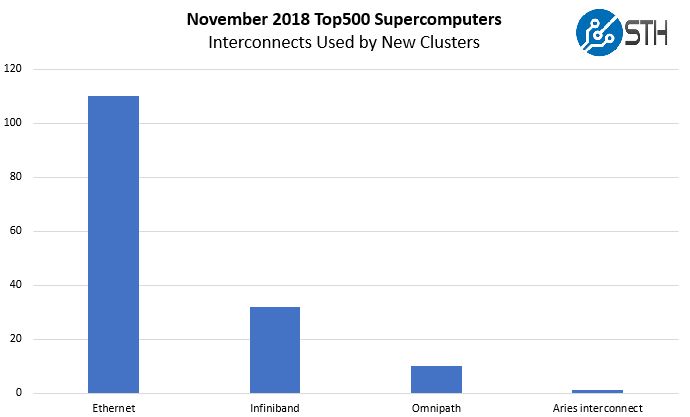

If you saw our Top500 November 2018 Our New Systems Analysis you probably noticed a few trends on the interconnect side. Here is a quick chart from that piece to frame the discussion:

Here is what the new systems in the November 2018 Top500 list used for interconnects. You can see that the first-generation Intel Omni-Path (OPA100) is doing well, but as we mentioned in our Intel Xeon Gold 6148 Benchmarks and Review The Go-To HPC Chip piece, Intel is nearly giving Omni-Path away. That makes its market share on this list not exactly compelling.

On the other end of the spectrum, Ethernet has a huge presence. That is because of a trend where companies are taking portions of large Internet hosting clusters, running Linpack, and submitting them for the Top500.

For high-performance, low-latency interconnects in HPC, Mellanox is still the leader. With HDR InfiniBand, Mellanox hit 200Gbps while Intel Omni-Path is still at 100Gbps. We were told Intel is not releasing OPA200 because it is doing some product soul-searching to get to a competitive feature set.

Mellanox ConnectX-6 at SC18

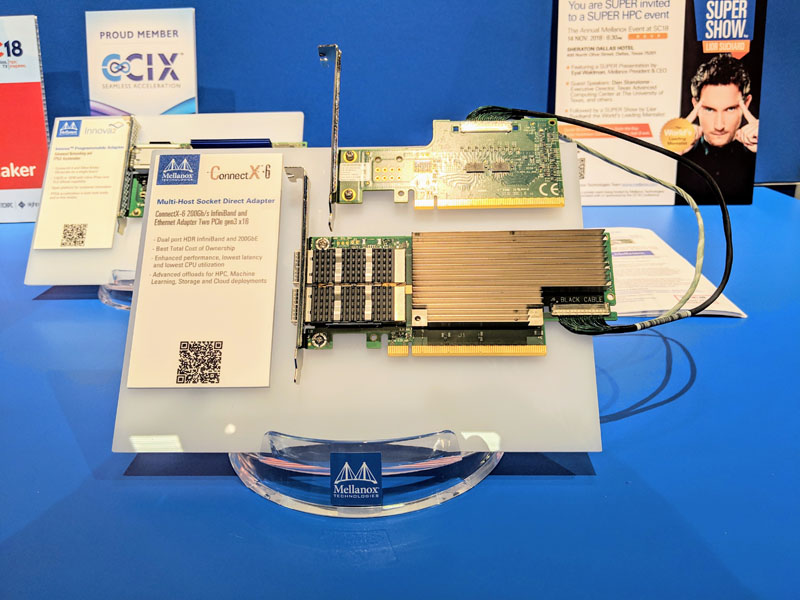

One of the Mellanox ConnectX-6 options is a multi-host socket direct adapter. You can see the main board with a second PCIe x16 board attached. Mellanox can take two PCIe 3.0 x16 slots and aggregate their bandwidth into a single host adapter. Instead of needing two separate cards, each NUMA node can attach directly to the fabric with this method. This is important for Intel Cascade Lake-SP, which will come out in a few months with PCIe Gen3.

Those who are looking for either 200GbE or HDR InfiniBand and have a PCIe Gen4 platform, e.g. AMD EPYC 2 Rome or IBM Power9, can use a single PCIe 4.0 x16 card.

This is one of the major reasons those who want more network bandwidth are clamoring for PCIe Gen4 (and Gen5). One will also note that we are seeing an ecosystem of parts where CCIX support is confusing, as we highlighted in the piece Xilinx Alveo U280 Launched Possibly with AMD EPYC CCIX Support.

Final Words

Moving up to 200Gbps interconnects requires either PCIe 3.0 x32 or PCIe 4.0 x16 slots. While the hyper-scale customers will look for 200GbE and the HPC customers will look more at HDR InfiniBand, the message is clear: faster interconnects are coming, and we need more PCIe bandwidth to support them. Mellanox ConnectX-6 is now the leader in the 200GbE and HDR InfiniBand markets, and others are catching up.
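For those who want to check the slot math, here is a rough back-of-the-envelope sketch. It only uses the published per-lane signaling rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and the 128b/130b line encoding both generations share. The function names are ours, purely for illustration, and the figures are an upper bound: packet headers and flow control reduce real-world throughput further.

```python
# Back-of-the-envelope PCIe link bandwidth versus a 200Gbps network port.
# Function names are illustrative only. The result ignores TLP/DLLP
# protocol overhead, so treat it as an upper bound on usable bandwidth.

# Per-lane signaling rate (GT/s) and line-encoding efficiency by generation.
PCIE_GENERATIONS = {
    3: (8.0, 128 / 130),   # PCIe 3.0: 8 GT/s, 128b/130b encoding
    4: (16.0, 128 / 130),  # PCIe 4.0: 16 GT/s, 128b/130b encoding
}


def pcie_link_gbps(gen: int, lanes: int) -> float:
    """Approximate one-direction link bandwidth in Gbps after line encoding."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[gen]
    return rate_gt_s * efficiency * lanes


def can_feed_port(port_gbps: float, gen: int, lanes: int) -> bool:
    """True if the link's encoded bandwidth is at least the port speed."""
    return pcie_link_gbps(gen, lanes) >= port_gbps


if __name__ == "__main__":
    for gen, lanes in [(3, 16), (3, 32), (4, 16)]:
        bw = pcie_link_gbps(gen, lanes)
        ok = can_feed_port(200.0, gen, lanes)
        print(f"PCIe Gen{gen} x{lanes}: ~{bw:.0f} Gbps, feeds 200Gbps port: {ok}")
```

A single PCIe 3.0 x16 slot tops out at roughly 126Gbps by this estimate, which is why a 200Gbps port needs either two Gen3 x16 slots or one Gen4 x16 slot.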

AMD will need some Mellanox ConnectX-6 PCIe 4.0 x16 adapters to be competitive with NVIDIA DGX-2H Now with 450W Tesla V100 Modules.