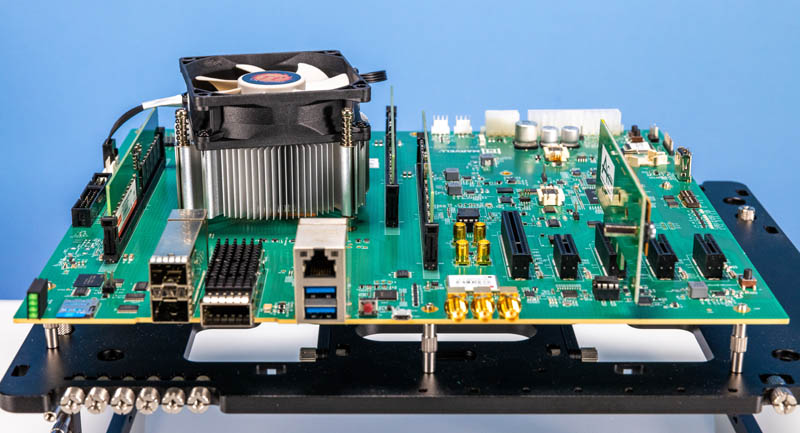

Today we get to take an exciting look at the Marvell Octeon 10 DPU. This is perhaps the product that we have wanted to see for a long time, and it is now here in the lab. It is in a bit of a different form factor than we are accustomed to, but after we saw the Marvell Octeon 10 at OCP Summit 2022 it was time to get to work.

Marvell Octeon 10 Arm Neoverse N2 DPU

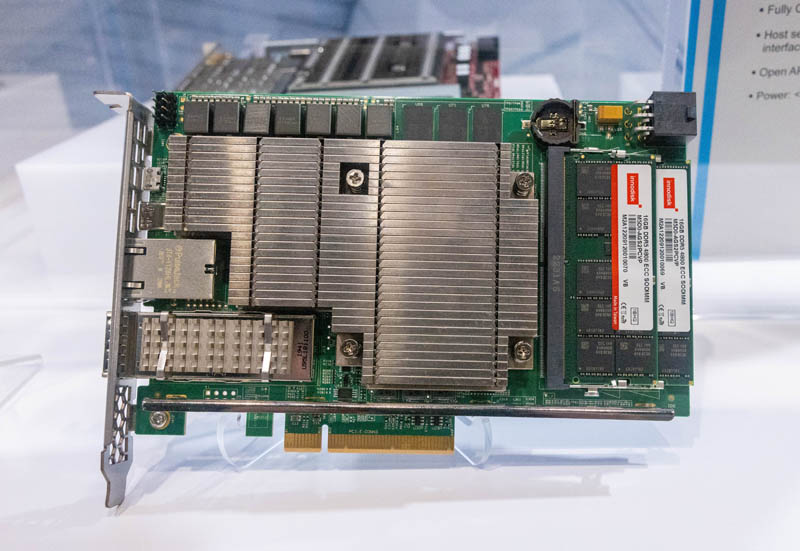

As a fun aside, when I heard Marvell was sending an Octeon 10 DPU, I thought we were going to get a PCIe card like we saw at OCP Summit 2022.

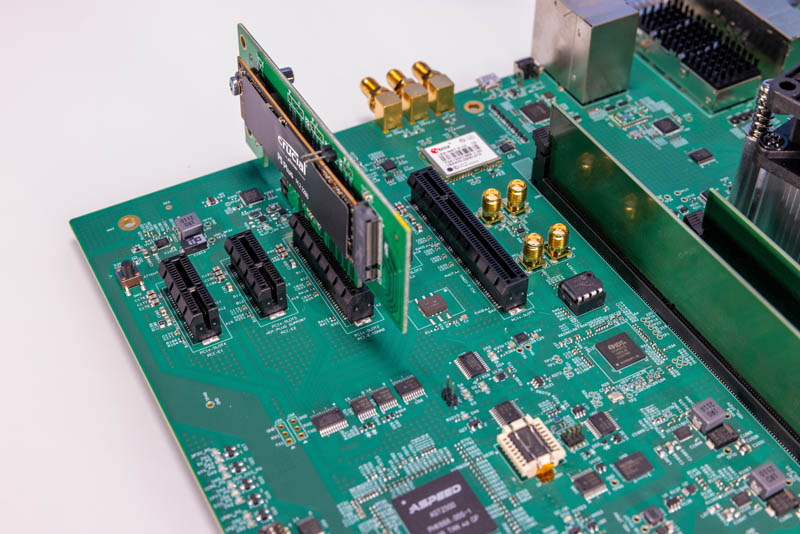

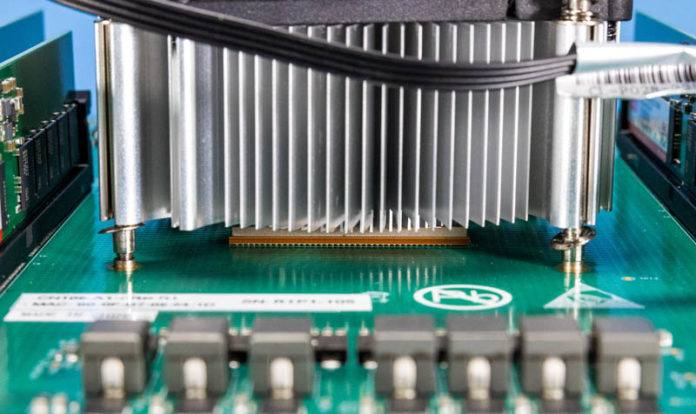

Imagine my shock at opening the box to find a motherboard like this!

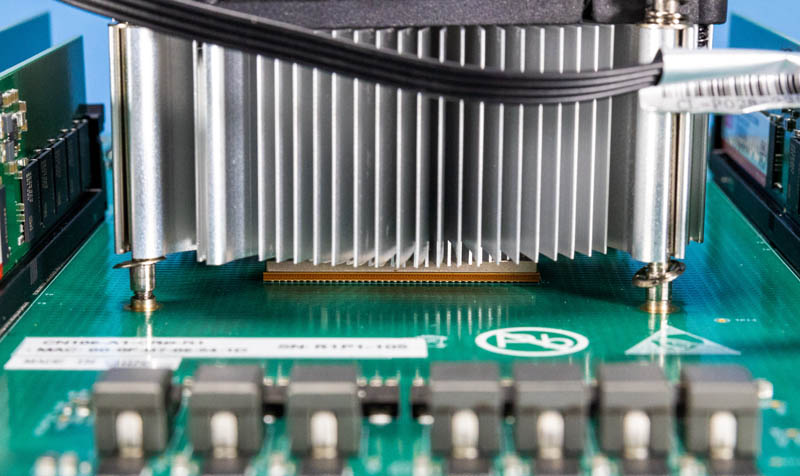

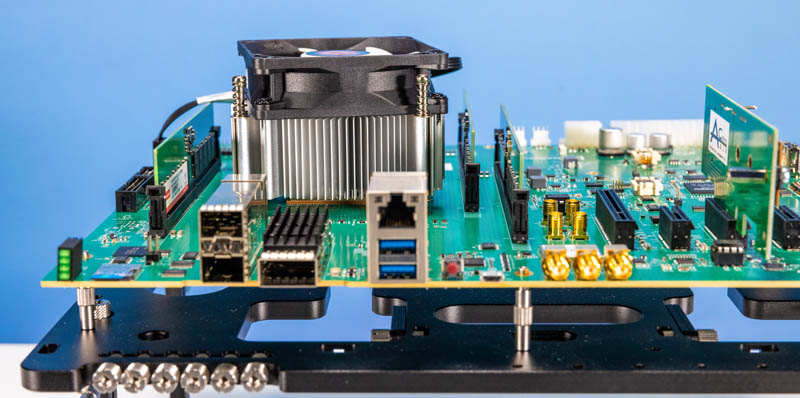

Underneath the large heatsink is the Marvell Octeon 10 DPU. This is a 24-core Arm Neoverse-N2 chip that we have been eagerly awaiting for over a year. The CORECLK on this is 2500MHz to balance performance and power consumption.

Prior experience has taught us not to play too much with CRBs, so here is a photo of a different Octeon 10 DPU CRB shown at OCP Summit 2022.

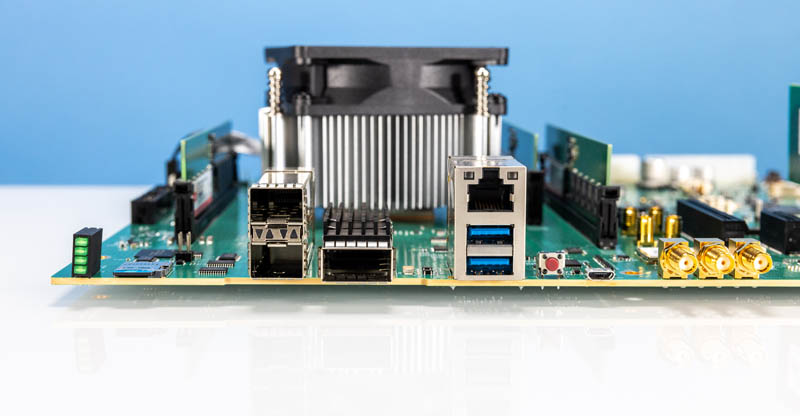

Taking a quick tour, we can see that the CRB is laid out similarly to a standard motherboard.

We have two SFP56 cages as well as a QSFP56 port. Marvell’s SKUs that we have seen thus far tend to use 50Gbps as their base networking speed, so we have 25/50GbE SFP56 ports and a 100/200GbE QSFP56 port. Marvell has other models with a built-in switch that can provide many more lanes of 50GbE than what we have here.

There is also an array of PCIe connectivity. In theory, the Octeon 10 DPU is a PCIe Gen5 device, but we have a lack of Gen5 endpoints at the moment. Instead, riding high above one of the PCIe slots is an M.2 SSD, a Crucial P5 Plus. At least the platform supports NVMe storage.

While that NVMe device may look funny at first, it is also very important. We have discussed that a key criterion for a DPU is the ability to serve as a PCIe root complex. That is exactly what this is showing, as the PCIe root for that M.2 NVMe SSD is the DPU. We often look at DPUs on PCIe cards, but this CRB is designed to also show device makers like 5G infrastructure providers and security appliance providers how this can be the basis for network platforms. If you recall our demo with the BlueField-2 DPUs, one could imagine the Octeon 10 providing NVMeoF, encryption, and compression services for networked storage with a number of storage drives connected. That is another use case for DPUs, and it is driving this form factor.

There is also an ASPEED AST2500 BMC. This is running OpenBMC and it has been a lifesaver. We have been using the serial-over-LAN capabilities extensively.

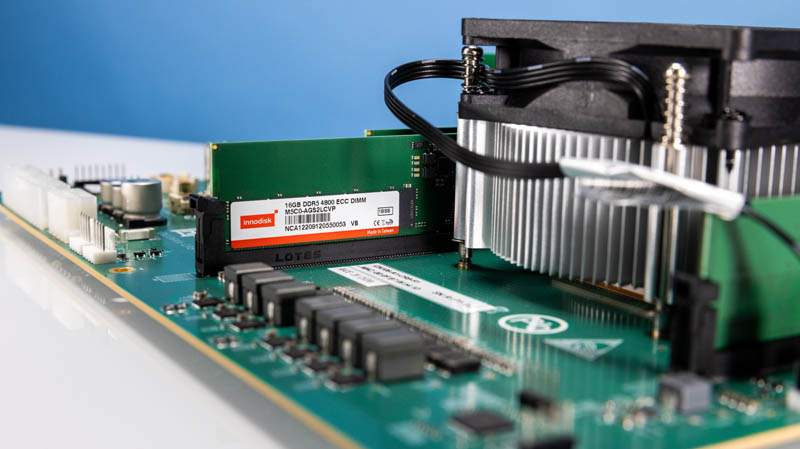

In terms of memory, one may notice three DIMM slots, one for each channel. High-bandwidth networking, a fast CPU, and accelerators mean that we need fast memory. The installed DIMMs are 16GB ECC DDR5 modules. We have not put 32GB or 64GB DIMMs in here. Memory bandwidth is a big deal. NVIDIA BlueField-1 is still in use even though BlueField-2 is out because the later model has a single memory channel that constrains performance. Marvell is going with DDR5, and the official documents on the platform aim for DDR5-5200 speeds. That is a lot of memory bandwidth.
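To put a rough number on that, here is a back-of-the-envelope sketch of theoretical peak memory bandwidth. It assumes each of the three channels carries 64 data bits (ECC bits excluded) and runs at the DDR5-5200 target; the function name is ours for illustration, and real-world throughput will be lower than this ceiling.

```python
def peak_bandwidth_gbs(transfer_rate_mts: int, channels: int,
                       bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (decimal gigabytes).

    Assumption: each channel moves `bus_width_bits` of data per transfer.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


# Three channels at the DDR5-5200 target mentioned above.
three_channel = peak_bandwidth_gbs(5200, channels=3)
# A single channel at the same speed, for comparison.
single_channel = peak_bandwidth_gbs(5200, channels=1)

print(f"3 channels: {three_channel:.1f} GB/s")
print(f"1 channel:  {single_channel:.1f} GB/s")
```

Under those assumptions, three channels work out to about 124.8 GB/s of theoretical peak, versus about 41.6 GB/s for a single channel at the same speed.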

With that, it is time to get the system set up and running.

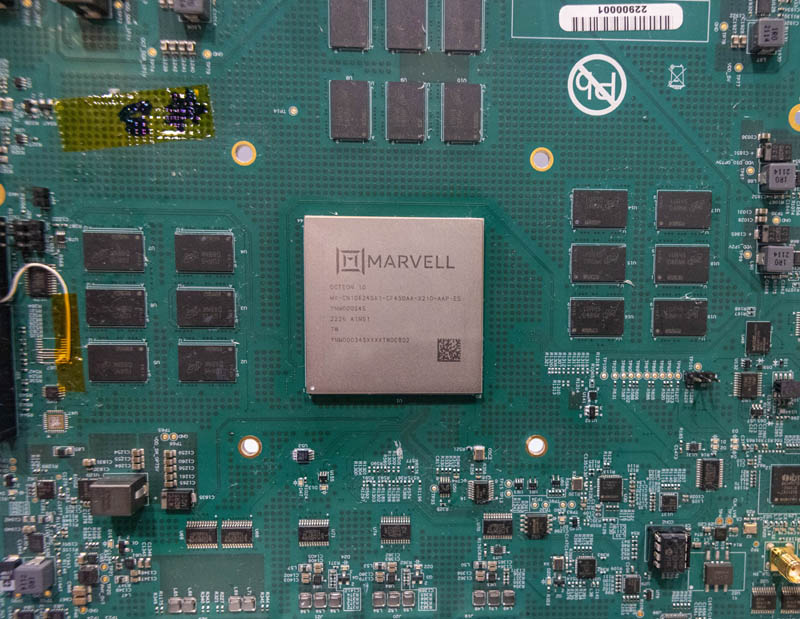

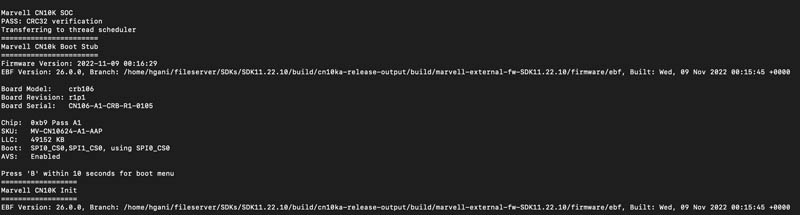

The procedure to start the system is that one first boots the BMC, then manually boots the DPU. This felt like starting some of the more unusual exotic cars. One of the first things we found as the system was booting was the model number. Marvell says “CN106XX” but the XX seems to stand for a core count. This is the MV-CN10624-A1-AAP.
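If the XX really is the core count, pulling it out of the model string is simple. This is only a sketch of our guess at the naming scheme, not anything from Marvell documentation, and `core_count_from_model` is a hypothetical helper.

```python
import re
from typing import Optional


def core_count_from_model(model: str) -> Optional[int]:
    """Return the two digits following 'CN106', read as a core count.

    Assumption: the 'XX' in CN106XX encodes the core count, as the
    model string we saw at boot suggests.
    """
    match = re.search(r"CN106(\d{2})", model)
    return int(match.group(1)) if match else None


print(core_count_from_model("MV-CN10624-A1-AAP"))
```

For the part in our CRB, this reads the "24" that matches the 24 cores we see in the OS.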

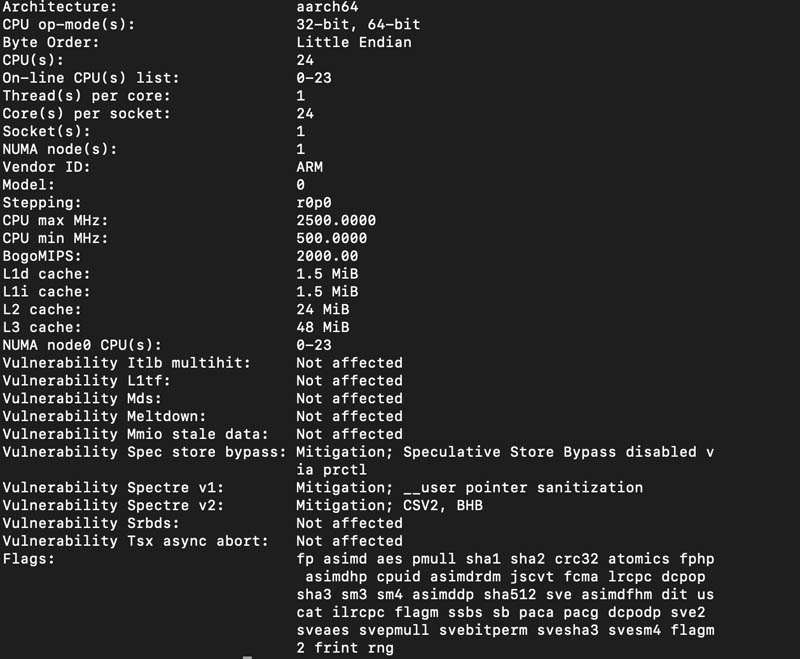

Next, we started the system and saw our new Arm Neoverse-N2 cores. This is the first time we have seen the new Arm IP in silicon.

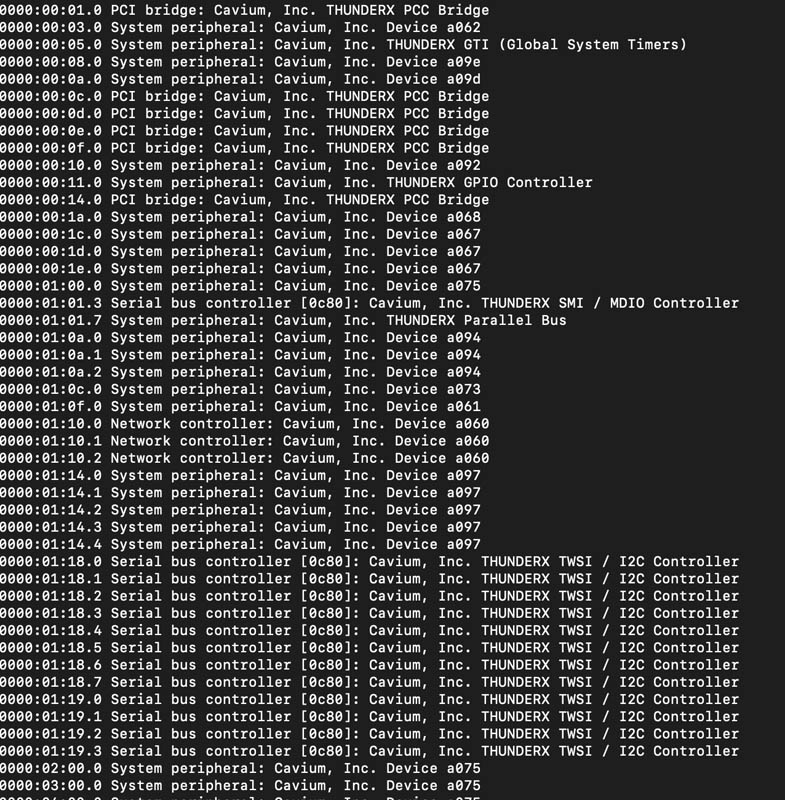

One of the other fun parts of checking out the new chip was seeing the Cavium/ThunderX IP. I spoke at the ThunderX2 launch and we tested the original ThunderX parts, so it was fun to see the throwback “THUNDERX” references.

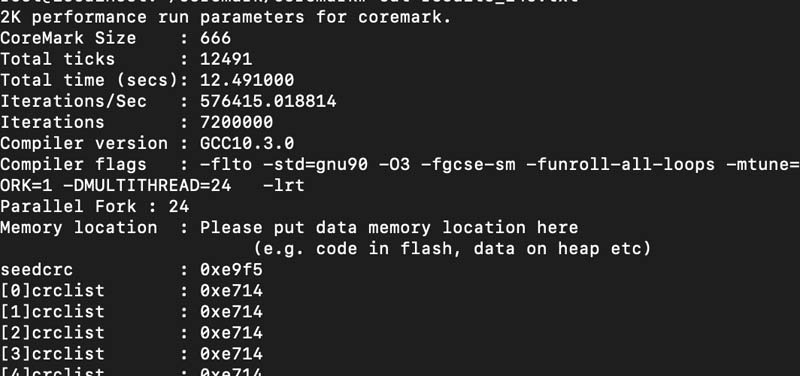

We are re-running numbers on other DPUs, but we figured we would tease at least one benchmark. Actually, the Pensando card is holding this process up. Still, here is a CoreMark result:

Just for some context, 576K here is roughly in line with a 20-core Intel Xeon Gold 6138. That is a fairly good result. We are getting a nice bump in core performance versus what we saw with Neoverse N1 parts like the 128-core Ampere Altra Max. CoreMark is a micro-benchmark that Arm processors typically perform very well in. For more context, a dual AMD EPYC 9654 system (192 cores/ 384 threads) is around 8 million, and it is fairly common to see a 128-core Ampere Altra Max score well north of 2.6M. Compared to modern desktop processors, this would be closer to an ~8-core AMD Ryzen 7000 or 13th Gen Intel Core.
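To make the core performance comparison concrete, here is a quick sketch that divides the approximate scores quoted above by physical core count. The inputs are the rounded figures from this article, not fresh measurements, and the comparison ignores clock speed, SMT, and power differences, so treat the output as a rough guide only.

```python
# Approximate CoreMark scores and physical core counts quoted above.
results = {
    "Marvell Octeon 10 (24x Neoverse N2)": (576_000, 24),
    "Ampere Altra Max (128x Neoverse N1)": (2_600_000, 128),
    "2x AMD EPYC 9654 (192 cores, SMT on)": (8_000_000, 192),
}


def per_core(score: float, cores: int) -> float:
    """Naive per-core score: total score divided by physical cores."""
    return score / cores


for name, (score, cores) in results.items():
    print(f"{name}: ~{per_core(score, cores):,.0f} CoreMark per core")
```

On these rounded numbers, the Octeon 10 lands at roughly 24K per core against roughly 20K for the Altra Max. Since Altra Max systems often score well above 2.6M, the real gap may be smaller than this suggests.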

This should be shocking to folks. Midrange 2017 Xeon performance is coming to PCIe cards on DPUs. We have the CRB, but this is awesome. What we will say ahead of our full results is that Marvell has the fastest DPU we have tested, by a notable margin.

Final Words

This has been a fun process. It is taking much longer than anticipated, but that is part of the fun with the new hardware. The new Intel, AMD, and even Ampere server platforms have been relatively boring. This is exciting. Perhaps the strangest part of testing the Marvell Octeon 10 DPU is that the vast majority of websites on the Internet could be hosted with decent performance on a chip destined for a PCIe card. Marvell has done a great job of combining new cores (Arm Neoverse-N2), new memory controllers (triple channel DDR5), new PCIe controllers (PCIe Gen5), and high-speed networking (50Gbps per link) into a single package, along with its array of accelerators.

At the same time, we want to point out the current state of affairs. The Marvell Octeon experience felt like using a ThunderX2 chip for the first time. Things work, but you get the feeling you are in a special environment. Contrast that to a modern Ampere Altra (Max) system where Ubuntu is simply installed from ISO. NVIDIA BlueField-2 DPUs have been as simple to use as plugging them into a PCIe slot, connecting networking, and logging in. It even works in Windows and allows you to create Inception-style demos in minutes. AMD Pensando is probably a step or two behind NVIDIA in ease of use and documentation. Marvell feels like a distant third. Intel still needs to show up with Mount Evans.

Marvell’s CRB feels a bit more like a very fast and very complex machine. Just some of the basic benchmarking we have done to date confirms the Arm Neoverse N2 cores are fast, and having 24 of them is akin to having a 2017-2018 era mid-range to higher-end Xeon onboard. It is quite amazing to think that the Octeon 10 is combining that level of integer performance with high-end networking, faster PCIe Gen5, and a TDP that is designed to fit on a single-slot PCIe card. Hopefully, this is part of the productization plan. What Marvell has will work well in the OEM market, but NVIDIA is voraciously going after the average developer, and at some point other vendors will either have to compete or be relegated to the exotic segments.

We still have a bit to go until our upcoming DPU roundup video, but it is awesome to see what may just be the top-end DPU in this generation with the Marvell Octeon 10. One thing is for sure, this chip is fast. Congratulations to the Marvell team on making this DPU a reality.

Stay tuned for a broader Octeon 10 deep dive on STH where we will look at things like the in-line crypto capabilities.

Someone should plug a DPU into one of those PCIe slots. A DPU for your DPU!

I think in the background of tomorrow’s video there is a Pensando DPU in this one.

@John imagine if the PCIe version had its own PCIe slot exposed where you could plug in another DPU into the DPU plugged into the DPU

I think that you will be quite happy with the out of the box experience on Mount Evans.

It fully supports Arm platform standards and is capable of just installing Ubuntu from an ISO.

Both BlueField and Intel DPUs focus on platform compliance quite a lot.

IDK, Patrick. Can it really be a DPU if it does not require an expensive host server to plug into?

I think this is a fake FPU: it is in fact a PC with very unbalanced IO.

arm seems to be slouching towards producing more mid range appliances which is fantastic – will they be able to keep momentum and gain a foothold with volume is another issue – the fast networking support and nvme is key and we see this creeping to sbc mkt as well as these niche higher end prototype boards – what would red shirt jeff do with this is the only unanswered question. #dawg #Matryoshka dolls

We did that no-host server use case with the BlueField-2 DPUs https://www.servethehome.com/zfs-without-a-server-using-the-nvidia-bluefield-2-dpu-nvme-arm-aic-iscsi/

That is a big point/ feature of DPUs.

DPUs don’t make sense to me. They seem like some weird offshoot of the industry that solely exists because x86 manufacturers especially Intel was lacking in real improvement and up-to-date I/O. Putting a computer in a computer just weakens systemwide integration gains. At least that’s how I see it but I would be happy to be proven wrong.

I see more reason in other new exotic technologies like CXL and NVMEoF

One question: What the heck does “CRB” stand for?

CRB is Customer Reference Board

@Patrick Kennedy

Ah, that makes sense. Thanks. B-)

How do I get hold of one of these boards?