At Intel Innovation 2022, the company showed off its new Ponte Vecchio GPU in a slightly different form factor. We have seen the bare Intel Ponte Vecchio GPU Packages at Vision 2022. Now, the chips are being shown packaged as they are (finally) starting to make their way to Aurora.

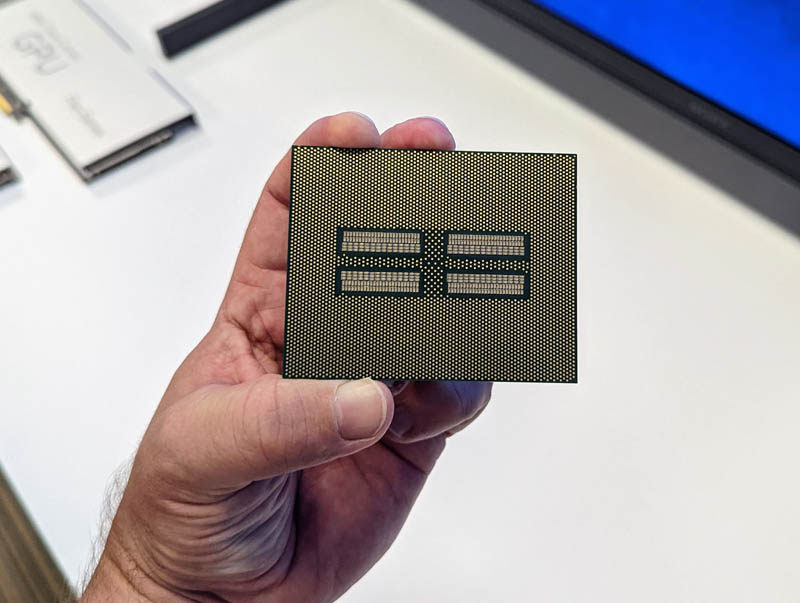

Intel Ponte Vecchio Liquid Cooled OAM Package at Innovation 2022

At the same event where we saw the Intel Arc A770, we saw the Intel Ponte Vecchio GPU. This one was packaged in a form that looks ready to be mounted on an OAM module with cooling attached.

Here is the bottom side of Ponte Vecchio with the pads exposed.

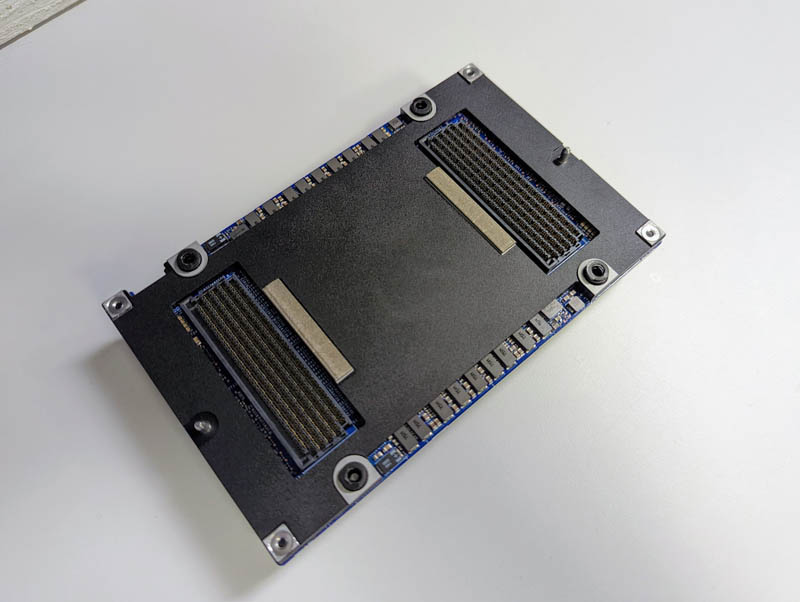

Aside from the package itself, the company also had an OAM module with the new chip and its liquid cooling solution. Here one can see the block with nozzles for coolant flow. The new GPUs draw enough power that liquid cooling removes their heat far more efficiently than air cooling, and the resulting power savings make the switch worthwhile.

We have been showing off liquid cooling on STH for some time, and we did an entire liquid cooling system build, with all of the components, in How Liquid Cooling Servers Works with Gigabyte and CoolIT.

If you are not familiar with liquid cooling in data centers, that piece should help explain what is going on and why. That demonstration used lower-power CPUs; the more power and heat devices generate, the more important liquid cooling becomes.

Here is the OAM module’s bottom side. One can see the two connectors that mate to the baseboard. OAM modules carry both power and data signals through these pins.

If you want to learn more about OAM, see our earlier piece, Facebook OCP Accelerator Module OAM Launched.

Final Words

It is great to see the new module ready to package up. We expect Ponte Vecchio’s 2022 volume to go toward Aurora’s production, so perhaps this module is one that we will see more of in 2023.

What I will say is that, despite the module looking light, it was a lot heavier than I expected when I picked it up at Innovation 2022. The cold plate inside feels like it is made of copper.

Unfortunately, those OAM module connectors are more costly and slow down the bus more than simple pads do (transitions/reflections). We had seen that with Itanium, too. Interesting, thank you!

Funnily enough, I work on those… Beefy boys.

The connectors on that cooler look like basic barb designs; they definitely get the job done but offer none of the conveniences of (admittedly more expensive and complex) quick-disconnect couplers.

Is the trend so far with liquid cooling that the vendor does whatever they deem suitable within a given chassis (presumably influenced by what has economies of scale, but not bound by any official or de facto standard), while the connectors at the chassis boundary are meandering toward some sort of standardization? Or is it currently the case that you are pretty much buying into a given vendor’s cooling system up to the rack level, at which point there is either a back-of-rack radiator or enough room for plumbing to connect to facility water?

Has there been any interest in coupler standardization within the chassis? Or is that considered fiddly enough for anyone operating servers at scale, rather than just messing around with an overclocked gaming rig, that the reliability benefit of making all the internal plumbing the integrator’s problem is seen as more valuable than the reconfiguration benefit of easily using standardized fittings?

Is there any interest in an intermediate position, e.g. an arrangement where the integrator populates the parts of the loop that are ubiquitous and widely shared (CPU sockets, certain common GPU or accelerator cards) but leaves quick-connect fittings, just as they currently leave extra 12V connectors, to suit customer-option cards that are a little too uncommon to be stock catalog items?

Having dealt with some amateur liquid loops in my time, I can absolutely see the virtue of letting the professionals handle that. But I can also see the virtue of being able to add high-TDP parts that my integrator either didn’t offer at order time or that simply didn’t exist at order time, and the virtue of enough standardization that most of the loop can still be handled by professionals even if I need to shoehorn an Intel OAM package or an AMD GPU or something into a given brand of server, without needing adapters in the loop that add points of failure and constrict flow.

I assume it will be messier than +12V connectors no matter what; plumbing is just a pain like that. But I’m not clear on whether liquid cooling standardization is something people are working toward, albeit perhaps slowly, or whether it seems likely to end up alongside HDD caddies in the pile of relatively simple tech that is deliberately and pointedly incompatible because vendors prefer it that way.

nice