At Hot Chips 2023, Intel detailed major changes coming to its Xeon CPU line. Namely, it is changing how it architects and builds its chips. Years ago we discussed SoC Containerization: A Future Intel Methodology, and this is a big step in that direction. We even get PCH-less motherboards!

Since we are covering this live from the auditorium, please excuse any typos. Hot Chips moves at a crazy pace.

Intel on Changing its Xeon CPU Architecture at Hot Chips 2023

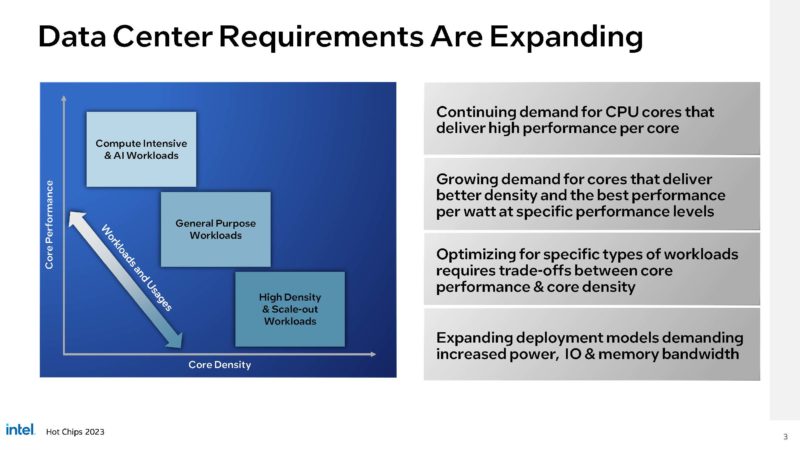

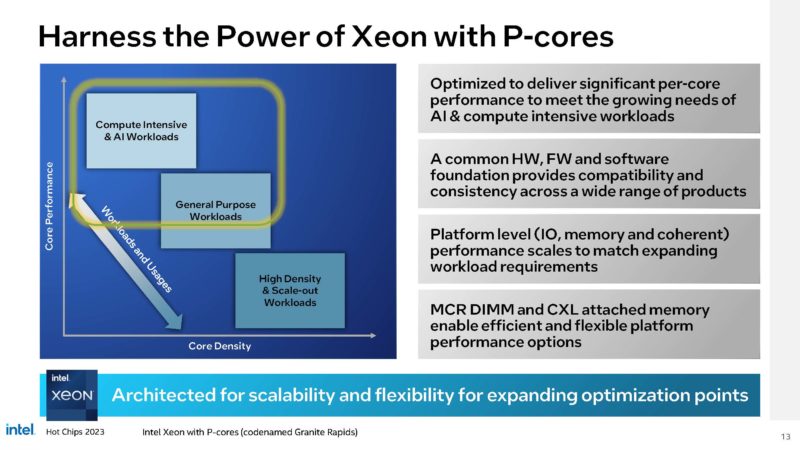

In the data center CPU market, there are now workloads large enough to justify more than one CPU design. In the current generation, Intel has its mainstream Sapphire Rapids, Xeon EE, and Xeon Max. Still, Intel needs more customization.

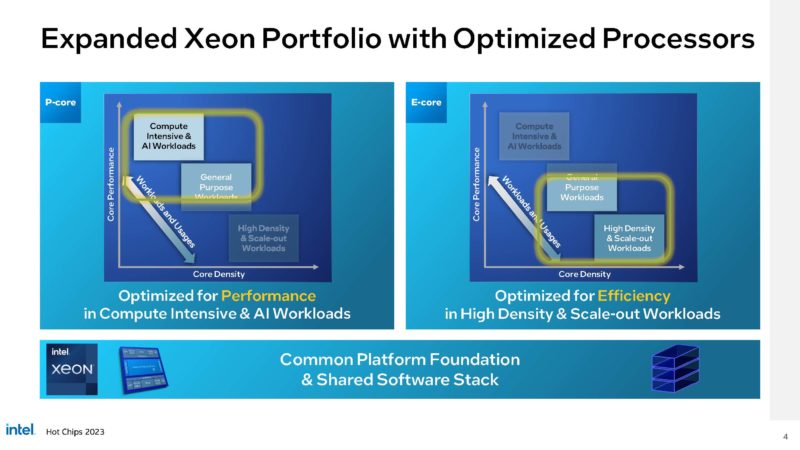

Intel’s immediate goal is to extend its lineup to processors optimized for cloud workloads that are less focused on high-performance vector computing. This mirrors how Arm has been targeting this cloud-native market, and how AMD addressed it this year by introducing AMD EPYC Bergamo as part of a four-CPU-family 4th Gen AMD EPYC portfolio.

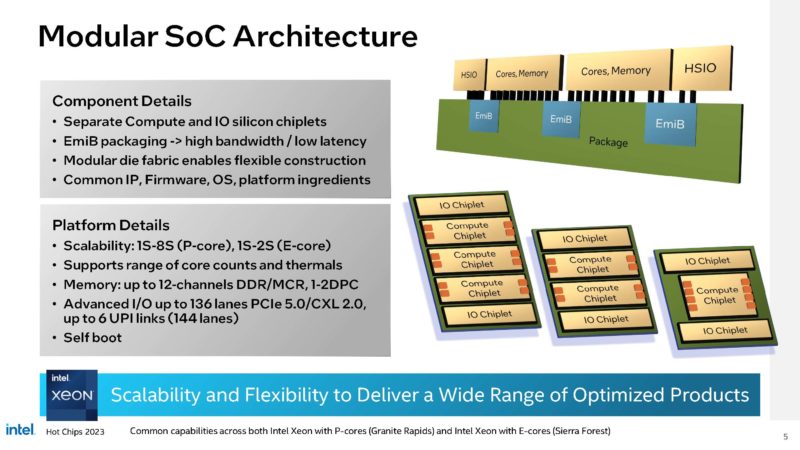

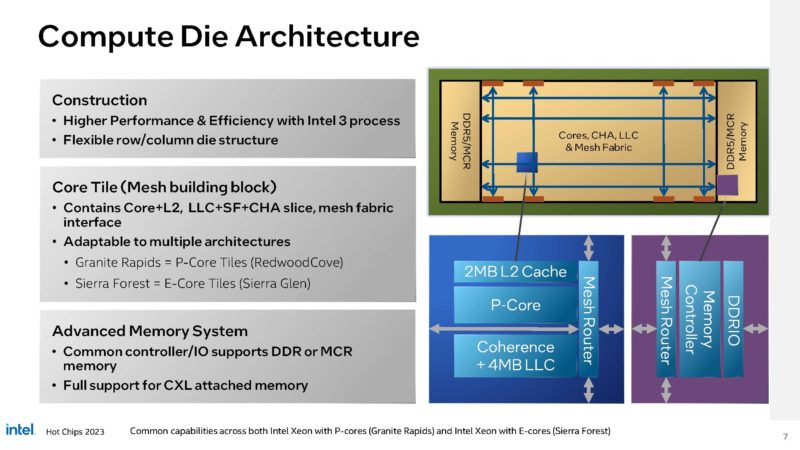

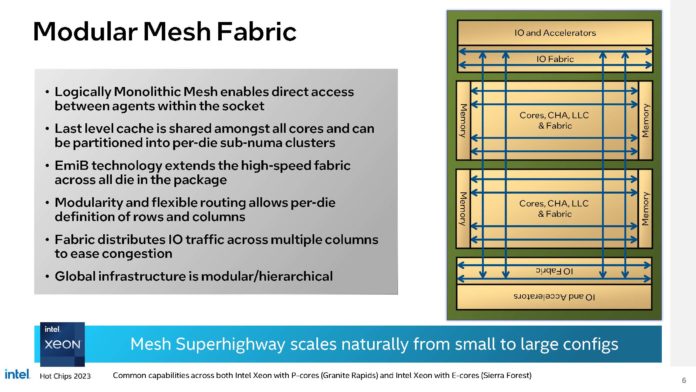

Intel is taking a different approach. It plans to use compute chiplets, somewhat like AMD, but from what it seems, Intel is keeping the memory controllers on the compute tiles. I/O chiplets for interfaces like CXL and PCIe then sit at the package edges, and EMIB ties the compute tiles and their memory to the high-speed I/O.
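To make that layout concrete, here is a hypothetical Python model of such a package: compute tiles that carry their own memory controllers, I/O tiles at both edges carrying PCIe, CXL, and UPI, and EMIB links joining adjacent tiles. The tile counts (three compute tiles, two I/O tiles), the four memory channels per compute tile, and every class and function name are assumptions for illustration, not a confirmed Intel floorplan.

```python
from dataclasses import dataclass, field

# Hypothetical model of a modular Xeon package. Tile counts, channel
# counts, and names are illustrative assumptions, not Intel's floorplan.

@dataclass
class ComputeTile:
    name: str
    core_type: str          # e.g. "P-core" or "E-core"
    memory_channels: int    # memory controllers live on the compute tile

@dataclass
class IOTile:
    name: str
    interfaces: tuple[str, ...]

@dataclass
class Package:
    compute: list[ComputeTile]
    io: list[IOTile]
    emib_links: list[tuple[str, str]] = field(default_factory=list)

    def total_memory_channels(self) -> int:
        # Memory scales with the number of compute tiles, since each
        # compute tile brings its own controllers.
        return sum(t.memory_channels for t in self.compute)

    def neighbors(self, tile_name: str) -> list[str]:
        # Tiles directly reachable from tile_name over a single EMIB link.
        return [b if a == tile_name else a
                for a, b in self.emib_links if tile_name in (a, b)]

def linear_package(n_compute: int, channels_per_tile: int, core_type: str) -> Package:
    """I/O tiles at both package edges, compute tiles in a row between them."""
    compute = [ComputeTile(f"C{i}", core_type, channels_per_tile) for i in range(n_compute)]
    io = [IOTile(name, ("PCIe", "CXL", "UPI")) for name in ("IO-left", "IO-right")]
    order = ["IO-left"] + [t.name for t in compute] + ["IO-right"]
    links = list(zip(order, order[1:]))  # each adjacent pair joined by EMIB
    return Package(compute, io, links)

pkg = linear_package(n_compute=3, channels_per_tile=4, core_type="P-core")
print(pkg.total_memory_channels())  # 12
print(pkg.neighbors("C1"))          # ['C0', 'C2']
```

Swapping `core_type` to `"E-core"` while keeping the same I/O tiles is the kind of mix-and-match this modular approach is meant to enable.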

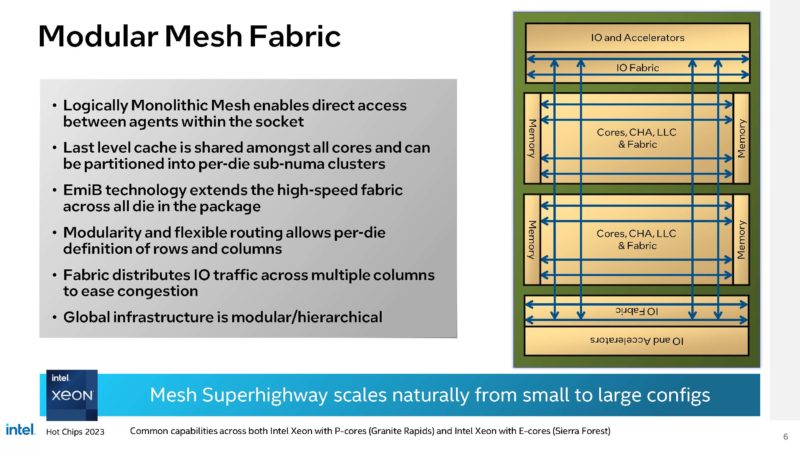

Intel’s Modular Mesh Fabric has to span not just a single compute tile but multiple compute tiles, which means it has to cross EMIB between tiles. Each die will have its own power management, but there will be one primary controller. That way, each tile can manage its own power.
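A primary controller coordinating per-die controllers can be sketched as a two-level hierarchy. In this minimal Python sketch, the primary splits a package power budget across dies and each die enforces its own limit locally. The proportional-to-demand policy, the wattages, and all class names are hypothetical illustrations, not Intel's actual power management algorithm.

```python
# Hypothetical two-level power management sketch. The allocation policy
# (scale every die by the same factor when over budget) is an assumption.

class DieController:
    def __init__(self, name: str, demand_w: float):
        self.name = name
        self.demand_w = demand_w  # power the die would like to draw
        self.limit_w = 0.0        # limit assigned by the primary controller

    def set_limit(self, limit_w: float) -> None:
        self.limit_w = limit_w

    def power_draw(self) -> float:
        # Local management: the die never exceeds its assigned limit.
        return min(self.demand_w, self.limit_w)

class PrimaryController:
    def __init__(self, package_budget_w: float, dies: list[DieController]):
        self.budget_w = package_budget_w
        self.dies = dies

    def rebalance(self) -> None:
        total_demand = sum(d.demand_w for d in self.dies)
        if total_demand <= self.budget_w:
            # Enough headroom: every die gets what it asks for.
            for d in self.dies:
                d.set_limit(d.demand_w)
        else:
            # Over budget: scale every die's share by the same factor.
            scale = self.budget_w / total_demand
            for d in self.dies:
                d.set_limit(d.demand_w * scale)

    def package_power(self) -> float:
        return sum(d.power_draw() for d in self.dies)

dies = [DieController("compute0", 150.0),
        DieController("compute1", 150.0),
        DieController("io0", 50.0)]
primary = PrimaryController(package_budget_w=280.0, dies=dies)
primary.rebalance()
print(round(primary.package_power(), 1))  # 280.0
```

The appeal of this split is that the primary only exchanges budgets with each die, while fast local decisions stay on the die itself.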

On the compute die, Intel can use either P-cores, such as Redwood Cove in Granite Rapids, or Sierra Glen E-cores, as in Sierra Forest. The orange lines on the compute die below are the die-to-die connections.

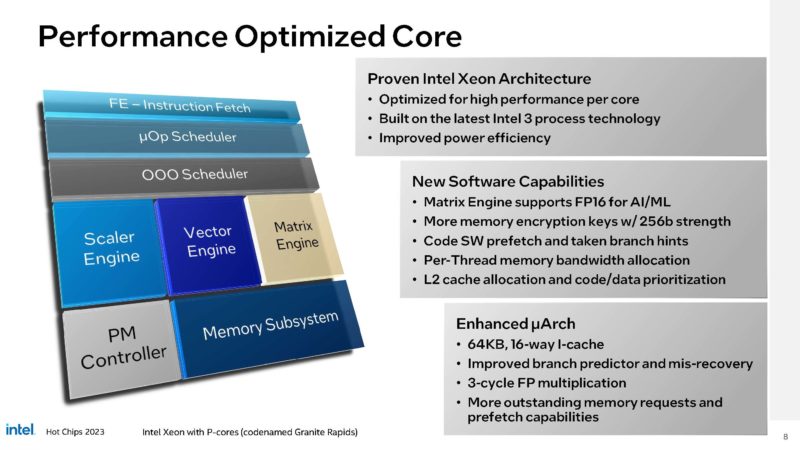

The Redwood Cove P-core in Granite Rapids will be built on Intel 3 and brings new features. It is derived from the Sapphire Rapids core but gets a two-node process jump as well as better power management. There are also many other improvements, such as an instruction cache that doubles in size to 64KB.

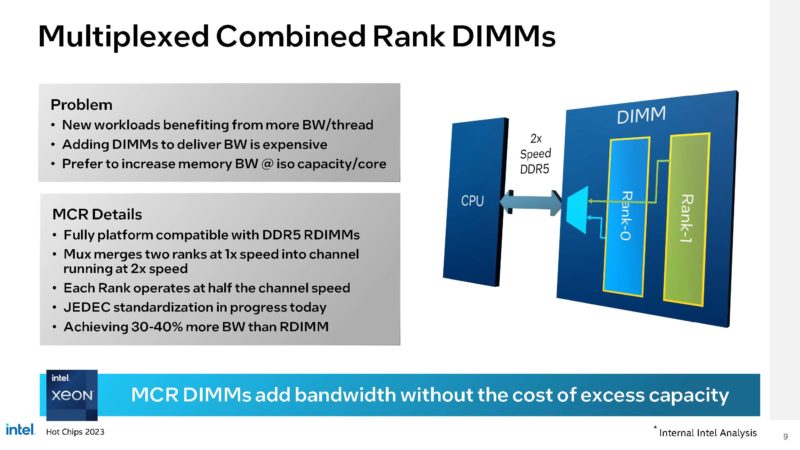

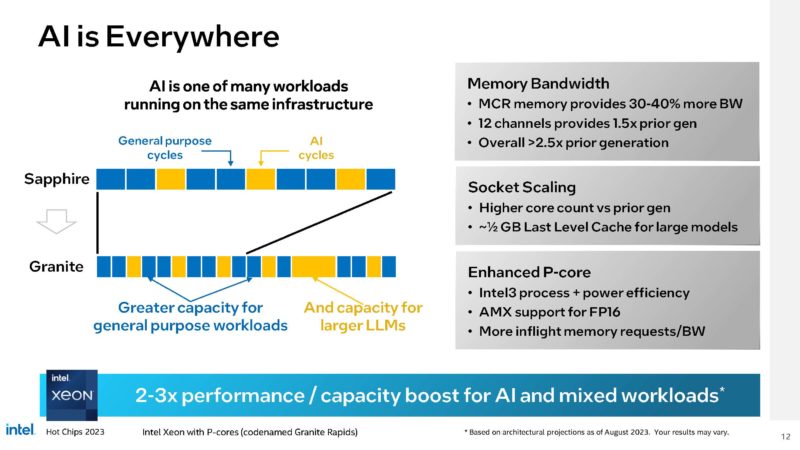

Granite Rapids will also support new features like MCR DIMMs. Intel told us that Granite Rapids with MCR DIMMs can deliver more memory bandwidth than the current-generation, HBM-equipped Xeon Max. MCR DIMMs will run at 8800MT/s, with each rank running at half that rate. With MCR DIMMs across 12 channels, Intel will be at 2.5x the memory bandwidth of Sapphire Rapids.
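The bandwidth claim is easy to sanity-check with back-of-the-envelope arithmetic. This Python sketch computes theoretical peak DRAM bandwidth, assuming an 8-byte (64 data bit) path per channel with ECC excluded, and using Sapphire Rapids with 8 channels of DDR5-4800 as the baseline. On paper the peak ratio works out to 2.75x; sustained bandwidth is always below peak, so treat these as upper bounds rather than a check on Intel's figure.

```python
# Back-of-the-envelope peak DRAM bandwidth. Assumptions: 64 data bits
# (8 bytes) per channel per transfer, ECC excluded, SPR at DDR5-4800.

BYTES_PER_TRANSFER = 8  # DDR5 channel: two 32-bit subchannels of data

def peak_gbps(channels: int, mt_per_s: int) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes/s)."""
    # channels * MT/s * bytes = MB/s; divide by 1000 for GB/s
    return channels * mt_per_s * BYTES_PER_TRANSFER / 1000

spr = peak_gbps(channels=8, mt_per_s=4800)       # Sapphire Rapids, DDR5-4800
gnr_mcr = peak_gbps(channels=12, mt_per_s=8800)  # Granite Rapids, MCR DIMMs

# MCR DIMMs combine two ranks, each running at half the interface rate.
per_rank_mt = 8800 // 2

print(f"SPR peak:      {spr:.1f} GB/s")        # 307.2 GB/s
print(f"GNR MCR peak:  {gnr_mcr:.1f} GB/s")    # 844.8 GB/s
print(f"Peak ratio:    {gnr_mcr / spr:.2f}x")  # 2.75x
print(f"Per-rank rate: {per_rank_mt} MT/s")    # 4400 MT/s
```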

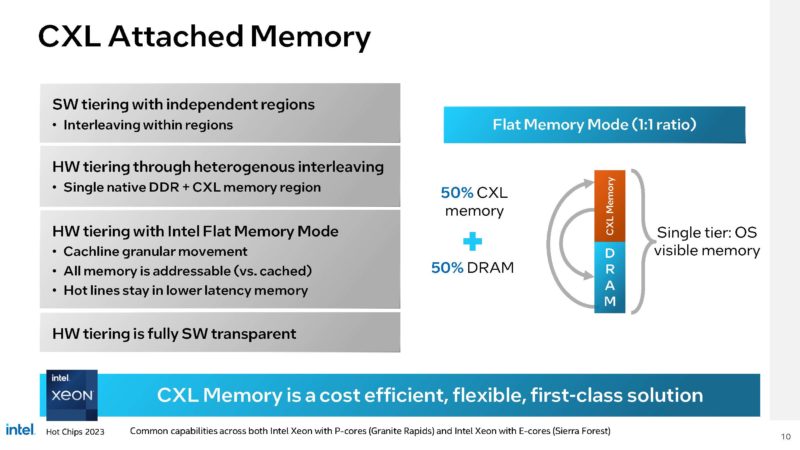

We also expect more focus on CXL Type-3 memory devices. CXL memory expanders can work with Sapphire Rapids, but Intel does not officially support them on that platform.

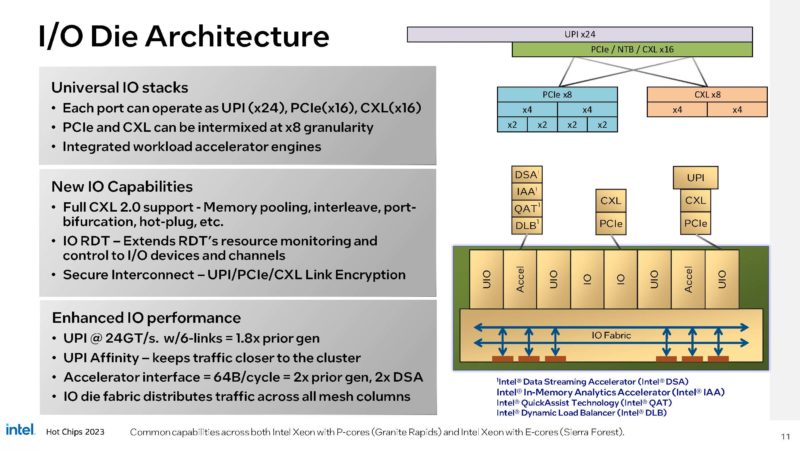

On the I/O die, Intel has UPI, PCIe, and CXL. This means we can expect Intel’s 2024 lineup to support new features like CXL 2.0. We asked about PCIe support, and these will still be PCIe Gen5 parts. The controller can be bifurcated as all x16 CXL, all x16 PCIe, or x8 CXL plus x8 PCIe.
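The three bifurcation modes can be expressed as a small lookup. This hypothetical Python sketch checks a requested lane split against the modes described in the talk and estimates raw PCIe Gen5 bandwidth per link. The helper names are ours, and any split the talk did not list is treated as unsupported, which may be stricter than the real hardware. The bandwidth figure uses 32 GT/s per lane with 128b/130b encoding, before packet and protocol overhead.

```python
from collections import Counter

# Hypothetical sketch of the three lane splits described for one x16
# controller. Unlisted splits are treated as unsupported (an assumption).

ALLOWED_SPLITS = [
    [("CXL", 16)],              # all x16 CXL
    [("PCIe", 16)],             # all x16 PCIe
    [("CXL", 8), ("PCIe", 8)],  # x8 CXL + x8 PCIe
]

def is_valid_split(links: list[tuple[str, int]]) -> bool:
    """True if the (protocol, width) links match an allowed mode,
    regardless of the order in which they are listed."""
    requested = Counter(links)
    return any(Counter(mode) == requested for mode in ALLOWED_SPLITS)

def pcie5_gbps_per_direction(width: int) -> float:
    """Raw PCIe Gen5 bandwidth in GB/s per direction: 32 GT/s per lane,
    128b/130b line encoding, before packet/protocol overhead."""
    return 32 * width * (128 / 130) / 8

print(is_valid_split([("PCIe", 8), ("CXL", 8)]))    # True
print(is_valid_split([("CXL", 8), ("CXL", 8)]))     # False: not a listed mode
print(f"{pcie5_gbps_per_direction(16):.1f} GB/s")   # 63.0 GB/s
print(f"{pcie5_gbps_per_direction(8):.1f} GB/s")    # 31.5 GB/s
```

Comparing multisets with `Counter` makes the check order-independent, so `x8 PCIe + x8 CXL` matches the same mode as `x8 CXL + x8 PCIe`.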

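Intel also lists DSA (Data Streaming Accelerator), IAA (In-Memory Analytics Accelerator), QAT (QuickAssist Technology), and DLB (Dynamic Load Balancer) as accelerators on this slide.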

Granite Rapids also brings more AI capability and bigger caches. Intel says it will offer up to 0.5GB of last-level cache, up from just over 100MB today.

Here is the Granite Rapids summary slide:

This is all very cool.

Final Words

All we can say is FINALLY. It is great to see Intel start taking a more modular approach. Intel needs to move to chiplets aggressively to support the full vision of IDM 2.0 and to combat offerings like Arm CSS (another topic at Hot Chips), which we are going to cover next on STH. The modular approach should also help de-risk future large server CPU designs while allowing for more customization.

These are some big disclosures on the next (or next-next?) generation Intel Granite Rapids Xeon as well as the E-core Sierra Forest. Intel has another talk slot at Hot Chips 2023 specifically for E-cores and Sierra Forest, so stay tuned for a few hours.

Does Sierra Forest get the same MCR DIMM, CXL 2.0, and DSA improvements?

Will the E-core version be limited to 1 thread per core, for all cores, no core with dual threads?

@JayN Sierra Forest should support MCR DIMMs because Sierra Forest is socket compatible with Granite Rapids. Sierra Forest should support CXL 2.0 and the same 2x Data Streaming Accelerator (DSA) performance improvement as Granite Rapids because Sierra Forest uses the same I/O chiplet as Granite Rapids and the I/O chiplet is where this hardware is located.

@Park McGraw I’ve read that the efficiency cores in Sierra Forest, called Sierra Glen, do not support hyperthreading, unlike the performance cores in Granite Rapids. Even without hyperthreading, it is possible to run as many operating system threads as you want on one efficiency core. But that is different than simultaneous multi-threading, which was probably your question.

I am wondering if there will be a High Bandwidth Memory (HBM) version of Granite Rapids, like there is for Sapphire Rapids, called Xeon Max. With 3 compute chiplets in Granite Rapids, I would expect 6 HBM stacks, with 2 HBM stacks connected to each compute chiplet. If Intel allows one bad HBM die in an HBM stack of 8 or 12 die with 3 GBytes/die, that would be a total HBM of 6x7x3 = 126 GBytes or 6x11x3 = 198 GBytes of HBM. This is roughly 2 or 3 times more HBM capacity than Sapphire Rapids. Increased HBM capacity and bandwidth would be very useful for large language models.