At Hot Interconnects 2021, Intel had a keynote focused on CXL. CXL is short for Compute Express Link and is a technology coming to servers in 2022. This is a big deal as it will fundamentally change the relationship between components and memory in servers, and allow new technologies in the data center.

STH CXL Background

We have covered CXL on STH a few times. Most recently, in our Planning for Servers in 2022 and Beyond series, we covered it as a Top 11 technology for 2022.

We also have a Compute Express Link or CXL What it is and Examples piece. Here is the video.

If you read or watched those, many of the concepts from the keynote will seem familiar.

Intel Hot Interconnects 2021 CXL Keynote Coverage

The slides for this keynote are very good. We are going to omit a few of them that are the standard slides around new computing models, the explosion of data creation, and so forth. If you read STH, you have seen hundreds of these slides over the years.

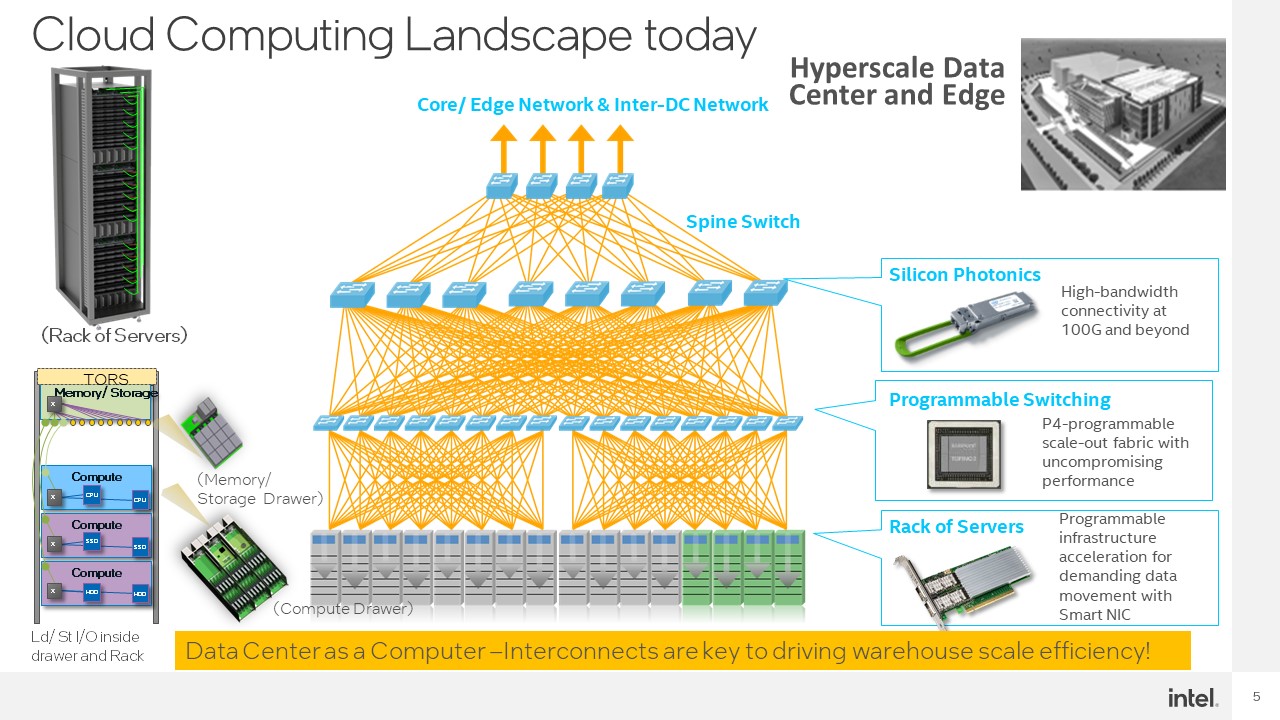

The first substantive slide is around the current cloud computing landscape. Here Intel is showing its silicon photonics products (which we covered at Intel Labs Day 2020, as an example) and also the P4 switching that came with Intel’s Barefoot acquisition. Here Intel still has the smart NIC but is discussing how server racks are built.

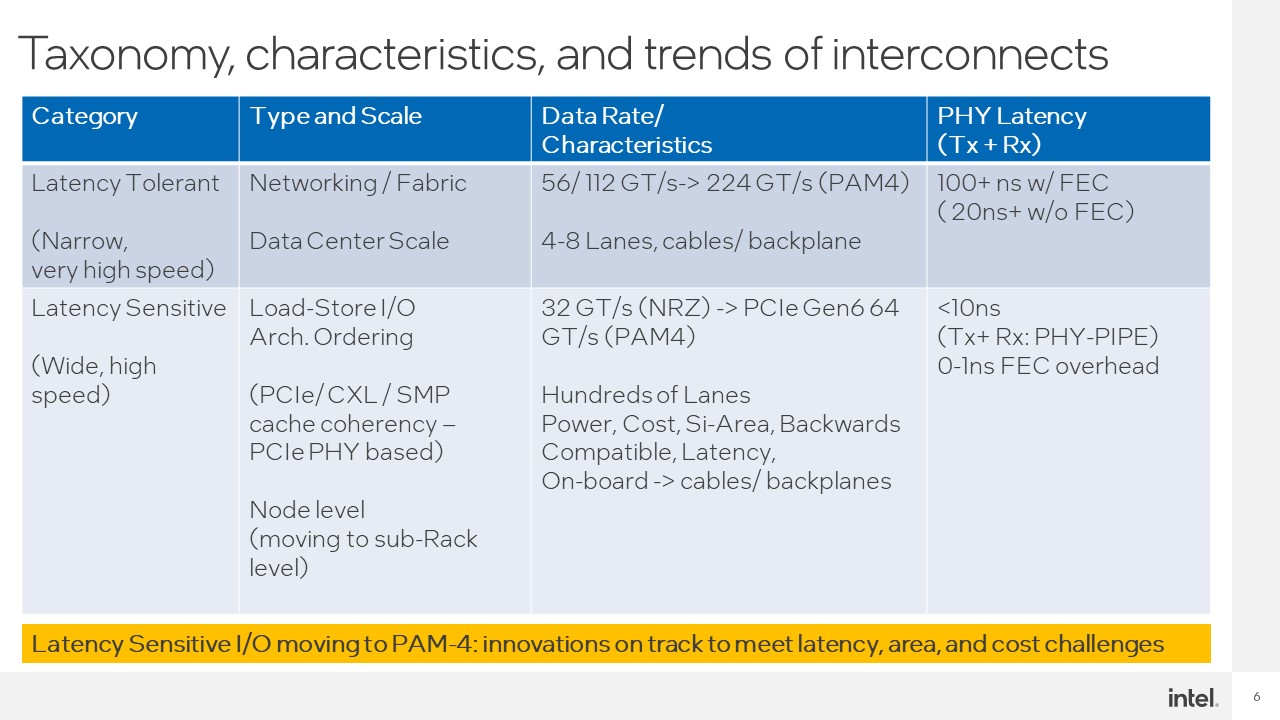

Intel was also discussing the difference between the interconnects that scale across data centers and those focused more inside the node or close to the node in a rack. The further the reach, the higher the latency.

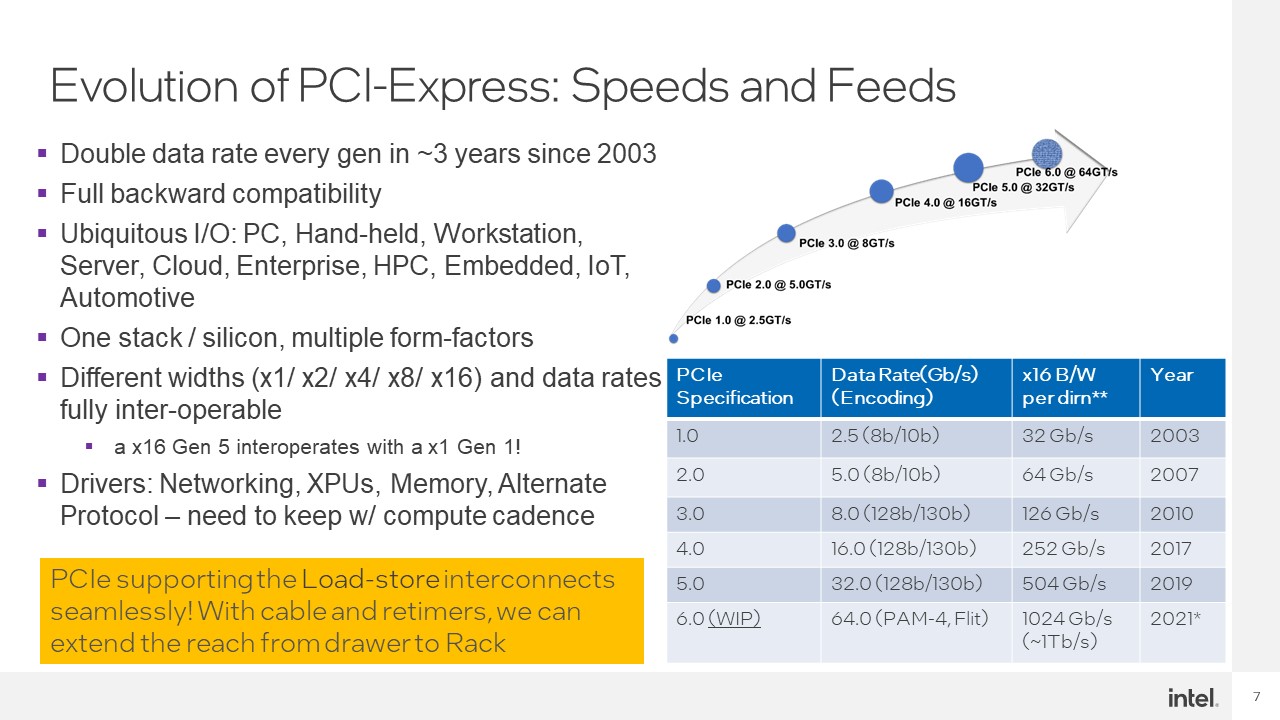

Intel also discussed PCIe Gen6 hitting around 1Tbps of bandwidth in an x16 slot, and how PCIe has become ubiquitous in the server industry.
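To see where the roughly 1Tbps figure comes from, here is a minimal sketch of the arithmetic. It uses only the raw per-lane signaling rates of each PCIe generation and ignores encoding, FLIT, and FEC overhead, so the numbers are upper bounds per direction rather than delivered throughput. The function and table names are ours, for illustration only.

```python
# Raw per-lane signaling rates in GT/s for each PCIe generation.
PCIE_GT_PER_LANE = {3: 8, 4: 16, 5: 32, 6: 64}


def raw_link_gbps(gen: int, lanes: int = 16) -> int:
    """Raw one-direction link rate in Gbps, ignoring protocol overhead."""
    return PCIE_GT_PER_LANE[gen] * lanes


for gen in sorted(PCIE_GT_PER_LANE):
    gbps = raw_link_gbps(gen)
    # Divide by 8 to convert gigabits to gigabytes.
    print(f"PCIe Gen{gen} x16: {gbps} Gbps raw per direction (~{gbps / 8:.0f} GB/s)")
```

For Gen6, 64GT/s across 16 lanes works out to 1024Gbps raw in each direction, which is the "around 1Tbps" number.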

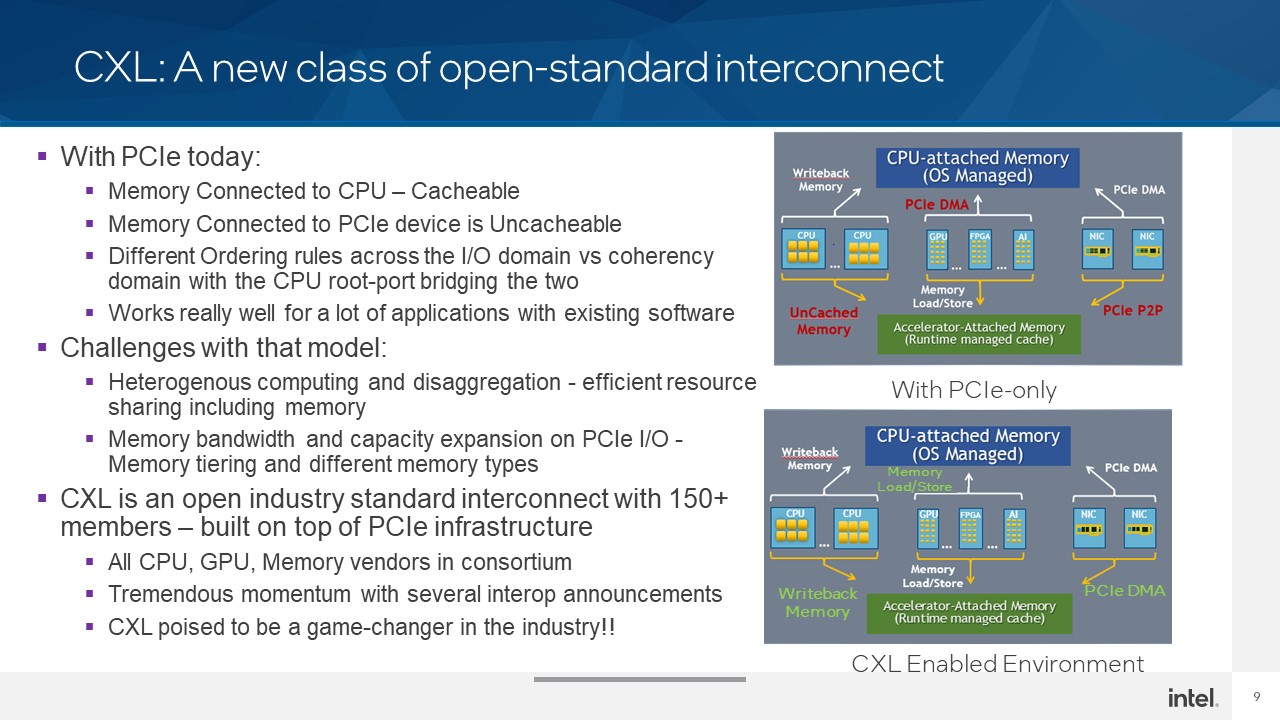

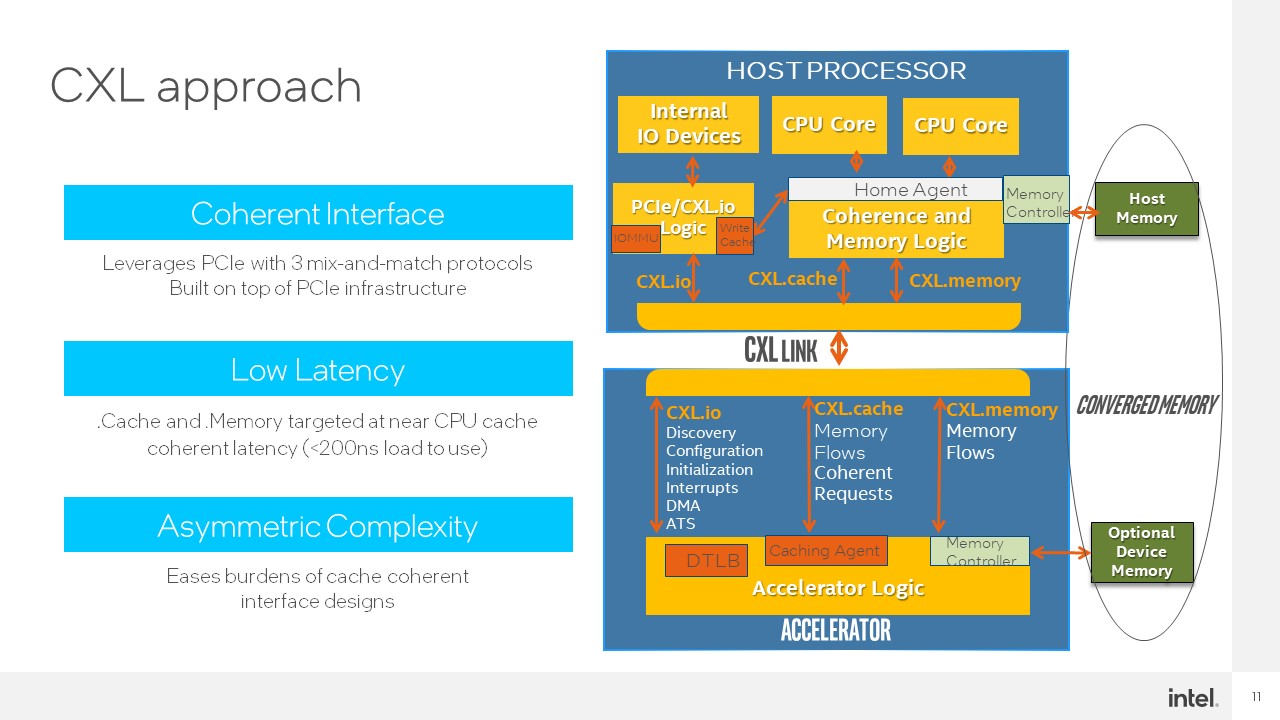

This leads into CXL, since CXL is built upon the PCIe physical layer. On top of that layer, CXL is designed to bring new capabilities for addressing memory, along with the lower-latency communication needed to support those transactions in the server.

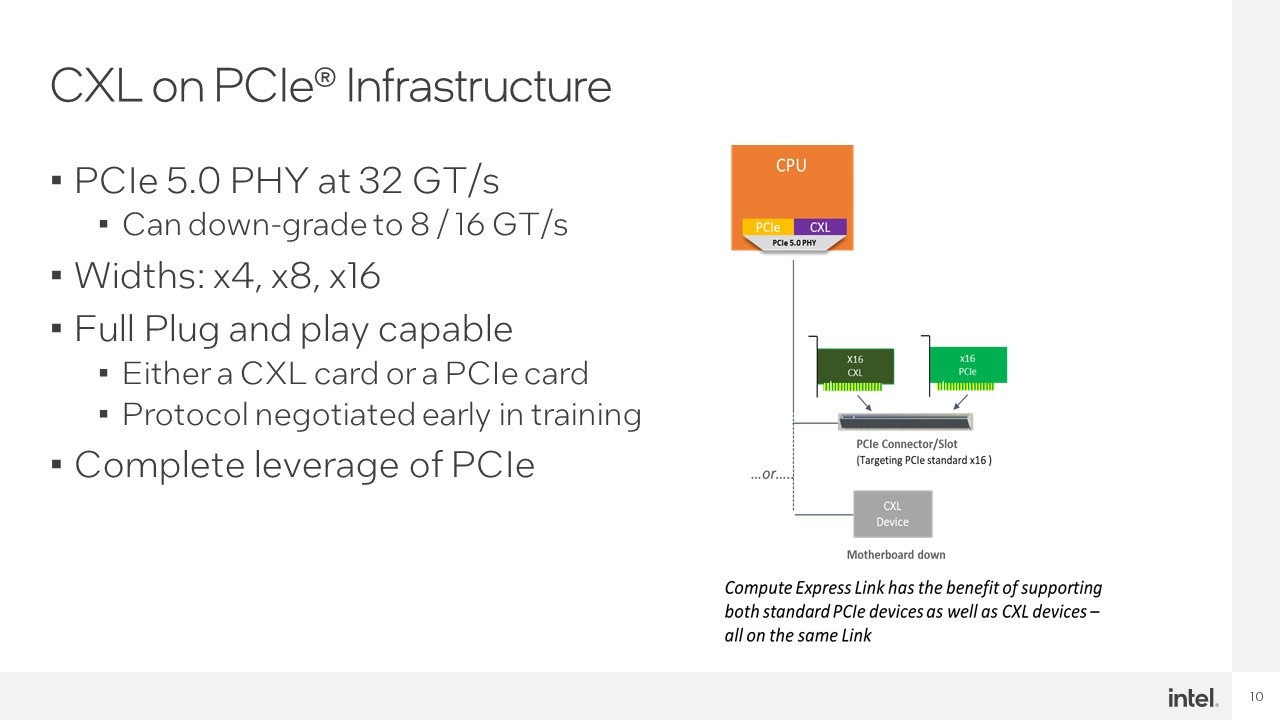

CXL devices are going to become more popular with the PCIe Gen5 generation. A benefit of sharing the physical layer, and of the design of CXL, is that a slot can operate as PCIe or CXL depending on the type of device connected.

CXL is designed to have coherency and lower latency so memory can be accessed and shared more efficiently. Memory is a huge cost in the data center.

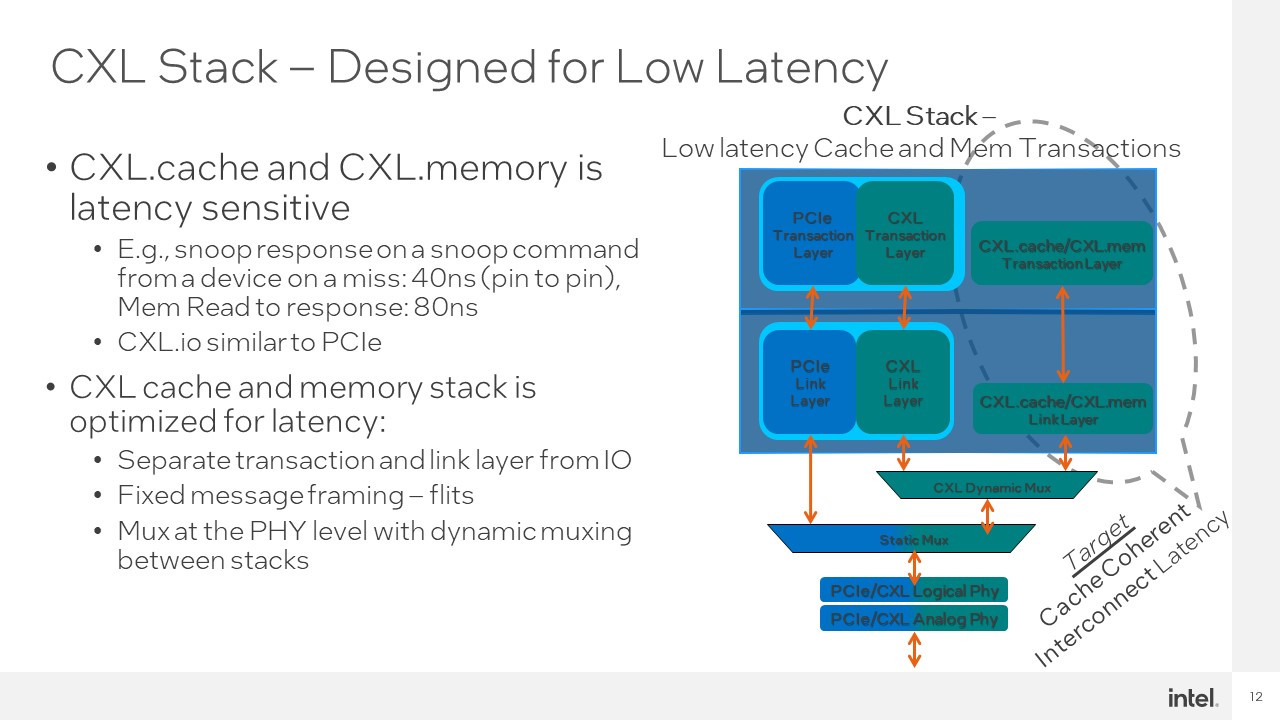

The companies behind CXL are really pushing the latency envelope. We expect higher latencies because of the longer distances. At the same time, driving lower latencies means new use cases.

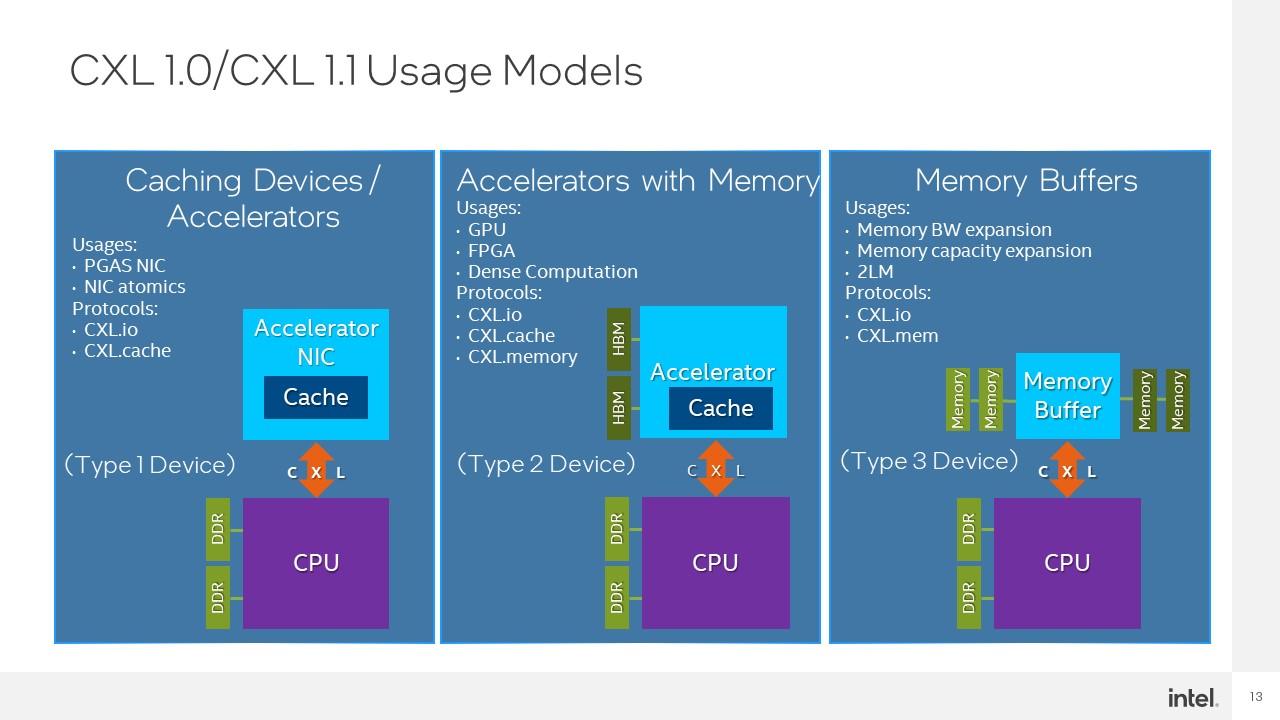

Intel mentioned that the first generation will be CXL 1.0/1.1 devices, which we will really start to see in 2022. These are the simpler use cases, which we have covered numerous times if you want to learn more about them.

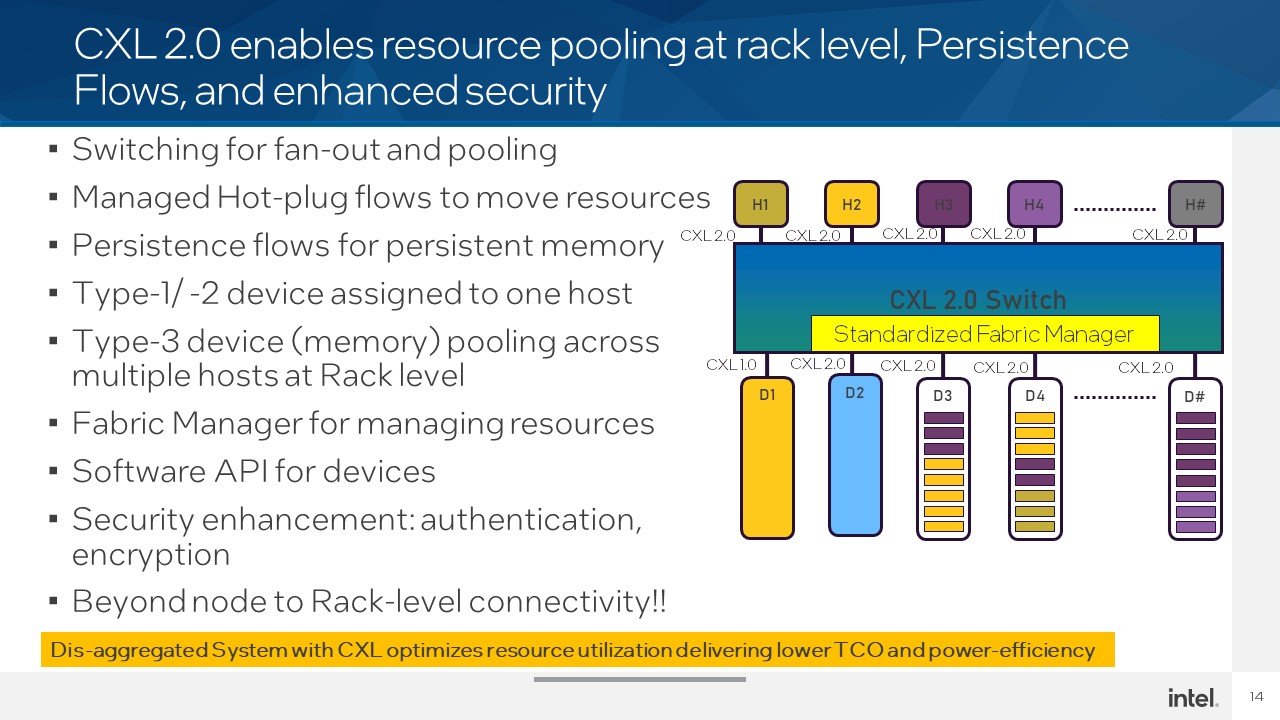

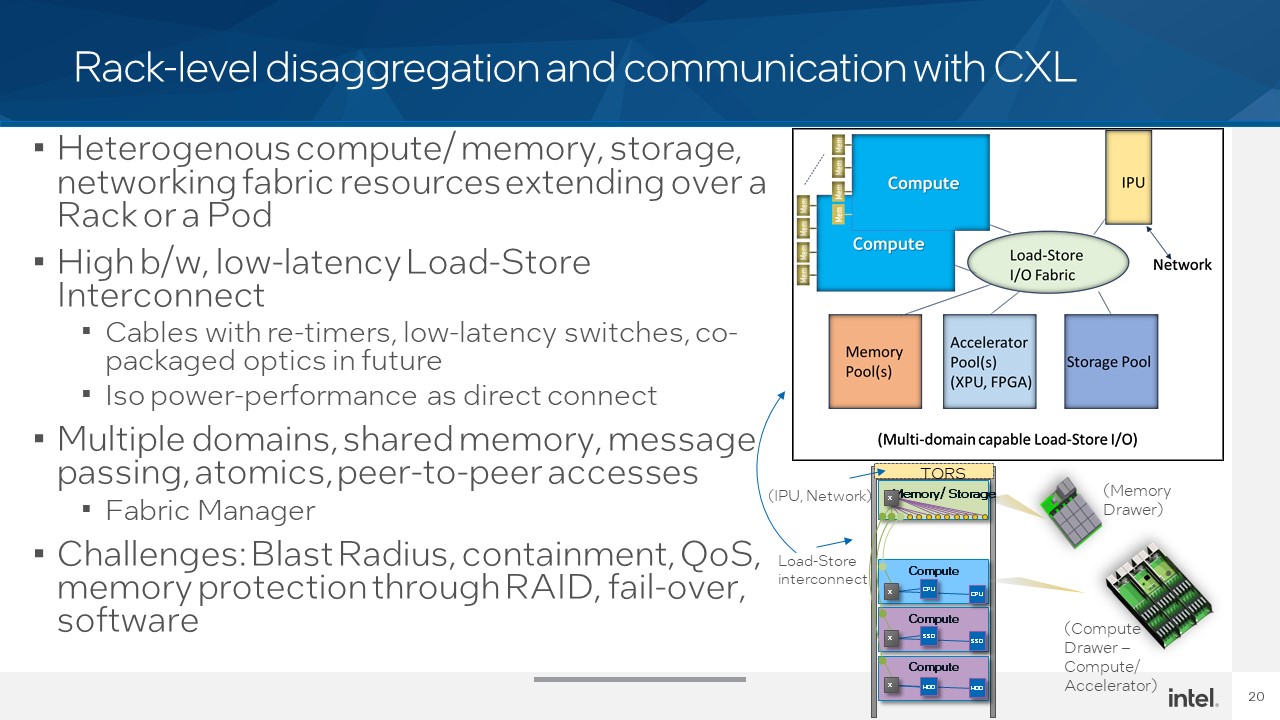

The industry is already looking beyond CXL 1.1 to CXL 2.0. CXL 2.0 is what gives us the ability to pool resources beyond a single node. CXL 2.0 adds switching capabilities and a fabric manager. This is how we can get shelves of memory that are accessed by multiple systems and really change the way systems are designed.
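As a rough mental model of pooling, the toy sketch below treats a memory shelf as a fixed capacity that is handed out to hosts on request and returned when released. This is purely illustrative: `MemoryShelf`, its methods, and the host names are hypothetical and are not part of the CXL specification or of any fabric manager API.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class MemoryShelf:
    """Toy model of a pooled memory shelf (hypothetical, not a CXL API)."""
    capacity_gb: int
    allocations: Dict[str, int] = field(default_factory=dict)

    @property
    def free_gb(self) -> int:
        # Capacity not currently assigned to any host.
        return self.capacity_gb - sum(self.allocations.values())

    def allocate(self, host: str, size_gb: int) -> bool:
        """Grant size_gb to host if there is room; return whether it succeeded."""
        if size_gb <= 0 or size_gb > self.free_gb:
            return False
        self.allocations[host] = self.allocations.get(host, 0) + size_gb
        return True

    def release(self, host: str) -> int:
        """Return all of a host's capacity to the pool; report how much was freed."""
        return self.allocations.pop(host, 0)


shelf = MemoryShelf(capacity_gb=4096)
shelf.allocate("server-a", 1024)
shelf.allocate("server-b", 2048)
print(shelf.free_gb)       # 1024 GB left in the pool
shelf.release("server-a")
print(shelf.free_gb)       # 2048 GB after server-a hands its share back
```

The point of the sketch is the design shift: capacity lives in the shelf and moves between systems, instead of being fixed inside each server at purchase time.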

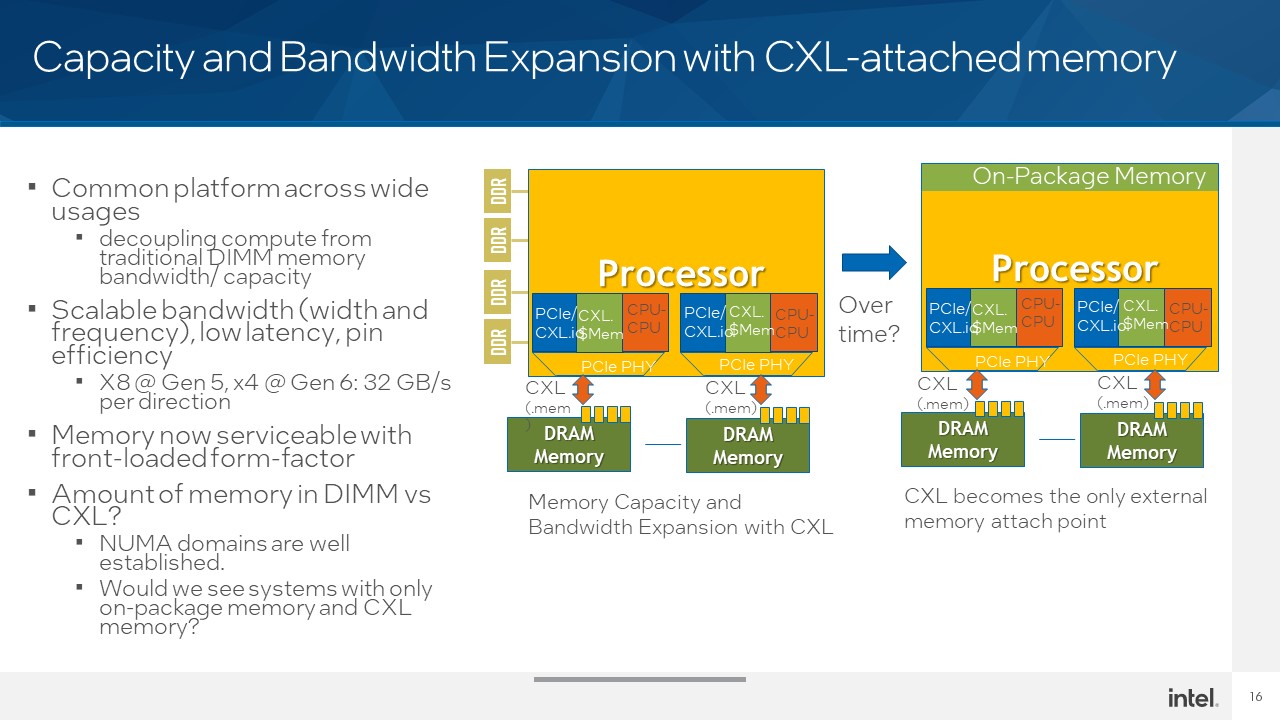

One of the interesting points here is that this is an opportunity to increase memory capacity, since one can use multiple large CXL memory modules and dynamically allocate them to different servers, CPUs, or accelerators. With CXL memory, as we saw in our recent E1 and E3 EDSFF piece and with early technology such as the Samsung CXL Memory Expander with DDR5, we can get much higher capacities. Also, as the PCIe bus continues to evolve, we can get more raw bandwidth than a CPU has from its memory channels alone, since one can combine standard DIMM memory and/or on-package memory bandwidth with CXL memory bandwidth.
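The bandwidth point can be illustrated with some back-of-the-envelope math. The configuration below is an assumption chosen for illustration, not something from the keynote: a CPU with eight DDR5-4800 channels plus two x16 Gen5 links used for CXL memory. The figures are theoretical peaks that ignore protocol overhead and real-world efficiency.

```python
def ddr_peak_gbs(mt_per_s: int, channels: int, bus_bytes: int = 8) -> float:
    """Peak DRAM bandwidth in GB/s: transfers/s x bytes per transfer x channels."""
    return mt_per_s * bus_bytes * channels / 1000


def pcie_raw_gbs(gt_per_lane: int, lanes: int) -> float:
    """Raw one-direction link bandwidth in GB/s, ignoring protocol overhead."""
    return gt_per_lane * lanes / 8


# Assumed (hypothetical) platform: 8x DDR5-4800 channels, 2x Gen5 x16 CXL links.
dimm = ddr_peak_gbs(4800, channels=8)
cxl = 2 * pcie_raw_gbs(32, lanes=16)

print(f"DIMM channels: {dimm:.1f} GB/s")
print(f"CXL links:     {cxl:.1f} GB/s")
print(f"Combined:      {dimm + cxl:.1f} GB/s")
```

Under these assumptions, the CXL links add about 128GB/s of raw bandwidth on top of roughly 307GB/s from the DIMM channels. CXL-attached memory will have higher latency than local DIMMs, so this is extra capacity and bandwidth rather than a like-for-like replacement.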

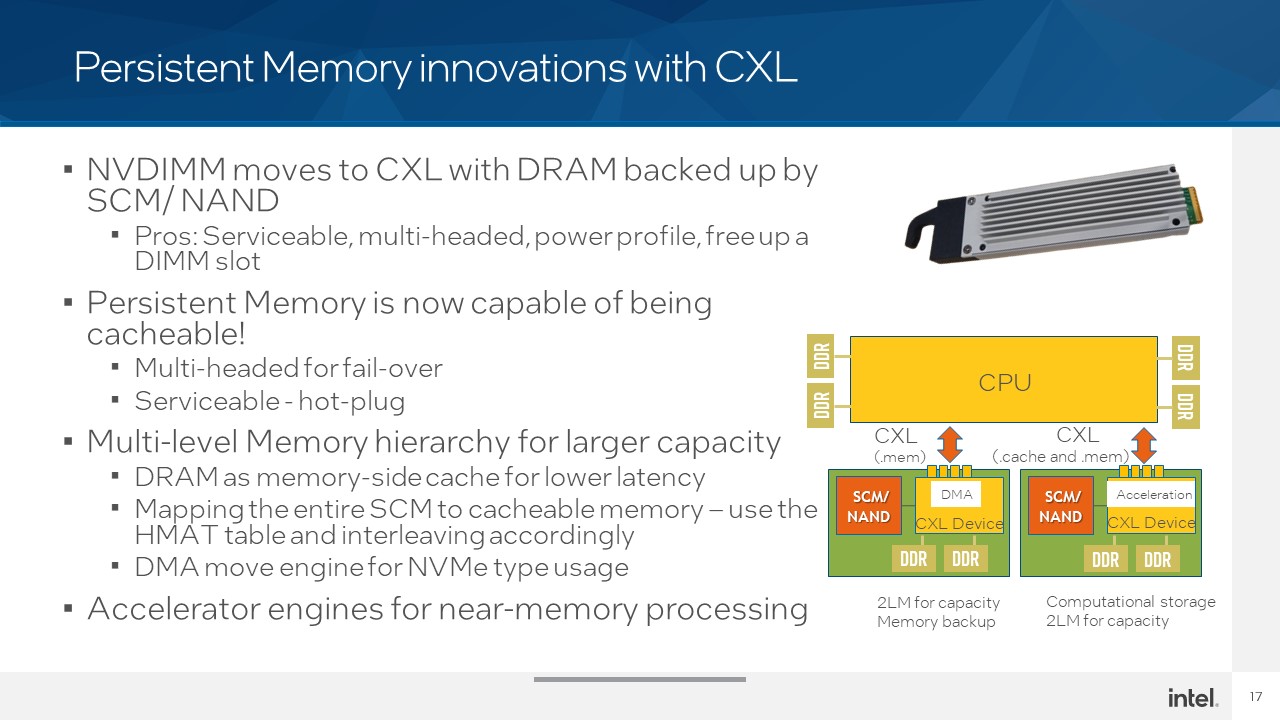

We covered Intel Optane PMem in Glorious Complexity of Intel Optane DIMMs and Micron Exiting 3D XPoint. Micron noted one of the reasons for its exit is that it wanted to focus on CXL memory. Intel, as we expect, is thinking about the same use case. Persistent memory can move to EDSFF CXL form factors. This is also better from a physical standpoint as one has more room and thermal headroom in an EDSFF form factor than in a standard DIMM for all of the different persistent memory types.

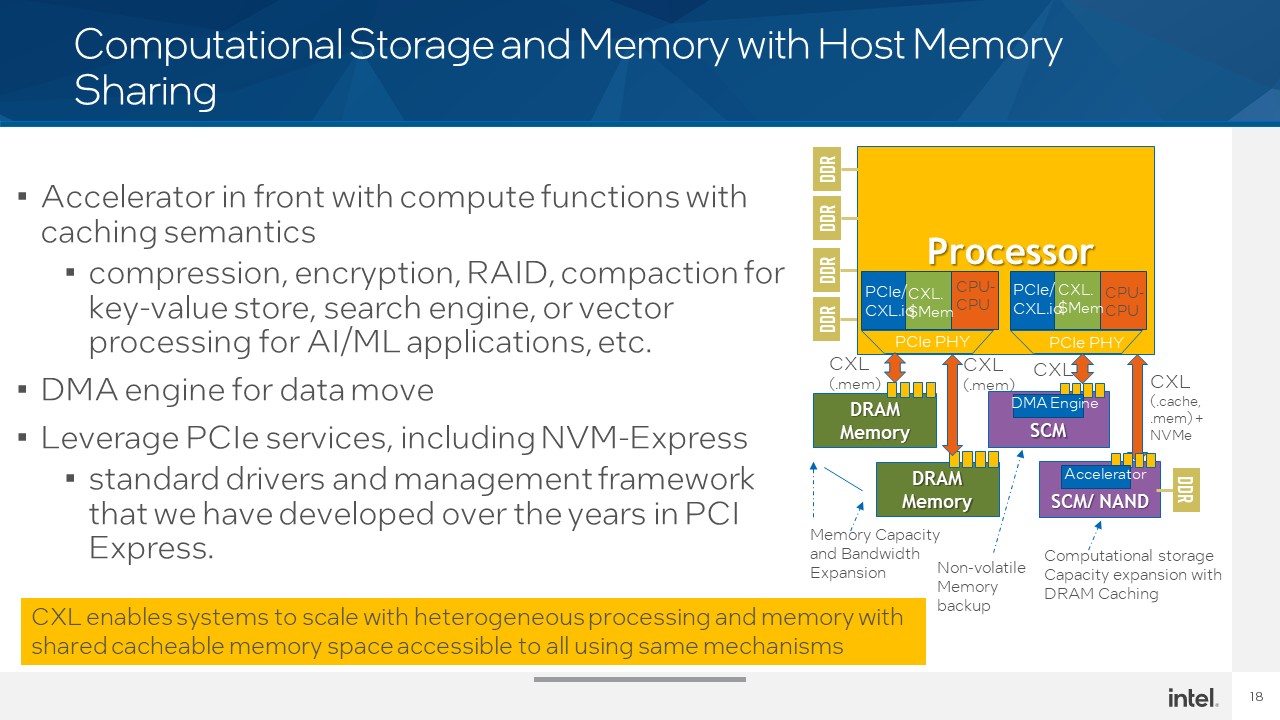

Intel is also discussing computational storage with memory. An example highlighted is moving what previously occurred on RAID controllers and crypto/compression accelerators to computational storage devices. A lot of doors open with CXL.

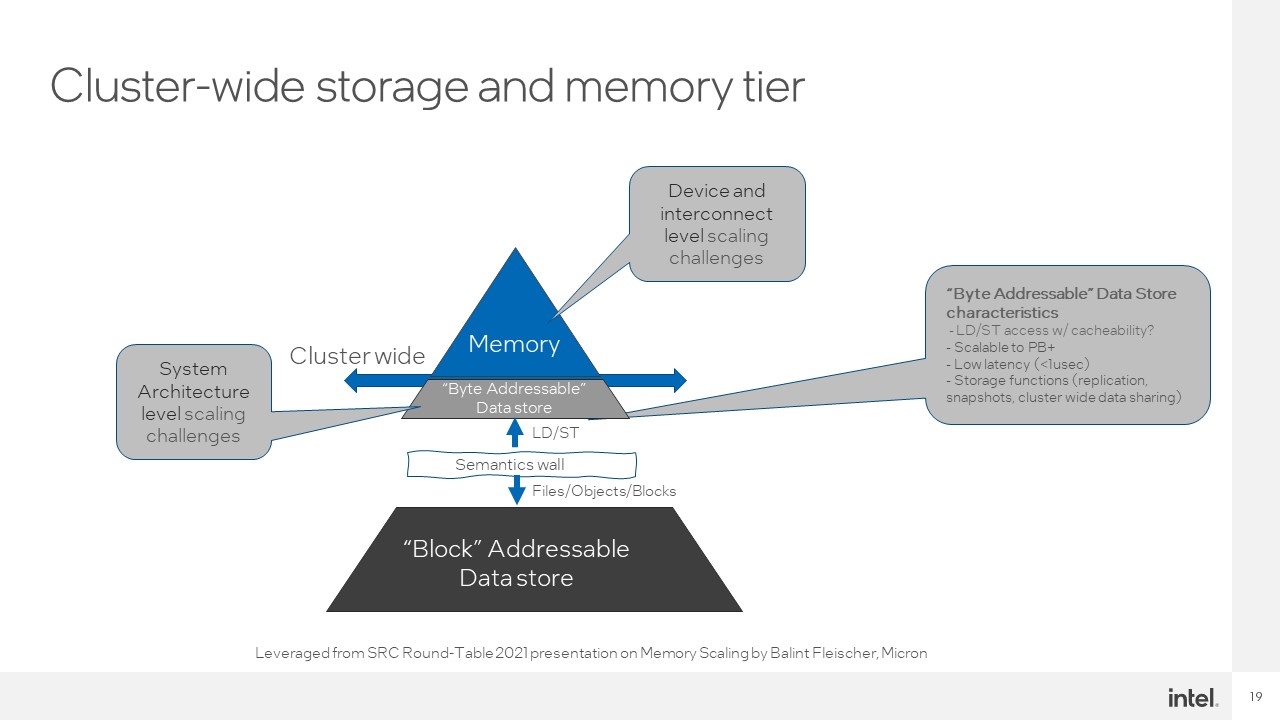

With CXL 2.0 and beyond, the industry is moving toward Intel’s 2016 Rack Scale Design vision. The first step is being able to scale across the rack. With technologies that help bridge physical distances at low latency (e.g. silicon photonics), this can become a cluster-wide scale technology. There are also Gen-Z and CXL to Gen-Z bridges in the wings.

The ultimate goal is to have shelves of memory or memory and storage and be able to upgrade CPUs, accelerators, storage, and memory independently instead of overprovisioning within a server. This is almost like the virtualization of the hardware layer in a hardware-centric approach.

Overall, CXL is a big focus for Intel and the industry. It is easy to see why.

Final Words

CXL is poised to present a major disruption in architecture. The CXL 1.0/1.1 devices that we will see next year are really the early adoption generation. Armed with standards and devices, and a path to CXL 2.0 shortly thereafter, the industry will adopt CXL. Every server vendor we talk to thinks that this is a huge force in the industry. If you have been reading STH, we have been discussing it since the beginning so you can be ready for the coming changes.

Get ready for big things with CXL.

“The ultimate goal is to have shelves of memory or memory and storage and be able to upgrade CPUs, accelerators, storage, and memory independently instead of overprovisioning within a server. This is almost like the virtualization of the hardware layer in a hardware-centric approach.”

So how will that affect on-(mother)board DRAM? Will it shrink a lot? Disappear? CXL still can’t offer the bandwidth that on-board DRAM can provide, or can it?

Just one question: how will they maintain fail-over?

For example, what happens if I have allocated 1Tb of temp storage to one task and the remote storage rack is “destroyed” (plug-and-play)?

Blast radius is a big challenge with CXL 2.0 and later since everything is load/store. You are 100% correct about that.