At Intel Architecture Day 2018, Intel discussed a few new details about Cooper Lake. The Intel Cooper Lake generation Xeon CPUs are going to be extremely interesting, and we learned that a new instruction will be added to the ISA to help deep learning training on CPUs.

Intel Cooper Lake Xeon in Roadmap

First off, we wanted to show the Intel Cooper Lake Xeon in the context of the Xeon Roadmap Intel presented at Architecture Day 2018.

As you can see, this will be the Xeon line that succeeds Intel Xeon Cascade Lake and Cascade Lake-AP but comes before Intel Ice Lake Xeon. Intel has already discussed some of the Cascade Lake generation improvements. Now it is starting to talk publicly about Cooper Lake as the generation beyond.

Intel Xeon Cooper Lake Disclosures

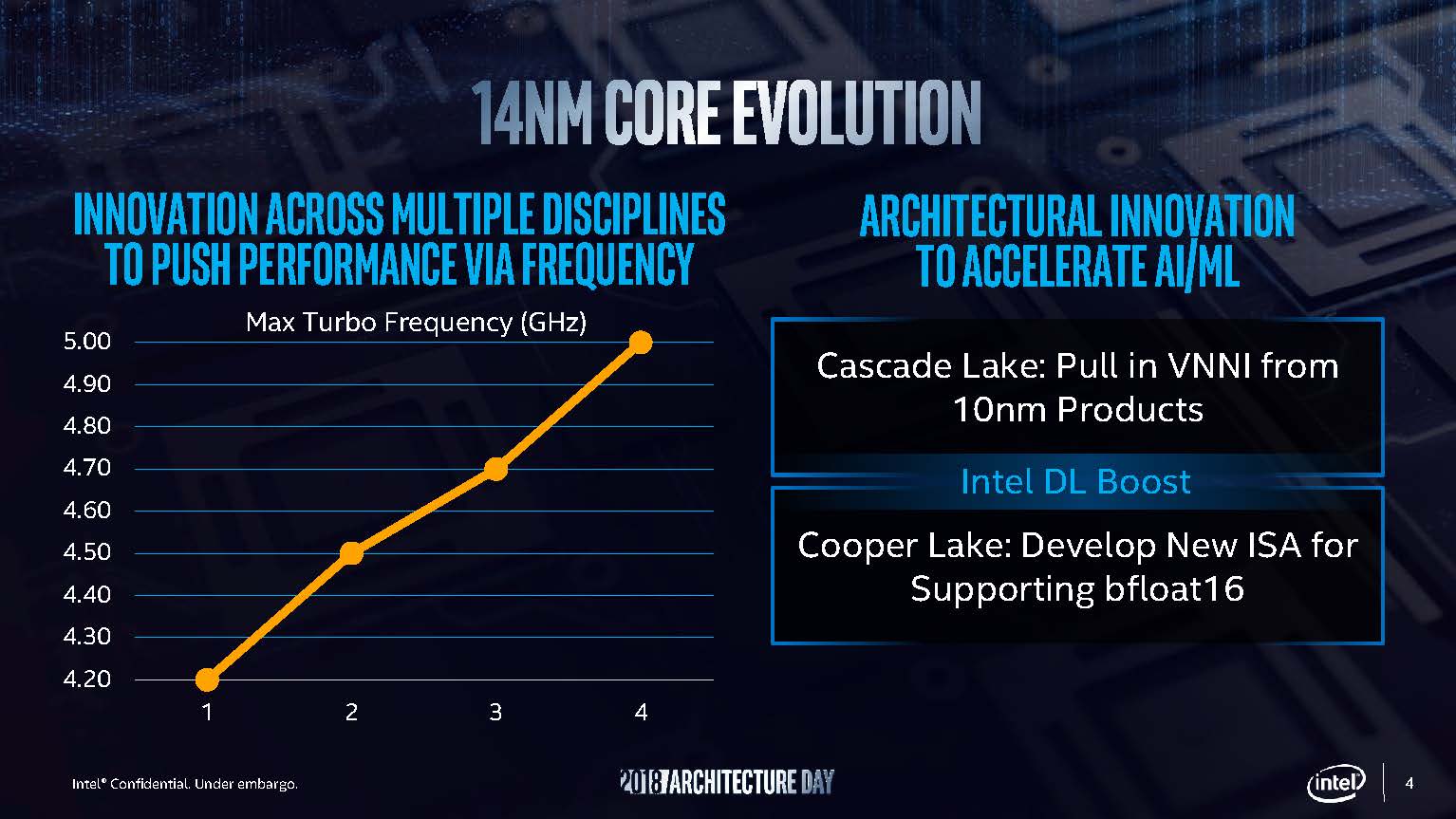

There were a few disclosures around Intel Cooper Lake at Architecture Day 2018. One of the first slides involved 14nm. Intel showed that its 14nm node has improved significantly over successive generations. Intel also showed how VNNI, Intel’s inferencing acceleration technology, was pulled in from 10nm to 14nm as 10nm moved out.

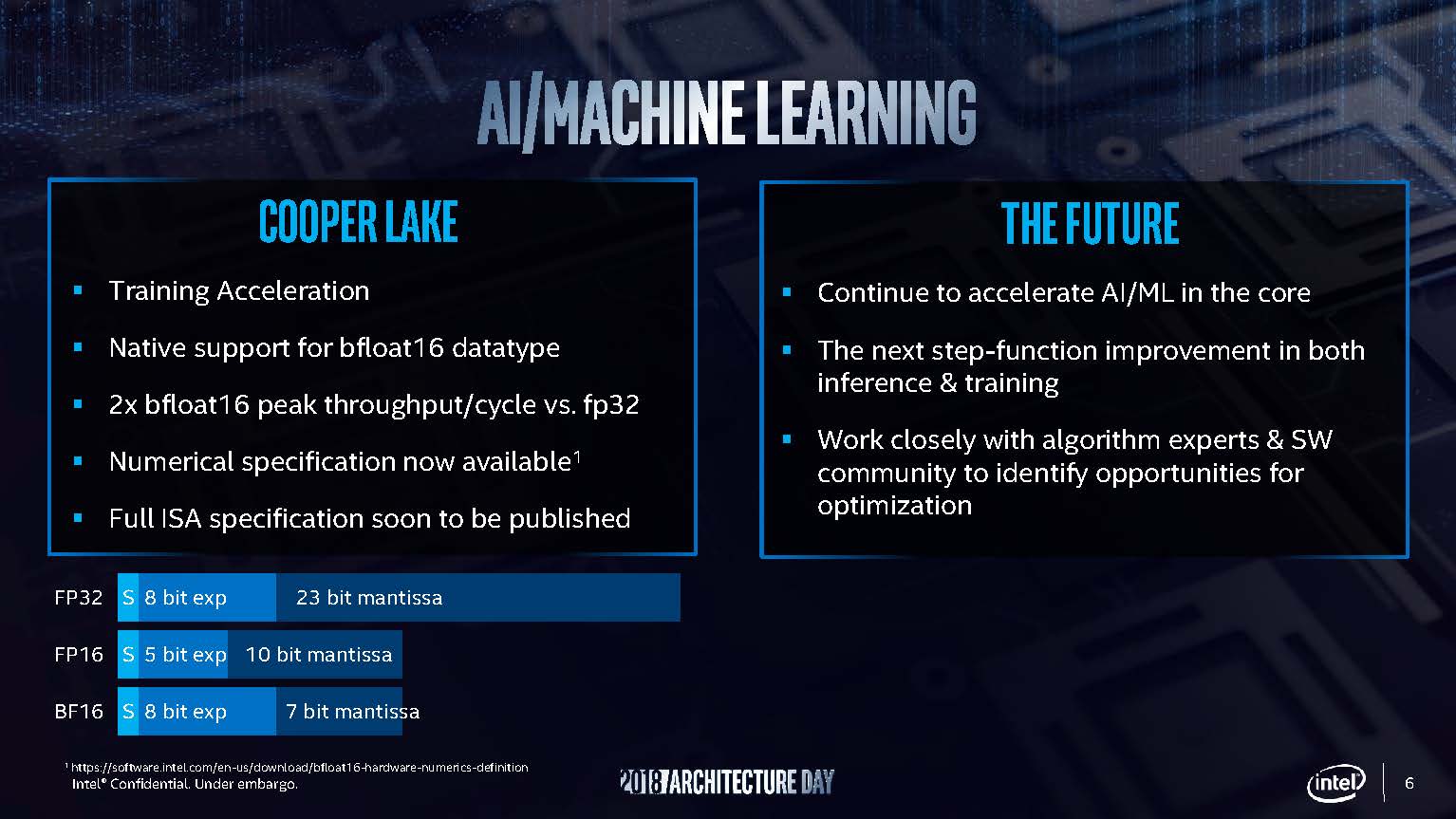

The slide also shows the Intel Xeon Cooper Lake generation as a 14nm part. Intel lists that it will deliver a new ISA with bfloat16 support. Here is what bfloat16 brings:

With bfloat16, one gets the same 8-bit exponent as FP32, but with a much shorter 7-bit mantissa (FP32 uses 23 bits). For those wondering what a mantissa is, when you write a number in scientific notation such as 0.213943 * 10^4, the 213943 is the mantissa and the 4 is the exponent. One loses precision compared to FP32, but one gains speed: about twice that of FP32 and more akin to FP16. Although there is less precision, the deep learning community has been looking at bfloat16 as a way to train models faster by trading precision for speed while keeping the exponent range of FP32.
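To make the bit layout concrete, here is a minimal Python sketch of how an FP32 value maps onto bfloat16. It is an illustration only, not Intel's implementation: the function names are hypothetical, and the conversion simply truncates the low 16 bits, whereas real hardware and libraries may round instead.

```python
import struct


def float32_to_bits(x: float) -> int:
    """Return the 32-bit IEEE 754 single-precision pattern of x."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_float32(bits: int) -> float:
    """Interpret a 32-bit pattern as an IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def to_bfloat16_bits(x: float) -> int:
    """Keep only the top 16 bits of the FP32 pattern.

    Assumption: plain truncation (no rounding), chosen for simplicity.
    """
    return float32_to_bits(x) >> 16


def from_bfloat16_bits(b: int) -> float:
    """Expand a bfloat16 pattern back to FP32 by zero-filling the low 16 bits."""
    return bits_to_float32((b & 0xFFFF) << 16)


def split_bfloat16(b: int) -> tuple:
    """Split a bfloat16 pattern into (sign, 8-bit exponent, 7-bit mantissa)."""
    sign = (b >> 15) & 0x1
    exponent = (b >> 7) & 0xFF
    mantissa = b & 0x7F
    return sign, exponent, mantissa


if __name__ == "__main__":
    for value in (1.0, 3.14159, 1e38):
        b = to_bfloat16_bits(value)
        print(value, hex(b), split_bfloat16(b), from_bfloat16_bits(b))
```

Running this shows the trade-off described above: 3.14159 comes back as 3.140625, since only 7 mantissa bits survive, while a value as large as 1e38 remains representable because the 8-bit exponent is unchanged from FP32.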

The Intel Cooper Lake Xeon parts will support bfloat16, and a new ISA addition will help programmers use the new feature. Intel is trying to bridge the gap between what CPUs can do and what GPUs can do using novel features like this. While Cascade Lake and VNNI will help the AI ecosystem with faster “free” CPU inferencing performance, Cooper Lake and bfloat16 will help on the training side. For those who want a GPU from Intel for training, Intel said that is coming sometime in 2020 or later.