As part of the US Department of Energy’s goal of hitting exascale computing by 2021, the DOE is investing in research covering a number of technologies. HPE is funded to perform research for the US DOE, and one of the programs it is working on is called Vanguard. The National Nuclear Security Administration (NNSA) and HPE have been collaborating on a new Cavium ThunderX2-based supercomputer called Astra, which will be installed at Sandia National Labs. Astra is the largest Arm-based supercomputer around.

Background on Astra

We had a chance to talk to HPE’s Mike Vildibill about Astra. He highlighted a few key challenges companies working towards exascale are facing. Power consumption is one of those challenges. We were told that moving data inside a supercomputer takes 10x the energy needed to compute on that data. To address this, HPE has been working on memory-centric computing in its “The Machine” program. We highlighted that HPE The Machine used Cavium ThunderX2 at SC17.

HPE has been working with Cavium for a long time and says it was the first vendor outside of Cavium to receive silicon. It has a program called Commanche where HPE seeded hundreds of prototype servers to national labs, universities, and other organizations for the purpose of porting, optimizing and demonstrating software that runs atop these systems. During the Cavium ThunderX2 launch, we noted just how far the Arm server ecosystem has come in the last two years.

That Commanche program evolved into a product called the Apollo 70, which is at the heart of Astra, the new 2.3 PFLOPS supercomputer for the US DOE.

HPE Apollo 70 and Astra

The HPE Apollo 70 that Astra utilizes is a 2U 4-node Apollo 2000 enclosure. Each compute tray holds a dual socket Cavium ThunderX2 server. HPE is essentially offering its popular 2U 4-node Apollo line in Arm with ThunderX2. That means that outside of the motherboard, the rest of the system is largely shared with x86 machines making them high volume enclosures.

For Astra, the ThunderX2 parts are not the higher clock speed 32 core models we tested. Instead, the supercomputer utilizes 28 core parts at 2.0GHz. We asked why and were told that the expected workloads are memory bandwidth bound rather than CPU bound. This SKU brings the price down while still delivering the full memory performance. These systems are being cooled by HPE MCS-300 liquid cooling units. Liquid cooling is a trend we will see more of in the near future as we progress to exascale.

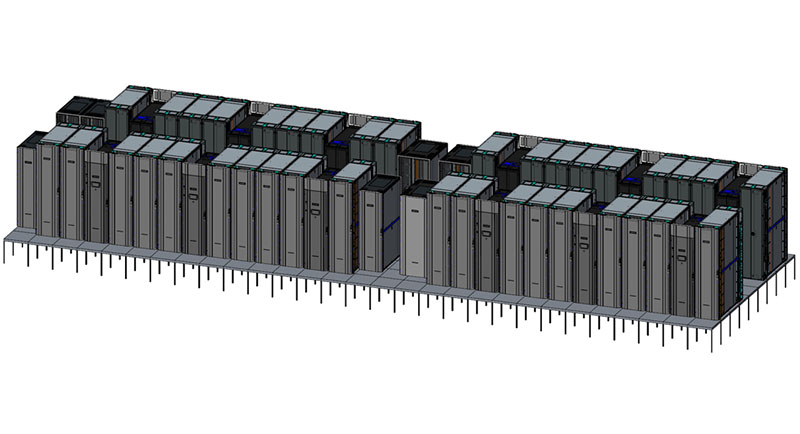

The HPE Apollo 70 is designed to handle GPUs. Astra, however, is not planned to use GPUs, as the focus is on Arm compute with 2592 dual-socket ThunderX2 nodes. The interconnect is Mellanox EDR 100Gbps, which is standard issue for supercomputers of this generation. All-flash storage duties are handled by an HPE Apollo 4520, which utilizes Lustre for its high-speed distributed filesystem.
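As a rough sanity check on the 2.3 PFLOP figure, the node count, core count, and clock speed quoted above can be multiplied out. The sketch below is our own back-of-the-envelope arithmetic, not an HPE or Sandia calculation. It assumes each ThunderX2 core can retire 8 double-precision FLOPs per cycle (two 128-bit FMA-capable vector units), which is not stated in our briefing. The function name and constants are ours.

```python
def peak_pflops(nodes, sockets_per_node, cores_per_socket, clock_ghz, flops_per_cycle):
    """Theoretical peak in PFLOPS: total cores x clock x FLOPs per cycle per core."""
    total_cores = nodes * sockets_per_node * cores_per_socket
    flops = total_cores * clock_ghz * 1e9 * flops_per_cycle
    return flops / 1e15


# Figures quoted in the article
ASTRA_NODES = 2592
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 28
CLOCK_GHZ = 2.0

# Assumption (not from the article): 8 double-precision FLOPs per cycle per core
ASSUMED_DP_FLOPS_PER_CYCLE = 8

astra_cores = ASTRA_NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET
astra_peak = peak_pflops(
    ASTRA_NODES, SOCKETS_PER_NODE, CORES_PER_SOCKET, CLOCK_GHZ, ASSUMED_DP_FLOPS_PER_CYCLE
)

print(f"Total cores: {astra_cores}")
print(f"Estimated peak: {astra_peak:.2f} PFLOPS")
```

Under that assumption the estimate lands at roughly 2.3 PFLOPS across 145,152 cores, in line with the quoted number. This is theoretical peak only. Since the expected workloads are memory bandwidth bound, sustained performance will depend far more on the memory subsystem than on this figure.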

On the software side, the system will run Red Hat Enterprise Linux for Arm. Mellanox worked on the software stack, including getting Arm OFED drivers working. Arm itself has been working on optimizing compilers and toolchains including GNU, LLVM, and its own commercial compilers. Sandia is insistent on multiple compiler sets for Astra. HPE ported its HPC software from x86 to Arm. Examples are HPE MPI and HPE Performance Cluster Manager (HPCM), which ease the management of the large distributed system.

I’ll take two.

Lots of good news coming out of DOE, and Sandia specifically, with the scale up of US supercomputing. Glad to hear ThunderX2 is getting some love as well. At 2.3PFlops, and based on the size of the project, I get the feeling they’re still working on ironing out the ARM software side, porting and optimizations?

“It has a program called Commanche where HPE seeded hundreds of prototype servers to national labs, universities, and other organizations for the purpose of porting, optimizing and demonstrating software that runs atop these systems.”

This. To get more ARM servers into the data center, the machines have to be available so the developers can test code on them.