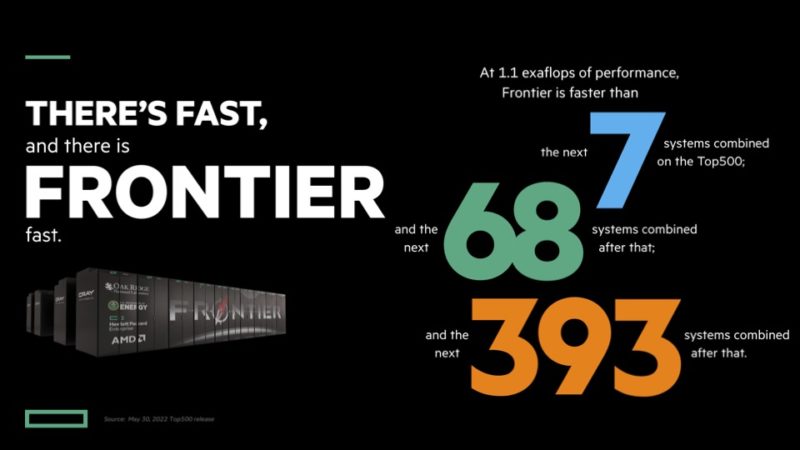

Today we have a new #1 on the Top500 list. The new HPE Cray EX platform with AMD CPUs and GPUs has hit 1.1EF with a 29MW power budget to take the top spot. Yesterday I got into Hamburg, Germany, a day late for ISC 2022, dropped my suitcase off at the hotel, then sprinted 2km to the pre-briefing on the new Frontier supercomputer with Thomas Zacharia from Oak Ridge National Lab (ORNL) and Justin Hotard from HPE.

HPE and AMD Powered Frontier Tops the Top500 Ushering in Exascale

At STH, we have been closely tracking the HPE Cray EX platform for some time. It is great to see the new system out.

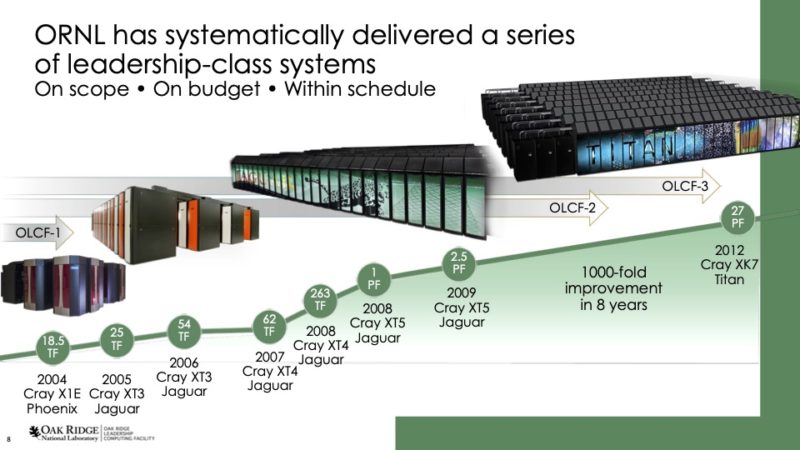

Not only did Frontier hit #1 on the Top500 with 1.1EF on HPL out of around 2EF theoretical, but it also took #1 and #2 on the Green500. The test and development system (TDS) for Frontier was #1 on the Green500 and the full Frontier was #2. For a sense of scale, that Frontier TDS system was #29 on the Top500 list. Fugaku was highly regarded as ultra efficient with its Fujitsu Arm processors, but now x86 and GPUs have struck back on performance and efficiency.
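To put those figures in perspective, here is a rough back-of-the-envelope sketch using only the rounded numbers quoted in this article (1.1EF on HPL, around 2EF theoretical, and a ~29MW power budget). The function names are our own, and the official Green500 figure is based on power measured during the HPL run, so treat the result as a ballpark rather than the list value.

```python
# Rounded figures quoted in this article; NOT the official list submissions.
HPL_EXAFLOPS = 1.1       # measured HPL result
PEAK_EXAFLOPS = 2.0      # "around 2EF" theoretical
POWER_MEGAWATTS = 29.0   # approximate power budget


def hpl_fraction_of_peak(hpl_ef: float, peak_ef: float) -> float:
    """Fraction of theoretical peak achieved on HPL."""
    return hpl_ef / peak_ef


def gigaflops_per_watt(hpl_ef: float, power_mw: float) -> float:
    """Energy efficiency in GFLOPS/W, given exaflops and megawatts."""
    gigaflops = hpl_ef * 1e9   # 1 EF = 1e9 GF
    watts = power_mw * 1e6     # 1 MW = 1e6 W
    return gigaflops / watts


if __name__ == "__main__":
    frac = hpl_fraction_of_peak(HPL_EXAFLOPS, PEAK_EXAFLOPS)
    eff = gigaflops_per_watt(HPL_EXAFLOPS, POWER_MEGAWATTS)
    print(f"HPL / peak: {frac:.0%}")
    print(f"Efficiency: {eff:.1f} GFLOPS/W")
```

With these rounded inputs, Frontier lands at a bit over half of its theoretical peak on HPL and in the high 30s of GFLOPS per watt.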

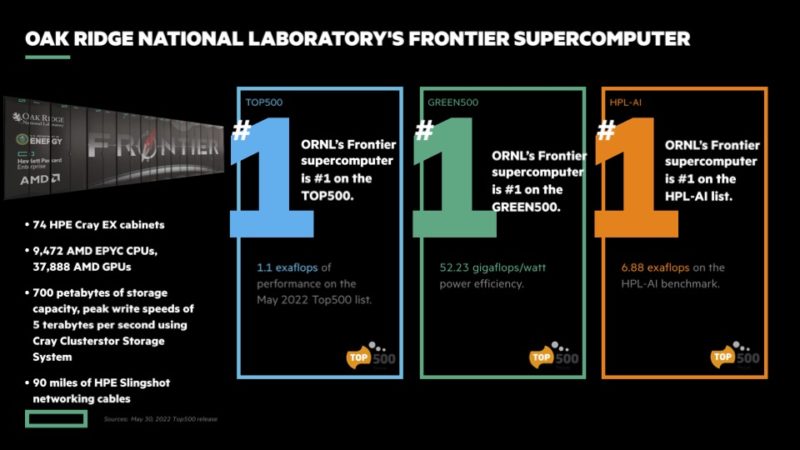

Frontier is made up of 74 HPE Cray EX cabinets with a total of 9,472 EPYC CPUs and 37,888 AMD GPUs. There is 700PB of storage that can write at 5TB/s and 90 miles of cabling.
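Those totals break down into some tidy ratios. The sketch below only divides the numbers quoted above; the variable names are ours, the two-dies-per-GPU figure comes from the MI250X description later in this article, and the storage math assumes decimal units (1PB = 1,000TB) and a sustained 5TB/s, which real workloads may not see.

```python
# Totals as quoted in this article.
CABINETS = 74
CPUS = 9_472
GPUS = 37_888
GPU_DIES_PER_MI250X = 2   # each MI250X carries two GPU dies
STORAGE_PB = 700
WRITE_TB_PER_S = 5
TB_PER_PB = 1_000         # assumption: decimal units

gpus_per_cpu = GPUS / CPUS
cpus_per_cabinet = CPUS / CABINETS
gpus_per_cabinet = GPUS / CABINETS
total_gpu_dies = GPUS * GPU_DIES_PER_MI250X

# Time to write the full storage capacity once at the quoted rate.
fill_seconds = STORAGE_PB * TB_PER_PB / WRITE_TB_PER_S
fill_hours = fill_seconds / 3600

if __name__ == "__main__":
    print(f"GPUs per CPU:      {gpus_per_cpu:g}")
    print(f"CPUs per cabinet:  {cpus_per_cabinet:g}")
    print(f"GPUs per cabinet:  {gpus_per_cabinet:g}")
    print(f"Total GPU dies:    {total_gpu_dies:,}")
    print(f"Hours to fill storage at full write speed: {fill_hours:.1f}")
```

That works out to four GPUs per CPU, 128 CPUs and 512 GPUs per cabinet, and a little under 39 hours to fill the storage once at the quoted write speed.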

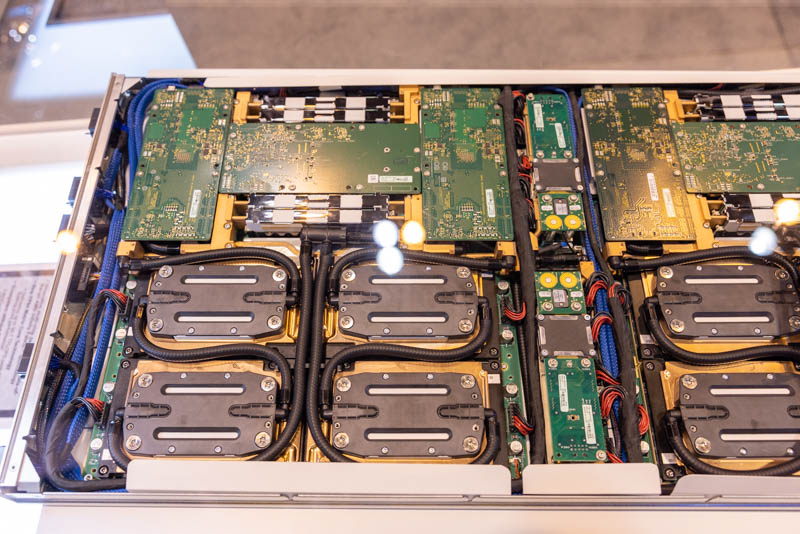

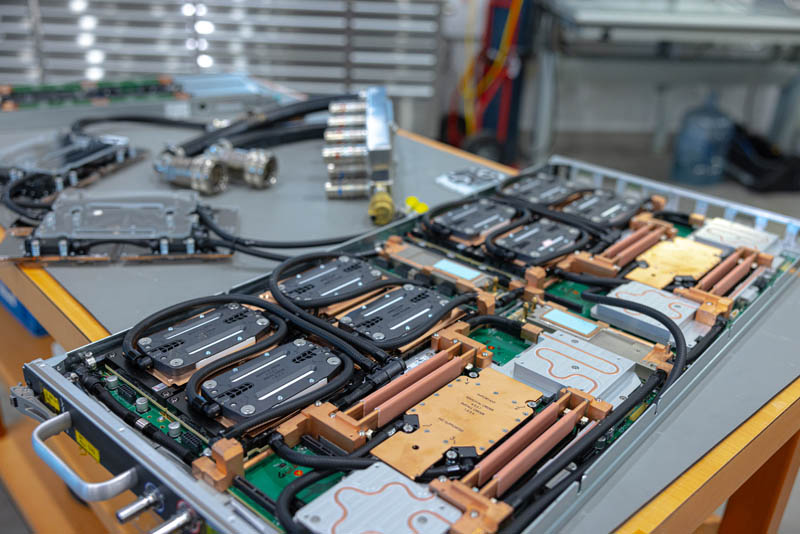

We saw the HPE Cray EX platform and the MI250X nodes at SC21. These are fully liquid-cooled platforms, and that is a big reason the new system is so efficient.

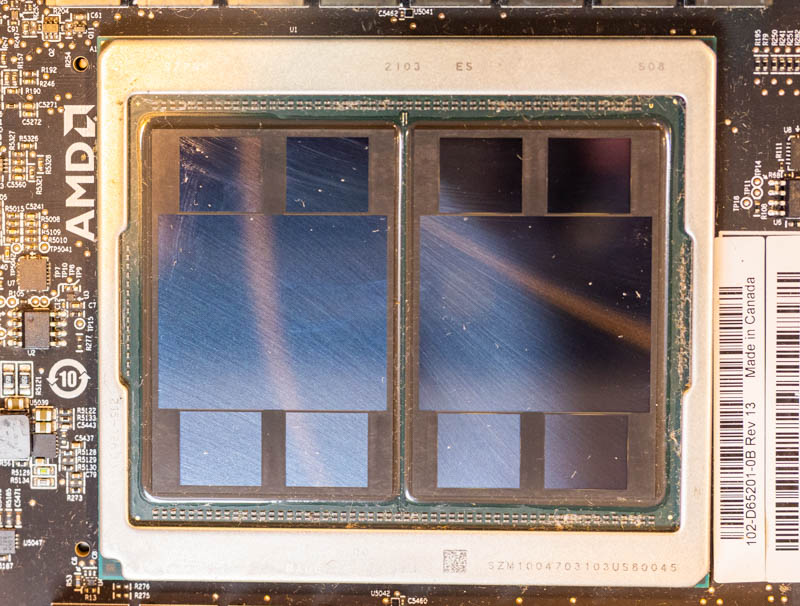

The GPUs are AMD MI250X parts. Each is an OAM module with two GPU dies, and each die has four HBM stacks next to it.

We also saw these nodes in person in Calgary a few months ago while we were focusing on liquid cooling. Here is an HPE Cray EX node on a workbench at CoolIT Systems:

Here is me holding the supercomputer node. Note, it is extremely heavy.

If you want to see a few more views, you can check out How Liquid Cooling is Prototyped and Tested in the CoolIT Liquid Lab Tour:

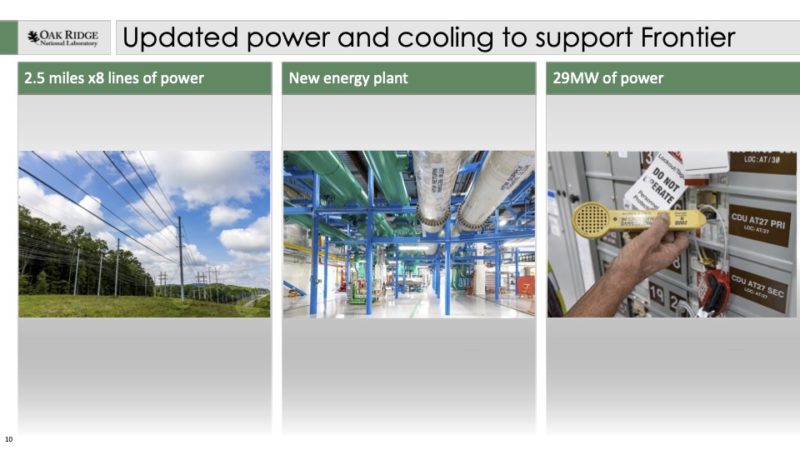

One of the biggest innovations with the new Frontier supercomputer is that it uses 85°F water in its facility loops, not chilled water. Not having to chill water before using it in a cooling loop means that less power is dedicated to cooling, and therefore the overall system is more efficient. This liquid cooling with relatively warm water is one of the reasons that the system uses only ~29MW, and also one of the reasons it tops the Green500 list.

ORNL has something like 150MW of capacity, but a lot of power work had to be done to get the new system online. A fun stat from the pre-briefing is that when Frontier goes from idle to an HPL run for the Top500, the entire system draws something like 15MW more electricity in the span of <10 seconds.
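For a sense of how steep that step is, here is a quick sketch of the implied ramp rate. It uses the approximate figures from the briefing, treats 10 seconds as an upper bound on the ramp time (so the rate is a lower bound), and compares the step against the ~29MW power budget quoted earlier. The function names are ours.

```python
# Approximate figures from the pre-briefing, as quoted in this article.
RAMP_MW = 15.0           # extra draw going from idle to an HPL run
RAMP_SECONDS_MAX = 10.0  # "<10 seconds", used as an upper bound
SYSTEM_MW = 29.0         # approximate power budget


def min_ramp_rate_mw_per_s(delta_mw: float, seconds_upper_bound: float) -> float:
    """Lower bound on the average ramp rate, given an upper bound on time."""
    return delta_mw / seconds_upper_bound


def share_of_budget(delta_mw: float, budget_mw: float) -> float:
    """Size of the power step relative to the whole power budget."""
    return delta_mw / budget_mw


if __name__ == "__main__":
    rate = min_ramp_rate_mw_per_s(RAMP_MW, RAMP_SECONDS_MAX)
    share = share_of_budget(RAMP_MW, SYSTEM_MW)
    print(f"Average ramp rate: at least {rate:.1f} MW/s")
    print(f"Step as share of power budget: {share:.0%}")
```

In other words, roughly half of the machine's power budget comes online at an average of at least 1.5MW every second.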

The cabinets themselves weigh around 8000lbs which means that only four could be carried in a tractor trailer at a time.
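As a rough logistics sketch, the cabinet count from earlier in the article, the approximate weight, and the four-per-trailer limit give a feel for the delivery effort. The helper names are ours, and the weight is the "around 8000lbs" figure above, so the totals are estimates.

```python
import math

# Figures as quoted in this article; the weight is approximate.
CABINETS = 74
CABINET_WEIGHT_LBS = 8_000
CABINETS_PER_TRAILER = 4
KG_PER_LB = 0.45359237   # exact definition of the international pound


def trailer_loads(cabinets: int, per_trailer: int) -> int:
    """Number of trailer loads needed, rounding up for a partial load."""
    return math.ceil(cabinets / per_trailer)


def total_weight_tonnes(cabinets: int, weight_lbs: float) -> float:
    """Combined cabinet weight in metric tonnes."""
    return cabinets * weight_lbs * KG_PER_LB / 1000


if __name__ == "__main__":
    loads = trailer_loads(CABINETS, CABINETS_PER_TRAILER)
    tonnes = total_weight_tonnes(CABINETS, CABINET_WEIGHT_LBS)
    print(f"Trailer loads: {loads}")
    print(f"Total cabinet weight: {CABINETS * CABINET_WEIGHT_LBS:,} lbs (~{tonnes:.0f} t)")
```

That is 19 trailer loads and close to 600,000lbs of cabinets alone, before counting storage, cooling, and cabling.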

Here is the other side of the cabinets with the networking cables plugged in.

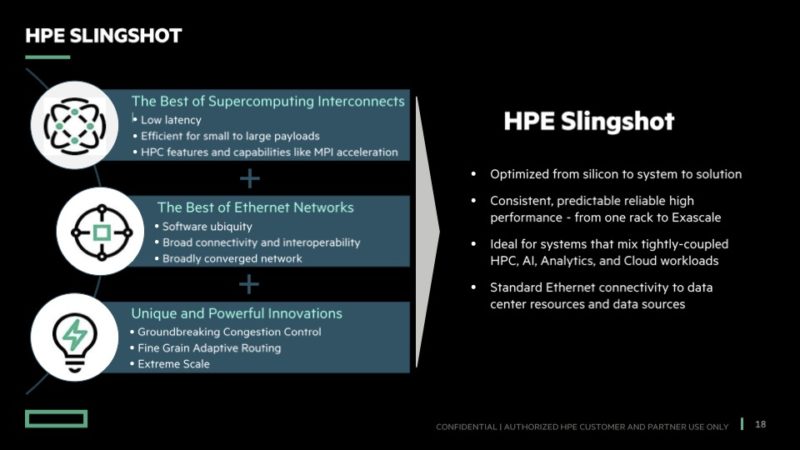

Instead of using InfiniBand, the new systems use HPE Slingshot 11, which runs on top of layer 3 Ethernet. Frankly, we hope that HPE decides to open up Slingshot a bit more and make it an industry competitor to InfiniBand, not just an HPE exclusive, but we can see both sides of this.

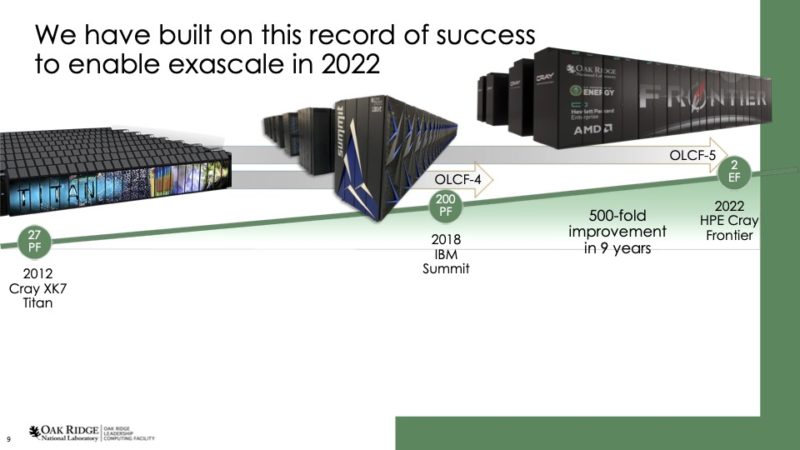

ORNL also discussed the history of the new machines. STH is going to turn 13 years old in a little over a week and the Cray XT5 Jaguar in 2009 was the top system from that era at 2.5PF.

Summit sits next to Frontier at ORNL and our sense is that post-Frontier ORNL will look to have something much larger take its place.

The fun part is that this is only one of the Exascale systems that we expect to see top the next few Top500 lists.

Final Words

With the new ORNL Frontier supercomputer taking its place atop the Top500, we are now in a new era. Perhaps as impressive as the #1 spot is that this is filtering down to smaller systems. Aside from Frontier-TDS at #29, the AMD-powered Cray EX platform also powers the EuroHPC Lumi at #3 on the Top500 and Green500 lists and France’s Adastra at #10 on the Top500 and #4 on the Green500. The top 4 Green500 systems are now based on this same HPE and AMD architecture.

Moving ahead to the Exascale era, at the briefing we heard something interesting. Thomas Zacharia of ORNL was discussing how next-generation systems would mean faster edge systems closer to data. This is similar to what we heard from Raja Koduri in Raja’s Chip Notes Lay Out Intel’s Path to Zettascale.

For those thinking that Zettascale is crazy, not only did we hear about this same theme from ORNL in a joint HPE and AMD briefing, but Arm also has a path to Zettascale talk on the first day of ISC.

More compute and higher efficiency, with the ability to bring more distributed computing to data, means that more science can get done. While we generally focus on the hardware, since that is our thing at STH, these systems are really about hitting new levels of simulation and computation to help solve the previously unsolvable challenges for humanity.

Great article! Sad though is the realization that our high performance “Personal Computers” are really consumer electronics and many years behind

@Jay; Hardware like this is undeniably in a different league; but IT gear is actually sort of interesting in that the consumer toy stuff is so close to the bleeding edge stuff; just in much smaller servings.

In terms of things like architecture and fab process; the fanciest Epycs you can buy don’t differ much from the chiplet-based Ryzens; and the MI250X is only slightly ahead, in process terms(TSMC 6nm vs 7nm) of Radeon and Radeon Pro parts; just a much bigger device with a lot more HBM.

Compare to, say, machine tools, where your harbor freight drill press and some big, mean, ITAR-controlled, aerospace CNC gear might as well be from different planets.

The biggest difference, as seems to be common with HPC, is interconnect; it is ethernet-based in this case; but the big cluster systems do tend to have genuinely somewhat exotic and atypically capable interconnects vs. consumer gear or even commodity datacenter stuff.

This is so cool! Every time the national labs add a zero to the end of those computation numbers I just imagine them adding more variables to the models and simulations.