Rounding out the traditional players, Google Cloud Platform now supports NVIDIA Tesla K80 GPU compute instances with up to 8 GPUs (four NVIDIA Tesla K80 cards). Both AMD and Intel already tout the ability to run workloads on their chips on the Google Cloud Platform, so it seems only fitting that NVIDIA joins the party.

NVIDIA Tesla K80s Join Google Cloud Platform

By adding the ability to run multiple NVIDIA Tesla K80s, Google is building instances that can handle heavier machine learning workloads. The move also makes Google more competitive with Amazon, since AWS already allows multiple GPUs in a single instance. AWS also uses the NVIDIA Tesla K80 rather than the NVIDIA Tesla P100. We suspect this is due to the tight supply of Tesla P100s.

From the company:

Rather than constructing a GPU cluster in your own datacenter, just add GPUs to virtual machines running in our cloud. GPUs on Google Compute Engine are attached directly to the VM, providing bare-metal performance. Each NVIDIA GPU in a K80 has 2,496 stream processors with 12 GB of GDDR5 memory. You can shape your instances for optimal performance by flexibly attaching 1, 2, 4 or 8 NVIDIA GPUs to custom machine shapes.

(Source: Google)

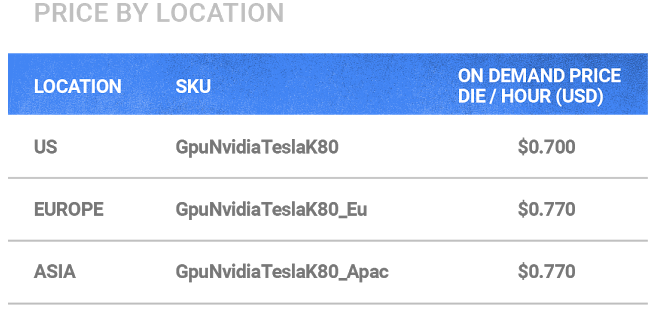

GPU Pricing on GCP

In terms of pricing, GPUs on the Google Cloud Platform cost $0.70 to $0.77 per GPU per hour with a 10-minute minimum. You can attach 1, 2, 4 or 8 GPUs, with a maximum of 8 GPUs (4x K80 cards) per VM.

The great part is that this lets a user get started with large CUDA systems for deep learning and AI without any upfront capital expenditure. The price per day is around $135 to $148 for 8 GPUs, or $16.80 to $18.48 for a single GPU (half of a K80 card). If you want to scale up quickly on a problem and then shut the instances down, it makes a lot of sense.
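For readers who want to check the daily figures or estimate a shorter run, here is a minimal sketch of the arithmetic in Python. It uses only the rates, GPU counts and 10-minute minimum quoted in this article. The function name `gpu_cost` and the constants are our own, and the sketch assumes the charge scales linearly with GPU count and time. It covers the GPU charge only, not the VM the GPUs are attached to.

```python
# Per-GPU hourly rates quoted in the article (USD). Constant names are ours.
RATE_LOW = 0.70
RATE_HIGH = 0.77
MIN_BILLED_MINUTES = 10          # 10-minute minimum quoted in the article
ALLOWED_GPU_COUNTS = (1, 2, 4, 8)  # attachable GPU counts per VM


def gpu_cost(num_gpus, minutes, rate_per_gpu_hour):
    """Estimate the GPU charge in USD.

    Assumes linear scaling with GPU count and run time, and that runs
    shorter than the minimum are billed as the minimum.
    """
    if num_gpus not in ALLOWED_GPU_COUNTS:
        raise ValueError("GPUs attach in counts of 1, 2, 4 or 8")
    billed_minutes = max(minutes, MIN_BILLED_MINUTES)
    return num_gpus * rate_per_gpu_hour * billed_minutes / 60


if __name__ == "__main__":
    day = 24 * 60  # minutes in a day
    for gpus in (1, 8):
        low = gpu_cost(gpus, day, RATE_LOW)
        high = gpu_cost(gpus, day, RATE_HIGH)
        print(f"{gpus} GPU(s) for 24 hours: ${low:.2f} to ${high:.2f}")
```

Run for a full day, this gives $16.80 to $18.48 for one GPU and $134.40 to $147.84 for eight, which matches the rounded figures above. A 5-minute test on eight GPUs would still be billed as 10 minutes under the stated minimum.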

If you are instead looking for a local, low-cost, CUDA-based machine learning / AI developer platform, check out our starter build guides:

- Optimizing a Starter CUDA Machine Learning / AI / Deep Learning Build

- DeepLearning02: A Sub-$800 AI / Machine Learning CUDA Starter Server

Those are good options that are slower but sized for someone just starting out in machine learning.

Still confused on how to get started. Need a Machine Learning for Dummies article. I’ll try to find one out on the interwebs…