The Gigabyte R272-Z32 was one of our first AMD EPYC 7002 “Rome” generation platforms. The company did a lot with the first-generation AMD EPYC 7001 series, so it is little surprise that their first product for the new generation is excellent. To whet our readers' appetite, the Gigabyte R272-Z32 is a single-socket AMD EPYC 7002 platform that can be configured to be more powerful than most dual-socket Intel Xeon Scalable systems, with more RAM capacity and PCIe storage. A game-changing platform for some ecosystems is something we always welcome. In our review, we are going to go over the high points, but also a few items we would like to see improved in future revisions.

Gigabyte R272-Z32 Overview

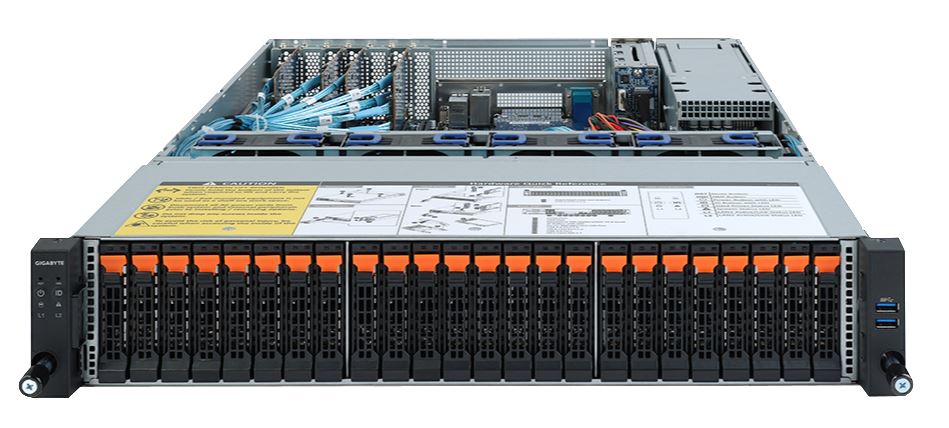

The front of the chassis is dominated by what may be its most important feature: 24x U.2 NVMe bays. We are going to discuss the wiring later, but the headline feature here is that all 24 bays have four full lanes of PCIe and they do not require a switch. In most Intel-based solutions, PCIe switches must be used that limit NVMe bandwidth due to oversubscription. With the Gigabyte R272-Z32 powered by AMD EPYC 7002 processors, one can get full bandwidth to each drive. Perhaps more impressively, this is enabled with the lowly AMD EPYC 7232P $450 SKU and there is still enough PCIe bandwidth left over for a 100GbE networking adapter.
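To make the oversubscription point concrete, here is a minimal sketch that compares the direct-attach wiring described above with a switched layout. The switched configuration (two x16 uplinks) is a hypothetical example chosen for illustration, not a description of any specific Intel-based product, and the function name is our own.

```python
def oversubscription_ratio(drive_count: int, lanes_per_drive: int, uplink_lanes: int) -> float:
    """Ratio of downstream drive lanes to upstream CPU lanes.

    A value of 1.0 means every drive lane has a dedicated CPU lane.
    """
    if uplink_lanes <= 0:
        raise ValueError("uplink_lanes must be positive")
    return (drive_count * lanes_per_drive) / uplink_lanes


# R272-Z32 as described in the review: 24 bays, x4 each, no PCIe switch.
direct_attach = oversubscription_ratio(24, 4, uplink_lanes=24 * 4)

# Hypothetical switched design (illustrative numbers only):
# the same 24 x4 drives sharing two x16 uplinks through PCIe switches.
switched = oversubscription_ratio(24, 4, uplink_lanes=2 * 16)

print(f"direct attach: {direct_attach:.1f}:1")
print(f"switched:      {switched:.1f}:1")
```

A ratio above 1:1 only matters when enough drives are busy at once to fill the uplinks, so the practical impact depends on the workload.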

The rear of the server is very modern. Redundant 1.2kW 80Plus Platinum power supplies are on the left. There are two 2.5″ SATA hot-swap bays in the rear which will most likely be used for OS boot devices.

Legacy ports include a serial console port and VGA port. There are three USB 3.0 ports as well for local KVM support. For remote iKVM, there is an out-of-band management port. Rounding out the standard rear I/O is dual 1GbE networking via an Intel i350 NIC.

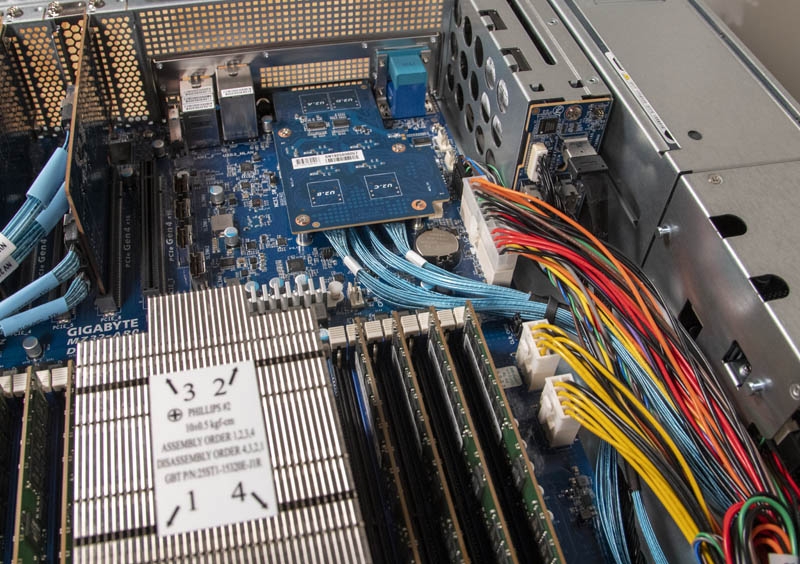

Opening the chassis up, one can see the airflow design from NVMe SSDs to the fan partition to the CPU and then the rest of the motherboard. We wanted to call out Gigabyte for great use of the front label to provide a quick reference guide. These labels are commonly found on servers from large OEMs such as Dell EMC, HPE, and Lenovo, but are often missing from smaller vendor designs. This is not the most detailed quick reference guide, but it provides many of the basics someone servicing the chassis would need. In colocation with remote hands, these types of guides can be extremely helpful.

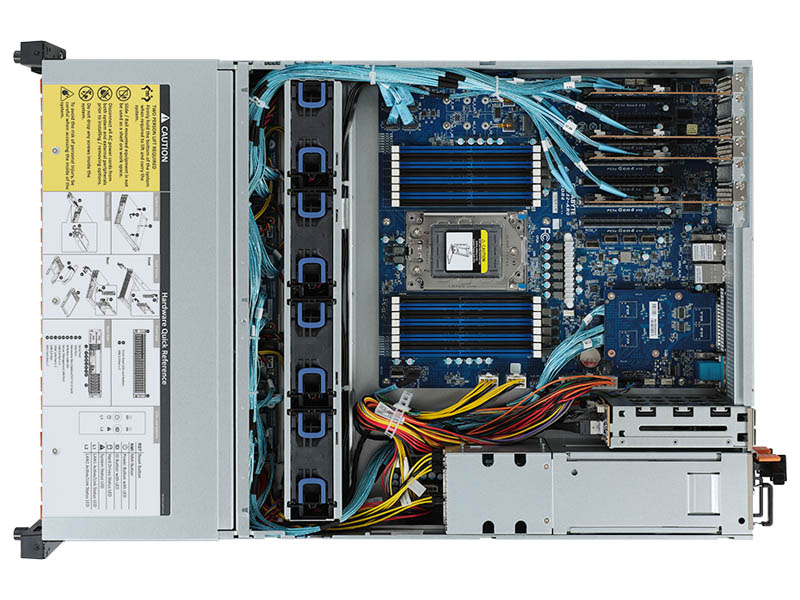

The motherboard itself is a Gigabyte MZ32-AR0. This is a successor to the Gigabyte MZ31-AR0 we reviewed in 2017. Make no mistake, Gigabyte made some major improvements in this revision.

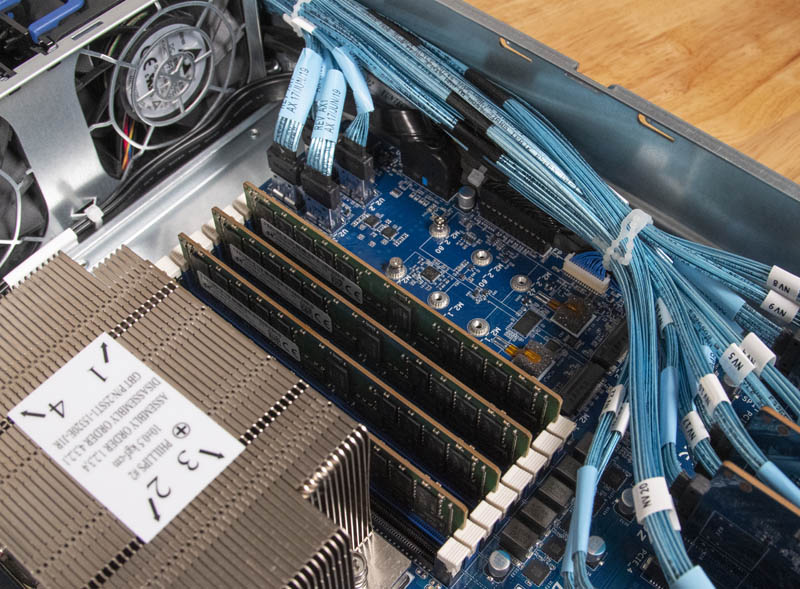

One of the big improvements is a shift from a single M.2 NVMe slot to dual slots. The dual M.2 option is limited to M.2 2280 (80mm) SSDs. For boot devices, that is plenty. It will not, however, fit the Intel Optane DC P4801X cache drive, for example, nor M.2 22110 (110mm) SSDs with power loss protection (PLP). This is a great feature, but one we would actually sacrifice for more PCIe expansion. We are going to explain why next.

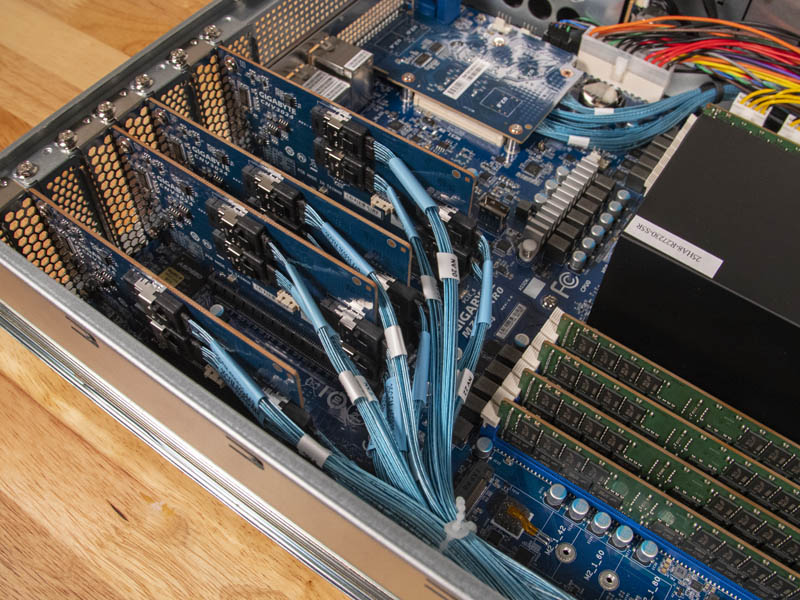

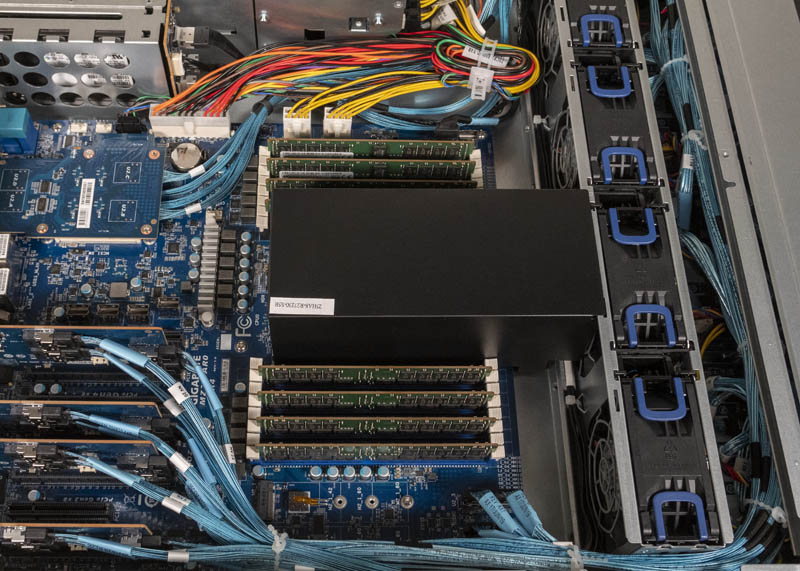

If you look at the physical motherboard layout, there appear to be three open PCIe x16 slots. The other four slots are occupied by the PCIe risers used for front U.2 drive bay connectivity. The remaining three slots are not all functional.

Instead, there is only one functional slot, Slot #5, which is x16 physical but only PCIe Gen4 x8 electrical. To us, this is a big deal. That means to utilize a 100GbE adapter, one must have a PCIe Gen4 capable NIC. With a PCIe Gen3 NIC in this slot, one is limited to just over 50Gbps of bandwidth. For a server with 96 PCIe lanes to the U.2 front panel drives, it would have been nice to have a PCIe Gen4 x16 slot for 200GbE or 2x 100GbE links for external networking. This would be worth losing the two M.2 drives, especially with the dual SATA boot drive option.
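The slot math can be sketched with the published PCIe signalling rates: 8 GT/s per lane for Gen3, 16 GT/s per lane for Gen4, both with 128b/130b line encoding. The helper below is an illustrative calculation, not a measurement. It gives the ceiling after line encoding only (about 63 Gbps for Gen3 x8) and does not model packet and protocol overhead, so usable NIC throughput lands below these numbers.

```python
# Raw per-lane signalling rate in GT/s. Gen3 and Gen4 both use 128b/130b encoding.
GT_PER_LANE = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def link_gbps(gen: int, lanes: int) -> float:
    """Theoretical one-direction link bandwidth in Gbps after line encoding."""
    return GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY


# (PCIe generation, lane count, network speed we would like to feed, in Gbps)
scenarios = [(3, 8, 100), (4, 8, 100), (4, 16, 200)]

for gen, lanes, target in scenarios:
    bw = link_gbps(gen, lanes)
    verdict = "enough" if bw >= target else "not enough"
    print(f"Gen{gen} x{lanes}: {bw:6.1f} Gbps ceiling, {verdict} for {target}GbE")
```

This is why the x8 electrical slot only reaches 100GbE with a Gen4 NIC, and why a Gen4 x16 slot would be needed for 200GbE or dual 100GbE.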

The OCP slot would be a networking option, however, it is utilized for four more NVMe front bay connectivity points.

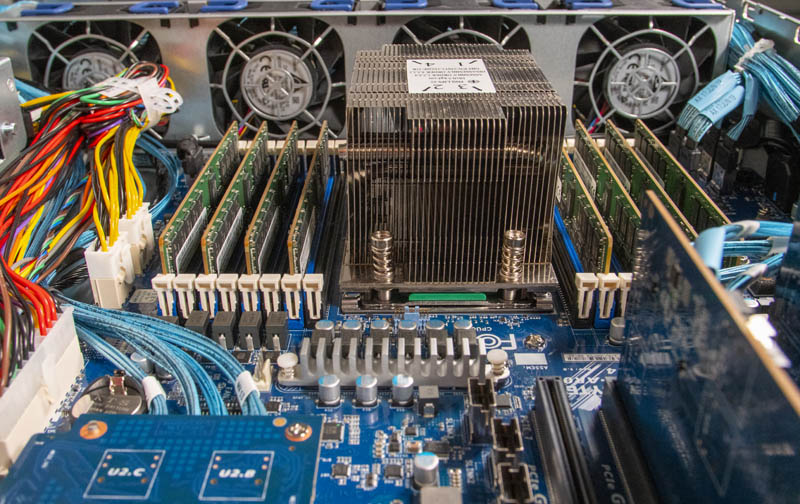

The AMD EPYC 7002 socket itself is not going to be backward compatible with the first generation AMD EPYC 7001 series if you want PCIe Gen4, but it will be compatible with the future EPYC 7003 series. One of the great features is that this solution has a full set of 16 DDR4 DIMM slots. One can use 8x DIMMs at DDR4-3200 speeds or 16x at DDR4-2933, but be sure to ask your rep for modules with the right rank and speed.
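To put the DDR4-3200 versus DDR4-2933 tradeoff in numbers, here is a rough sketch of theoretical peak memory bandwidth. It assumes eight memory channels per socket, which comes from AMD's public EPYC 7002 specifications rather than from this review, and a 64-bit (8 byte) data path per channel. Sustained bandwidth in practice will be lower.

```python
CHANNELS = 8            # assumption: eight DDR4 channels per EPYC 7002 socket
BYTES_PER_TRANSFER = 8  # 64-bit data bus per channel


def peak_memory_gbs(mega_transfers_per_s: int, channels: int = CHANNELS) -> float:
    """Theoretical peak bandwidth in GB/s (decimal) for a given DDR4 speed."""
    return mega_transfers_per_s * BYTES_PER_TRANSFER * channels / 1000


one_dimm_per_channel = peak_memory_gbs(3200)   # 8x DIMMs at DDR4-3200
two_dimms_per_channel = peak_memory_gbs(2933)  # 16x DIMMs at DDR4-2933

print(f"8x DIMMs  @ DDR4-3200: {one_dimm_per_channel:.1f} GB/s peak")
print(f"16x DIMMs @ DDR4-2933: {two_dimms_per_channel:.1f} GB/s peak")
```

Filling all 16 slots trades roughly 8% of peak bandwidth for double the DIMM count, which is often the right call for capacity-bound workloads.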

Fans in the Gigabyte R272-Z32 are hot-swap designs as one would expect in a chassis like this. We have seen this fan design before from Gigabyte and they are fairly easy to install. The one part of the cooling solution we did not love is the relatively flimsy airflow guide. We would have preferred a more robust solution like the one we have seen on other Gigabyte designs (e.g. the Gigabyte R280-G2O GPU/GPGPU rackmount server). That would have allowed Gigabyte to design in space for additional SATA III drives using internal mounting on the airflow shroud.

The last point we wanted to show with the solution is the cabling which you may have seen. As PCIe connections increase in distance, we will, as an industry, see more cables. The blue data cables in the Gigabyte R272-Z32 are prime examples of that. Beyond these cables, the MZ32-AR0 motherboard is a standard form factor motherboard which means it is easy to replace, but there are a lot of cables. As you can see, the cables are tucked away around the redundant power supply’s power distribution. This design adds more cables, but it also means one can fairly easily replace the motherboard in the future.

At the end of the day, Gigabyte made perfectly reasonable tradeoffs in order to quickly bring this platform to market. With 96 of the 128 high-speed I/O lanes being used for front panel NVMe, Gigabyte is breaking new ground here. At the same time, Gigabyte has an opportunity to make a few tweaks to this design to create an absolute monster for the hyper-converged market if it is not already so.
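As a back-of-the-envelope tally of the lane budget discussed in this overview, the sketch below adds up the consumers the review names. The x4 width for each M.2 slot is an assumption, and the review does not itemize where the remaining lanes go. SATA ports also draw from the same high-speed I/O pool, so the remainder should not be read as 16 free PCIe lanes.

```python
TOTAL_HSIO_LANES = 128  # total high-speed I/O lanes cited in the review

# Lane consumers named in the overview. The M.2 width is an assumption.
consumers = {
    "front U.2 bays (24 bays, x4 each)": 24 * 4,
    "Slot #5 (x8 electrical)": 8,
    "2x M.2 (assumed x4 each)": 2 * 4,
}

used = sum(consumers.values())
remaining = TOTAL_HSIO_LANES - used

for name, lanes in consumers.items():
    print(f"{name:36s} {lanes:3d}")
print(f"{'accounted for':36s} {used:3d}")
print(f"{'not itemized (SATA, other I/O)':36s} {remaining:3d}")
```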

Next, we are going to talk topology since that is drastically different on these new systems. We are then going to look at the management aspects before getting to performance, power consumption, and our final words.

isn’t that only 112 pcie lanes total? 96 (front) + 8 (rear PCIe slot) + 8 (2 m.2 slots). did they not have enough space to route the other 16 lanes to the unused PCIe slot?

bob the b – you need to remember SATA III lanes take up HSIO as well.

M.2 to U.2 converters are pretty cheap.

Use Slot 1 (16x PCIe4) for the network connection 200 gbit/s

Use Slot 5 and the 2xM.2 for the NVMe-drives.

How did you test the aggregated read and write? I assume it was a single RAID 0 over 10 and 14 drives?

We used targets on each drive, not as a big RAID 0. The multiple simultaneous drive access fits our internal use pattern more closely and is likely closer to how most will be deployed.

Wondering if it supports Gen4 NVMe?

CLL – we did not have a U.2 Gen4 NVMe SSD to try. Also, some of the lanes are hung off PCIe Gen3 lanes so at least some drives will be PCIe Gen3 only. For now, we could only test with PCIe Gen3 drives.

Wish I could afford this for the homelab!

Thanks Patrick for the answer. For our application we would like to use one big RAID. Do you know if it is possible to configure this on the EPYC system? With Intel this seems to be possible by spanning disks over VMDs using VROC.

Intel is making life easy for AMD.

“Xeon and Other Intel CPUs Hit by NetCAT Security Vulnerability, AMD Not Impacted”

CVE-2019-11184

Most 24x NVMe installations are using software RAID, erasure coding, or similar methods.

I may be missing something obvious, and if so please let me know. But it seems to me that there is no NVMe drive in existence today that can come near saturating an x4 NVMe connection. So why would you need to make sure that every single one of the drive slots in this design has that much bandwidth? Seems to me you could use x2 connections and get far more drives, or far less cabling, or flexibility for other things. No?

Jeff – PCIe Gen3 has been saturated by NVMe offerings for a few years and generations of drives now. Gen4 will be as soon as drives come out in mass production.

Patrick,

If you like this Gigabyte server, you would love the Lenovo SR635 (1U) and SR655 (2U) systems!

– Universal drive backplane; supporting SATA, SAS and NVMe devices

– Much cleaner drive backplane (no expander cards and drive cabling required)

– Support for up to 16x 2.5″ hot-swap drives (1U) or 32x 2.5″ drives (2U);

– Maximum of 32x NVMe drives with 1:2 connection/over-subscription (2U)

Hi BinkyTo – check out https://www.servethehome.com/lenovo-amd-epyc-7002-servers-make-a-splash/

There are pluses and minuses of each solution. The more important aspect for the industry is that there are more options available on the market for potential buyers.

Thanks for the pointer, it must have skipped my mind.

One comment about that article: It doesn’t really highlight that the front panel drive bays are universal (SATA, SAS and NVMe).

This is a HUGE plus compared to other offerings, the ability to choose the storage interface that suits the need, at the moment, means that units like the Lenovo have much more versatility!

This is what I was looking to see next for EPYC platforms… I always said it has Big Data written all over it… A 20 server rack has 480 drives… 10 of those is 4800 drives… 100 is 48000 drives… and at a peak of ~630W each, that’s an astounding amount of storage at around 70KW…

I can see Twitter and Google going HUGE on these since their business is data… Of course DropBox can consolidate on these even from Naples…

Where can I buy these products

Olalekan we added some information on that at the end of the review.

Can such a server have all the drives connected via a RAID controller like Megaraid 9560-16i?

Before commenting about software RAID or ZFS, in my application, the bandwidth provided by this RAID controller is way more than needed, with the comfort of providing worry free RAID 60 and hiding very well the write peak latencies due to the battery backup cache.

Mr. Kennedy, I recently bought an R152-Z31 with an AMD EPYC 7402P, which uses the same MZ32-AR0 motherboard as the R272-Z32, to test our apps on an AMD processor. I am now confused about the differences between ranks in RAM modules. In your review I saw you used Micron modules, and on the Micron web page (https://www.crucial.com/compatible-upgrade-for/gigabyte/mz32-ar0) there are several 16GB models. Could you recommend a model that will at least work fine? I would appreciate your help a lot.