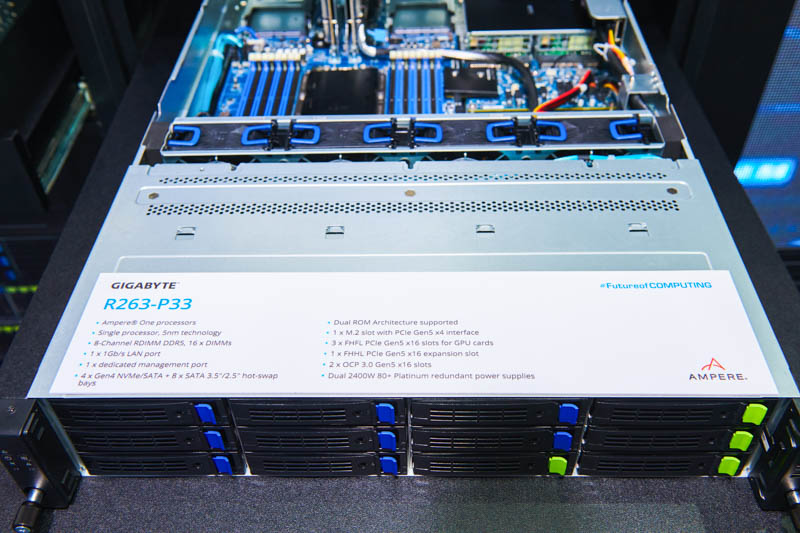

At Computex 2023, we saw the Gigabyte R263-P33 Ampere AmpereOne Arm server. This was a cool server to see as the release of AmpereOne CPUs inch toward general availability. While they are cloud-only right now, we expect that to change in the not-too-distant future, and so seeing servers like this Gigabyte one is exciting. Let us take a quick look.

Gigabyte R263-P33 Ampere AmpereOne Arm Server at Computex 2023

Here are the key specs of the Gigabyte R263-P33. Since we cannot see the back, the standard I/O is listed as a 1GbE port and a dedicated management port. The power supplies are 2.4kW 80+ Platinum units which seem extreme.

The front of the chassis has four 3.5″ bays focused on NVMe and the remainder on SATA and SAS with an optional controller. This is a 2U platform, but Gigabyte also has a 1U platform for those that want more density, as well as a dual-socket platform coming.

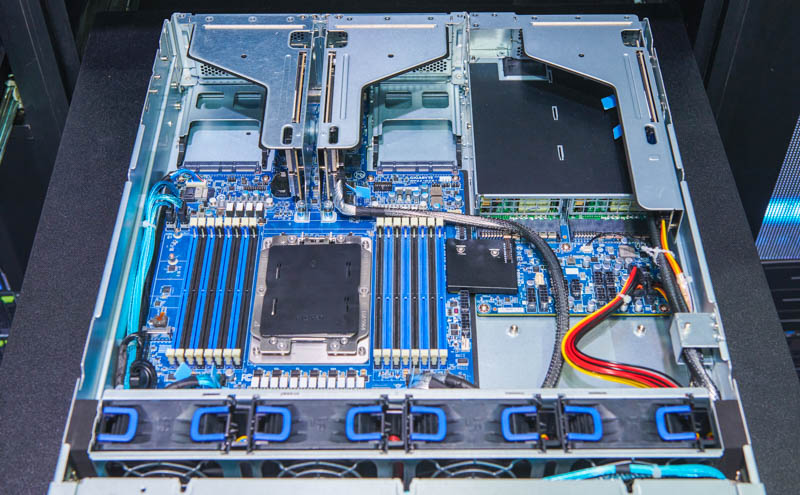

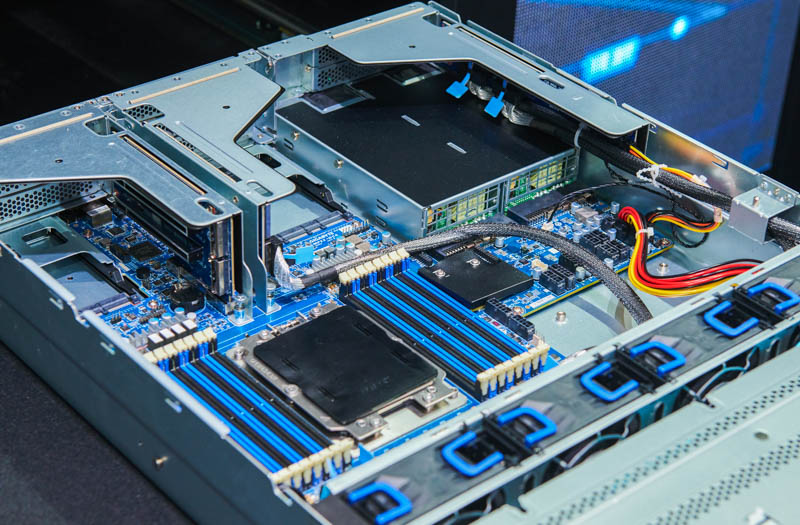

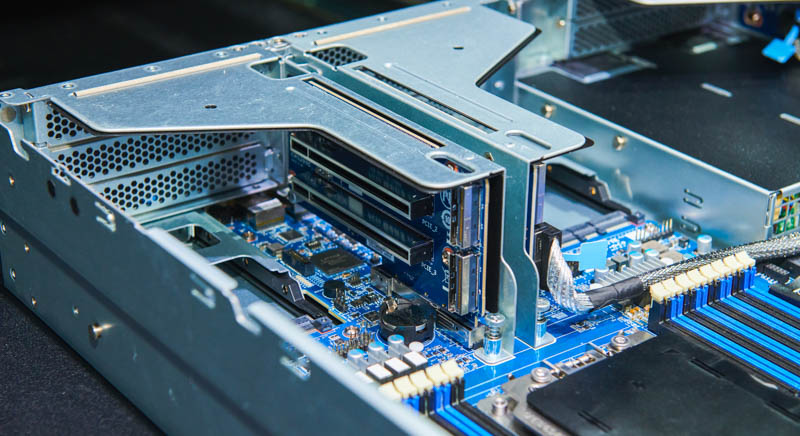

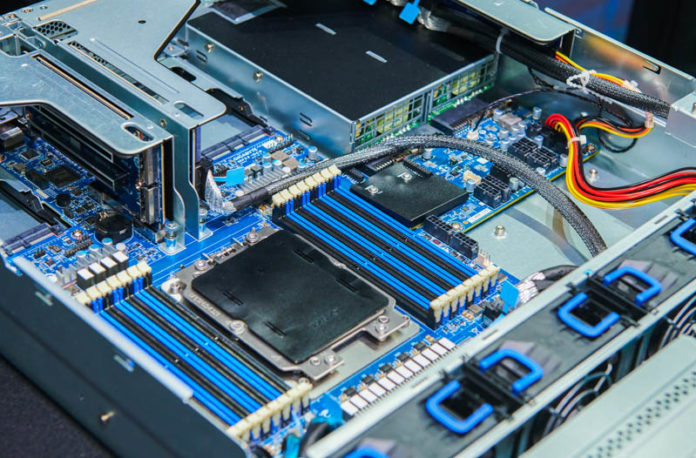

Inside the server we can see a fairly standard layout.

There is a four hot-swap fan partition in the middle behind the storage backplane. We can also see a more commercial-style motherboard than a proprietary form factor.

Here is another angle where we can see the layout a bit better.

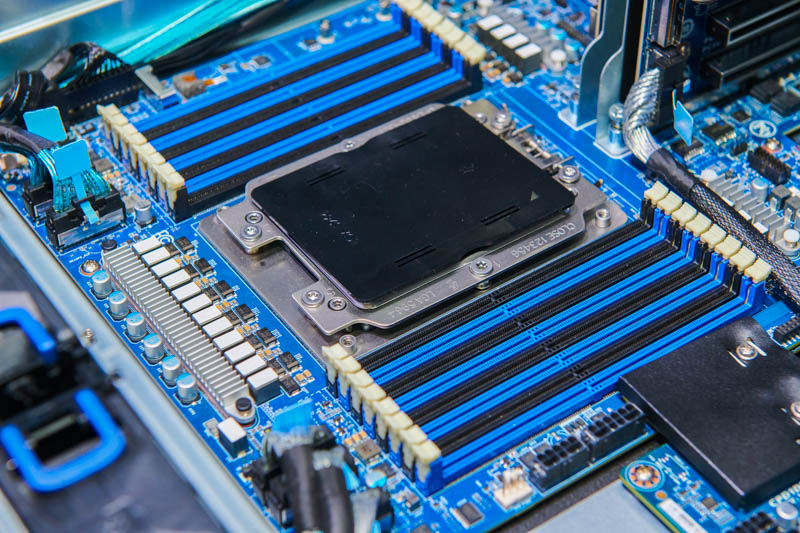

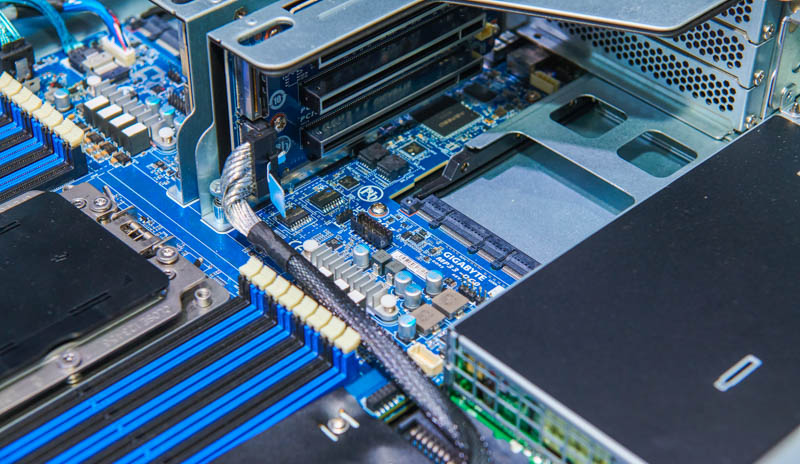

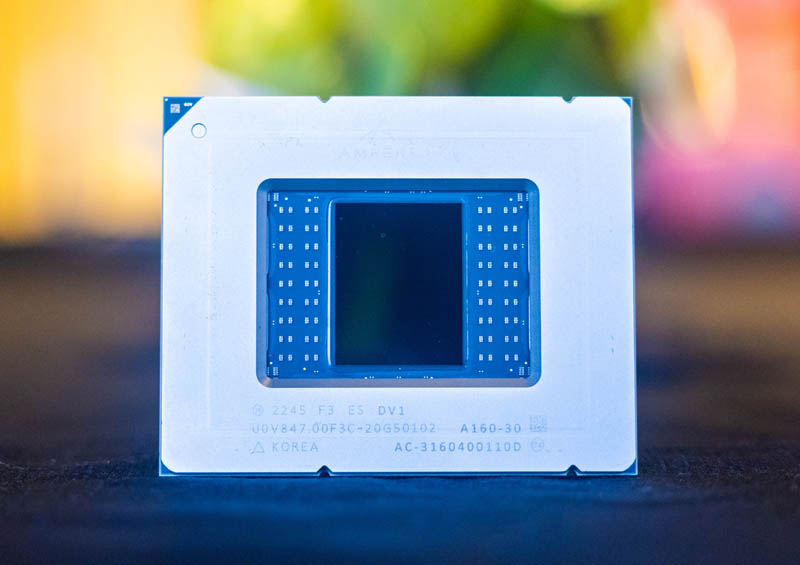

The start of the show is the LGA5964 socket for AmpereOne. We also get eight DDR5 DIMM channels.

On the back, we have two risers for PCIe slots.

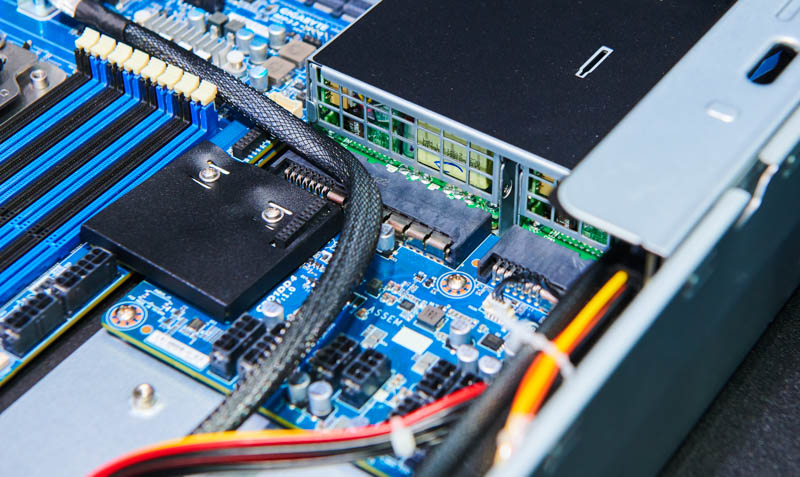

Here is the other riser. In total, we get 3x full height full-length PCIe Gen5 x16 slots with GPU support and another PCIe Gen5 x16 half-length slot.

Networking is provided via the two OCP NIC 3.0 slots on either side of the risers. There is also what might be the fastest boot solution around as a PCIe Gen5 x4 M.2 slot. Generally, we see PCIe Gen3 x1 and maybe x4 slots being used for boot devices these days.

Since our team had this question, the black box in these photos covers the connection between the power distribution board and the motherboard.

This is one of a growing number of AmpereOne servers.

Final Words

Overall, it is great to see more of these servers. Now we just need AmpereOne chips. The Ampere Altra Max 128 core part is great for having many cores, but AMD EPYC “Bergamo” is set to 3x the SPECint rates per socket. We will have more on that later this week. That is not the market that Ampere is necessarily competing in, but Ampere is going to need a higher core count and a new core to push its high core count agenda versus AMD now that AMD has a higher core count offering. Hopefully, we can bring you reviews of both the server and the chip in the not-too-distant future. For now, you can learn more about AmpereOne here.

Since memory bandwidth is more expensive than microcoded instruction pipelines, is it possible that CISC is intrinsically more performant then RISC? On the other hand, maybe AARCH64 isn’t really RISC.

SpecInt is relevant to many computing loads but not all. Do the high-core-count Bergamo processors underperform on floating point?

When it comes time to review these machines, it would be nice to include floating point performance along with the usual tests of memory, networking, storage and integer logic speeds.

Historically RISC has been more performant than CISC, as a result RISC replaced CISC in pretty much all markets. Note AArch64 code density is better than x86_64, so what makes you think CISC needs less memory bandwidth?

SPECINT/FP is gamed by vendor specific compilers like ICC and AOCC (and not by a small amount either). Hence for a fair comparison you need to use the same compiler and options. And using a compiler that is used to build most software (such as GCC) would be a good idea since that might be the compiler that is used to build the software you use too.

The low code density of x86_64 is a good point. It seems I was dreaming of some mythical CISC architecture that was designed for optimal code density.

The whole RISC vs. CISC debate at this era is mostly academic at this time. The big distinguishing features of RISC were a fixed instruction length with defined encoding, no computational operations on memory, and general aversion to microcoded instructions as defined in the architectural ISA. What these restrictions permitted is simplicity in design for early RISC architectures but at the cost of leveraging more instructions to performance the same work as a CISC architecture. Nowadays code density isn’t that critical to performance but decades ago it mattered due to memory capacity and cost. For similar reasons RISC architectures were generally more sensitive to caching algorithms and cache capacity. On the other hand, the fixed instruction length and defined encoding allowed some clever L1 instruction tricks to enhance performance that simply couldn’t be done on CISC designs back in the day. This leads into the real important design details between RISC and CISCS today: instruction scheduling, implementation and power consumption.

Modern CISC processors decode instructions to RISC-like micro-ops to leverage many of the inherent design advantages. This comes at the cost of additional transistors to perform that micro-op translation and more transistors performing an operation comes at increased power. This is where the advantages of RISC are currently shown, note that this is not raw performance. The power consumption side can be reduced on the CISC side by caching the decoded micro-ops so for repeated operations decoding is only done once while relevant in a program loop. This additional caching consumes more die space. However, modern high performance RISC designs also do some micro-op caching which seems counter intuitive to RISC principles. Additional tags are added to the cached RISC instructions to assist in extracting parallelism. Those additional tags enhance scheduling operations to extract more parallelism and maximize out-or-order potential. These types of instruction tagging can also be performed inside CISC designs, the challenge is building enough information quickly while being relevant in concurrent execution. The simplicity of RISC generally permits far more instructions to be analyzed simultaneously vs. decoded CISC operations. Similarly additional tagging on CISC consumes additional power and die space.

It should be noted again that instruction decoding and additional tagging are not part of an ISA definition but a detail on ISA implementation. A good implementation, be it CISC or RISC, will best out a poor implementation. Design is what matters most for performance.