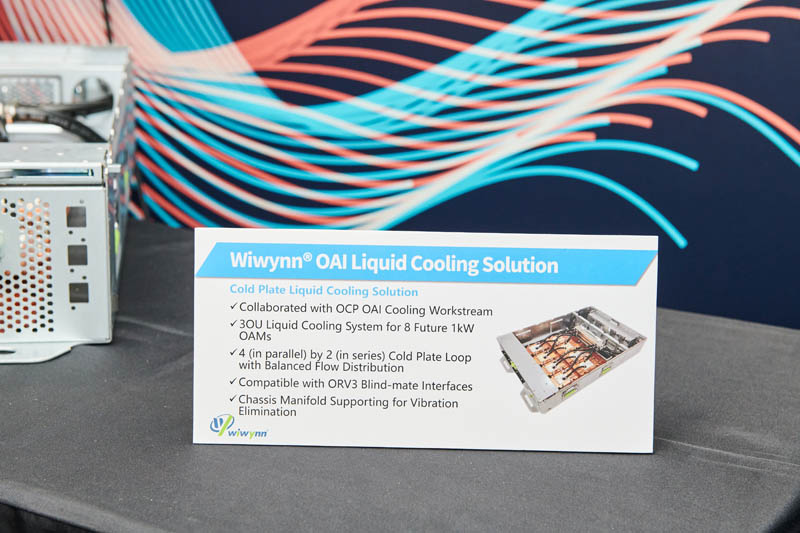

At Computex 2023, Wiwynn showed off a solution to house eight accelerators and cool them via liquid cooling. As TDPs rise, liquid cooling can save 10-20% of system power dedicated to fans, and that is making the technology very attractive for server operators, especially those running large GPU or AI accelerator farms. This is the company’s solution for 1kW GPUs and AI accelerators as hyper-scale clients move away from 2-phase immersion cooling, at least in the near term.

Wiwynn Liquid Cooling for 8kW AI Accelerator Trays Shown

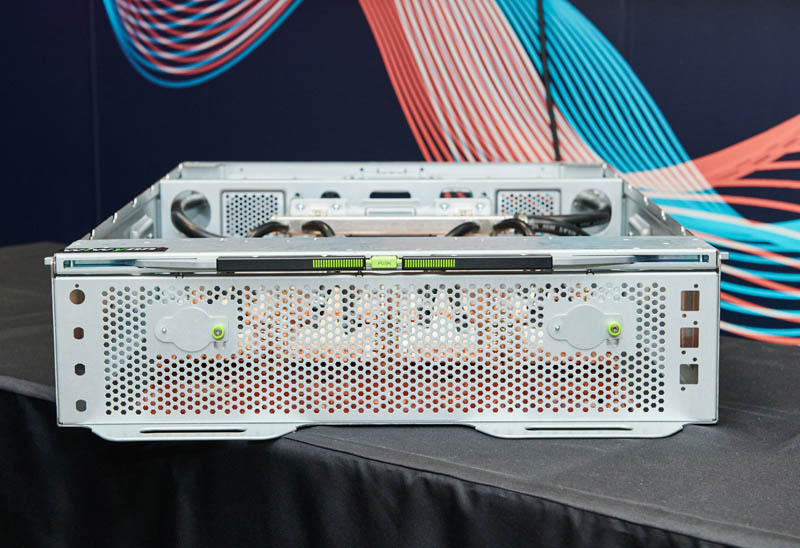

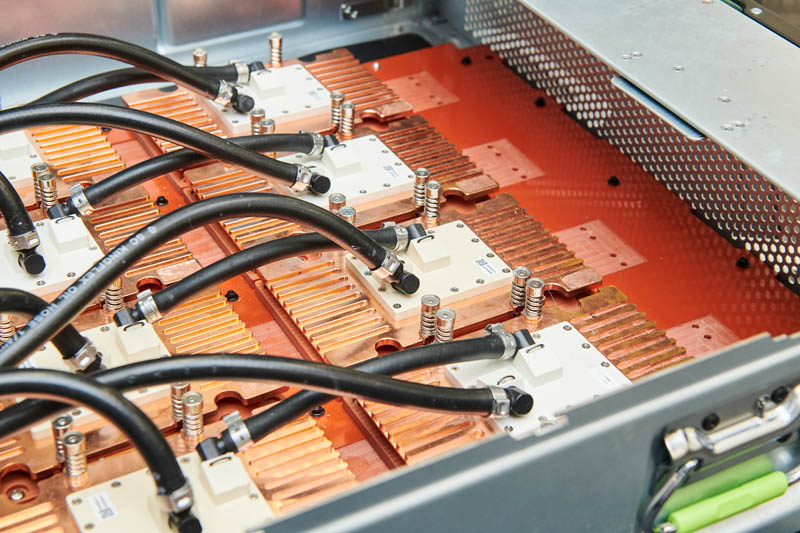

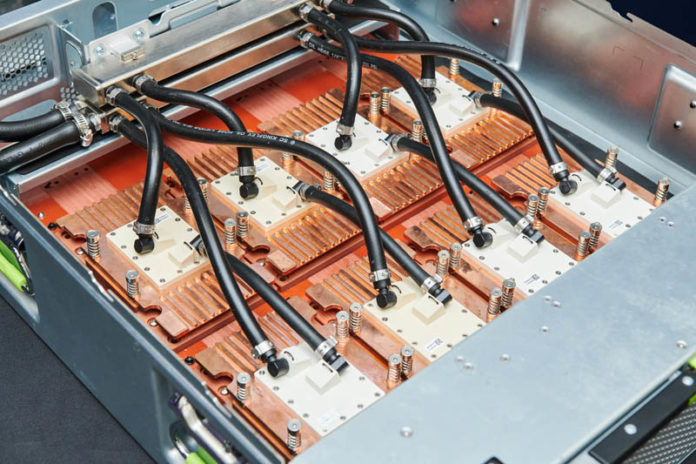

The chassis we saw was not populated, so ports and many components are missing, but this was to show off the cooling apparatus within the server.

Here is another look. This is a great example of even the prototype chassis having the bright green touch points labeled for things like handles and thumb screws.

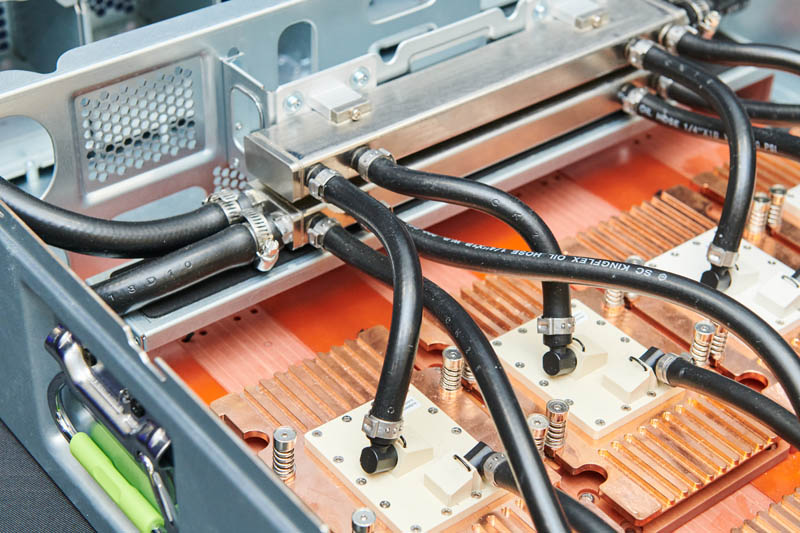

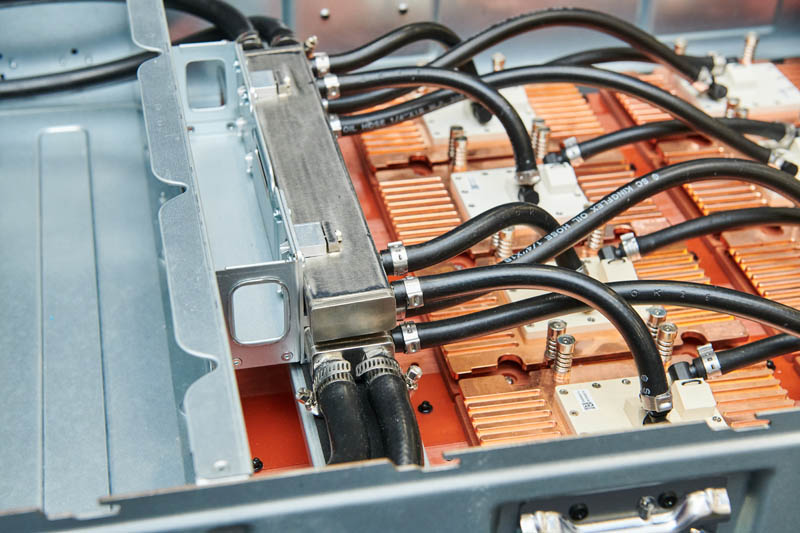

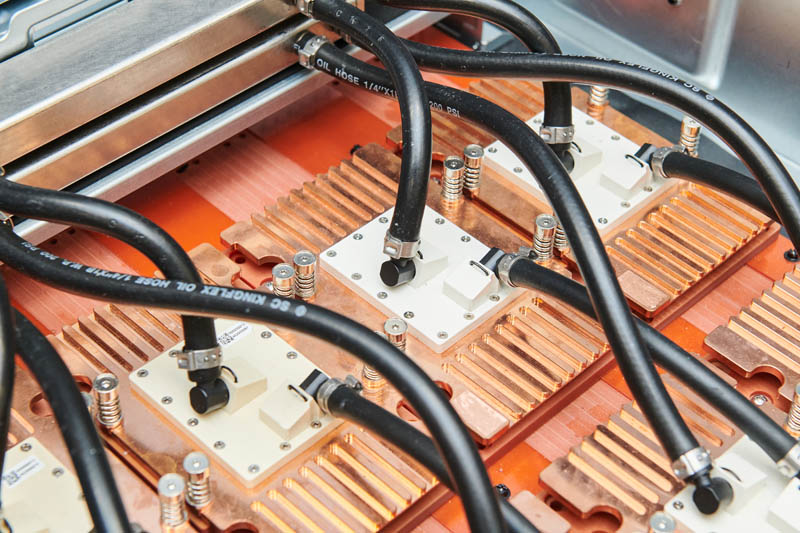

Inside the server, we can see larger diameter hoses feeding and exiting the internal cooling distribution blocks.

These are in many ways similar to rack manifolds that distribute cooling fluids at a rack level, but they are inside the chassis. Since this is specifically designed for 1kW GPUs and AI accelerators, the internal manifold is cooling components that draw a lot more power than the old typical 208V 30A racks can deliver. Those racks are still used in many data centers.
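To put the rack comparison in numbers, here is a rough sketch of the arithmetic. It assumes a single-phase 208V 30A circuit, a power factor of 1.0, and the common practice of loading a circuit to 80% for continuous use. Those three assumptions are ours for illustration, as is the helper name `circuit_capacity_watts`. The eight 1kW accelerators come from the design described above.

```python
def circuit_capacity_watts(volts, amps, derate=0.8, power_factor=1.0):
    """Usable power of a single-phase circuit in watts.

    derate and power_factor are illustrative assumptions, not figures
    from Wiwynn or from any specific data center.
    """
    return volts * amps * derate * power_factor


RACK_VOLTS = 208
RACK_AMPS = 30
ACCELERATORS = 8                # eight accelerators in the tray
WATTS_PER_ACCELERATOR = 1000    # 1kW design target per accelerator

# Full breaker rating with no derating applied.
nameplate_w = circuit_capacity_watts(RACK_VOLTS, RACK_AMPS, derate=1.0)
# Continuous-load budget with the assumed 80% derating.
continuous_w = circuit_capacity_watts(RACK_VOLTS, RACK_AMPS)
# Accelerator load alone, ignoring CPUs, memory, NICs, and pumps.
tray_w = ACCELERATORS * WATTS_PER_ACCELERATOR

print(f"Circuit nameplate:  {nameplate_w / 1000:.2f} kW")
print(f"Circuit continuous: {continuous_w / 1000:.2f} kW")
print(f"Accelerator tray:   {tray_w / 1000:.2f} kW")
print(f"Tray exceeds nameplate: {tray_w > nameplate_w}")
```

Under these assumptions, the accelerators alone ask for more than the entire circuit can supply, before counting anything else in the server. A three-phase 208V 30A feed would change the numbers, so treat this as an order-of-magnitude check.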

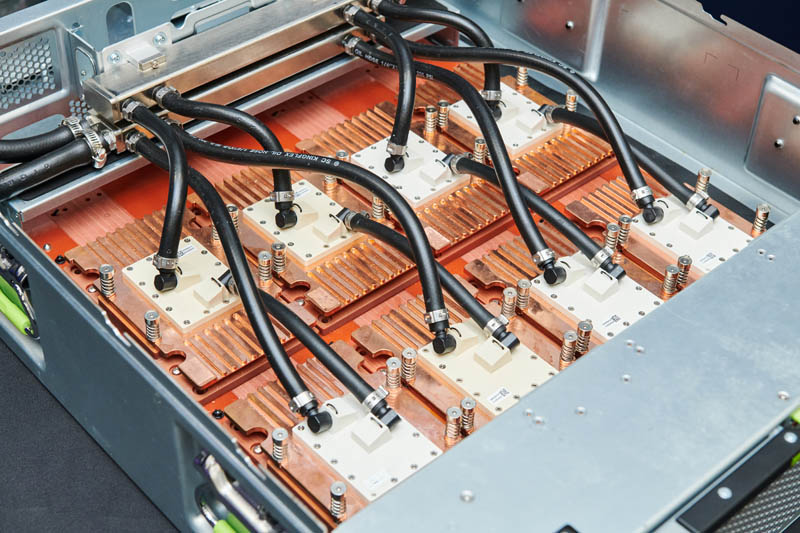

Another way to look at it is that there are 8x 1kW AI accelerator cold plates here. So this chassis has to cool an 8kW AI accelerator tray. The design is for OAM/OAI modules and appears to also be able to cool the Universal Baseboard (UBB) for 8x accelerators.
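For a sense of what moving 8kW into liquid means, here is a back-of-the-envelope sketch using the standard heat balance Q = m × c_p × ΔT. Everything other than the 8kW and 1kW figures is an assumption for illustration: plain water as the coolant (real loops often run water-glycol mixes with somewhat different properties), a 10 K temperature rise across the tray, and the function name `coolant_flow_lpm`. None of this comes from Wiwynn's specifications.

```python
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), assumed: plain water
WATER_DENSITY = 1.0           # kg/L, assumed: plain water near room temperature


def coolant_flow_lpm(heat_w, delta_t_k,
                     cp=WATER_SPECIFIC_HEAT, density=WATER_DENSITY):
    """Liters per minute of coolant needed to carry heat_w watts
    with a coolant temperature rise of delta_t_k kelvin."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_w / (cp * delta_t_k)  # from Q = m * cp * dT
    return mass_flow_kg_s / density * 60.0      # kg/s -> L/min


TRAY_HEAT_W = 8 * 1000   # 8x 1kW cold plates
ASSUMED_DELTA_T_K = 10   # hypothetical inlet-to-outlet rise

tray_lpm = coolant_flow_lpm(TRAY_HEAT_W, ASSUMED_DELTA_T_K)
plate_lpm = coolant_flow_lpm(1000, ASSUMED_DELTA_T_K)

print(f"Whole tray:     {tray_lpm:.1f} L/min")
print(f"Per cold plate: {plate_lpm:.1f} L/min")
```

Halving the allowed temperature rise doubles the required flow, which is one reason the hoses feeding the internal distribution blocks are a larger diameter than the lines running to each cold plate.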

Each cold plate is copper, and the nozzles are set on top of the cold plates.

Although most current-generation GPUs/AI accelerators are in the 400-700W range, there is a clear path to 1kW in the near term before eventually eclipsing that. As a result, a single GPU or AI accelerator will use more power than many dual-socket servers. We expect CPU TDPs to hit around 500W and then stay in that range for some time.

Perhaps that is part of the intrigue in this liquid cooling solution. Each cold plate is designed to cool something using as much power as a high-end dual-socket server uses.

Final Words

For those who are unaware, Wiwynn is a company that makes servers for large hyper-scale clients, with both Microsoft Azure and Facebook showing off Wiwynn-made servers at various OCP Summits. That makes Wiwynn designs important and at the forefront of the AI revolution. If Wiwynn is showing cooling for 1kW accelerators, it is a good indicator that large hyper-scale clients are gearing up to deploy the same.

We have a bunch of liquid cooling content coming on STH. If you want to learn how liquid cooling works, you can see How Liquid Cooling Servers Works with Gigabyte and CoolIT.

I shudder at the cost of a leak! Surprised the solution is hoses & clamps, not custom machining given the value of the parts?

@Y0s, Think of brake lines and hydraulic lines. They run with MUCH higher pressure than these will and we don’t question their reliability. There is nothing wrong with properly crimped hoses with clamps.

The automotive comparison is a bit different given that cars are subject to different types of vibrations than in a data center rack. Nowadays, outside of fans and liquid water there really isn’t any other type of actively moving part in these racks, which should increase their reliability. Having said that, on the automotive side, I have suffered from a not so properly crimped hose and/or ones that have failed while in transit. Not a perfect record but still exceptionally good given the context.

What does surprise me is that quick connect tubing is not being used. These offer better serviceability for working inside these systems. My presumption is that either the connectors used here are reliable enough in these environments to not be a major issue, or this is a move to reduce costs.

Additionally, I am surprised that there isn’t more airflow over the VRM of these cards. Liquid handles the hot and thermally dense chips, but there are additional components outside of those that need some cooling. Granted, there is airflow from the chassis, but I would think that the portion of the heat sink over the VRM would have larger fins to leverage this.

Hi Eric,

How will this liquid cooling technology affect the warranty for main server components (CPU/SSD/DIMM)?

So, out of mild curiosity, what is the liquid exactly? Either the perfectly safe stuff they are phasing out for fun or…? If the answer is water (that’s the other option on their website), we need a national fight arena built. Data center drones vs Corpo Farmer. No holds barred, winner gets that quarter’s water rights. Or maybe the population just forgoes showers and drinking. Probably the last two things.

@W this way we have a solution for rising ocean levels (because of climate change) seems like a win win