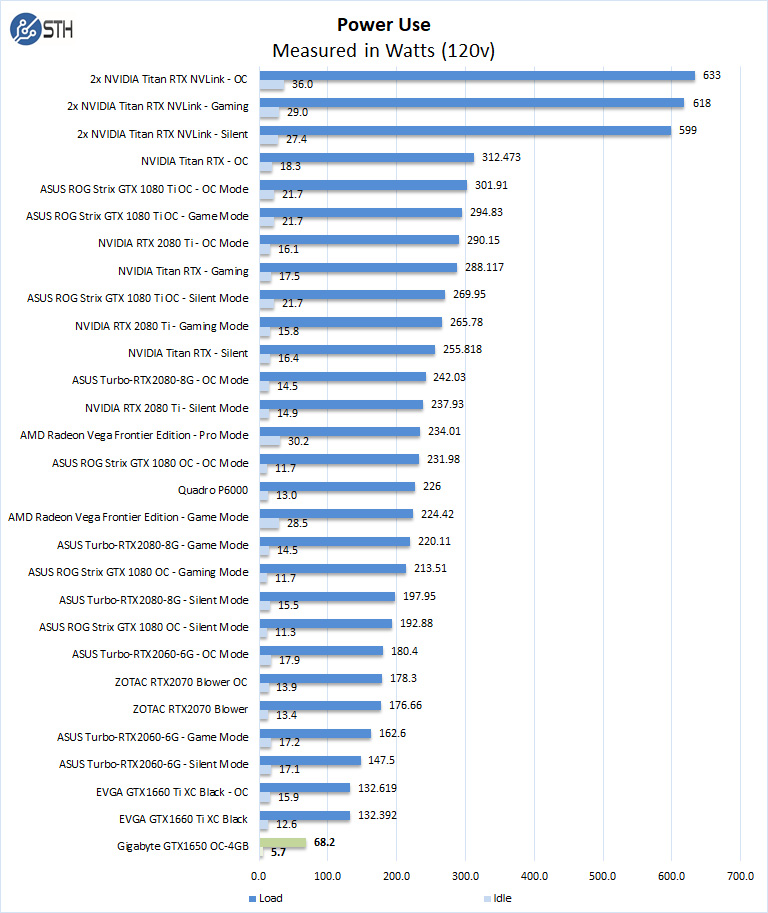

Power Tests

For our power testing, we used AIDA64 to stress the Gigabyte GeForce GTX 1650 OC, then HWiNFO to monitor power use and temperatures.

Once the stress test has ramped up the Gigabyte GeForce GTX 1650 OC, we see it top out at 68.2 W under full load and 5.7 W at idle. For an entry-level graphics card, that is very good power consumption.

We want to quickly note that, with no additional 6/8-pin power connector and such a low power envelope, these GPUs can work well in edge applications where power and power supply capacity are limited.
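To put those two measurements in context, here is a rough sketch of how they translate into energy use over a year. Only the 68.2 W and 5.7 W figures come from our testing. The function names, the `load_fraction` duty cycle, and the electricity price parameter are illustrative assumptions, so plug in numbers that match your own deployment.

```python
# Measured figures from the power test above.
LOAD_WATTS = 68.2
IDLE_WATTS = 5.7

HOURS_PER_YEAR = 24 * 365


def annual_energy_kwh(load_watts, idle_watts, load_fraction):
    """Estimate yearly energy in kWh.

    load_fraction is a hypothetical duty cycle: the share of time the card
    spends at full load (0.0 = always idle, 1.0 = always loaded).
    """
    if not 0.0 <= load_fraction <= 1.0:
        raise ValueError("load_fraction must be between 0 and 1")
    # Time-weighted average power draw in watts.
    average_watts = load_fraction * load_watts + (1.0 - load_fraction) * idle_watts
    return average_watts * HOURS_PER_YEAR / 1000.0


def annual_cost(energy_kwh, price_per_kwh):
    """Yearly cost for a given energy use; price_per_kwh is an assumed input."""
    return energy_kwh * price_per_kwh


if __name__ == "__main__":
    # Example duty cycles only; none of these are measured workloads.
    for fraction in (0.0, 0.25, 1.0):
        kwh = annual_energy_kwh(LOAD_WATTS, IDLE_WATTS, fraction)
        print(f"{fraction:.0%} of time at full load: {kwh:.1f} kWh/year")
```

This covers the card alone, not the host system, and it assumes the card is either fully loaded or idle, which real workloads rarely are.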

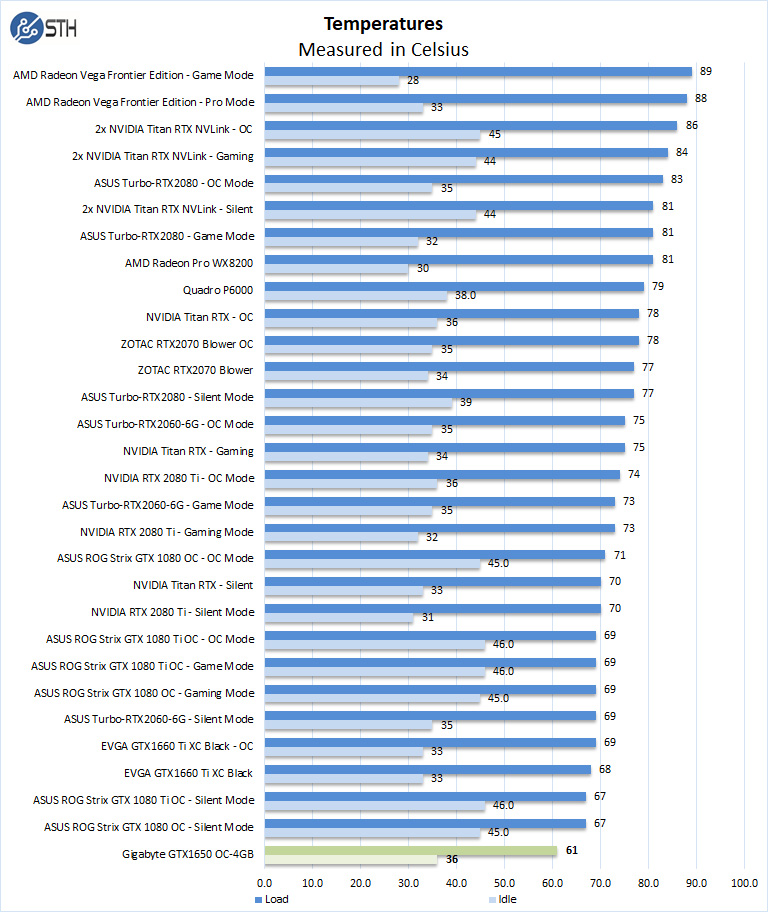

Cooling Performance

A key reason that we started this series was to answer the cooling question. Blower-style coolers have different capabilities than some of the large dual and triple fan gaming cards. The Gigabyte GeForce GTX 1650 OC uses two fans, which exhaust hot air out of a single port at the top of the graphics card and into the chassis. Here is our temperature data:

Temperatures for the Gigabyte GeForce GTX 1650 OC show its large heatsink and dual fans are easily handling the cooling of this card. We maxed out at 61C under full load and ran at 36C at idle on the desktop. Those are low figures, helped by cooling that is well designed for the thermal output of the GPU.

Conclusion

Looking over our benchmark results, we find that in most cases the GeForce GTX 1650 OC shows up at the bottom of our charts. This is expected for an entry-level GPU of this type. The Gigabyte GeForce GTX 1650 OC also uses less power and costs less than the other GPUs on our charts, sometimes by large margins, which lowers TCO. For many users, a card like the GeForce GTX 1650 OC will be the only or the first option for GPU compute, making it a great on-ramp device.

Aside from the upfront and ongoing cost savings, the Gigabyte GeForce GTX 1650 OC offers a few compelling compute use cases. Because it does not require external power, a wide range of edge servers can use this GPU in short-depth chassis for edge inferencing and GPU compute.

In terms of a GeForce GTX 1650 implementation, Gigabyte’s version that we reviewed here is reasonably priced and has excellent cooling, making it an easy recommendation.

comptue is used twice on page 6.

Performance might seem on par with other NVIDIA models, but if you compare it by price to, let's say, an RX 570 or RX 580, it's very bad value for money.

You included too much worthless information and not enough practical information. Where are the 1060 and 1070? Who gives a shit about how it stacks up against a Titan RTX x2? Get real.

I think this is one of NVidia’s most useless cards ever.

First the memory leaks, now the patent issue with Xperi Corp. These are hard times for mister “the more you buy, the more you save”. Linus Torvalds will laugh his head off.

OVERALL SCORE 9.1?

Value 9.3?

Are you out of your mind?

How about comparison with cheaper and more powerful rx570?

I think people are being a little unfair here, or perhaps unrealistic, bearing in mind the target audience of the site is home servers, which may not have a PSU that supports extra power.

Yes, the price is high for the performance on an absolute scale. But that does not recognize that you simply *cannot get* a 75W PCIe-only powered card that matches it from AMD. No RX 580 or RX 570 here – no, you will be stuck with the RX 560 (or RX 460) and the same 4GB RAM, getting anywhere from two thirds to a half the performance, or maybe even less: https://www.anandtech.com/show/14270/the-nvidia-geforce-gtx-1650-review-feat-zotac

The WX8200, that meets the 1650 in some benchmarks, and does twice as well in others, uses *three* times the power – a TDP of 230W.

Absolutely, wait for Navi to see if it can do better. I hope it does, because I want to use a card with good open-source drivers. But if you can’t – if you need 75W, no more, now – this may be the best there is; and it’s priced accordingly.

This was clearly written by someone who doesn’t know anything about computer hardware or gpus.

We’ve been using these in many of our servers exactly for what you describe. We’re using them with Xeon D Supermicro boards powered off of the motherboard in 1U systems. It looks like someone here has linked and brought the gaming kids here. NVIDIA’s still where it’s at if you want inferencing at the edge. Anyone that thinks AMD’s in the game today against CUDA isn’t in the space. Maybe that’ll change, but NVIDIA’s easy and it works, AMD there’s always troubleshooting.

@Paul I can tell you it’s useful to us. We buy a spectrum of GPUs for our customers. Having this info top to bottom is handy both for us and for our customers.

Good review. I’d like to see a blower style 1650 review since that’s the sweet spot in the market

Most of the comments here seem to have been made by those who own a giant rig and a powerful PSU: gamers. For those of us who prefer a system with a small physical footprint and low power consumption, while still being able to play at 1080p competently, this card is basically it for now until the fabled RX 3060 comes out. The comparison between this and the RX 570 simply isn’t fair due to the huge discrepancy in power consumption. I do love AMD and support them when I can to create competition to Intel and NVIDIA, but it’s a known fact that they compensate for a lack of finesse with raw and massive heat/power/energy consumption, which could cost end users hundreds if not thousands of dollars per year, and is horrible for the environment.

Hi, Thank you for providing such valuable information. In my opinion, the GeForce GTX 1650 is an excellent Graphics Card. It is in the best interests of everyone….