Microsoft announced Project Corsica this week as the culmination of its work on the Zipline compression standard. During the Microsoft OCP 2019 Keynote on Denali and Project Zipline, the company showed that Zipline compression is extremely efficient. It also said it was working with the industry not only to drive wider use of the software compression side, but also to open up the Verilog RTL implementation so the work could be offloaded to hardware.

Microsoft Project Zipline

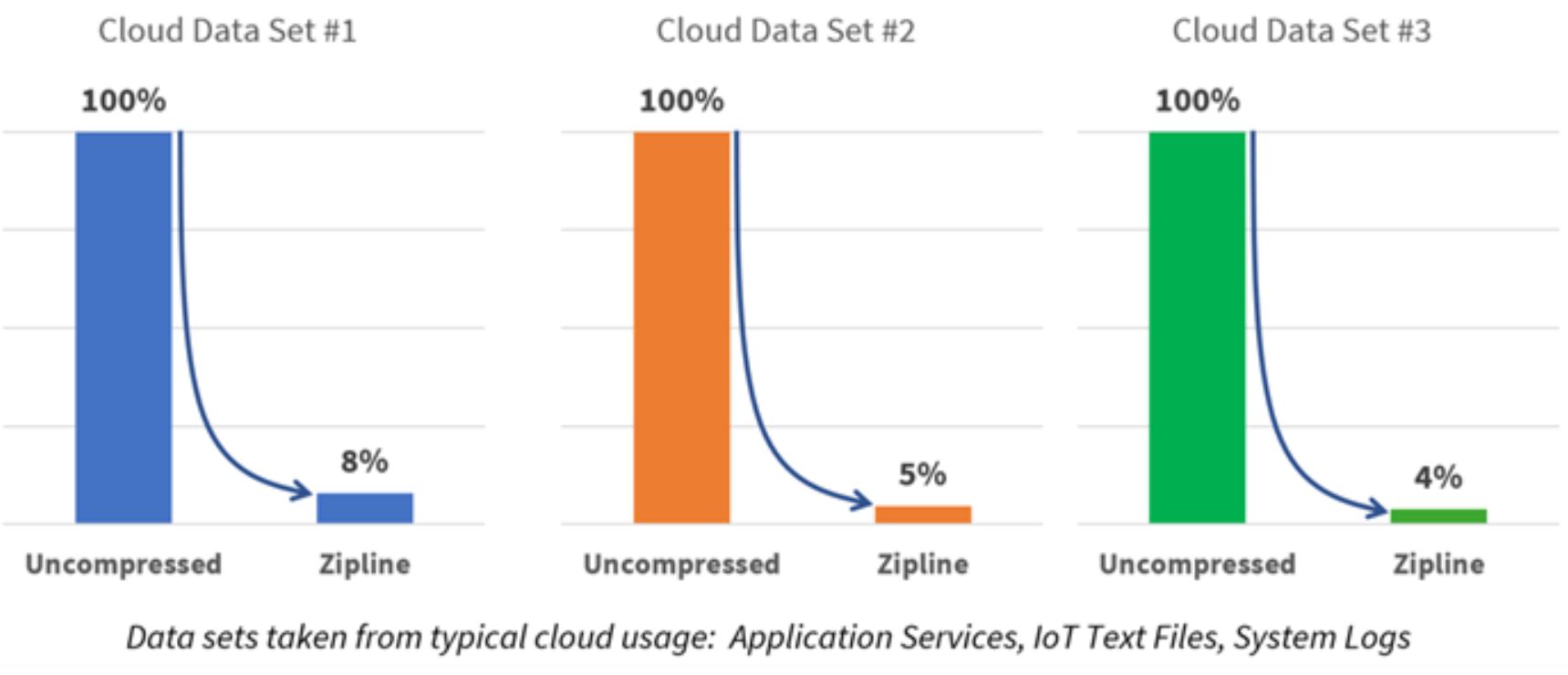

Microsoft’s rationale for Project Zipline is easy to understand. Zipline provides compression and encryption, both making files smaller and keeping data at rest encrypted.

For a service like Microsoft Azure, reducing stored data by even a few percent means millions in cost savings. Likewise, freeing CPUs from compression and encryption work can have an enormous impact on operating costs. Since OCP Summit 2019, the company has been pushing hard to gain traction with the hardware community. Now, we have a Zipline ASIC.

Project Corsica: A New Zipline ASIC

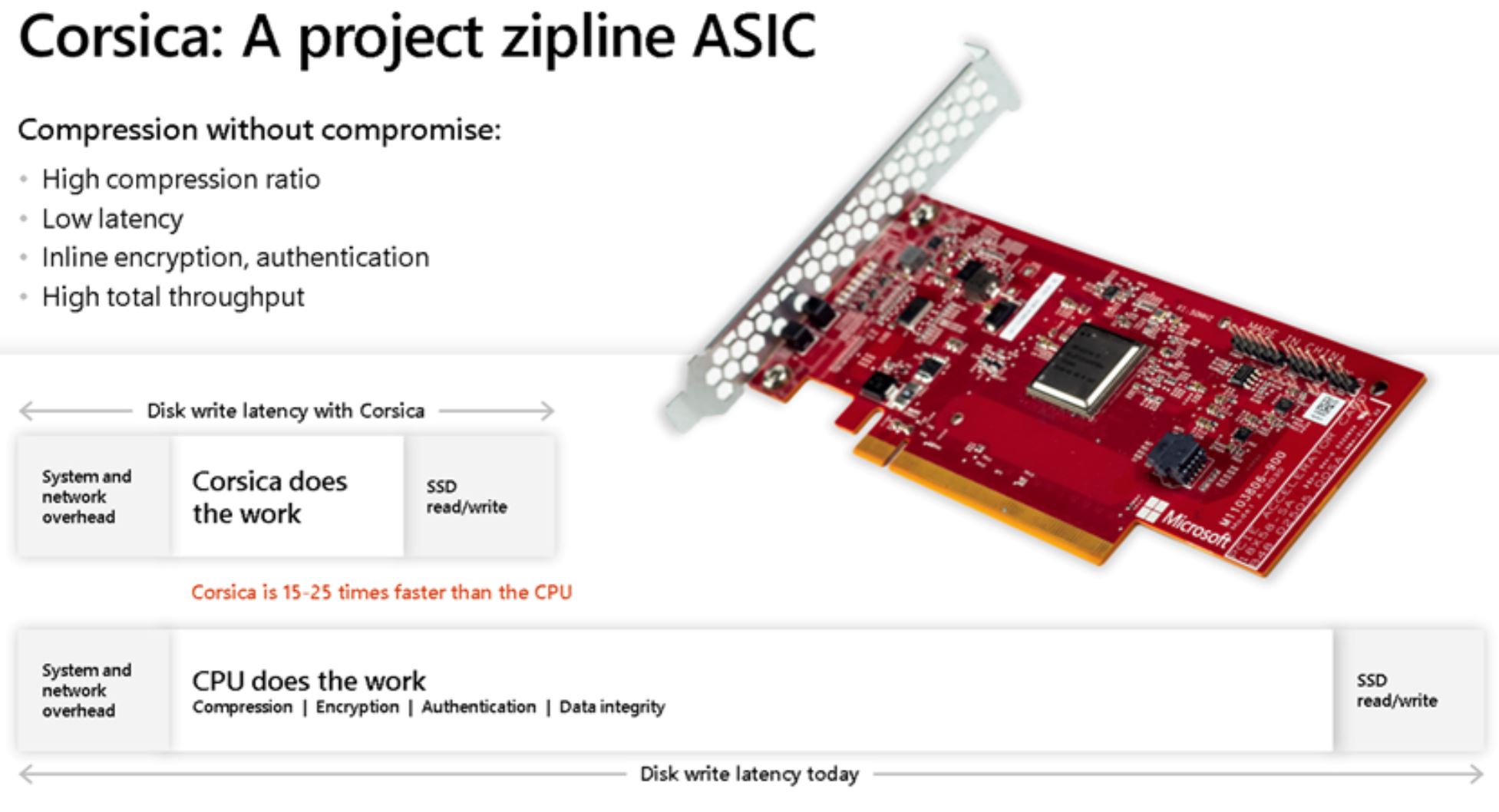

A joint project between Broadcom and Microsoft, Project Corsica takes the Zipline logic and turns it into an ASIC capable of handling 100Gbps of encryption/compression.

The ASIC sits on a relatively simple PCIe x16 card (x16 is required at Gen3 speeds to carry 100Gbps). The companies claim that the ASIC is 15-25 times faster than doing the same work on a CPU, which leads to substantially lower latency and shows why this approach can yield huge benefits.
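The x16 requirement follows from simple arithmetic. The sketch below assumes the standard PCIe Gen3 figures (8 GT/s per lane, 128b/130b encoding) and models only line-encoding overhead; real protocol overhead (TLP headers, DLLPs) lowers usable throughput further. The function name is illustrative, not from any Zipline or Corsica code.

```python
# Back-of-the-envelope PCIe Gen3 bandwidth check for a 100Gbps offload card.
# Assumption: only 128b/130b line-encoding overhead is modeled; TLP/DLLP
# protocol overhead would reduce usable throughput further.
GEN3_GT_PER_LANE = 8.0            # PCIe Gen3: 8 GT/s per lane, per direction
ENCODING_EFFICIENCY = 128 / 130   # Gen3 uses 128b/130b encoding
TARGET_GBPS = 100.0               # Project Corsica's stated throughput


def gen3_usable_gbps(lanes: int) -> float:
    """Per-direction data rate after 128b/130b encoding, in Gbps."""
    return lanes * GEN3_GT_PER_LANE * ENCODING_EFFICIENCY


for lanes in (4, 8, 16):
    bw = gen3_usable_gbps(lanes)
    verdict = "enough" if bw >= TARGET_GBPS else "too slow"
    print(f"Gen3 x{lanes}: {bw:.1f} Gbps -> {verdict} for {TARGET_GBPS:.0f} Gbps")
```

This prints roughly 31.5 Gbps for x4, 63.0 Gbps for x8, and 126.0 Gbps for x16, so only an x16 Gen3 link leaves headroom above 100Gbps once protocol overhead is taken into account.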

Final Words

Microsoft opened the work here, putting Zipline on GitHub. It is recruiting its hardware and CPU vendors, such as Intel, AMD, Ampere, Marvell, Mellanox, and Broadcom, to support the new standard. For others in the industry, this space is worth watching. If Microsoft can get its hardware partners to provide support, Zipline may become a de facto standard from the cloud to the IoT edge within a few years.

Wouldn’t this be nice as a chiplet on some AMD package?

Something seems to be missing from the explanation in this article. How exactly does this ASIC reduce the disk I/O latency shown in the last diagram? Does it use its own storage, as the U.2 connector on the card seems to hint? Eventually the data will need to go to reliable persistent storage, and it will also need to be decompressed and decrypted again.

For compression there are other options already available (mostly lzr1 or lz4 based), but those work poorly on encrypted content. Judging by the compression ratio in the diagram (95%), it appears to be targeting pure text-based data, so perhaps encrypted databases where the ASIC is provided with the key?
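The point about encrypted content is easy to demonstrate, and it is why any pipeline that does both has to compress before it encrypts. The sketch below uses zlib (DEFLATE) purely as a stand-in compressor, not Zipline's algorithm, and uses `os.urandom` bytes as a stand-in for ciphertext, on the assumption that a good cipher's output is indistinguishable from random data. The sample records are hypothetical.

```python
import os
import zlib


def compressed_fraction(data: bytes) -> float:
    """Size after DEFLATE (zlib level 9) as a fraction of the original size."""
    return len(zlib.compress(data, 9)) / len(data)


# Hypothetical, repetitive text-like records standing in for database content.
plaintext = b"id=1042 status=ok region=west note=hello world\n" * 2000

# Stand-in for ciphertext: output of a good cipher looks like random bytes,
# so os.urandom approximates data that was encrypted before compression.
pseudo_ciphertext = os.urandom(len(plaintext))

print(f"plaintext:        {compressed_fraction(plaintext):.3f}")
print(f"encrypted/random: {compressed_fraction(pseudo_ciphertext):.3f}")
```

The repetitive plaintext shrinks to a tiny fraction of its size, while the random bytes come out at about 1.0 (usually slightly above, because of the container overhead). Real text is far less repetitive than this toy input, so real ratios will be less dramatic.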

I can’t for the life of me see a viable way to implement this across an infrastructure without it being either more expensive or extremely niche, since it requires full software support as well.

If it were more like Intel QAT, but for compression, it would be a lot more interesting.

@david It seems pretty clear that they’re counting the entire operation, including the CPU time to compress, as “Disk Latency”. This accelerates the CPU portion, which reduces the time for the whole operation. That is just marketing. Actual disk latency doesn’t come into play until the last step in their diagram, the SSD write; the only thing this is really accelerating is the CPU part. Put another way, let’s say it takes 1 second today to compress something on the CPU, then 0.25 seconds to write it to disk, for a total of 1.25 seconds. With this technology, the CPU time drops to 0.1 seconds while the write still takes 0.25 seconds, so “latency”, in their usage, drops to 0.35 seconds instead of 1.25 seconds. Make sense?
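The same arithmetic as a tiny model, under the assumption that the application-visible latency is simply compression/encryption time plus the SSD write time. The 1 s and 0.25 s inputs are the hypothetical numbers from the comment, and the 15x and 25x rows just apply the article’s claimed speedup range to those same toy numbers; nothing here is a measurement, and the function name is illustrative.

```python
def app_visible_latency(cpu_compress_s: float, write_s: float,
                        offload_speedup: float = 1.0) -> float:
    """Latency the application sees for one write: compression/encryption
    time (divided by the offload speedup) plus the SSD write time, which the
    offload does not change."""
    return cpu_compress_s / offload_speedup + write_s


# Hypothetical inputs from the comment: 1 s of CPU work, 0.25 s disk write.
CPU_WORK_S = 1.0
WRITE_S = 0.25

# 1x = CPU only; 10x = the comment's 0.1 s example; 15x and 25x = the
# article's claimed speedup range applied to these same toy numbers.
for speedup in (1, 10, 15, 25):
    total = app_visible_latency(CPU_WORK_S, WRITE_S, speedup)
    print(f"{speedup:>2}x offload: {total:.2f} s")
```

This prints 1.25 s, 0.35 s, 0.32 s, and 0.29 s. However large the speedup, the total never drops below the 0.25 s write, which is exactly why the gain shows up as "latency" only when the CPU work is counted as part of it.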

If you’re compressing and encrypting data at rest, which is what this appears to be designed for, then as far as the application is concerned it is storage latency: the application has asked for a write to disk or a read from it. The application doesn’t care that only part of that latency is spent getting bits from the disk and the rest is software time spent decrypting and decompressing; it just wants the data it asked for, in plaintext.

Is it available for us regular homelabbers to buy?

Is this project now completely dead?