Gigabyte G242-Z10 Topology

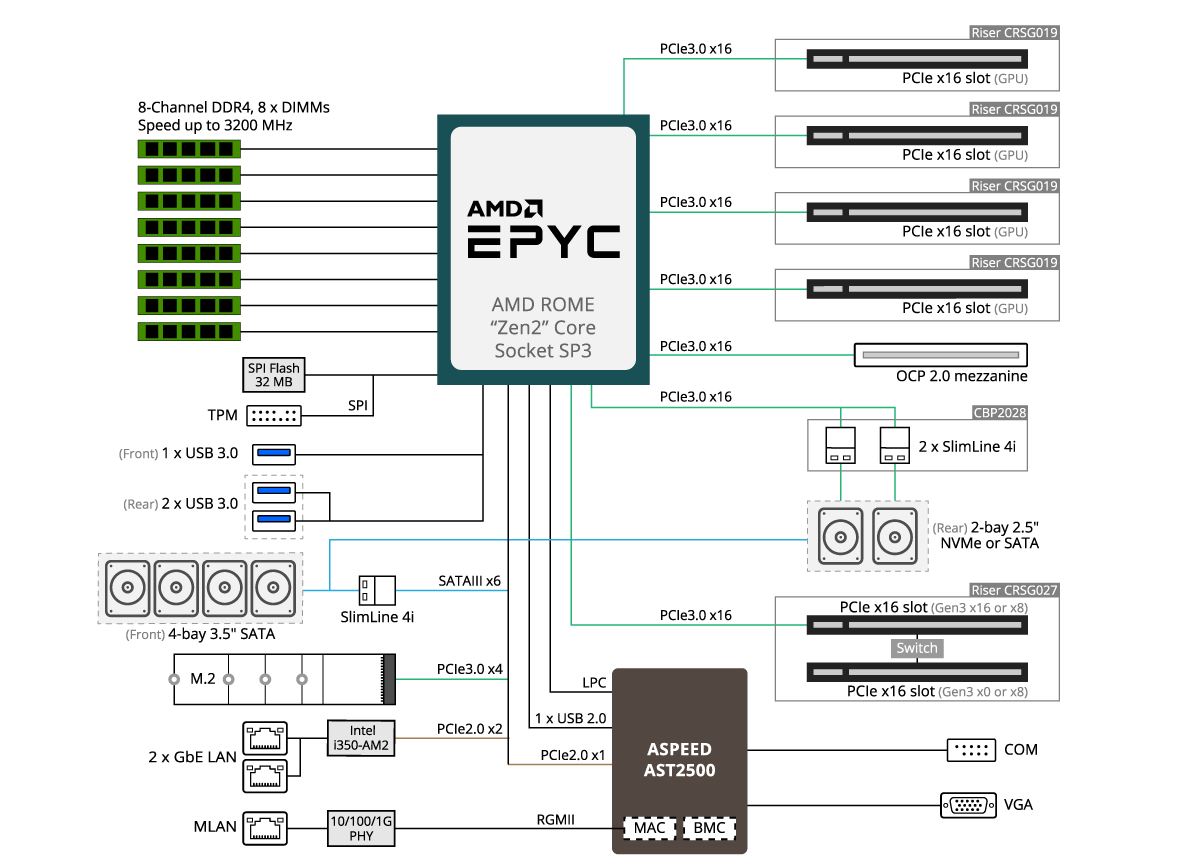

We wanted to start with the Gigabyte G242-Z10 block diagram. One can see the AMD EPYC 7002 series “Rome” CPU at the heart of the system. As we mentioned in the hardware overview, using AMD EPYC here allows for an enormous amount of I/O without resorting to using two sockets, PCIe switches, or even a PCH as we would see on a similar Intel Xeon platform. Instead, Gigabyte is delivering this system with I/O from a single CPU.

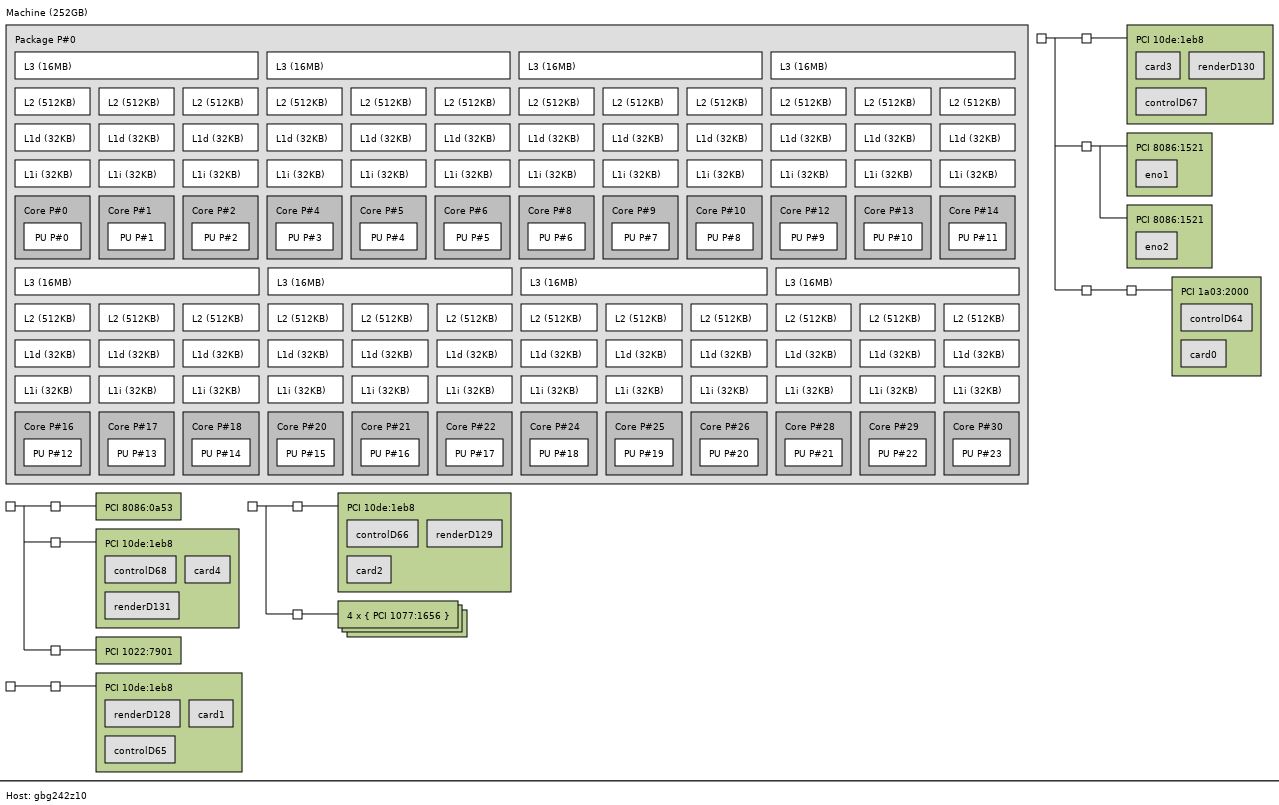

In terms of topology, here is the system with four NVIDIA Tesla GPUs attached and an AMD EPYC 7402P 24-core CPU. We think the EPYC 7402P is a great pairing with this server. As you can see, unlike with the first-generation EPYC, all of the PCIe devices, including the GPUs and NICs, are attached to the same NUMA node.
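For readers who want to confirm the single-NUMA-node layout on their own system, here is a minimal sketch that reads the `numa_node` attribute Linux exposes for each PCI device under sysfs. It assumes a Linux host; the function names are ours for illustration, and the result will also depend on BIOS settings such as the NUMA-per-socket mode.

```python
from pathlib import Path


def numa_nodes_by_device(root: Path = Path("/sys/bus/pci/devices")) -> dict[str, int]:
    """Map each PCI address (e.g. 0000:41:00.0) to the NUMA node sysfs reports."""
    nodes: dict[str, int] = {}
    if not root.is_dir():  # not Linux, or sysfs is not mounted
        return nodes
    for dev in sorted(root.iterdir()):
        node_file = dev / "numa_node"
        if node_file.is_file():
            # The kernel writes -1 when it has no NUMA information for a device.
            nodes[dev.name] = int(node_file.read_text().strip())
    return nodes


def on_single_node(nodes: dict[str, int]) -> bool:
    """True when every device with a known node sits on the same NUMA node."""
    known = {n for n in nodes.values() if n >= 0}
    return len(known) <= 1


if __name__ == "__main__":
    found = numa_nodes_by_device()
    for addr, node in found.items():
        print(f"{addr}: NUMA node {node}")
    print("All devices on one NUMA node:", on_single_node(found))
```

On a first-generation EPYC system, one would expect this kind of check to show devices spread across several nodes.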

The four GPUs, NVMe SSD, and the 4x 25GbE PCIe x16 card in the system topology above utilize a total of 84 PCIe lanes, before counting any of the other I/O and devices such as the 1GbE NICs. This amount of connectivity with Intel Xeon requires, at minimum, two CPUs or PCIe switches. With the AMD EPYC 7002 series, there is no compromise.
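The 84-lane figure can be checked with some quick arithmetic. In the sketch below, the x16 width for the NIC comes from the text, while x16 per GPU and x4 for the NVMe SSD are our assumptions (they are typical widths and they reproduce the stated total). The 128-lane figure is the single-socket EPYC 7002 platform maximum, some of which goes to other onboard devices.

```python
# Link width per device. The NIC width is from the text; the GPU and NVMe
# widths are assumptions chosen because they are typical and sum to 84.
LANES = {
    "GPU 1": 16,
    "GPU 2": 16,
    "GPU 3": 16,
    "GPU 4": 16,
    "NVMe SSD": 4,
    "4x 25GbE NIC": 16,
}

EPYC_7002_LANES = 128  # PCIe lanes from a single-socket EPYC 7002 CPU


def total_lanes(devices: dict[str, int]) -> int:
    """Sum the PCIe lanes used by the listed devices."""
    return sum(devices.values())


used = total_lanes(LANES)
print(f"Lanes used: {used}")                         # Lanes used: 84
print(f"Lanes left: {EPYC_7002_LANES - used}")       # Lanes left: 44
```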

Gigabyte G242-Z10 Management Overview

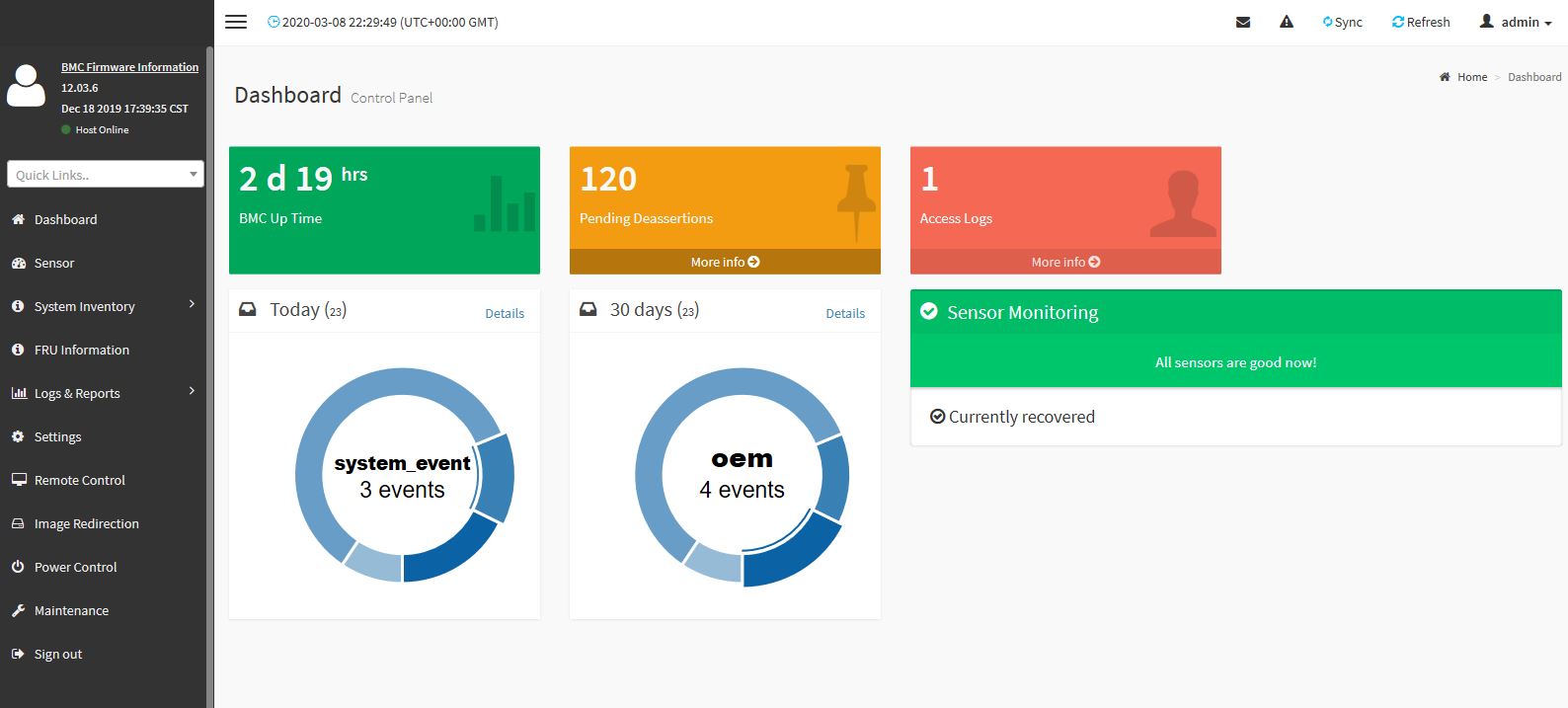

As one can see, the Gigabyte G242-Z10 utilizes a newer MegaRAC SP-X interface. This interface is a more modern HTML5 UI that performs more like today’s web pages and less like pages from a decade ago. We like this change. Here is the dashboard. One item we will quickly note is that the CMC IP address is shown in the upper left corner, which is helpful for navigating through the chassis.

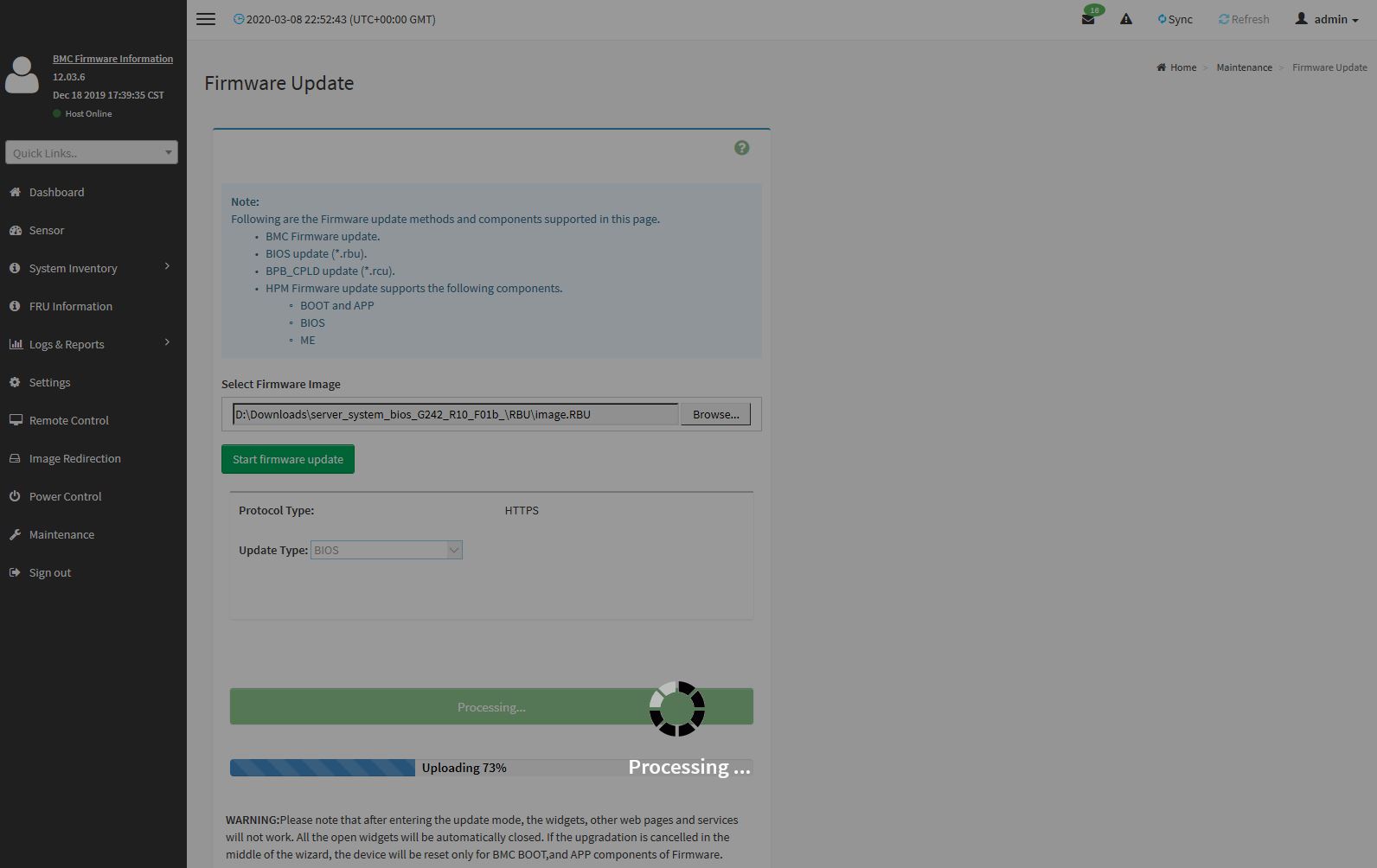

You will find standard BMC IPMI management features here, such as the ability to monitor sensors. One can also perform functions such as updating BIOS and IPMI firmware directly from the web interface. Companies like Supermicro charge extra for this functionality, but it is included with Gigabyte’s solution.

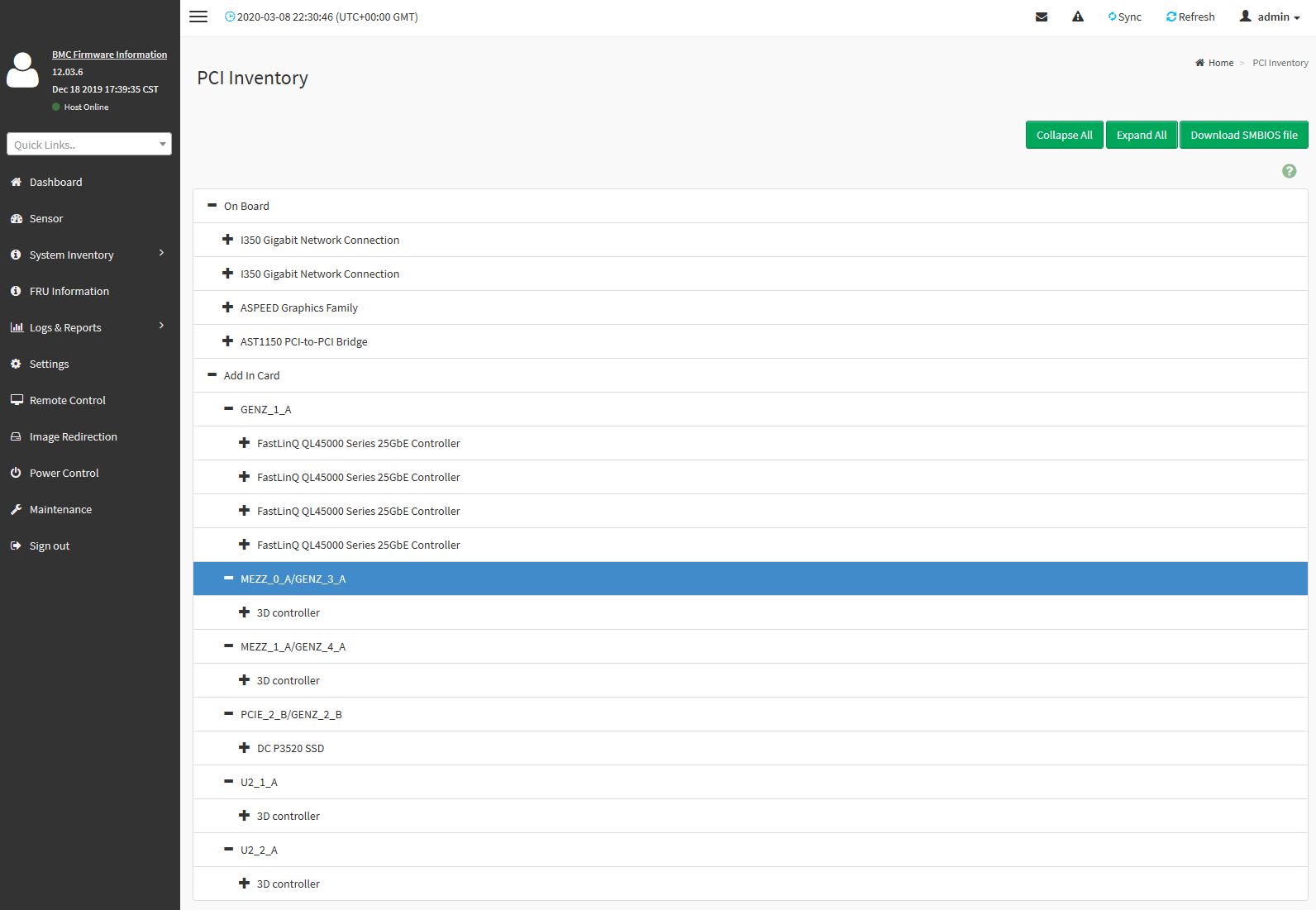

Other tasks such as the node inventory are available. One can see this particular node with four NVIDIA GPUs, an Intel DC P3520 2TB NVMe SSD, and a four-port QLogic 25GbE NIC. One item we would have liked to see is the PCIe devices renamed to make more sense in this server. For example, the U2 ports are providing PCIe connectivity to the NVIDIA GPUs here.

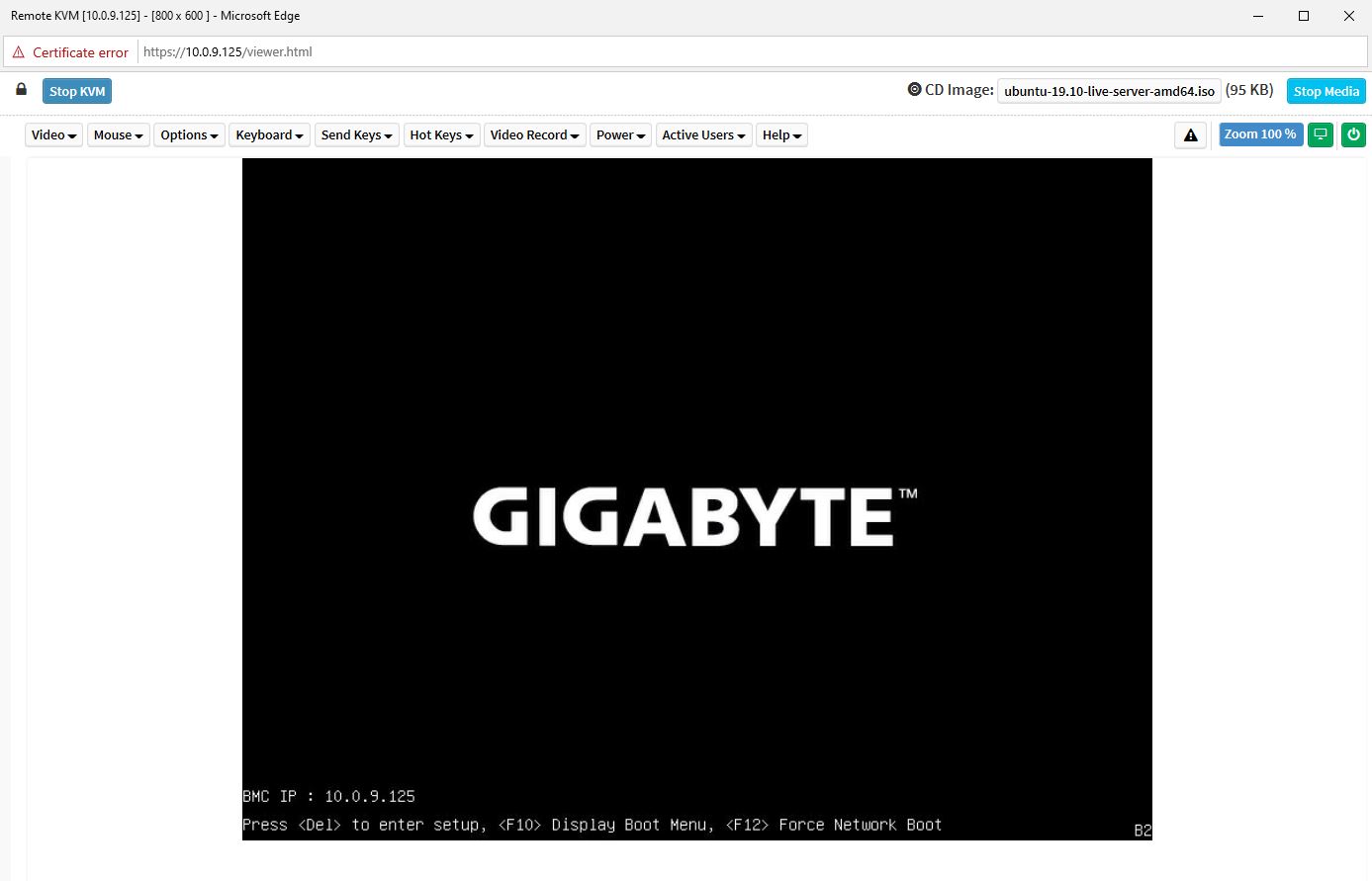

One of the other features is the new HTML5 iKVM for remote management. We think this is a great solution. Some other vendors have implemented iKVM HTML5 clients but did not implement virtual media support in them at the outset. Gigabyte has this functionality and power control support all from a single browser console.

We want to emphasize that this is a key differentiation point for Gigabyte. Many large system vendors such as Dell EMC, HPE, and Lenovo charge for iKVM functionality. This feature is an essential tool for remote system administration these days. Gigabyte’s inclusion of the functionality as a standard feature is great for customers who have one less license to worry about.

The web GUI is perhaps the easiest to show online. However, the system also has a Redfish API for automation. This is in line with what other server vendors are doing, as Redfish has become the industry-standard API for server management.
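As a rough idea of what Redfish automation can look like, here is a minimal sketch that lists the members of the standard `/redfish/v1/Systems` collection using only the Python standard library. The environment variable names, credentials, and helper functions are hypothetical, and we have not verified which authentication methods or resources this particular BMC firmware exposes, so treat it as a starting point rather than a tested recipe.

```python
import base64
import json
import os
import ssl
import urllib.request


def build_request(host: str, path: str, user: str, password: str) -> urllib.request.Request:
    """Build an HTTPS GET request with HTTP Basic authentication."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(f"https://{host}{path}")
    req.add_header("Authorization", f"Basic {token}")
    req.add_header("Accept", "application/json")
    return req


def member_paths(collection: dict) -> list[str]:
    """Return the @odata.id link of every member in a Redfish collection."""
    return [m["@odata.id"] for m in collection.get("Members", [])]


def redfish_get(host: str, path: str, user: str, password: str, verify_tls: bool = True) -> dict:
    """Fetch a Redfish resource and decode its JSON body."""
    ctx = ssl.create_default_context()
    if not verify_tls:  # BMCs often ship self-signed certificates
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    req = build_request(host, path, user, password)
    with urllib.request.urlopen(req, context=ctx, timeout=10) as resp:
        return json.load(resp)


if __name__ == "__main__" and os.environ.get("BMC_HOST"):
    # BMC_HOST, BMC_USER, and BMC_PASS are hypothetical variable names.
    systems = redfish_get(
        os.environ["BMC_HOST"],
        "/redfish/v1/Systems",
        os.environ.get("BMC_USER", ""),
        os.environ.get("BMC_PASS", ""),
        verify_tls=False,
    )
    for path in member_paths(systems):
        print(path)
```

Each path printed can then be fetched with the same `redfish_get` helper to read details for that system.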

Next, we are going to look at the performance before moving to power consumption and our final words.

Thanks for being at least a little critical in your recent reviews like this one. I feel like too many sites say everything’s perfect. Here you’re pushing and finding covered labels and single width GPU cooling. Keep up the work STH

As it’s using less than the total power of a single PSU, does this mean you can actually have true redundancy set for the PSUs?

Also, I could have sworn that I saw pictures of this server model (maybe from a different manufacturer) that had non-blower type GTX/RTX desktop GPUs installed (maybe because of the large space above the GPUs). I don’t suppose you tried any of these (as they run higher clock speeds)?

https://www.asrockrack.com/photo/2U4G-EPYC-2T-1(L).jpg

https://www.asrockrack.com/general/productdetail.asp?Model=2U4G-EPYC-2T#Specifications

I am looking forward to seeing similar servers with PCIe 4.0!

Been waiting for a server like this ever since Rome was released. Gonna see about getting one for our office!

For my application I need display output from one of the GPUs. Can’t even the rear GPU be set up so that I can plug in a display?

To Vlad: check the G482-Z51 (rev. 100)

Has anyone tried using Quadro or GeForce GPUs with this server? They aren’t on the QVL, but they are explicitly described in the review and can be specc’d out on some system builders’ websites.