Every so often, a server arrives in the lab that is exciting because it is different. The Gigabyte E251-U70 is one of those servers. This 2U short-depth system is designed as a single-socket edge AI and networking platform, and it feels quite different from what we are accustomed to seeing. If you feel like you have seen this platform somewhere else, you may have: this is the server behind the NVIDIA Aerial 5G vRAN platform.

Gigabyte E251-U70 Hardware Overview

Since our reviews have grown in size, we are going to split our hardware overview into two parts. First, we are going to look at the external features. We are then going to take a look at what is inside.

Gigabyte E251-U70 External Hardware Overview

The first item one will notice is that the server itself is very small. Indeed, although we normally do not feature unboxing photos, this is what the server looks like in its shipping box.

That is perhaps the highest foam-to-server ratio out there in modern shipping boxes. The reason is that this is a short-depth, front I/O platform. It measures 439mm x 86mm x 449mm or 17.3″ x 3.4″ x 17.7″.
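As a quick sanity check on those figures, the short sketch below converts the metric dimensions to inches and works out how many rack units the 86mm height occupies. It assumes only the standard 25.4mm per inch and the EIA-310 rack unit of 1.75″ (44.45mm); the function names are our own, for illustration.

```python
import math

MM_PER_INCH = 25.4
MM_PER_RACK_UNIT = 44.45  # EIA-310: 1U = 1.75 in = 44.45 mm


def mm_to_in(mm: float) -> float:
    """Convert millimetres to inches."""
    return mm / MM_PER_INCH


def rack_units_needed(height_mm: float) -> int:
    """Smallest whole number of rack units that fits the given chassis height."""
    return math.ceil(height_mm / MM_PER_RACK_UNIT)


# Gigabyte E251-U70 dimensions as listed above (W x H x D, in mm)
dims_mm = {"width": 439, "height": 86, "depth": 449}
dims_in = {name: round(mm_to_in(v), 1) for name, v in dims_mm.items()}

print(dims_in)  # {'width': 17.3, 'height': 3.4, 'depth': 17.7}
print(rack_units_needed(dims_mm["height"]))  # 2
```

The 86mm height leaves a little clearance under the 88.9mm of a 2U slot, which is consistent with the 2U form factor.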

On the left side of the system, we get the normal status LEDs and power/reset buttons as well as a USB 3.0 Type-C port. The main feature on this side of the chassis is the six 2.5″ bays. Our system was wired for these to be SATA bays. They use Gigabyte’s tool-less drive trays, which we really like.

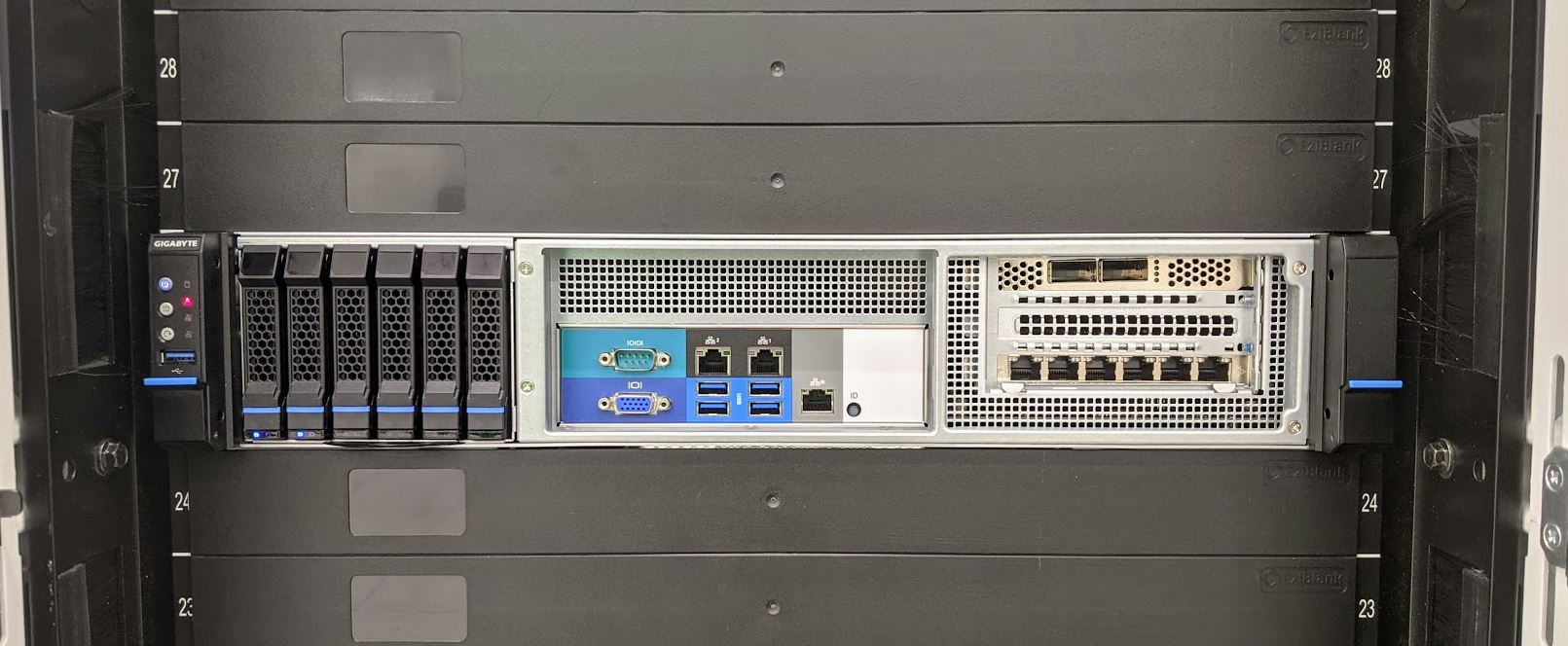

In the middle, we get a serial COM port, a VGA port, and four USB 3.0 Gen1 ports for standard I/O. We also get two 1GbE NICs powered by Intel i210 controllers. That would normally be a fairly low-end option, but this platform is specifically designed to have higher-end NICs installed for networking. These onboard NICs end up as the software/system management NICs and/or are used for provisioning. While those serve the server’s software and OS, there is also an RJ45 management port since this machine has IPMI as well.

On the right side of the chassis, we get our horizontal expansion I/O, which allows full-height expansion cards to be used. We will discuss the configuration during our internal system overview.

Here is the front I/O of the system with a high-speed NIC as well as a low-speed NIC installed. The 6x 1GbE NIC is the one we recently featured in our Silicom PE2G6I35-R review.

The system has four large fans in the rear and redundant power supplies on the side. This is a common configuration in the vRAN space. The usual alternative keeps only fans in the rear and moves the power supplies to the front, where they replace some of the 2.5″ storage bays, so that all cabling comes off the front of the chassis.

Removing the hot-swap fans takes undoing a single thumbscrew, after which a latch either locks the fan in place or releases it. This is a sturdier carrier than we were expecting.

The power supplies are redundant 800W 80Plus Platinum units from FSP.

This is the system installed in a rack in what is now the third data center we are using (we received permission to take this photo). The system uses a port-to-PSU airflow design. As a result, you would want your racks set up for this, either by scaling out these systems and deploying matching switches, or by accommodating the mismatch. If you install these in racks with standard servers and PSU-to-port airflow switches mounted with their ports in the rear of the rack, you will need to route cables from the front of the unit to the rear of the rack. The new data center racks have channels, visible on either side, specifically designed for this cabling scenario while still allowing for cold aisle containment.
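To make the airflow reasoning concrete, here is a minimal, hypothetical model of a rack check. It assumes each device simply breathes in on one face and out on the opposite one (port-to-PSU units take air in on their port side, PSU-to-port units on the side opposite their ports), and that the cold aisle is at the rack front. The `Device` class and `check_rack` function are illustrative names, not part of any real tool.

```python
from dataclasses import dataclass
from typing import List, Literal

Face = Literal["front", "rear"]
Airflow = Literal["port-to-psu", "psu-to-port"]


@dataclass
class Device:
    name: str
    airflow: Airflow
    ports_face: Face  # rack face that the device's port side points toward

    def intake_face(self) -> Face:
        # Simplification: intake and exhaust are on opposite faces.
        # Port-to-PSU units draw air in on their port side; PSU-to-port units
        # draw it in on the side opposite their ports.
        if self.airflow == "port-to-psu":
            return self.ports_face
        return "rear" if self.ports_face == "front" else "front"


def check_rack(devices: List[Device], cold_face: Face = "front") -> List[str]:
    """Return a list of airflow and cabling issues for a hypothetical rack."""
    issues = []
    for d in devices:
        if d.intake_face() != cold_face:
            issues.append(f"{d.name}: draws air from the hot aisle")
    # Ports on both faces mean cables have to be routed front to rear.
    if len({d.ports_face for d in devices}) > 1:
        issues.append("ports on both rack faces: cables must cross front to rear")
    return issues


# Front-port E251-U70 next to a rear-port, PSU-to-port switch:
# airflow works, but cabling has to cross the rack.
mixed = [
    Device("E251-U70", "port-to-psu", "front"),
    Device("ToR switch", "psu-to-port", "rear"),
]
print(check_rack(mixed))  # ['ports on both rack faces: cables must cross front to rear']

# Matching port-to-PSU switch mounted ports-front: no issues.
matched = [
    Device("E251-U70", "port-to-psu", "front"),
    Device("ToR switch", "port-to-psu", "front"),
]
print(check_rack(matched))  # []
```

Both layouts keep every intake on the cold aisle; the difference is only whether cables must travel through side channels like the ones in these racks.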

Another quick note on the system: Gigabyte has nice service labels. There is a label on the top and another inside the cover. The external one has a yellow “CAUTION” section that says this is a “TWO PERSON LIFT REQUIRED” server. That must come from a standard caution template, because this server is so small that it uses little sheet metal, which makes it very light. Having only a single CPU also contributes to the low weight. The data center tech and I both had a bit of a chuckle since this is lighter than many modern switches. Officially, it requires two people to lift. Unofficially, we did it with one person, since getting a server lift would have taken longer.

That level of external serviceability and port configuration is important in the vRAN market. While we had two people and access to various tooling, if these are installed in the field next to a 5G tower, the technician may not have access to a server lift, and even getting behind the rack may be difficult. Having such a short-depth server also means that one does not need as wide an aisle to slide the server out of the rack on its tool-less rails. These may seem like small details, but they can make a big difference in the places these systems are expected to work.

Next, we are going to look inside the system to see how this package is built.

The combination of a powered riser card with a PCIe switch, rather than a passive riser using some higher-density proprietary edge connector, and a bunch of fully populated but mechanically unusable PCIe slots interests me. I definitely wouldn’t expect to see an active PCIe switch dragged into a cost-optimized design that isn’t starved for CPU-provided lanes; and when pennies really need to be pinched, even having the connectors populated isn’t a given.

Do you know if this system just shares a motherboard with one or more other Gigabyte units that require all the slots and it was cheaper to avoid SKU proliferation than it was to cut the redundant headers; or if there is a variant of the riser card module that just provides a bunch of half-height slots? The one slot closest to the RAM looks like it’s blocked by non-removable chassis metal; but the rest of them certainly look like they could be configured as half-height slots with an appropriate rear plate slotted in.
