The Arm Hitch at STH 2025

Folks know that I am very open to running different architectures at STH. I believe that if we review something and I think it is good, then we should be willing to deploy it. We have had Cavium CPUs, Ampere CPUs, and more serving pages in the past. We still have a few EPYC 7001 “Naples” nodes that we deployed at least a year or two before the EPYC 7002 “Rome” generation was announced and AMD adoption started taking off. That leads us to our 2025 infrastructure refresh.

For those who do not know, I have become anti-hard drive and am working on fully liberating our infrastructure from hard drives by the end of 2025. There are still a few left, but they are the lowest-reliability hardware we have deployed, so I want them gone. Against that backdrop, picture this: you find a pair of Ampere Arm processors and have trays full of 64GB DDR4-3200 modules, all essentially “free” to use.

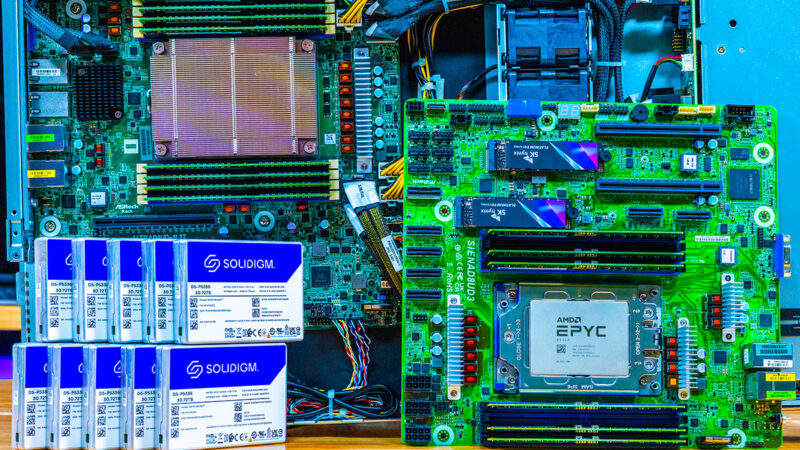

Given that, we purchased Ampere Altra servers from ASRock Rack with the idea of filling them with 30.72TB or 15.36TB SSDs and deploying them in our colocation facilities to replace the EPYC 7001 hard drive nodes. To many enterprises, 307.2TB or 153.6TB is not a huge amount of storage at one location, but a single video now occupies 200-250GB at the low end and 1TB at the high end. That makes sharing footage on cloud services like Google Drive far too expensive, especially if you want to retain footage for when a platform gets upgraded in a year or two and we review the new platform. We are not even using our cameras to their fullest, since capturing 8K on some of the platforms burns through roughly 2TB per hour.
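The capacity figures above imply ten drive bays per node (307.2TB / 30.72TB = 10). As a rough back-of-the-envelope sketch, assuming ten drives per node, decimal units (1TB = 1000GB), and no RAID, parity, or filesystem overhead (all assumptions, not stated in the article), the per-video sizes and the ~2TB/hour 8K rate translate into approximate counts like this. Everything is kept in integer gigabytes to avoid floating-point rounding surprises.

```python
# Back-of-the-envelope capacity math for the planned all-SSD storage nodes.
# Assumptions (not from the article): ten drives per node, decimal units,
# and no RAID/parity/filesystem overhead, so these are raw upper bounds.

DRIVES_PER_NODE = 10  # inferred from 307.2TB / 30.72TB


def raw_capacity_gb(drive_gb: int, drives: int = DRIVES_PER_NODE) -> int:
    """Raw node capacity in GB before any redundancy overhead."""
    return drive_gb * drives


def videos_that_fit(capacity_gb: int, video_gb: int) -> int:
    """Number of whole videos of a given size that fit in the raw capacity."""
    return capacity_gb // video_gb


def hours_of_footage(capacity_gb: int, gb_per_hour: int = 2000) -> float:
    """Hours of recording at a given rate (default ~2TB/hour, the 8K figure)."""
    return capacity_gb / gb_per_hour


if __name__ == "__main__":
    for drive_gb in (30720, 15360):  # 30.72TB and 15.36TB drives
        cap = raw_capacity_gb(drive_gb)
        print(f"{drive_gb / 1000}TB drives -> {cap / 1000}TB raw")
        print(f"  250GB videos: {videos_that_fit(cap, 250)}")
        print(f"  1TB videos:   {videos_that_fit(cap, 1000)}")
        print(f"  8K hours at ~2TB/h: {hours_of_footage(cap):.1f}")
```

Under these assumptions, even the larger configuration holds only about 300 of the 1TB videos, or roughly 150 hours of 8K capture, before any redundancy is carved out.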

So, of course, the server barebones were inexpensive, and we had free Arm CPUs that were plenty for the build-out. We built the first node with 512GB of DDR4 and then started rethinking our path.

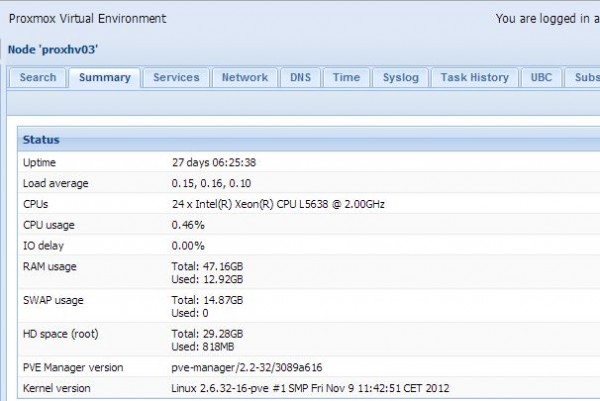

First, we use Proxmox VE and have been doing so at STH since well before it became popular (for over a decade). Ten years ago we could not afford VMware and Microsoft licensing, and we probably cannot afford VMware today, especially on smaller clusters. When I went to pull Proxmox VE for Arm, I could not find an official build. Instead, there is a community-patched version on GitHub. I do not feel comfortable using that in our data centers, but I wanted to use Proxmox VE. That was a big problem.

I then thought about running Debian or Ubuntu Server as our base since I feel very comfortable with those options. That seemed like a good idea until I thought about it again. While it would be cool to have many Arm cores available, if something went really wrong one day, there would be no way to simply restore a virtual machine from a backup onto the server and have it online in 60-90 seconds. We have used Arm servers for data collection and web hosting at STH in the past, but those ran manually built images that took several minutes to get running. When things go bad, it is much easier to just hit one command and be up and running in a few seconds with a progress bar.

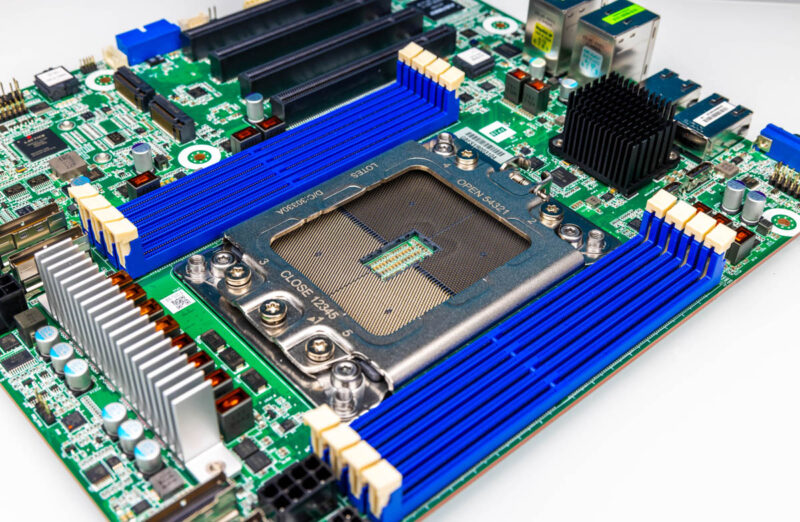

All of that meant we ended up going in a different direction. Instead of using the “free” Arm option, we pulled the ASRock Rack motherboards and swapped to the ASRock Rack AMD EPYC 8004 “Siena” platform. We prototyped the swap using chips AMD sent, but then replaced them with ones we got great deals on. We still had to buy DDR5 and the motherboards.

The net result was that we could just install Proxmox VE from an ISO image and create our storage arrays. Those servers are on their way to the data centers, and once they are installed, we can take any of the images running on our hosting servers and run them there. The Zen 4c cores are not the fastest by any means, but the chips are low power, compatible with our existing images, and still quite speedy. It is neat to replace previous-generation dual-socket servers with current-generation single-socket servers. That saves us power, and since Proxmox VE support is licensed per socket per year, it halves the subscriptions we need per node as well.
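Because Proxmox VE support subscriptions are counted per CPU socket per year, consolidating dual-socket nodes onto single-socket nodes directly reduces the subscription count. Here is a minimal sketch of that arithmetic, assuming a flat per-socket annual price; the `PRICE` value, the `Node` type, and the four-node fleet are hypothetical placeholders, not real Proxmox pricing or STH's actual node count.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A server in the cluster; only the socket count matters for licensing."""
    name: str
    sockets: int


def subscriptions_needed(nodes: list[Node]) -> int:
    """Per-socket licensing: one subscription per populated CPU socket."""
    return sum(node.sockets for node in nodes)


def annual_support_cost(nodes: list[Node], price_per_socket_year: float) -> float:
    """Yearly support cost under a flat, hypothetical per-socket price."""
    return subscriptions_needed(nodes) * price_per_socket_year


if __name__ == "__main__":
    # Hypothetical fleet: four old dual-socket nodes vs. four single-socket nodes.
    old_fleet = [Node(f"dual-{i}", 2) for i in range(4)]
    new_fleet = [Node(f"siena-{i}", 1) for i in range(4)]
    PRICE = 100.0  # placeholder price per socket per year, not a real quote
    print(subscriptions_needed(old_fleet), subscriptions_needed(new_fleet))
    print(annual_support_cost(old_fleet, PRICE), annual_support_cost(new_fleet, PRICE))
```

Whatever the actual per-socket price, the ratio is what matters here: with the same number of nodes, moving from two sockets to one cuts the subscription count in half.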

To be clear, part of this is also that I think the EPYC 8004 “Siena” is an underappreciated platform, so I have wanted to deploy it. Running a big EPYC 9004/9005 CPU with 12-channel memory as essentially a distributed backup/cold storage server felt like overkill. With NVMe, we needed more PCIe lanes than the EPYC 4004/4005 could provide. Still, I wanted a lower-power socket, and we did not need 100+ cores in the socket, so Siena ended up being a great fit. We are now deploying a few of these as our budget allows.

Final Words

As we were going through this refresh, I realized that what we had just experienced was a microcosm of what is going on in enterprise IT. I am completely comfortable running Arm servers at this point from an administration standpoint. Still, we had limited options for buying an Arm server to deploy. Then we realized that we were going to run into challenges with our virtualization platform, since it is not available for Arm. We were also going to run into compatibility issues that were not hard to fix, but migration was not as easy as turning off a VM and starting it again on an Arm server. That is largely due to legacy architecture, but it is still a factor.

In the end, even though running Arm would have been heavily discounted since STH has a sizable parts bin, it felt like the wrong decision because it would have split what we deployed into two pools. All of those reasons mirror why, in a hybrid multi-cloud world, cloud providers are pushing users to their Arm offerings, but enterprise adoption (outside of AI) is still very low. That thought became this piece. Hopefully our STH readers found it useful.

While software is key, it’s an interesting observation that current ARM hardware is not attractive enough to motivate further software development.

ARM had its chance when the Raspberry Pi craze put a small ARM development system on every software engineer’s shelf while Fujitsu and Nvidia started building systems with competitive performance. Unfortunately, Nvidia’s bid to take over ARM with a well-capitalised development team was rejected on political grounds, AmpereOne underperformed, the Raspberry Pi craze faded and ARM sued Qualcomm for breach of license. Given how ARM intellectual property appears impossible to sell, the only recourse was for SoftBank to purchase Ampere. The above chaos suggests an uncertain future and missed opportunity for ARM.

On the other hand, IBM Power has no entry-level hardware, no new customers and, as a result, few independent developers. It’s possible OpenPOWER will lead to cost-competitive hardware ahead of RISC-V. It’s also possible neither will succeed and Loongson with LoongArch will emerge as the next dominant architecture.

Yesterday the enterprise solution was System z, today it is x86 and tomorrow has not yet arrived.

If ARM were in the NVDA stable, as obnoxious as that sounds, it would have a brighter future, forcing software development in the pursuit of AI. ARM has for decades chased efficiency rather than raw performance. And currently it’s close on performance, close enough that it would take off if it weren’t for per-core pricing and migration headaches between architectures. The answer from ARM’s stable should be extending the architecture for performance gains. That means bigger silicon, losing the efficiency that got it into mobile, and splitting the designs between mobile and high-power parts.

LoongArch/Loongson would have an even larger uphill battle for enterprise adoption, even more so in the ‘west’, having all the caveats of ARM and RISC-V plus the fallout of political/tariff issues as well. Apple’s ARM architecture will continue to be the prime competitor from an architectural standpoint. Judging by compiler builds per architecture, it is probably Apple’s M chips versus x86; nothing else comes close today.

I really like RISC-V, but its open nature will mean fragmentation of designs. I don’t think it would be adopted server-side any better than ARM, and probably worse.

Also, RPis are everywhere still, and I don’t think they are moving to RISC-V anytime soon.

I think most arguments are not relevant. Regarding software, the Linux stack, with the open software in each distribution’s repository, overwhelmingly runs on ARM, which covers the most common server use cases.

Things like nested virtualization and proprietary software (Oracle, etc.) exist, but today they do not make up the majority of use cases.

My argument is that the thing that matters most is total cost per unit of performance. On AWS, ARM is slowly eating into market share, currently at 25% of the total and rising.

I don’t see this trend changing anytime soon, and other hyperscalers will follow. People and companies self-hosting or colo-hosting these days are not early adopters and will follow over time.

WTF do you need all that computing muscle for?

Do you have a massive operation and STH is just a hobby for you guys, or what?

@Patrick Do you have any idea why there are no tower servers with EPYCs from any of the major OEMs (Dell, Lenovo, HPE)? The tower server market is a bit niche, but it’s also a very useful option when you don’t have dedicated server rooms or cabinets. Unfortunately, all of these options are Intel-only. Any ideas?

That’s AWS. They’re discounting to lock companies into their cloud. If you’re running enterprise IT, you’re running on x86 today because you’ve got many apps that don’t run on Arm. Outside of AI spend, the hip thing to do today is to move off the cloud into colo. Companies that are still cloud-only have weak IT departments that don’t have the skills to do it themselves because they’ve got weak CIOs. I work at a large F500 company, and our ROI for moving workloads off the public cloud was under 7 quarters. The workloads we moved off were there because our previous CIO followed a trend and wanted to sound like they were doing something on trend, but they were just putting IT on autopilot without adding skills to our team.

Public cloud is great if you need burst capacity, or if you need to move so fast that you can’t do it yourself yet. If you can do it yourself, then it isn’t just about the instance pricing, and it’s a lot more expensive once they’ve gotten you locked into their platform.

I have over 200 people working for me. If one of them stood up and said, “We need to add Graviton because it’s cool,” I’d coach them to find a new job.

For enterprise IT with established on-premise datacenters, hybrid cloud (whatever that means) is the sensible approach. For me, hybrid cloud implies that the same or similar infrastructure in the cloud is also available locally, which provides flexible resilience as well as leverage when negotiating both on- and off-premise prices.

As discussed in the article, ARM is not great for a hybrid cloud strategy because on-premise Altra and AmpereOne servers are slower than Amazon Graviton and Microsoft Cobalt. As also mentioned, since it’s difficult to migrate valuable legacy software to ARM, an enterprise with existing datacenters ends up with a long-term combination of x86 and ARM systems. Yuck.

For IBM shops the problem is reversed. Hybrid cloud is difficult because the major cloud providers (Amazon, Azure and Oracle) do not provide Power and System z instances. Given Amazon’s attempt to capture HPC and AI workloads, I’m somewhat surprised they haven’t sought traditional IBM workloads.

I also wonder what Serve the Home does with all their servers when not evaluating them for a journalistic review. Practical use provides important insight and that’s what this article is about.

While likely just a brainstorm, an independent test bed where companies could compare competing hardware would be really useful, and Serve the Home has the equipment to do that. Securely giving people access to run their own tests is another level, but doing so would illustrate additional aspects of the review hardware.

> “… there really is no legitimate way to download an instance image and turn it on in a server that you bought from a major vendor on prem. … but performance varies to the point that you might have to spend time analyzing that.”

Easy to say: just use “dd”, VMware migration, or HashiCorp Packer. Slightly harder to do: practice makes perfect. It’s not just the CPU (and this applies to x86 too): they’ve got the connectivity (and bigger pipes), more hardware in many cities to fail over to (which you can do from home or the office, but for a monthly fee), people on hand 24 hours a day, and the ability to reconfigure or move quickly and scale up hugely for an event. All of those things are difficult to do from the office.

It’s never that one thing is the best. It’s frequently that several things work together extremely well, maybe almost perfectly, even if a few of those things aren’t the ‘best’ (and x86 isn’t far from it for most people). It’s that all the things just work; there’s no tripping point, wall, or unexpected goalpost movement.

@Vincent S. Cojot: The Lenovo ST45 V3 Tower Server features AMD EPYC 4000 series CPUs. As you call out, the tower form factor is ideally suited for deployments where you don’t have a dedicated server room or cabinet. It is a compact tower and currently supports up to 12 cores, with 16-core options that can optimize Windows Server licensing coming soon.