The Dell PowerEdge R760 is this generation’s design study in server excellence by Dell’s engineers. In our review, we are going to see how the company’s new mainstream 2U dual Intel Xeon server compares to the rest of the industry. This is going to be a very in-depth server review as usual, so let us get to it.

Dell PowerEdge R760 Hardware Overview

As we have been doing, we are going to split this into an internal and external overview. We also have a video accompanying this article that you can find here:

We suggest watching it in its own browser, tab, or app for a better viewing experience. With that, let us get to the hardware.

Dell PowerEdge R760 External Hardware Overview

Looking at the front of the 2U PowerEdge R760, we can see a partially populated 24x 2.5″ design. Dell has other options for front storage, including 3.5″ bays, more SAS, more NVMe, and so forth, but we are only showing one configuration here.

On the left side, we get service buttons and then the eight SAS bays. Four of those bays are populated with 1.6TB SAS SSDs. We would expect many of our readers to focus more on NVMe storage these days, but many Dell customers are accustomed to using Broadcom-based PERC controllers for SAS arrays.

One of the cool features of the Dell backplane design is that it can put SAS components directly on the backplane, leaving the PCIe slot area free.

On the other side, we get the service tag, then USB console ports, a VGA port, and the power button. The big feature on this side is the NVMe drive connectivity. Here we have eight 3.2TB NVMe SSDs.

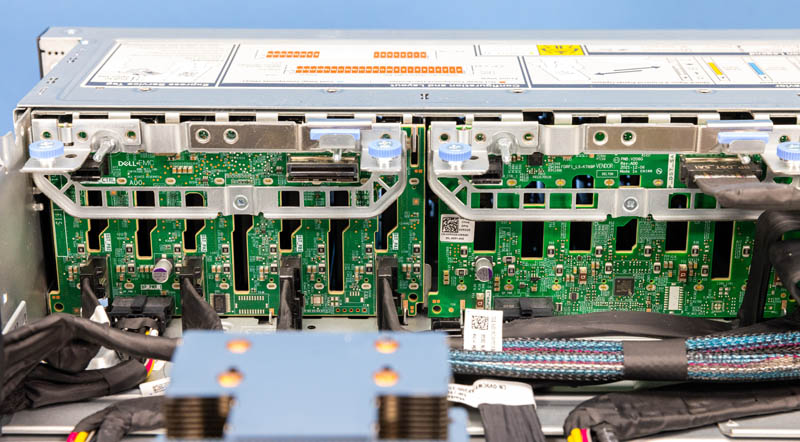

Dell’s 24x 2.5″ backplane is partitioned into three separate PCBs so one can customize this as we see in this unit. Some vendors use a single backplane, but these are easier to replace and have the SAS/SATA option. The physical design of these backplanes is the fanciest we have seen on a server to date.

Looking to the rear of the system, we can see a lot of customization potential.

First, we have the power supplies. Our unit has two 1.4kW 80Plus Platinum units. These are probably what we would target for configurations with higher-end CPUs but without high-power GPUs and accelerators or fully populated NVMe drive bays in the front. 80Plus Platinum is good, but we are seeing more servers with 80Plus Titanium power supplies. Dell offers optional Titanium-level power supplies as well, but many of its options are still Platinum rated.
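A minimal Python sketch of that sizing logic follows, assuming the two 1.4kW supplies run as a 1+1 redundant pair, so a single unit must be able to carry the whole load. Every component wattage below is a hypothetical placeholder, not a Dell specification or one of our measurements, and the 90% loading target and 92% efficiency used for the wall-power estimate are assumptions as well.

```python
from dataclasses import dataclass

PSU_RATING_W = 1400  # each of the two 1.4kW supplies in this unit


@dataclass
class Component:
    name: str
    watts: float  # hypothetical worst-case DC draw per part, not a Dell figure
    count: int = 1


def dc_load(parts: list[Component]) -> float:
    """Total worst-case DC load in watts."""
    return sum(p.watts * p.count for p in parts)


def fits_redundant(parts: list[Component], psu_w: float = PSU_RATING_W,
                   max_loading: float = 0.9) -> bool:
    """With 1+1 redundancy, one PSU alone must carry the full load.

    max_loading is an assumed safety margin (90% of the rating).
    """
    return dc_load(parts) <= psu_w * max_loading


def wall_power(dc_w: float, efficiency: float = 0.92) -> float:
    """Rough AC input estimate; 0.92 is an assumed efficiency, not a rating."""
    return dc_w / efficiency


# Hypothetical build: two high-end CPUs, no GPUs, partially populated front bays.
build = [
    Component("CPU", 300, 2),
    Component("DIMM", 10, 16),
    Component("NVMe SSD", 25, 8),
    Component("SAS SSD", 10, 4),
    Component("fans, NICs, PERC, BOSS, misc", 250),
]

print(dc_load(build))          # 1250
print(fits_redundant(build))   # True
print(round(wall_power(dc_load(build))))
```

Adding a pair of hypothetical 300W accelerators, for example `build + [Component("GPU", 300, 2)]`, pushes the load to 1850W and fails the check, which is the situation where higher-capacity supplies make sense.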

The power supplies are on either side of the chassis and are a bit different. Dell has a slender PSU here giving it a bit more room for airflow around the power supplies.

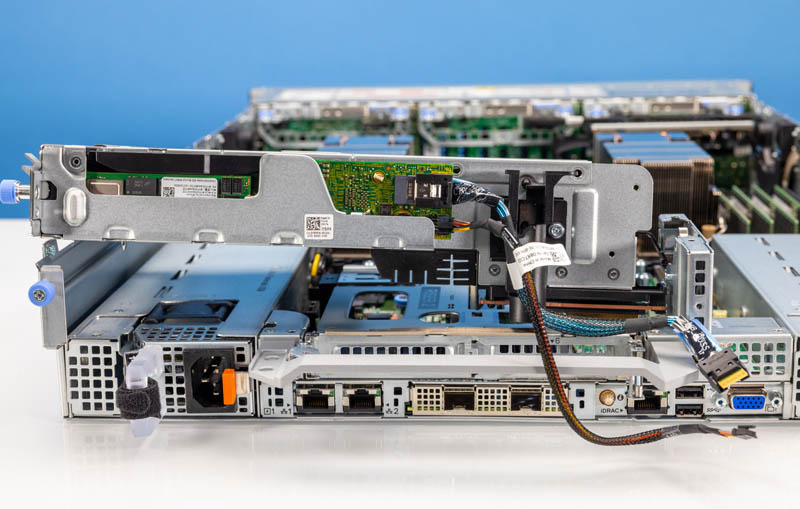

Next is an STH favorite feature: the Dell BOSS. M.2 SSDs are very reliable these days, so we now often see them inside the chassis. Dell's solution, called the Dell BOSS, takes two M.2 SSDs and makes them rear-replaceable.

This is a solution designed for boot media. The BOSS controller is a lower-end RAID controller so one can RAID two M.2 SSDs, then use that array for boot. This is important for OSes like Windows and VMware ESXi. One can use software RAID for most Linux distributions. Still, it is an easy-to-deploy solution.
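For the Linux software RAID route, a mirror built with mdadm reports its state in `/proc/mdstat`. The Python sketch below parses that text and flags any array that is missing a member. The sample content and device names are illustrative, and the parser only handles the common `mdX : active raidN ...` layout; it is a sketch, not a replacement for `mdadm --detail` or proper monitoring.

```python
import re

# Illustrative /proc/mdstat text: md0 is a healthy mirror, md1 has lost a member.
SAMPLE_MDSTAT = """\
Personalities : [raid1]
md0 : active raid1 nvme1n1p2[1] nvme0n1p2[0]
      976630464 blocks super 1.2 [2/2] [UU]

md1 : active raid1 sdb1[1] sda1[0](F)
      1046528 blocks super 1.2 [2/1] [U_]

unused devices: <none>
"""

ARRAY_RE = re.compile(r"^(md\d+) : (\S+) (raid\d+) (.+)$")
STATUS_RE = re.compile(r"\[(\d+)/(\d+)\] \[([U_]+)\]")


def parse_mdstat(text: str) -> dict[str, dict]:
    """Return {array: {"level", "devices", "healthy"}} from /proc/mdstat text."""
    arrays: dict[str, dict] = {}
    current = None
    for line in text.splitlines():
        header = ARRAY_RE.match(line)
        if header:
            name, _state, level, members = header.groups()
            current = name
            arrays[name] = {
                "level": level,
                # strip the "[slot]" and "(F)" suffixes from member names
                "devices": [d.split("[")[0] for d in members.split()],
                "healthy": False,
            }
            continue
        status = STATUS_RE.search(line)
        if status and current:
            expected, active = int(status.group(1)), int(status.group(2))
            # healthy only if all expected members are up and none show "_"
            arrays[current]["healthy"] = expected == active and "_" not in status.group(3)
            current = None
    return arrays


for name, info in parse_mdstat(SAMPLE_MDSTAT).items():
    print(name, info["level"], "OK" if info["healthy"] else "DEGRADED")
```

On a live system, pass it `open("/proc/mdstat").read()` instead of the sample string.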

One small item worth noting here is just how complex the BOSS solution is. Other vendors just use motherboard M.2 or will have a Marvell controller on a simple riser. Dell has custom sheet metal, cables, a captive thumb screw, and more.

Standard I/O on the server is non-existent. All of the I/O is via cards. Still, we wanted to cover what is in this server and show how it is implemented.

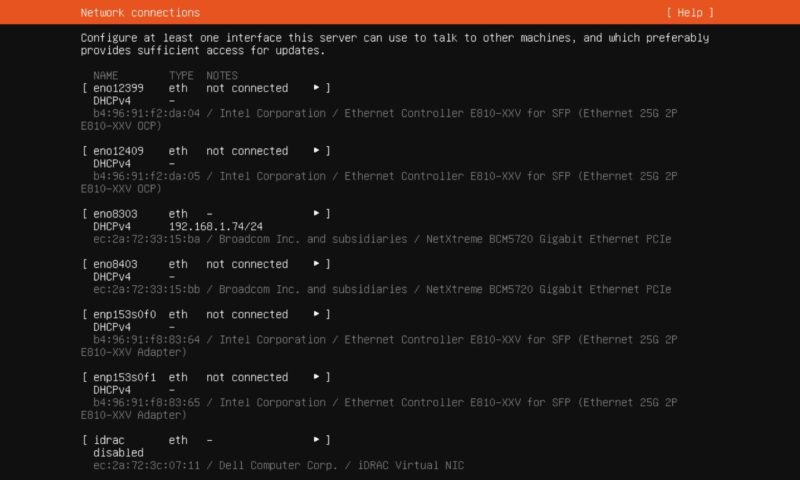

Dell uses a Dell-specific custom dual RJ45 module for its base networking. We can see this is a Broadcom NetXtreme BCM5720 dual 1GbE solution.

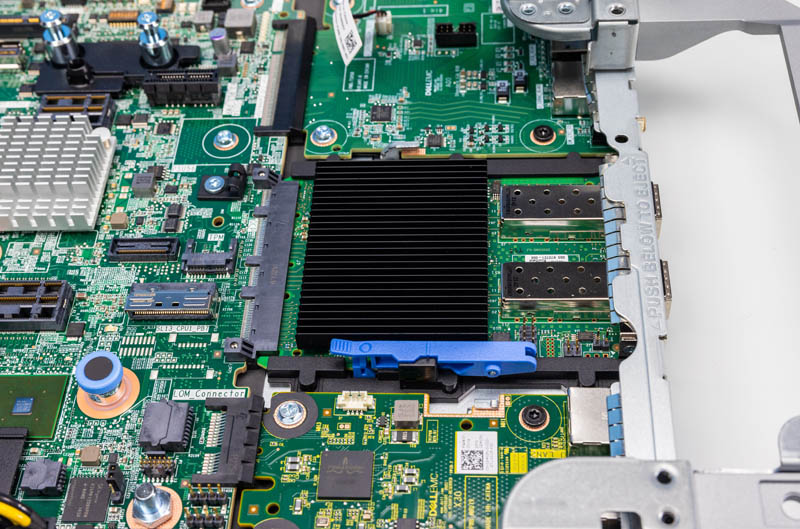

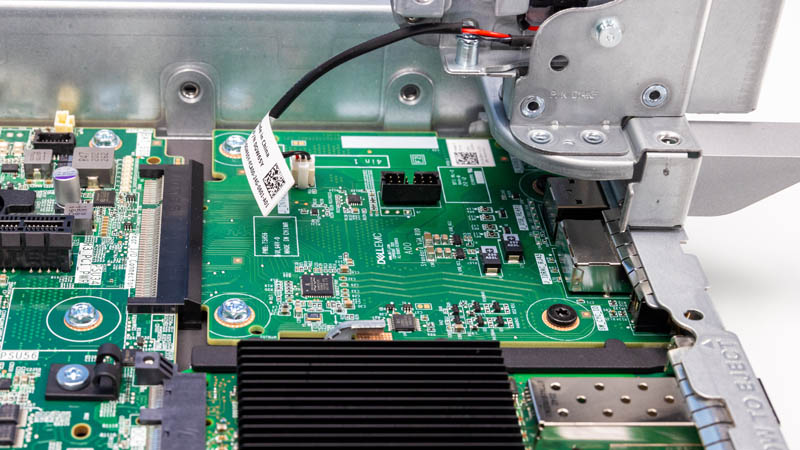

Next, we have the OCP NIC 3.0 port with an Intel E810-XXV dual 25GbE NIC installed. This is the 4C + OCP connector, and it uses the SFF with Internal Lock design to keep the card in place. That means one has to open the system and remove risers to service this NIC. SFF with Pull Tab cards can be serviced without opening the system, which is why we see them on servers designed to minimize service costs, like hyperscale servers. Dell’s business model includes significant service revenue, so it is likely less keen to use that design here.

The iDRAC service port, USB ports, and VGA port are on another custom module. This module also has chassis intrusion detection.

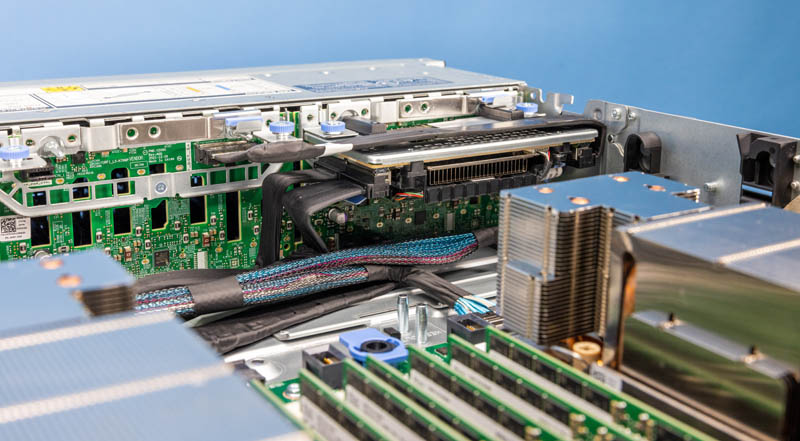

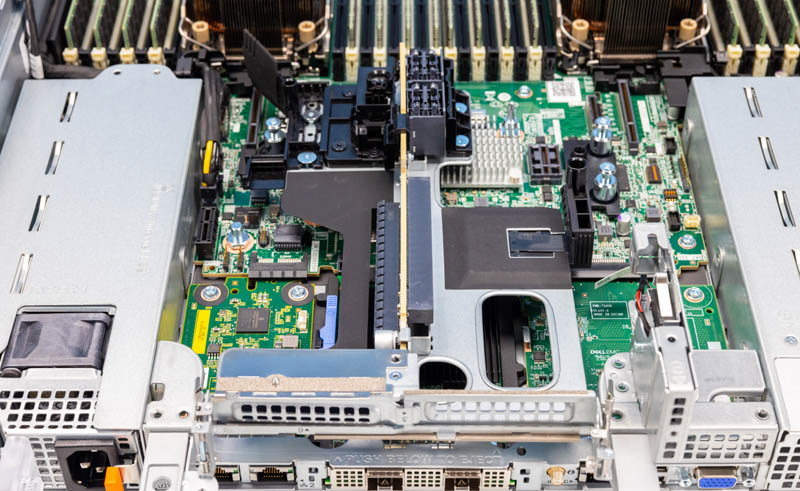

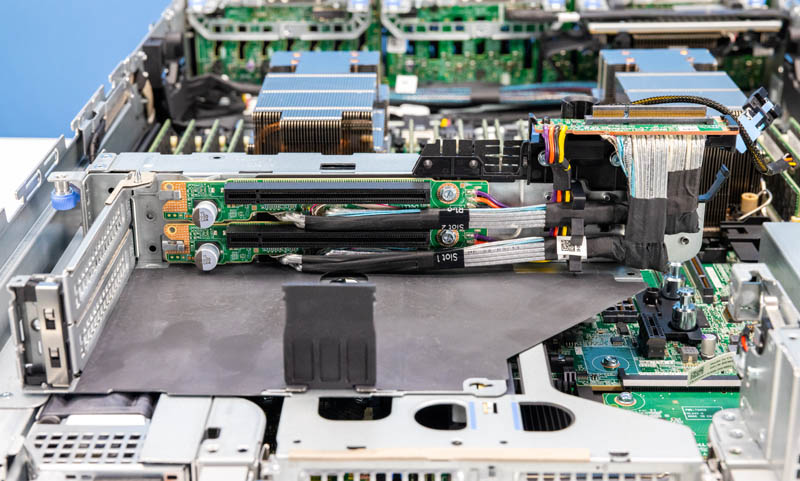

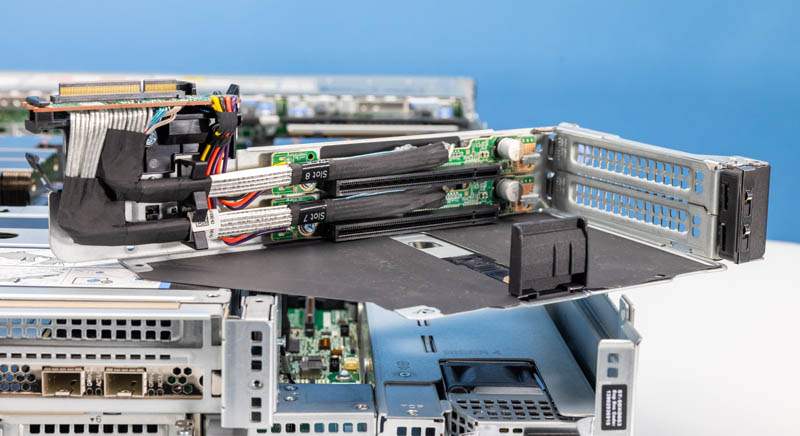

In terms of the risers, the PowerEdge R760 has an amazing ability to handle eight PCIe Gen5 slots, and that does not include the OCP NIC 3.0 slot.

Just above the OCP NIC 3.0 slot, there is a dual low-profile riser. We will cover the connectors in more detail later, but Dell is using high-density connectors instead of cables for all of its risers. It does have some cable-in-the-middle designs like this dual PCIe Gen4 slot riser design.

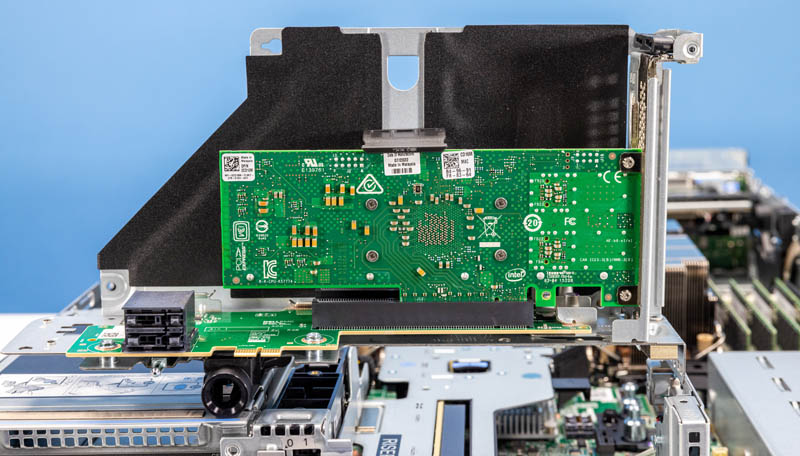

This is the middle riser where we have a PCIe 25GbE NIC.

This is another cable-in-the-middle dual PCIe Gen5 riser.

This is a great exterior design for the server, but now let us get inside the server to see how it works.

On BOSS cards: previous generations required a full power-down and removing the top lid to access the M.2 card to change BOSS sticks. This new design allows hot-swapping BOSS modules without having to remove the server from the rack. Very handy for server admins.

I’m loving the server. We have hundreds of R750s and the R740xas you reviewed, so we’ll be upgrading late this year or next, thanks to the recession.

Pricing comments stand out here.

I’m in disbelief that Dell or HPE doesn’t just buy STH to have @Patrick be the “Dude you’re gettin’ a PowerEdge” guy. They could pay him seven figures a year and it’d increase revenue by nine figures for whoever does it. I’d be sad to lose STH from that, though.

Shhhh….that worked with Anand, Kyle, Ryan, Cyril, Geoff, Scott….

I had a look at these servers and preferred the Lenovo over them. I got a quote for a bunch of NVMe drives, and they wanted to put two PERCs in to cover the connectivity. Their solution was expensive and inflexible, especially if you put the NVMe drives on the RAID controllers; I think it only does Gen3 and only 2 lanes per drive, or something like that.

The solution I got from Lenovo with the new Genoa chip was cheaper, faster, and better because of their AnyBays, and the pricing on the U.3 drives was pretty good as well, especially because I only wanted 16 cores and they have a single-CPU version.

You need to take the time to read the full technical documentation for this server, with the options and how stuff connects.

Agreed that the pricing is bonkers. I built a R7615 on Dell public, and it came out to $110k, while on Dell Premier it was $31k. A Dell Partner had built that out and tried to say $60k was a reasonable price, based on the lack of discounts Dell gave them. Mind you a comparable Lenovo or Thinkmate was $5-7k less than the $31k.

Second is their insane pricing on NVMe drives in particular. In a world where you can get Micron 9400 Pro 32TB drives for ~$4,200 retail (from CDW no less), Dell asking $6,600 for a 15.36TB Kioxia CD6-R (which is their “reasonable” Premier price) is shameful! I think they are actively trying to drive people away from NVMe, despite the PERC12 being a decent NVMe RAID controller for most use cases. Whenever I talk to a Dell rep / partner, they try to push me to SAS SSDs since NVMe “are so expensive”. You’re the ones making it so damn expensive!

Dell really should get more criticism / kick back for these tactics.
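Normalizing the two drive quotes in that comment to dollars per terabyte makes the gap easier to see. This Python sketch simply uses the prices and capacities exactly as quoted there; they are not verified list prices.

```python
def usd_per_tb(price_usd: float, capacity_tb: float) -> float:
    """Price normalized to the drive's labeled capacity."""
    return price_usd / capacity_tb


# Prices and capacities as quoted in the comment, not verified list prices.
micron_retail = usd_per_tb(4200, 32)     # Micron 9400 Pro, ~$4,200 retail
dell_kioxia = usd_per_tb(6600, 15.36)    # Kioxia CD6-R 15.36TB, Dell Premier

print(f"Micron retail: ${micron_retail:,.2f}/TB")  # $131.25/TB
print(f"Dell Kioxia:   ${dell_kioxia:,.2f}/TB")    # $429.69/TB
print(f"Dell premium:  {dell_kioxia / micron_retail:.1f}x per TB")  # 3.3x
```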

Tam, FYI the PERC12 is based on the Broadcom SAS4116W chipset and has an x16 PCIe Gen 4 interface, and I believe it uses two Gen 4 lanes per SSD. That is still a bottleneck if you are going for density of drives, but it is fairly high performing if you can split capacity out across a few more half-capacity drives, e.g. four 15TB drives instead of two 32TB drives.

There are a couple of published benchmarks showing the difference, for example:

https://infohub.delltechnologies.com/p/dell-poweredge-raid-controller-12/

So, if you do need hardware NVMe RAID, such as for VMware with local storage, the PERC12 looks like a solid solution. But they do have direct-connect options to bypass using a PERC if you prefer that.
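The lane arithmetic behind the "more, smaller drives" suggestion can be sketched in a few lines of Python. It assumes an x16 PCIe Gen4 host link and two lanes per SSD, as stated (with some hedging) in the comment, uses the 16 GT/s Gen4 signaling rate with 128b/130b encoding, and ignores protocol overhead and the controller's own processing limits, so real-world numbers will be lower.

```python
GEN4_GT_PER_S = 16.0     # PCIe Gen4 signaling rate per lane
ENCODING = 128 / 130     # 128b/130b line encoding (Gen3 and later)


def lane_gbps(gt_per_s: float = GEN4_GT_PER_S) -> float:
    """Usable GB/s per lane, per direction, before protocol overhead."""
    return gt_per_s * ENCODING / 8


def aggregate_gbps(drives: int, lanes_per_drive: int = 2, host_lanes: int = 16) -> float:
    """Best-case sequential bandwidth: limited by drive-side lanes or the host link."""
    drive_side = drives * lanes_per_drive * lane_gbps()
    host_side = host_lanes * lane_gbps()
    return min(drive_side, host_side)


for n in (2, 4, 8):
    print(f"{n} drives at x2: {aggregate_gbps(n):.1f} GB/s")
# 2 drives at x2: 7.9 GB/s
# 4 drives at x2: 15.8 GB/s
# 8 drives at x2: 31.5 GB/s
```

Under these assumptions, two drives at x2 use only a quarter of the host link, while eight drives can saturate it, which is why spreading capacity across more, smaller drives helps.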

Thanks for that link, Adam. The Broadcom docs for this generation of chip, with two x8 connectors (8 PCIe lanes per connector), show that the number of lanes assigned can vary by connector and can be 1, 2, or 4 per drive, but must be the same for every drive on a specific connector. See page 12 of

https://docs.broadcom.com/doc/96xx-MR-eHBA-Tri-Mode-UG

Keep in mind, though, that the backplane needs to support matching arrangements. Not sure what Dell is doing there. It’s an issue on at least some Supermicro systems, AFAIK.
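The per-connector rule described above can be expressed as a small validation function. This Python sketch models a controller with two x8 connectors where every drive on a connector must use the same width of 1, 2, or 4 lanes, as the comment describes from the Broadcom guide. `validate_layout` and `max_drives` are hypothetical helper names, and the sketch does not model what the backplane actually supports.

```python
LANES_PER_CONNECTOR = 8      # two x8 connectors on this controller generation
CONNECTORS = 2
ALLOWED_WIDTHS = {1, 2, 4}   # lanes per drive, per the commenter's reading of the guide


def validate_layout(layout: list[list[int]]) -> None:
    """Hypothetical helper: layout[i] lists the lane width of each drive on connector i."""
    if len(layout) > CONNECTORS:
        raise ValueError(f"only {CONNECTORS} connectors available")
    for i, widths in enumerate(layout):
        if not widths:
            continue  # unused connector
        if len(set(widths)) != 1:
            raise ValueError(f"connector {i}: mixed widths {sorted(set(widths))}")
        if widths[0] not in ALLOWED_WIDTHS:
            raise ValueError(f"connector {i}: unsupported width x{widths[0]}")
        if sum(widths) > LANES_PER_CONNECTOR:
            raise ValueError(f"connector {i}: {sum(widths)} lanes exceeds x{LANES_PER_CONNECTOR}")


def max_drives(widths_per_connector: tuple[int, ...]) -> int:
    """Hypothetical helper: maximum drive count when each connector uses one width."""
    layout = [[w] * (LANES_PER_CONNECTOR // w) for w in widths_per_connector]
    validate_layout(layout)
    return sum(len(drives) for drives in layout)


print(max_drives((2, 2)))  # 8: every drive at x2
print(max_drives((4, 1)))  # 10: two x4 drives plus eight x1 drives
validate_layout([[2, 2, 2], [4, 4]])  # passes: each connector is uniform
```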

So glad to see the latency came down to NVMe levels in that PERC12 testing and write IOPS improved so much. As a Windows shop, hardware RAID is still our standard.

Agreed on the silliness of the Dell drive pricing though – had to go Supermicro due to it on some storage servers, though I much prefer Dell fit/finish, driver/firmware and iDRAC for our small shop. I was able to get around it by finding a Dell outlet R740xd that met our needs one time as well (and was packed with drives so risk of needing to upgrade was very low).

Whereas I can buy a retail drive (of approved/qualified P/N) and put it in my Supermicro servers.

SIX HUNDRED WATTS IDLE AND 2000 DOLLAR MEMORY STICKS? What is this, man.

Do you know where the power is going for a 600W idle figure? You mentioned a specific high-performance state config; is that some sort of ‘ASPM is a lie, nothing gets to sleep ever, remain in your most available C-states, etc.’ configuration (and, if so, are there others?), or is there just a lot going on between the lowest power those two Xeons can draw without going to sleep and the RAM, PERC, etc.?

I wouldn’t be at all surprised to see 2x 300W nominal Xeons demand basically all the power you can supply when bursting under load; that’s normal enough. I’m just shocked to see that idle figure, especially when older Dells in the same basic vein, with older CPUs that have less refined power management, larger-process silicon throughout the system generally, plus 12x 3.5in disks, put in markedly lower figures.

I think the R710 I was futzing with the other day was something like 100-150W, and that was with a full set of mechanical drives, full RAM load, much, much less efficient CPUs, etc. Lower peak load, obviously, since the CPUs just can’t draw anything like the same amount the newer ones can, but at idle I would have expected it to be worse across the board.
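To put those idle figures in context, here is a quick Python sketch of annual energy for a constant load. It uses the 600W idle figure discussed in these comments and the upper end of the 100-150W R710 estimate, assumes the machine runs 24x7 for a 365-day year, and ignores cooling overhead. The electricity price in the last line is a hypothetical value chosen only for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_kwh(watts: float) -> float:
    """Energy drawn by a constant load running 24x7 for a year, in kWh."""
    return watts * HOURS_PER_YEAR / 1000


def annual_cost(watts: float, usd_per_kwh: float) -> float:
    """Electricity cost of that load, excluding cooling overhead."""
    return annual_kwh(watts) * usd_per_kwh


print(annual_kwh(600))  # 5256.0 kWh at the 600W idle figure
print(annual_kwh(150))  # 1314.0 kWh at 150W
# Hypothetical $0.15/kWh rate, just for illustration:
print(f"${annual_cost(600, 0.15) - annual_cost(150, 0.15):,.0f} per year difference")
```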

Thanks for the reply, Adam. I did realize that after I posted it, but I don’t think you can edit comments. The other thing was the requirement I was quoted to have two PERCs installed. If I were building something “special,” especially with NVMe drives, I would go with the Intel server; you can really muck around with them. Support is a bit of a pain unless you’re a big guy, but for what you save, you can afford to actually buy a couple of spares, like a mainboard, etc. They have connectivity on their boards directly behind the drive bays with MCIO connectors, all running flat out if you need it. I have a unit here with VROC that I was testing, but I got some funny results and don’t have the coin to buy really good drives to see if the issue still arises.

I would love STH to actually build a VROC server and do some numbers with some quality components, since you probably have most of it hanging around.

I completely agree about VROC; I requested that in a comment on another STH article. Since VROC is supported by VMware, I’m really curious what performance looks like versus software RAID, ZFS, and a current-gen hardware RAID controller.

Also as a general question for all readers- does anyone know in practice how this generation of Dell servers handles non-Dell NVMe drives? What little Dell publishes just states there are certain NVMe features that are required.

Maybe STH could test tossing a couple of Micron 9400 drives into the R760?

I created a thread about VROC on the forums and shared my very limited experience. Figured we do not want to pollute these comments further. I agree there is a dearth of information available on it which is a problem as there is a need for such solutions.

https://forums.servethehome.com/index.php?threads/intel-vroc-2023.39815/

Super thread on VROC.

Yuno put it about as well as anyone, Dell has lost their damn minds and their entire company must be high to think this crap is okay. I just saw today they’re still selling a desktop with an nvidia 1650 in it for almost $1000 ( https://www.dell.com/en-us/shop/desktop-computers/vostro-tower/spd/vostro-3910-desktop/smv3910w11ps75397 ), what kind of bizarro world are we living in?

What kind of performance are you seeing with the BOSS setup? I just received a PowerEdge R360 with a BOSS-N1 configured for RAID1 and I’m getting 1400MB/s READ and 222MB/s WRITE. I can’t find any reviews stating the read/write performance.