I remember a Wednesday in January 2020 very well, just before the global shutdown stopped international travel. I drove over to Cerebras Systems, one town over in Los Altos, California, to interview Andrew Feldman, the company’s CEO. At Hot Chips 31 (2019), Cerebras was the standout, as we covered in Cerebras Wafer Scale Engine AI chip is Largest Ever. I first had the opportunity to see the system built around the WSE, the Cerebras CS-1 Wafer-Scale AI System, at SC19. At Hot Chips 32, Cerebras disclosed some details of its new chip, the WSE Gen2.

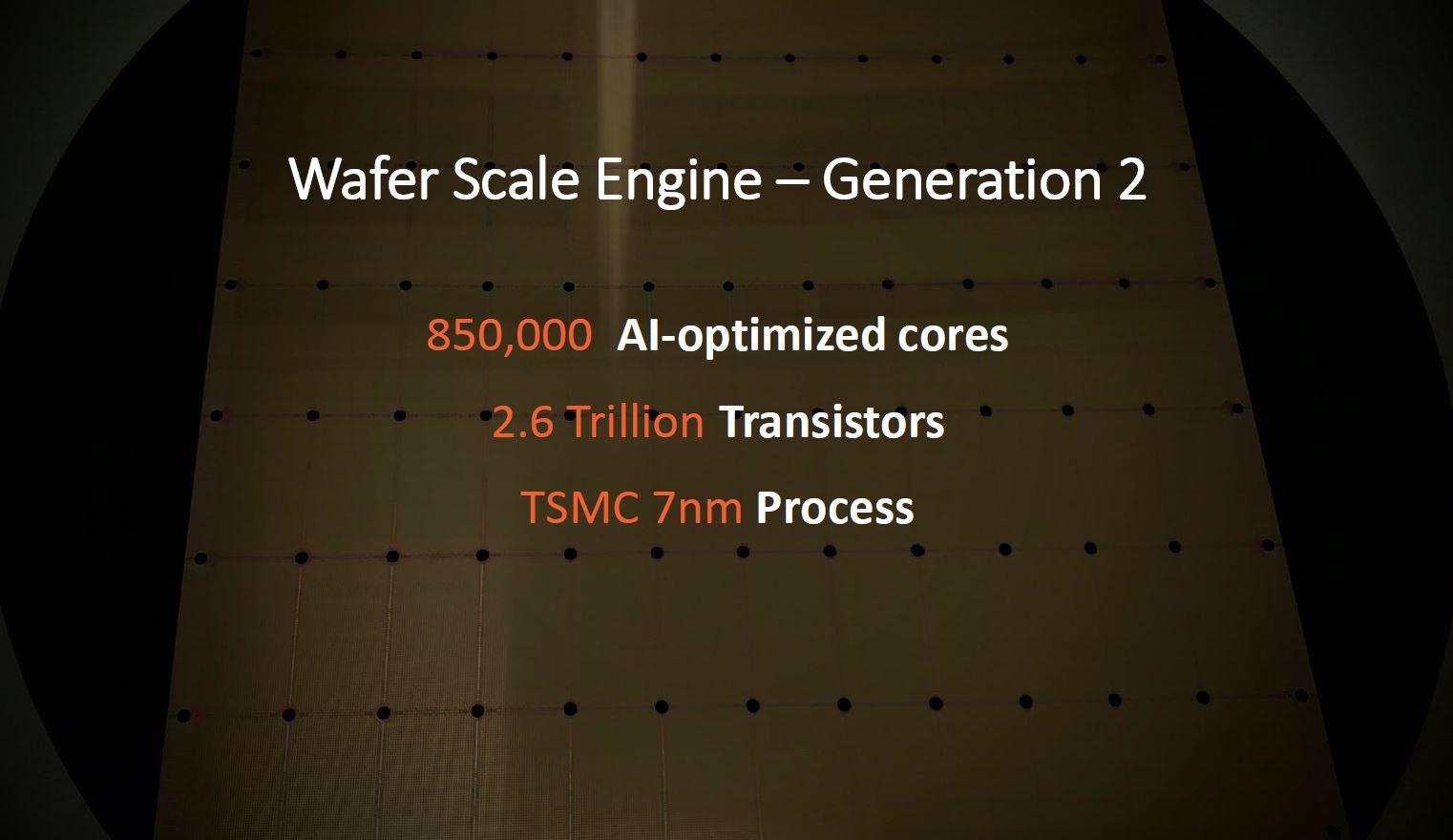

Cerebras Wafer Scale Engine Gen2

The Cerebras WSE Gen2 offers absolutely huge numbers. For some scale, the Xilinx Versal Premium’s top-end multi-chip package FPGA we covered today, which uses CoWoS to bind together multiple chips, tops out at 92 billion transistors. For GPUs, the NVIDIA A100 has around 54 billion transistors. In contrast, the Cerebras WSE Gen2 encompasses 2.6 trillion transistors, and that is on a single chip. These are all on 7nm TSMC processes.
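To put those figures side by side, here is a quick back-of-the-envelope sketch. It uses only the approximate transistor counts quoted above; the dictionary and the `ratio_to` helper are names made up for this illustration, not anything from Cerebras, Xilinx, or NVIDIA.

```python
# Approximate transistor counts as quoted in this article.
TRANSISTORS = {
    "Xilinx Versal Premium (top-end)": 92_000_000_000,
    "NVIDIA A100": 54_000_000_000,
    "Cerebras WSE Gen2": 2_600_000_000_000,
}


def ratio_to(reference: str, other: str) -> float:
    """Return how many times more transistors `reference` has than `other`."""
    return TRANSISTORS[reference] / TRANSISTORS[other]


if __name__ == "__main__":
    wse = "Cerebras WSE Gen2"
    for name in TRANSISTORS:
        if name != wse:
            # Print the multiple with one decimal place.
            print(f"{wse} vs {name}: {ratio_to(wse, name):.1f}x")
```

On these numbers, the WSE Gen2 works out to roughly 48 times the transistor count of the A100 and roughly 28 times that of the top-end Versal Premium package.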

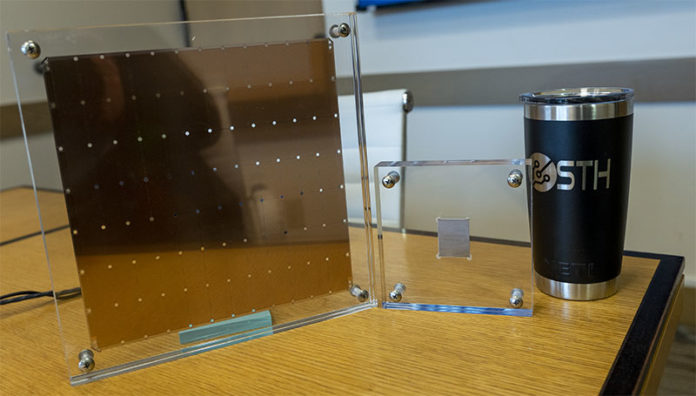

This 850,000 AI-optimized core chip is already back at Cerebras headquarters. It is likely in the same lab we toured earlier this year in Los Altos. This is the only photo we could take from the lab:

If you are wondering what that is, it is a fully integrated rack of Cerebras CS-1 systems designed for high density, with cooling and power integrated. For the specific customer it was being designed for, cooling was provided not by air but by facility water. That gives one some sense of the demand that Cerebras is seeing. The company is beyond the phase of customers looking for single systems and is moving to customers purchasing many systems and asking for higher integration.

One of the benefits of the giant WSE structure is that it eliminates an extraordinary amount of chassis, PCB, cooling, networking, and other components from AI clusters.

Final Words

The Cerebras Wafer Scale Engine Gen2 is very exciting. Cerebras declined to comment on the amount of SRAM on-chip, but there is a good chance it has scaled with the number of cores. We expect more information later this year. Hopefully, one of these days I will be able to head over to the Cerebras lab again, see the WSE Gen2 in person, and find out whether the Cerebras lobby skeleton has managed to thrive through the global pandemic.

The big question we have now is whether the CS-1 will get a WSE Gen2 upgrade or whether we will get a CS-2.

Very neat, I am glad to hear they are getting business!

But what is with the skeleton?

No idea on the skeleton. It was just sitting there in the lobby when I popped in during a January 2020 visit.

Thanks, Patrick!

They just did an install at LLNL: https://www.hpcwire.com/2020/08/19/cerebras-llnl-deployment-teases-second-gen-wafer-scale-ai-chip/

Good work on special processing to define info to meet solution. ✔️****✌️