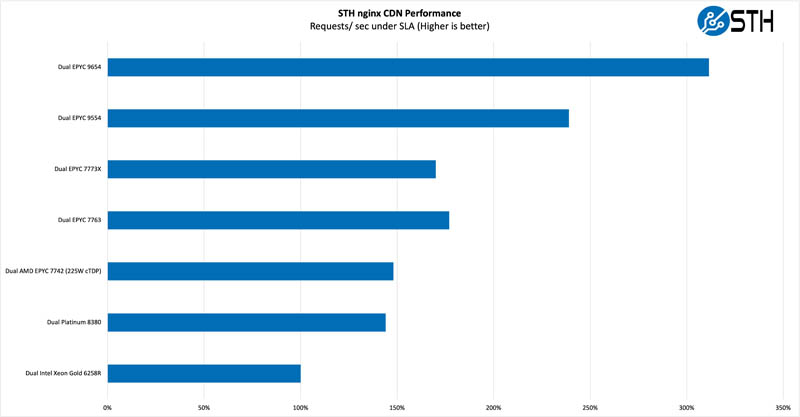

STH nginx CDN Performance

For the nginx CDN test, we use an old snapshot and access patterns from the STH website, with DRAM caching disabled, to show what performance looks like when fetching data from disks. This requires low-latency nginx operation plus an additional step of low-latency I/O access, which makes it interesting at a server level. Here is a quick look at the distribution:

This is a case that was actually not great for Milan-X, and frankly it does not take advantage of all of the new instructions and features of the new chips. It simply runs real-world website traffic patterns through the system and over the network, so it exercises a longer chain of components. Still, the performance gap relative to the older generation parts is astounding. If you have early 2021 systems (the Gold 6258R), we are getting more than 3x the performance. 3:1 consolidation ratios in less than 24 months is almost unheard of in the industry.
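To put a consolidation ratio in fleet terms, here is a minimal sketch. It assumes throughput scales linearly with server count, which real deployments only approximate. The function name and the 30-server fleet are hypothetical; the 3x ratio is the figure from the result above.

```python
import math


def servers_needed(old_servers: int, perf_ratio: float) -> int:
    """Return how many new servers match the aggregate throughput of the old fleet.

    perf_ratio is new-server throughput divided by old-server throughput.
    Assumes linear scaling with server count (an idealization).
    """
    if old_servers < 0 or perf_ratio <= 0:
        raise ValueError("old_servers must be >= 0 and perf_ratio must be > 0")
    # Round up: a fraction of a server still means buying a whole one.
    return math.ceil(old_servers / perf_ratio)


# Hypothetical fleet of 30 older servers at the ~3x ratio seen in this test.
print(servers_needed(30, 3.0))  # 10
```

Because of the rounding, small fleets see less than the headline ratio: ten old servers at 3x still need four new ones.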

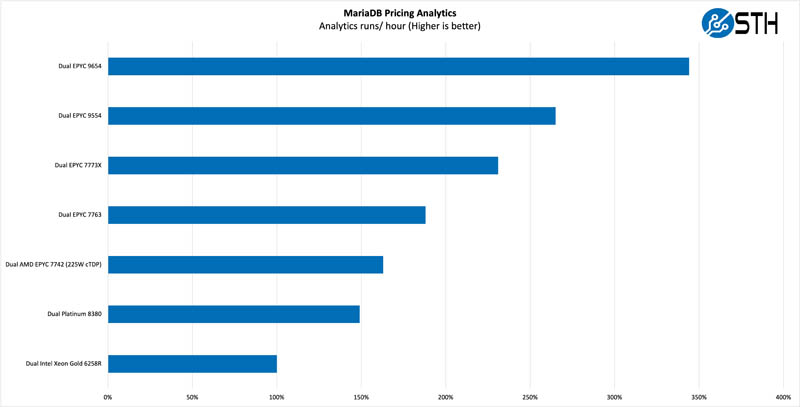

MariaDB Pricing Analytics

This one is personally very interesting to me. The origin of this test is that we have a workload that runs deal management pricing analytics on a set of data that has been anonymized from a major data center OEM. The application effectively looks for pricing trends across product lines, regions, and channels to determine good deal/bad deal guidance based on market trends to inform real-time BOM configurations. If this seems very specific, the big difference between this and something deployed at a major vendor is the data we are using. This is the kind of application that has moved to AI inference methodologies, but it is a great real-world example of something a business may run in the cloud.

On the MariaDB pricing analytics side, this is another real-world enterprise workload. Here we can see around 3.5:1 consolidation over the top-bin dual-socket server CPUs that one could have purchased in 2022.

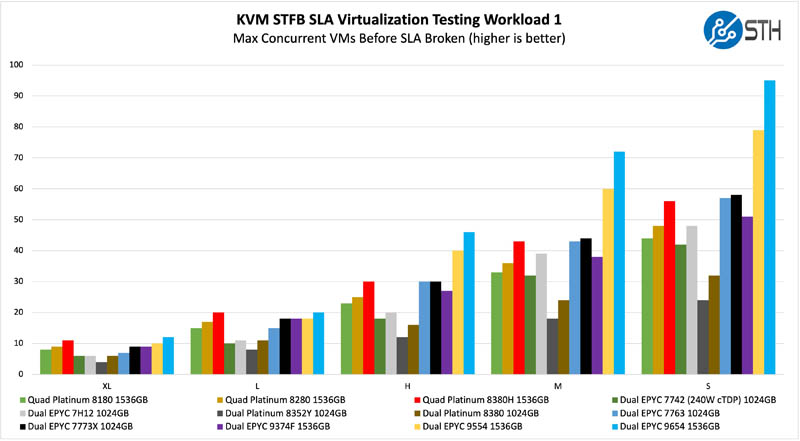

STH STFB KVM Virtualization Testing

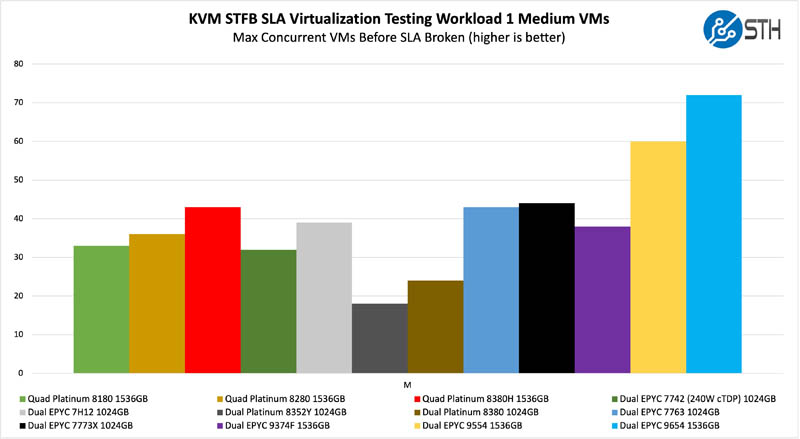

One of the other workloads we wanted to share is from one of our DemoEval customers. We have permission to publish the results, but the application itself being tested is closed source. This is a KVM virtualization-based workload where our client is testing how many VMs it can have online at a given time while completing work under the target SLA. Each VM is a self-contained worker. This is similar to VMware VMmark in terms of what it is doing, just using KVM to be more general.

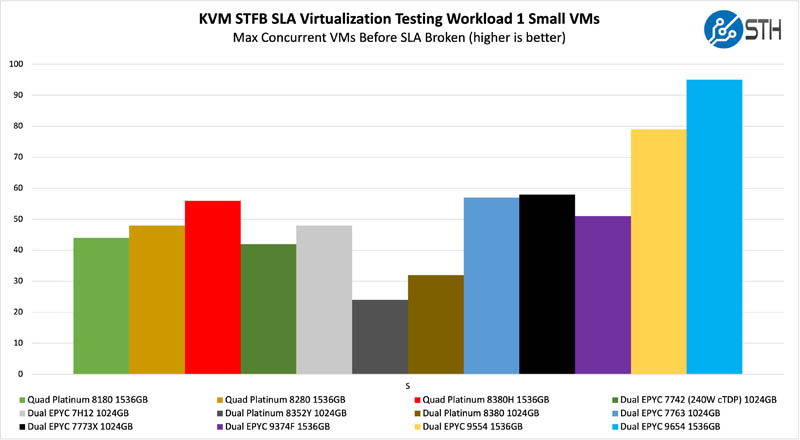

There are a lot of bars on this chart. The top end looks compressed because those VMs are so large that we do not get enough resolution between the systems. Instead, let us focus on some of the smaller VM sizes.

This is where things get really interesting. We get fairly massive changes between the AMD EPYC 9654 and the top-bin 3rd Gen Xeon Scalable processors. If you have a dual-socket Intel Xeon Platinum 8380 or a quad-socket Intel Xeon Platinum 8380H, you can get huge consolidation ratios moving to the new EPYC chips.

Moving up to the medium-size VMs, we get a similar view of consolidation. This is one where if AMD had stuck with 8-channel DDR5 instead of 12-channel, the performance gap would have closed.

Even with this, folks are going to be noting higher TDPs on the chips. As such, next, we wanted to address power consumption observations on the new Genoa platforms to put the performance increases into a performance-per-watt context at a system level.

Any chance of letting us know what the idle power consumption is?

$131 for the cheapest DDR5 DIMM (16GB) from Supermicro’s online store

That’s $3,144 just for memory in a basic two-socket server with all DIMMs populated.

Combined with the huge jump in pricing, I get the feeling that this generation is going to eat us alive if we’re not getting those sweet hyperscaler discounts.
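The arithmetic behind the $3,144 figure can be sketched as follows. It assumes 12 memory channels per socket with one DIMM per channel; the one-DIMM-per-channel layout is an assumption, while the $131 price and 16GB size are the ones quoted above. The function name is hypothetical.

```python
def memory_cost(price_per_dimm: int, dimm_gb: int, sockets: int = 2,
                channels_per_socket: int = 12, dimms_per_channel: int = 1):
    """Return (dimm_count, total_capacity_gb, total_price) for a fully populated server."""
    # One DIMM per channel is assumed here; 2DPC boards would double the count.
    dimms = sockets * channels_per_socket * dimms_per_channel
    return dimms, dimms * dimm_gb, dimms * price_per_dimm


dimms, capacity_gb, total = memory_cost(price_per_dimm=131, dimm_gb=16)
print(dimms, capacity_gb, total)  # 24 384 3144
```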

I like that the inter CPU PCIe5 links can be user configured, retargeted at peripherals instead. Takes flexibility to a new level.

Hmm… Looks like Intel’s about to get forked again by the AMD monster. AMD’s been killing it ever since Zen 1. So cool to see the fierce competitive dynamic between these two companies. So Intel, YOU have a choice to make. Better choose wisely. I’m betting they already have their decisions made. :-)

2 hrs later I’ve finished. These look amazing. Great work explaining STH

Do we know whether Siena will effectively eliminate the niche for Threadripper parts; or are they sufficiently distinct in some ways as to remain as separate lines?

In a similar vein, has there been any talk (whether from AMD or system vendors) about doing Ryzen designs with ECC that’s actually a feature rather than just not-explicitly-disabled to answer some of the smaller Xeons and server-flavored Atom derivatives?

This generation of EPYC looks properly mean; but not exactly ready to chase Xeon D or the Atom derivatives down to their respective size and price.

I look at the 360W TDP and think “TDPs are up so much.” Then I realize that divided over 96 cores that’s only 3.75W per core. And then my mind is blown when I think that servers of the mid 2000s had single core processors that used 130-150W for that single core.

Why is the “Siena” product stack even designed for 2P configurations?

It seems like the lower-end market would be better served by “Siena” being 1P only, and anything that would have been served by a 2P “Siena” system instead using a 1P “Genoa” system.

Dunno, AMD has the tech, why not support single and dual sockets? With single and dual socket Siena you should be able to beat Intel on price *AND* price/perf against the Intel 8 channel memory boards for uses that aren’t memory bandwidth intensive. For those looking for max performance and bandwidth/core AMD will beat Intel with the 12 channel (actually 24 channel x 32 bit) Epyc. So basically Intel will be sandwiched by the cheaper 6 channel from below and the more expensive 12 channel from above.

With PCIe 5 support apparently being so expensive on the board level, wouldn’t it be possible to only support PCIe 4 (or even 3) on some boards to save costs?

All the other benchmarks are amazing, but I saw a molecular dynamics test on another website and Houston, we have a problem! Why?

Olaf Nov 11 I think that’s why they’ll just keep selling Milan

@Chris S

Siena is a 1p only platform.

Looks great for anyone that can use all that capacity, but for those of us with more modest infrastructure needs there seems to be a bit of a gap developing where you are paying a large proportion of the cost of a server platform to support all those PCIE 5 lanes and DDR5 chips that you simply don’t need.

Flip side to this is that Ryzen platforms don’t give enough PCIE capacity (and questions about the ECC support), and Intel W680 platforms seem almost impossible to actually get hold of.

Hopefully Milan systems will be around for a good while yet.

You are jumping around WAY too much.

How about stating how many levels there are in CPUs. But keep it at 5 or less “levels” of CPU and then compare them side by side without jumping around all over the place. It’s like you’ve had five cups of coffee too many.

You obviously know what you are talking about. But I want to focus on specific types of chips because I’m not interested in all of them. So if you broke it down in levels and I could skip to the level I’m interested in with how AMD is vs Intel then things would be a lot more interesting.

You could have sections where you say that they are the same no matter what or how they are different. But be consistent from section to section where you start off with the lowest level of CPUs and go up from there to the top.

There may have been a hint on pages 3-4 but I’m missing what those 2000 extra pins do, 50% more memory channels, CXL, PCIe lanes (already 160 on previous generation), and …

Does anyone know of any benchmarking for the 9174F?

On your EPYC 9004 series SKU comparison the 24 cores 9224 is listed with 64MB of L3.

As a chiplet has a maximum of 8 cores, one needs a minimum of 3 chiplets to get 24 cores.

So unless AMD disables part of the L3 cache of those chiplets, a minimum of 96 MB of L3 should be shown.

I will venture the 9224 is a 4-chiplet SKU with 6 cores per chiplet, which should give a total of 128MB of L3.

EricT – I just looked up the spec, it says 64MB https://www.amd.com/en/products/cpu/amd-epyc-9224

Patrick, I know, but it must be a clerical error, or they have decided to reduce the 4 chiplets L3 to 16MB which I very much doubt.

3 chiplets are not an option either as 64 is not divisible by 3 ;-)

Maybe you can ask AMD what the real spec is, because 64MB seems weird?

@EricT I got to use one of these machines (9224) and it is indeed 4 chiplets, with 64MB L3 cache total. Evidently a result of parts binning and with a small bonus of some power saving.
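The chiplet arithmetic in this thread can be checked with a short sketch. It assumes a fully enabled chiplet carries 32MB of L3 (the value implied by the 96MB and 128MB figures above) and that cores are spread evenly across chiplets. The function and constant names are hypothetical.

```python
FULL_L3_PER_CHIPLET_MB = 32  # assumed: L3 on a fully enabled chiplet


def chiplet_summary(cores: int, chiplets: int, listed_l3_mb: int):
    """Return (cores_per_chiplet, full_l3_mb, listed_l3_per_chiplet_mb).

    full_l3_mb is the total if no cache were disabled; the last value is
    what each chiplet must expose to reach the listed total.
    """
    if cores % chiplets != 0:
        raise ValueError("cores must divide evenly across chiplets")
    cores_per_chiplet = cores // chiplets
    full_l3_mb = chiplets * FULL_L3_PER_CHIPLET_MB
    return cores_per_chiplet, full_l3_mb, listed_l3_mb / chiplets


# EPYC 9224: 24 cores, 64MB L3 listed on the spec page.
for n in (3, 4):
    print(n, chiplet_summary(24, n, 64))
```

With 4 chiplets the listed 64MB works out to an even 16MB per chiplet, half of the assumed full amount, which matches the binning explanation above. With 3 chiplets the per-chiplet figure is not a whole number of megabytes.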