Earlier this month, I tweeted a fun little snippet early on a Monday morning. Some of us on the team had been talking about it, we had a few external requests, and people thought it was interesting. We had an AMD EPYC 7601 server that had been up for almost two years.

Finding an AMD EPYC server that has been up for 725 days at around ~70% load average is like an archeological find. This has been running since only about a quarter after EPYC was in solid supply. Alas, time to reboot. pic.twitter.com/XnFc9URKiJ

— Patrick J Kennedy (@Patrick1Kennedy) December 2, 2019

The 725 Day AMD EPYC Server

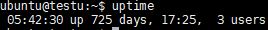

Here is a quick shot of the uptime report before rebooting the server.

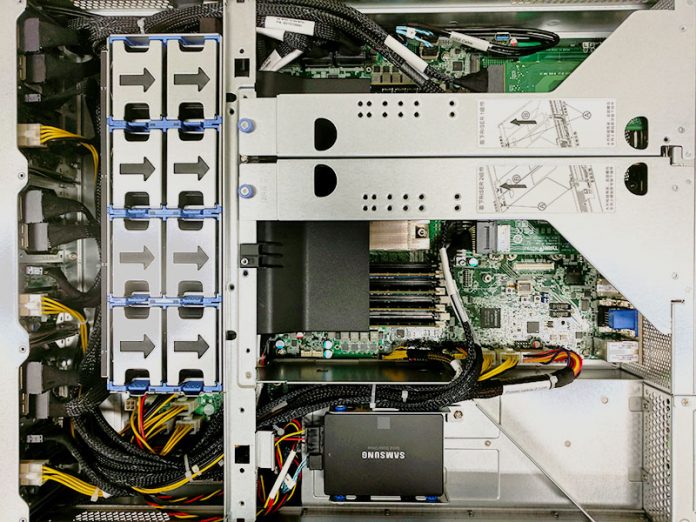

The story behind the Tyan Transport SX AMD EPYC-powered server, a predecessor to the Tyan Transport SX TS65A-B8036 2U 28-bay AMD EPYC server we just reviewed, is fairly simple. At STH, we run our own infrastructure. As a result, I strongly believe that if STH is going to recommend something, I should be willing to use it.

During the AMD EPYC 7001 launch, AMD AGESA, the basic code underpinning EPYC systems, was rough, to say the least. For example, we rebooted a test system the day before the launch event and it ran into an AGESA bug that required pulling the CMOS battery. That would be annoying on a consumer platform, but it is an extra level of annoyance on a server. Pulling a CMOS battery is not a simple remote hands job like a power cycle or drive swap when you are 1,500 miles away. That is why we pushed back our Dual AMD EPYC 7601 review. I refused to review a server or a chip while AMD AGESA was that rough, even if it meant we would not be first to publish benchmarks.

The Tyan Transport SX server was our primary 1P benchmarking system for some time. However, it later transitioned into another role: we hammered the box in the lab to see how long it would run before failing. After the AGESA updates in 2017, we constantly looped through different Docker benchmark containers to see when it would fail.

By mid-2018, we had started to deploy EPYC in our production web hosting setup. First, we used the systems as a backup server; then more nodes came online for hosting and some of the other bits. As I am writing this, we are around 50/50 Intel Xeon and AMD EPYC. This server went a long way in proving that the new platform can be trusted.

On December 2, 2019, we needed to physically move the box. When we logged back in, the server had been dutifully looping benchmarks for over 725 days (we let it hit 726 days before moving it). It had zero errors in that time, which is impressive, but it is what one would expect from an Intel Xeon server.

Final Words

The AMD EPYC 7002 series launch was completely different. Everything just worked for us. A huge amount of maturation happened on the EPYC 7001 platforms. In interviews, the AMD team talks about getting the ecosystem primed. This is a great example of how far that has come.

For those wondering whether the AMD EPYC platform can run 24×7, this is a great example of it running without issue for longer than most servers practically will. Today, servers are getting more frequent security updates and patches. Even with live patching, sometimes a reboot is required. 726 days, or essentially two years, is a great result that frankly exceeded our team’s expectations.

“Today, servers are getting more frequent security updates and patches.”

With Intel’s 243 security vulnerabilities, it would be interesting to know how long Intel-based servers ran before getting patched and rebooted.

I remember the old days, when our admins got misty-eyed as they were about to dismantle a Linux server after 4-5 years and found that it hadn’t been rebooted once during its operation: “Look what a stable system I built!”.

Today they’d get fired if a box exceeded one month of uptime, because it would mean it didn’t get the PCI-DSS-prescribed monthly patches that spare us from having to launch full-scope vulnerability management.

While those trusty Xeons ran for years without a single hitch, the mission-critical and fault-tolerant Stratus boxes ran almost as well, except that every couple of days a technician would come and swap a board, because the service processor had detected some glitch. Of course, being fault tolerant, the machine didn’t have to be stopped in the process, but I never felt quite comfortable about that open heart surgery.

Then one day the only thing that wasn’t redundant in those Stratus machines failed: the clock or crystal on the backplane. You pretty obviously can’t have two clocks in a computer, and since it was such a primitive part, it was assumed it could never fail. In fact, there wasn’t even a spare part on the continent. We had to sacrifice a customer’s standby machine to get ours running again …and went with software fault tolerance ever after.

Yet 15 years later, I am more ready to put my faith in hardware again: even if the best of today’s hardware is sure to fail daily at cloud scale, my daily exposure to such failures is anecdotal, while cluster failures on patches and upgrades seem scarily regular.

Why would someone use a physical machine rather than a cloud instance? You should also include your network speed. Nano nodes take a significant amount of bandwidth.
