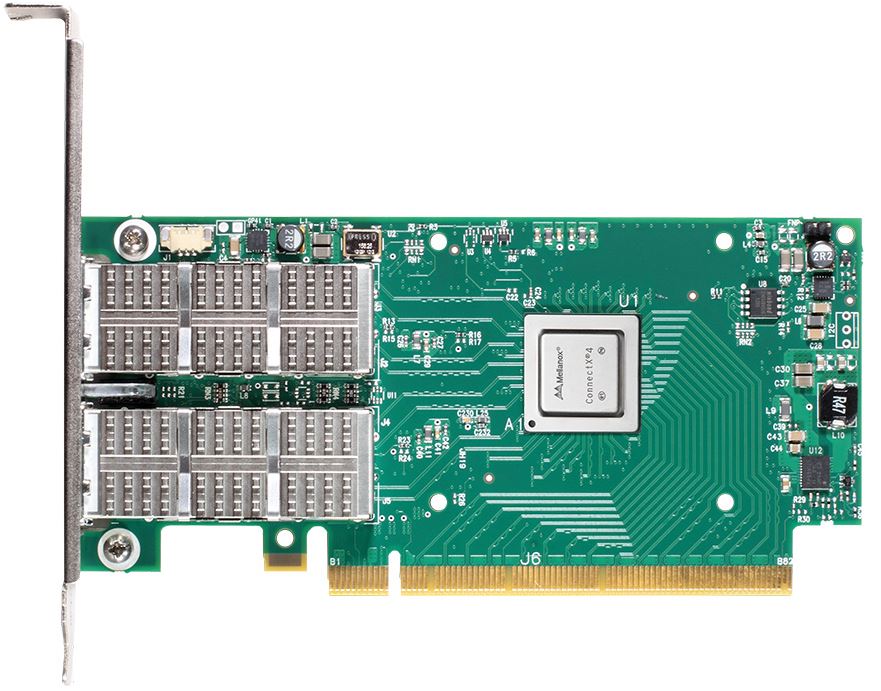

I wanted to take a few moments to address Intel’s purported $5.5B-$6B bid for Mellanox. According to Israeli media, Intel has bid $5.5B-$6B for the interconnect company. Since we cover Intel, including its competing Omni-Path fabric, and today’s story was going to be a Mellanox ConnectX-5 100GbE/EDR Infiniband adapter review (now pushed to a later date), I wanted to provide some product perspective.

HPC Fabric Focus

From an HPC fabric perspective, Omni-Path and Infiniband are locked in something of a holy war. Both fabrics are used mostly in high-performance computing, and a common proxy for that market is the Top500 list of the most powerful supercomputers. On that list, neither side is clearly winning. Indeed, the #1 system on the list uses Mellanox Infiniband as its interconnect, but there is much more to this story.

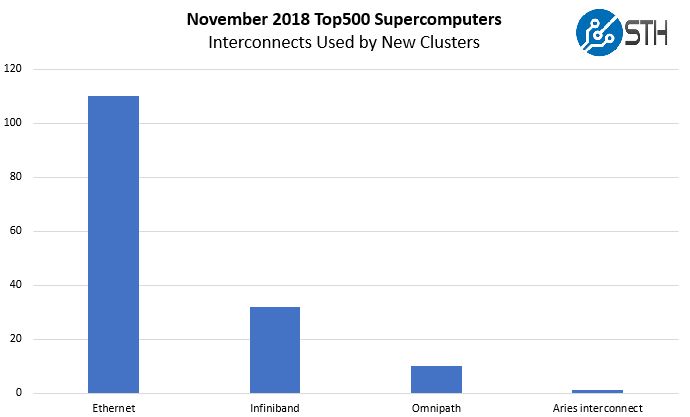

We highlighted a concerning trend again in our piece: Top500 November 2018 Our New Systems Analysis and What Must Stop. Here is one chart from that:

The point here is that while Infiniband and Omni-Path are battling over new systems, neither is really winning. Among the new entries on the November 2018 Top500 list, the vast majority of systems used Ethernet. We noted that this was driven by vendors, especially Lenovo, carving out portions of clusters delivered to cloud customers, running Linpack on them, and submitting each as a “system” to the Top500. That is simply stat padding. The next day, these Ethernet clusters stop running scientific code and go back to serving as web and database servers, which has many in the HPC community asking why they are included at all.

Infiniband and Omni-Path are fighting a war that Ethernet is winning.

For the premier HPC systems, there is Infiniband, now at 200Gbps with ConnectX-6. Omni-Path is at 100Gbps, though Intel has hinted that 200Gbps OPA200 interconnects are coming. These, along with custom interconnects from companies like Cray, will continue to have a place. In scientific computing, a large share of TCO goes to moving data and to the fabric itself. Freed from the need to service the Internet, Infiniband and Omni-Path can offer lower latency and feature sets that make them a top choice for the highest-end systems. At the same time, the hyper-scale world uses Ethernet extensively, which is part of what is enabling these large clusters to enter the Top500 list.

Intel purchasing Mellanox may doom OPA or IB, but the need in the HPC community is still there. Beyond compute, Infiniband (and by extension OPA) started largely as a storage interconnect. For high-performance storage, Infiniband is still the fabric many solutions are built upon, whether from large vendors or using open source software-defined solutions. Given the market size, one, if not both, can survive a merger, and our bet would be on the Mellanox IP.

For those ringing antitrust alarm bells, the chart above should quell those concerns. The Top500 is now dominated by Ethernet, for which there are many vendors. Further, other companies like Cray can enter and successfully compete. There is a place for both Infiniband/OPA and Ethernet, but the cloud players tend to use Ethernet.

While the Infiniband and Omni-Path interconnect families are important, they are not the entire reason for the deal.

Ethernet Networking Focus

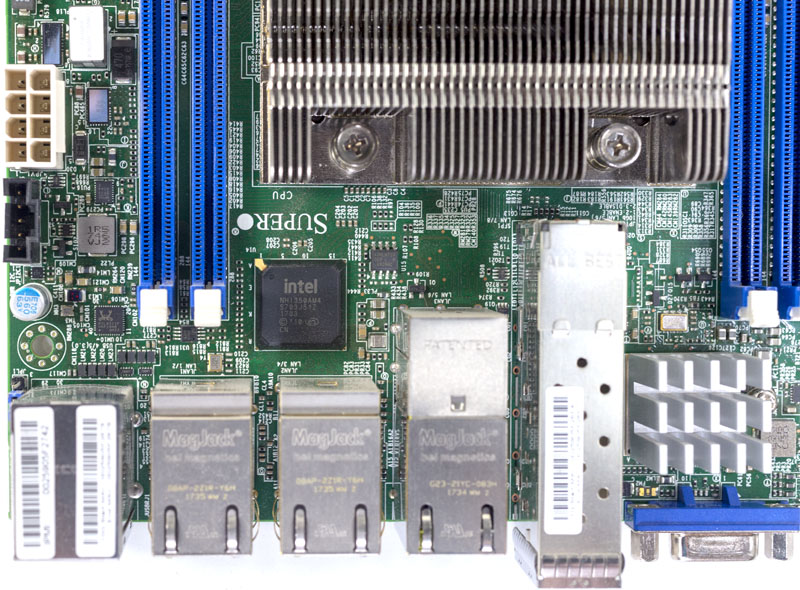

One area I have started to push hard on is Intel’s networking. For 1GbE, Intel set what is more or less the gold standard. There are less expensive chips out there from companies like Realtek, but in the server industry, Intel 1GbE is a widely accepted solution. The NICs may not be perfect, but if you have an Intel i350 or i210 NIC, or a previous-generation 82574L, it will be supported in just about every OS.

On the 10GbE front, Intel maintained a leadership position. Indeed, even our recent Gigabyte MZ01-CE0 review featured an AMD EPYC platform using Intel X550 10GbE. With 10GbE, the choice between SFP+ and 10Gbase-T became a bigger question. Also, as network speeds scaled, companies like Chelsio and Solarflare arrived with more offload functions so that Intel Xeon CPUs did not need to handle all of the packet processing.

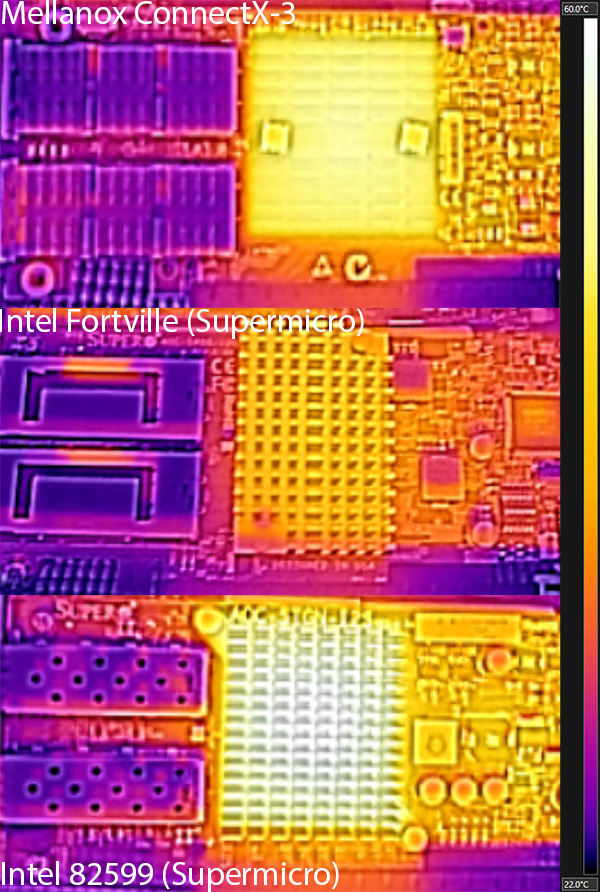

When the industry moved to 40GbE, the Intel Fortville solution was indeed interesting. We did two early (2014-era) Intel Fortville pieces: 40GbE Intel Fortville XL710: Networking will never be the same and Intel Fortville: Lower Power Consumption and Heat. Fortville had a reasonable cost and low power consumption, making it highly attractive.

A few things happened at 40GbE. Intel Fortville NICs had a bug that caused the company to stop shipments and re-spin. During the several quarters Intel was out of the market, Mellanox 40GbE NICs, along with NICs from other companies, were in high demand.

Intel Fortville (XL710) is a 40GbE solution with relatively limited offload capabilities. That meant lower heat and power consumption, as we showed in our 2014 article. At the same time, 40GbE speeds are high enough that doing packet processing on the CPU becomes expensive, potentially tying up costly Xeon cores on what is fundamentally a throughput problem.
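To put that CPU cost in perspective, here is a back-of-envelope sketch of the worst-case packet rate a host must sustain at line rate. It assumes minimum-size 64-byte Ethernet frames plus the standard 20 bytes of on-wire overhead (preamble, start-of-frame delimiter, and inter-frame gap). The 2.0 GHz clock used to convert nanoseconds into cycles is a hypothetical figure, not a specific Xeon SKU, and the function names are illustrative only. Real traffic with larger frames is far less demanding, so treat this as an upper bound on per-packet work.

```python
# On-wire overhead per Ethernet frame that is not counted in the frame size:
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte minimum inter-frame gap.
WIRE_OVERHEAD_BYTES = 7 + 1 + 12


def max_packet_rate(line_rate_gbps: float, frame_bytes: int = 64) -> float:
    """Theoretical packets per second at full line rate.

    frame_bytes covers the Ethernet header through the FCS;
    64 bytes is the minimum legal frame size.
    """
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return line_rate_gbps * 1e9 / bits_on_wire


def ns_per_packet(line_rate_gbps: float, frame_bytes: int = 64) -> float:
    """Time available per packet, in nanoseconds, to keep up with line rate."""
    return 1e9 / max_packet_rate(line_rate_gbps, frame_bytes)


if __name__ == "__main__":
    CLOCK_GHZ = 2.0  # hypothetical core clock, only used to convert ns into cycles
    for speed in (10, 40, 100):
        budget = ns_per_packet(speed)
        print(f"{speed:>3}GbE: {max_packet_rate(speed) / 1e6:6.2f} Mpps, "
              f"{budget:5.2f} ns/packet, ~{budget * CLOCK_GHZ:.0f} cycles at {CLOCK_GHZ} GHz")
```

Under these assumptions, 40GbE works out to roughly 59.5 million packets per second, or about 16.8 ns per packet, which is only a few dozen cycles on a single core. That is why offload, or spreading the work across many cores, becomes attractive at these speeds.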

During the transition to 25GbE/50GbE/100GbE, Intel’s innovation slowed considerably. Intel released the 25GbE XXV710 series chips and adapters. At 100GbE, the company has been very quiet, even after showing off cards at IDF in 2015. Meanwhile, Mellanox has iterated from its first-generation ConnectX-4 100GbE parts to its 200Gbps-capable ConnectX-6 products. Intel went from leader, to on par, to thoroughly behind in high-speed Ethernet networking.

On the switch side, Intel has switch IP from its Fulcrum acquisition. We actually reviewed a system with Fulcrum in our Supermicro MicroBlade Review Part 3: 10Gb Networking with MBM-XEM-001. Fulcrum has not scaled, and the current switch silicon lineup, launched in or around 2013, is set to be discontinued in 2021.

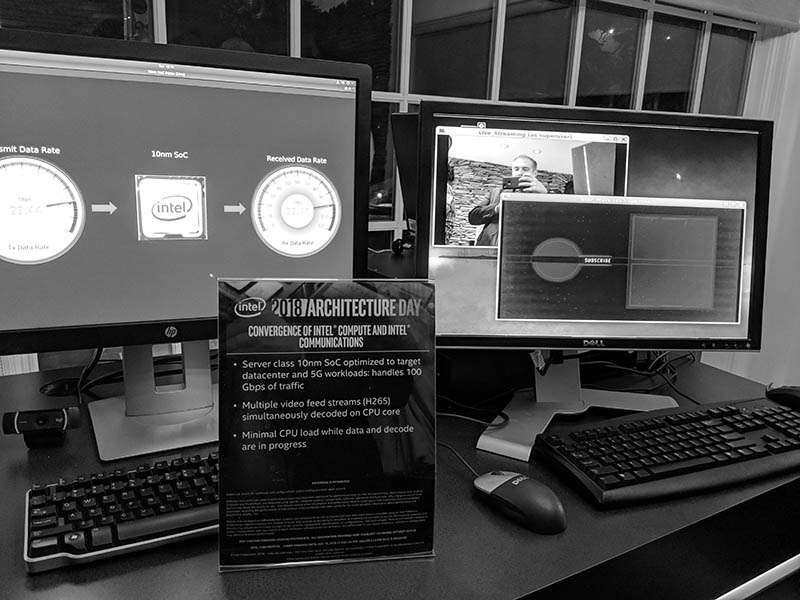

Intel still enjoys broad software and OS support for its NICs. Indeed, products like the Intel Atom C3000, the Xeon D-1500 and Xeon D-2100, and its mainstream Intel Xeon SP Lewisburg PCH chips all integrate 10GbE networking. We know that Snow Ridge, which we spotted earlier, will support up to 100Gbps networking (although we are not sure how), as we showed in our piece: Intel shows off new 10nm server SoC with 100Gbps and H265 offload.

The bottom line is that Intel needs 100GbE NIC and switch silicon sooner rather than later if it wants to remain relevant in networking. The industry is already eyeing the next jump to 200GbE and 400GbE speeds. Indeed, Dell EMC is deploying a 200Gbps fabric, as we saw in our piece: In-depth Dell EMC PowerEdge MX Review.

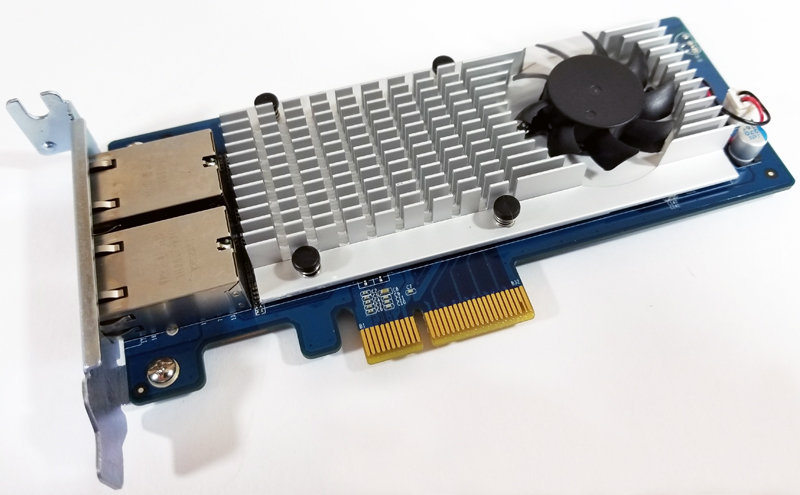

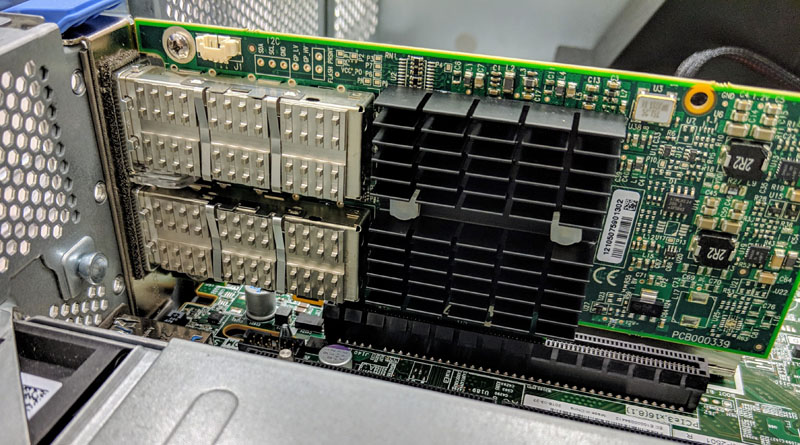

Mellanox just happens to make the ConnectX-4 and ConnectX-5 cards that we use in the STH lab for all of our 100GbE nodes. It also has switch silicon for both Infiniband and Ethernet, along with the IP to bridge and provide gateways between the fabrics. Intel tried to kill Infiniband by offering integrated OPA fabric for pennies on the dollar compared to Mellanox PCIe NICs. Instead, it has found itself needing Mellanox’s vastly superior Ethernet switch and NIC portfolio.

Final Words

As Editor-in-Chief at STH, I work with folks from both Intel and Mellanox. There is a human side to any acquisition, and whatever the result, I hope they all do well.

At the same time, the belief that Intel’s networking portfolio is strong entering 2019 is outdated. Intel does not have a traditional PCIe x16 100GbE adapter card on ARK and only has its multi-host adapter products available. For some perspective, in the STH lab we are phasing out 40GbE, and all dual-socket-capable machines are getting 100GbE. Intel does not have a product that we can use, so unlike the 40GbE generation, we are using Mellanox exclusively for our 100GbE adapters. A 100GbE Mellanox adapter costs us more than an Intel Xeon Silver CPU, but Intel has no alternative for us.
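Why an x16 card specifically? A quick calculation, assuming PCIe 3.0’s 8 GT/s per lane (16 GT/s for PCIe 4.0) and 128b/130b line encoding, shows that an x8 slot cannot feed even a single 100GbE port at line rate, while an x16 slot can feed one port but not two. The figures ignore transaction-layer protocol overhead, so real-world throughput is somewhat lower; the function names are illustrative only.

```python
# PCIe 3.0 and 4.0 both use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130
TRANSFER_RATE_GT_S = {"3.0": 8.0, "4.0": 16.0}


def pcie_link_gbps(gen: str, lanes: int) -> float:
    """Usable bandwidth per direction, in Gbps, after line encoding.

    Ignores TLP/DLLP protocol overhead, so real throughput is somewhat lower.
    """
    return TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY * lanes


def fits(gen: str, lanes: int, ports: int, port_gbps: float = 100.0) -> bool:
    """True if the link can carry every port at line rate in one direction."""
    return pcie_link_gbps(gen, lanes) >= ports * port_gbps


if __name__ == "__main__":
    for gen, lanes, ports in (("3.0", 8, 1), ("3.0", 16, 1), ("3.0", 16, 2), ("4.0", 16, 2)):
        bw = pcie_link_gbps(gen, lanes)
        verdict = "fits" if fits(gen, lanes, ports) else "bottlenecked"
        print(f"PCIe {gen} x{lanes}: {bw:6.1f} Gbps -> {ports} x 100GbE {verdict}")
```

Under these assumptions, PCIe 3.0 x16 yields about 126 Gbps per direction. That is enough for one 100GbE port, but it is why dual-port 100GbE cards on PCIe 3.0 cannot run both ports at full speed, and why PCIe 4.0 (about 252 Gbps for x16) matters for the next generation.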

25GbE is great, and Intel can address that market, but it has a very noticeable product hole in its networking business at 100Gbps and beyond. While Intel was in the high-performance networking business four years ago, most of its volume today is in what we would now consider low-performance networking at sub-50GbE speeds.

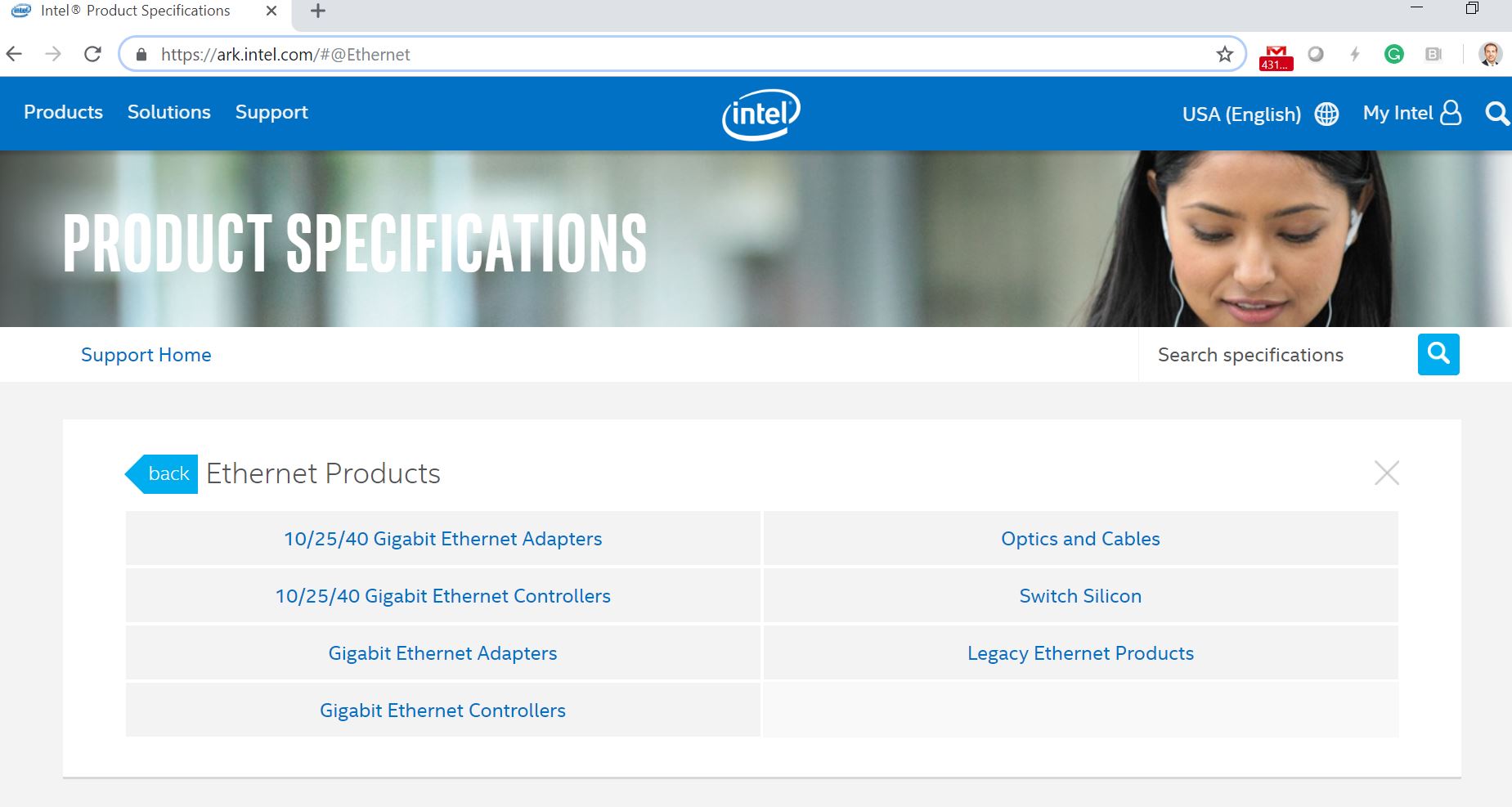

Let me leave this discussion with a simple image of Intel Ark, accessed on 2019-01-31 for the high-level categories in its Ethernet products business. High-performance networking is conspicuously absent, and Mellanox gives Intel both Infiniband as well as Ethernet adapter and switch products:

Good perspective outside of InfiniBand rules

You’re right, we buy thousands of servers a year with 100GbE and Intel doesn’t have a product for us. That’s millions in revenue that they’re missing out on just from us by not having a product in-market.

Everyone knows Lenovo is cheating to make itself look relevant in the Top500. Lenovo’s cheating dilutes IB and OPA fabric impact.

Our network requirements are a little more mundane, so 10GbE still suits us fine. We standardized on Intel NICs several years ago when we first moved to 10GbE and have been pretty pleased. Our next servers, though, will use Mellanox or QLogic even for just 10GbE, simply because Intel hasn’t released a NIC that can do any form of RDMA. With VMware and Hyper-V both adding support for this in different areas, it’s just too shortsighted to buy NICs that aren’t RDMA capable.

What’s puzzling is that Intel already has InfiniBand IP it acquired from Cavium a few years back, so it is unclear why it has to buy Mellanox now.

The company I work for just moved from 1GbE to 25GbE using Mellanox ConnectX-4 Lx adapters connected to the Mellanox SN2100 switch via DAC breakout cables. One of the biggest reasons for us moving to Mellanox instead of HPE, Dell, Cisco, etc… is that, as a small business, we cannot afford to be vendor-locked by the SFPs. Since Mellanox doesn’t lock its SFPs, we can get DACs that are vastly less expensive. @Jonathan, when your company gets its new servers, I highly recommend going with Mellanox and 25GbE cards. You will have backwards compatibility with your 10GbE and can move to faster Ethernet at a later date rather than being stuck at 10GbE.

Does the absence of 100G really matter for INTC when its servers still only support PCIe 3? Isn’t that the bottleneck, maxing out at 96 GB/s? Thanks,

Don’t forget that Intel has 100GbE integrated directly onto Xeon Scalable F CPUs, available as a small incremental cost option on the CPU. These CPUs will completely remove the need for an add-on NIC as the world moves to 100GbE.

Is this a rumour only? I hope it is not true.

Like Marc said above, INTC bought QLogic IB assets and some interconnect licensing from Cray, not to mention cleaning out OPA bugs for OPA 2 with better scale-out performance. Mellanox had 56Gb IB that helped against Intel’s 40Gb, but that’s history.

IMHO they’re too late to start playing in the 100GE party.

They’re still going to need commodity >25Gbps single-lane NICs, but IMHO they should really be concentrating on COBO & silicon photonics, e.g.:

https://www.nextplatform.com/2019/01/29/first-silicon-for-photonics-startup-with-darpa-roots/

(of course, the two are not mutually exclusive, especially when you’re a shop as big as Intel)

@Paul

The “F” is Omnipath only, not Ethernet, this is the whole story.

Also next gen Xeons won’t have “F” variants.

It would be great to have Mellanox on mainboards instead of Intel, since that is what I usually swap in anyway, rendering the onboard Intel NICs redundant.

NVIDIA To Acquire Mellanox For $6.9 Billion

Hi qrusher – here you can find the product analysis of the NVIDIA and Mellanox acquisition.