At ISC 2018, Intel made a number of incremental announcements in the field of the world’s top supercomputers. The first was that it made some pedestrian gains in the supercomputing space with single digit shares of gains in the Top500 list. The expected, but perhaps more impactful, announcement was the second generation of Intel Omni-Path at 200Gbps, or Intel OPA200, which is expected in 2019.

Intel Xeon in the June 2018 Top500 List

With ISC 2018, we have the June 2018 Top500 list coming out. Here, Intel is now up to 95% of all Top500 systems and 97% of the new systems, with the most notable exception being the top system, Summit. You can read about Summit in our piece, US DoE Announces Summit with IBM Power and NVIDIA Tesla.

Intel Xeon Scalable was in only 37 of the 129 new Intel Xeon-based Top500 systems. For a sense of scale, there were 133 new Top500 systems in total, giving Intel a 97% share of the new systems. Procurement times are long in this space, but one might reasonably have expected a higher percentage to use Xeon Scalable.
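To put those counts side by side, here is a quick back-of-the-envelope sketch using only the three figures quoted above. The variable names are ours, chosen for illustration, and are not from Intel or the Top500 list.

```python
# Counts quoted in the article for the June 2018 Top500 list.
new_systems_total = 133   # all new Top500 systems
new_intel_systems = 129   # new systems using Intel Xeon
new_xeon_scalable = 37    # new Intel systems using Xeon Scalable

# Intel's share of all new systems (the "97%" figure).
intel_share_of_new = new_intel_systems / new_systems_total

# Xeon Scalable's share of the new Intel systems.
scalable_share_of_intel = new_xeon_scalable / new_intel_systems

# Xeon Scalable's share of all new systems.
scalable_share_of_new = new_xeon_scalable / new_systems_total

print(f"Intel share of new systems:         {intel_share_of_new:.1%}")
print(f"Xeon Scalable share of new Intel:   {scalable_share_of_intel:.1%}")
print(f"Xeon Scalable share of all new:     {scalable_share_of_new:.1%}")
```

In other words, a bit under 3 in 10 of the new Intel systems are on the current-generation platform, which is the gap the paragraph above is pointing at.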

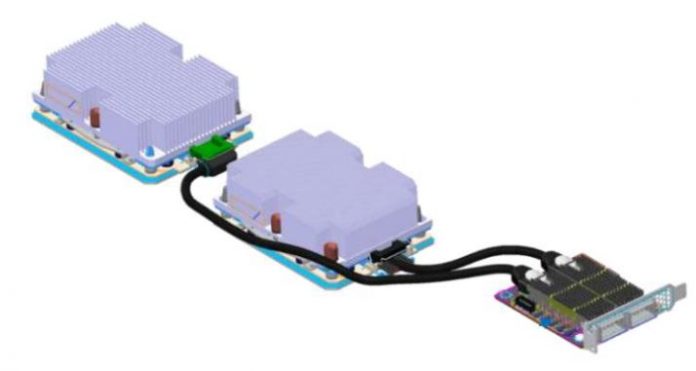

Intel Omni-Path 200Gbps or OPA200 Announced

At the show, the company is announcing Intel Omni-Path 200Gbps, or OPA200. Details are sparse at best, and we have a sense of why. The company is saying that OPA200 will arrive in 2019, but that presents a significant challenge for Intel. We expect the Intel Xeon Scalable Cascade Lake generation to still be 14nm and to still support PCIe 3.0, not PCIe 4.0. Enabling Intel OPA200 leaves Intel with two options. The first is to create cards that use two PCIe 3.0 x16 connectors, as Mellanox does, which is certainly possible. The second is to add a new server CPU into the mix with an on-package Altera IP part to handle PCIe 4.0 so that there are slots capable of 200Gbps. If you subscribe to SemiAccurate, you probably have an idea of how those scenarios will play out. Aside from those two scenarios, OPA200 will be very difficult to support since PCIe 3.0 x16 slots cannot handle 200Gbps interconnects at full bandwidth.
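The PCIe bottleneck is easy to check with rough numbers. The sketch below uses the published PCIe signaling rates (8 GT/s per lane for PCIe 3.0, 16 GT/s for PCIe 4.0) and the 128b/130b line encoding both generations use. It ignores packet and protocol overhead, so real-world throughput is lower still. The function name `pcie_link_gbps` is ours, for illustration only.

```python
def pcie_link_gbps(gt_per_s: float, lanes: int) -> float:
    """Approximate one-direction bandwidth of a PCIe 3.0/4.0 link in Gbps.

    Accounts for 128b/130b line encoding only; TLP/DLLP protocol
    overhead is ignored, so this is an upper bound.
    """
    encoding_efficiency = 128 / 130
    return gt_per_s * lanes * encoding_efficiency


TARGET_GBPS = 200  # OPA200 data rate quoted by Intel

pcie3_x16 = pcie_link_gbps(8, 16)    # single PCIe 3.0 x16 slot
dual_pcie3_x16 = 2 * pcie3_x16       # card spanning two PCIe 3.0 x16 slots
pcie4_x16 = pcie_link_gbps(16, 16)   # single PCIe 4.0 x16 slot

for name, gbps in [
    ("PCIe 3.0 x16", pcie3_x16),
    ("2x PCIe 3.0 x16", dual_pcie3_x16),
    ("PCIe 4.0 x16", pcie4_x16),
]:
    verdict = "enough" if gbps >= TARGET_GBPS else "not enough"
    print(f"{name}: {gbps:.1f} Gbps ({verdict} for {TARGET_GBPS} Gbps)")
```

A single PCIe 3.0 x16 slot tops out at roughly 126 Gbps before protocol overhead, which is why the two scenarios above, either two PCIe 3.0 x16 connectors or a PCIe 4.0 x16 slot, are the realistic paths to feeding a 200Gbps fabric port.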

Here is the blurb on OPA200 from Intel.

“For both supercomputing and traditional HPC clusters, Intel continues to deliver and innovate high-speed interconnect technologies that enable cost-effective deployment of HPC systems. Intel shared at ISC its next-generation Omni-Path Architecture (Intel OPA200), coming in 2019, which will provide data rate speeds up to 200 Gb/s, doubling the performance of the previous generation. This next-generation fabric will be interoperable and compatible with the current generation of Intel OPA. Intel OPA200’s high-performance capabilities and low-latency at scale will provide system architects the ability to scale to tens of thousands of nodes while benefiting from improved total cost of ownership.” (Source: Intel)

Final Words

Between Intel’s somewhat bland announcements and NVIDIA’s underwhelming ISC 2018 release, ISC 2018 is turning into a sleeper. We expect Supercomputing 2018 to be significantly more exciting in terms of announcements. Still, there is a major void in the HPC space as vendors are between generations. 2019 and 2020 are largely the ramp to exascale, which we expect to start becoming a reality in 2021 and which will require major technological advancements. The US DOE Summit supercomputer is the crown jewel of ISC 2018, and it delivers only 1/5th of an exaFLOP of performance.

“The first was that it made some pedestrian gains in the supercomputing space with single digit shares of gains in the Top500 list.”

When you already dominate with 90+% share, a single-digit gain is literally the only possible improvement that can occur. It would be more accurate to look at Intel’s gains in the context of the percentage drop in systems that *don’t* include the hardware, and that’s actually pretty impressive considering the hype is all about Nvidia GPUs but the real-world deployments are increasingly using standard x86 hardware that doesn’t require CUDA code to work.