With the launch of Intel Xeon Scalable, Intel added an intriguing new feature: integrated Omni-Path fabric. We touched upon the feature during the SKU list and architecture discussions for Skylake. Essentially, Intel is launching “F” SKUs with integrated Omni-Path fabric. The F SKU variant is interesting for two reasons. First, it adds 100Gbps fabric for only $155 or so per CPU compared to the non-F variants. Second, this 100Gbps fabric does not take any of the standard 48 PCIe 3.0 lanes. Instead, Intel Xeon Scalable F SKUs have an additional PCIe x16 link for this fabric, making the effective total 64 PCIe lanes per CPU. What we did not realize is that the fabric-enabled parts do not work in every server and motherboard for the Intel Xeon Scalable family.
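To make the lane math concrete, here is a minimal back-of-the-envelope sketch. The lane counts come from the paragraph above. The 8GT/s per-lane rate and 128b/130b encoding are standard PCIe 3.0 figures, and the function names are hypothetical, chosen only for illustration. The result is raw link bandwidth in one direction, before any protocol overhead, so treat it as a rough upper bound rather than a measured number.

```python
# Rough PCIe 3.0 math for the F SKUs (illustrative only, not a benchmark).
STANDARD_LANES = 48        # PCIe 3.0 lanes on every Xeon Scalable CPU (from the article)
FABRIC_LANES = 16          # extra x16 link reserved for the on-package HFI (from the article)
GT_PER_SEC_PER_LANE = 8.0  # PCIe 3.0 signalling rate per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding used by PCIe 3.0


def effective_lanes(has_fabric: bool) -> int:
    """Total PCIe lanes per CPU, counting the dedicated fabric link on F SKUs."""
    return STANDARD_LANES + (FABRIC_LANES if has_fabric else 0)


def raw_link_gbps(lanes: int) -> float:
    """One-direction raw bandwidth in Gbit/s, before protocol overhead."""
    return lanes * GT_PER_SEC_PER_LANE * ENCODING_EFFICIENCY


if __name__ == "__main__":
    print("non-F lanes:", effective_lanes(False))
    print("F SKU lanes:", effective_lanes(True))
    print("x16 raw Gbps: %.1f" % raw_link_gbps(FABRIC_LANES))
```

Under these assumptions the dedicated x16 link has headroom above the 100Gbps line rate of the fabric port, which is consistent with Intel giving the HFI its own link instead of sharing the standard 48 lanes.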

Intel Xeon Scalable Omni-Path Fabric Chips

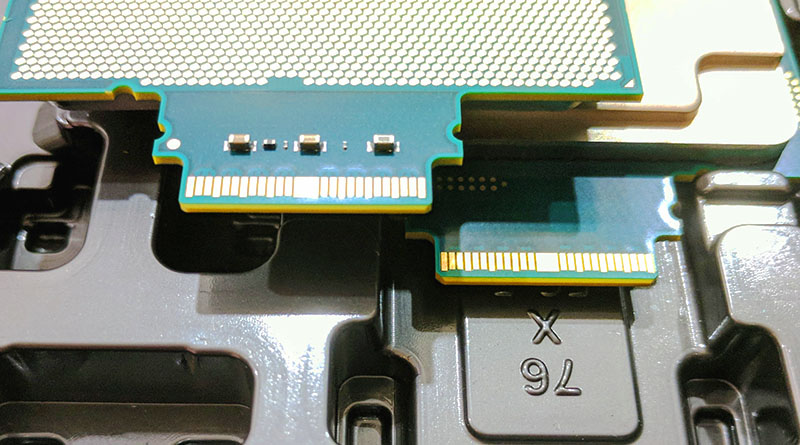

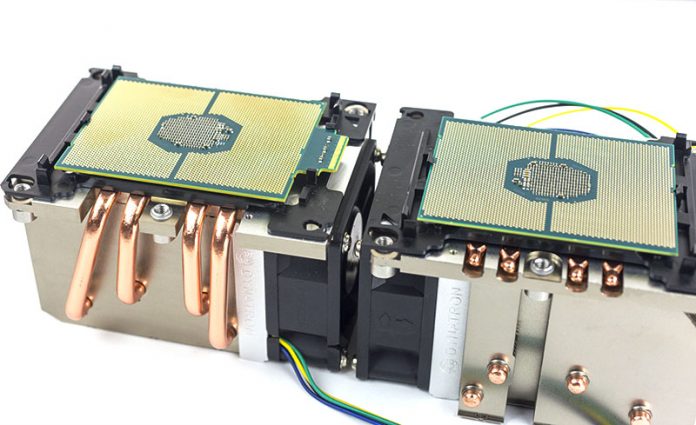

Since many of our readers are interested in the Omni-Path fabric (OPA) but do not yet have the hardware, we wanted to show what the OPA SKUs look like. The fabric-enabled SKUs have a protruding PCB that provides room for an on-package OPA HFI as well as an external connector.

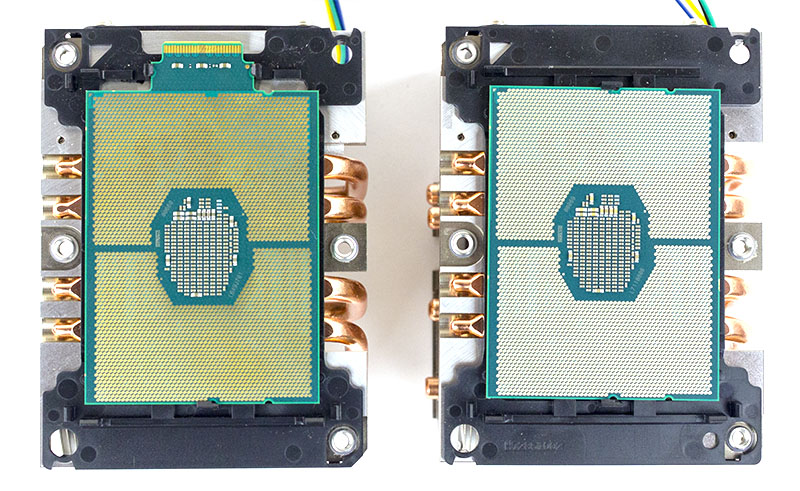

You can see that the protrusion has electrical connections on both its top and bottom. Here is a view of the top and bottom of Intel Xeon Silver 4110 chips which cannot have integrated fabric at this time:

That protrusion has an impact beyond the physical package. One needs a different LGA3647 retention bracket for Omni-Path enabled parts. On the left is an OPA package and on the right is a non-OPA package:

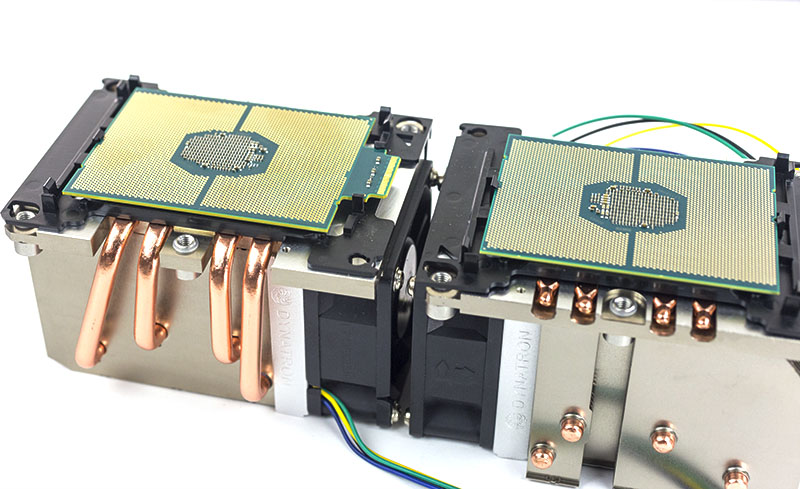

One can see that one of the standard notches on non-OPA packages is replaced by the PCB protrusion. As a result, there are two notches on the protrusion which must line up with the retention bracket. Here is an angled view to provide you with a better vantage point of the difference. We oriented the chips so that the side where the protrusion is, or would be, faces the middle of the two assemblies.

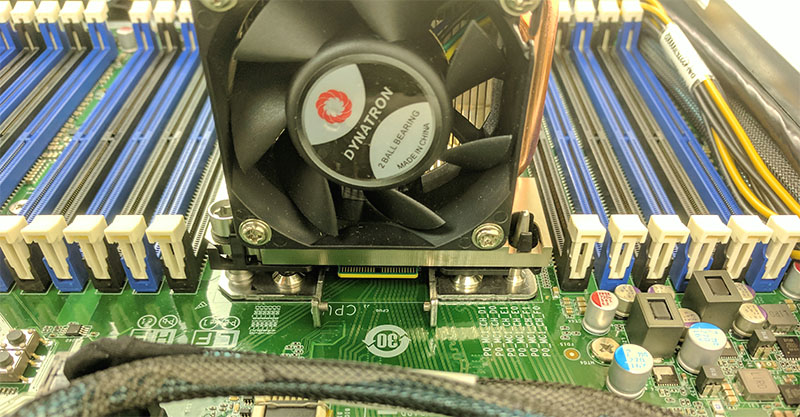

Different vendors have different retention mechanisms. The Dynatron 2U coolers we are using come packaged with both retention brackets.

Not Every Server Takes Intel Xeon Scalable Fabric SKUs

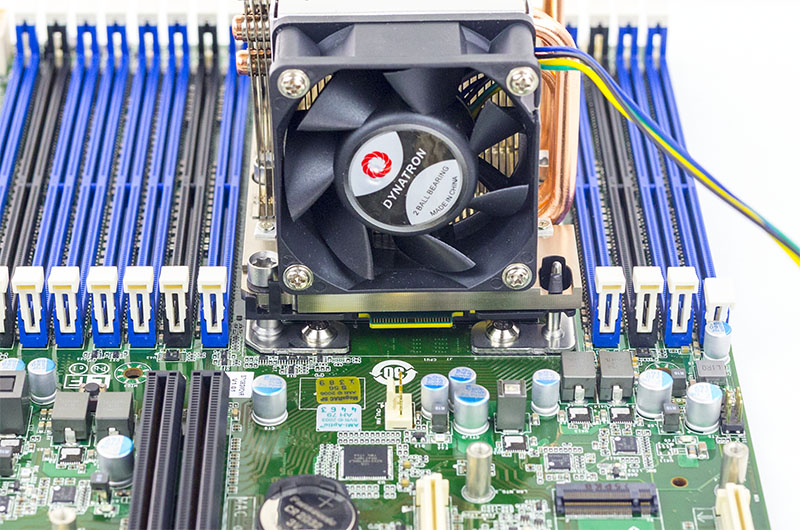

Our initial understanding that every LGA3647 server and motherboard would accept the Omni-Path fabric SKUs was false. The assemblies do fit. For example, here is the same Dynatron/OPA-enabled Xeon assembly in a motherboard that does not support the fabric SKUs.

It physically fits. In this instance, however, there is no metal socket retention clip for the Omni-Path cable. That clip can be seen on the following motherboard with the same assembly:

For those who are unaware of how this works, there is a cable that clips onto the Xeon’s protruding connector and into the socket retention. The other side attaches to the OPA ports on the chassis. OPA is not being routed through the socket and motherboard.

It turns out that supporting the Intel Omni-Path enabled Xeons takes more than just the right physical socket. We have been told by server vendors that there are aspects such as additional power circuitry that must be present for a server or motherboard to support the OPA parts. To save costs, especially on systems intended for Intel Xeon Silver and Bronze parts that do not have integrated fabric, server and motherboard makers may elect to forgo this additional circuitry.

It is a trade-off that makes sense, but it means you do need a supporting server or motherboard for Intel Xeon Scalable CPUs with integrated Omni-Path fabric.

Intel Xeon Scalable Skylake Omni-Path Fabric lspci output

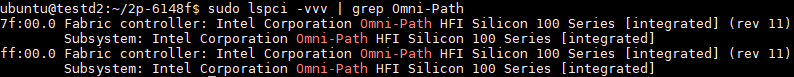

Our readers will likely want to see what the Omni-Path SKUs look like. We previously showed two other lab OPA nodes using Broadwell with Omni-Path and Intel Optane/ P4800X, but here is what it looks like for the on-package version:

Here is the full lspci -vvv output for both of the Omni-Path controllers:

7f:00.0 Fabric controller: Intel Corporation Omni-Path HFI Silicon 100 Series [integrated] (rev 11)

Subsystem: Intel Corporation Omni-Path HFI Silicon 100 Series [integrated]

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR+ FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- SERR-

Latency: 0

Interrupt: pin A routed to IRQ 246

Region 0: Memory at c0000000 (64-bit, non-prefetchable) [size=64M]

Expansion ROM at c4000000 [disabled] [size=128K]

Capabilities: [40] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0+,D1-,D2-,D3hot-,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [70] Express (v2) Endpoint, MSI 00

DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s unlimited, L1 <8us

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+

DevCtl: Report errors: Correctable+ Non-Fatal+ Fatal+ Unsupported+

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+ FLReset-

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- UncorrErr- FatalErr- UnsuppReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L1, Exit Latency L0s <4us, L1 <64us

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes Disabled- CommClk+

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s, Width x16, TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Range ABCD, TimeoutDis+, LTR-, OBFF Not Supported

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis-, LTR-, OBFF Disabled

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance De-emphasis: -6dB

LnkSta2: Current De-emphasis Level: -3.5dB, EqualizationComplete+, EqualizationPhase1+

EqualizationPhase2+, EqualizationPhase3+, LinkEqualizationRequest-

Capabilities: [b0] MSI-X: Enable+ Count=256 Masked-

Vector table: BAR=0 offset=00100000

PBA: BAR=0 offset=00110000

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- NonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- NonFatalErr+

AERCap: First Error Pointer: 00, GenCap+ CGenEn- ChkCap+ ChkEn-

Capabilities: [148 v1] #19

Capabilities: [178 v1] Transaction Processing Hints

Device specific mode supported

No steering table available

Kernel driver in use: hfi1

Kernel modules: hfi1

and

ff:00.0 Fabric controller: Intel Corporation Omni-Path HFI Silicon 100 Series [integrated] (rev 11)

Subsystem: Intel Corporation Omni-Path HFI Silicon 100 Series [integrated]

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR+ FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- SERR-

Latency: 0

Interrupt: pin A routed to IRQ 271

Region 0: Memory at f4000000 (64-bit, non-prefetchable) [size=64M]

Expansion ROM at f8000000 [disabled] [size=128K]

Capabilities: [40] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0+,D1-,D2-,D3hot-,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [70] Express (v2) Endpoint, MSI 00

DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s unlimited, L1 <8us

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+

DevCtl: Report errors: Correctable+ Non-Fatal+ Fatal+ Unsupported+

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+ FLReset-

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- UncorrErr- FatalErr- UnsuppReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L1, Exit Latency L0s <4us, L1 <64us

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes Disabled- CommClk+

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s, Width x16, TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Range ABCD, TimeoutDis+, LTR-, OBFF Not Supported

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis-, LTR-, OBFF Disabled

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance De-emphasis: -6dB

LnkSta2: Current De-emphasis Level: -3.5dB, EqualizationComplete+, EqualizationPhase1+

EqualizationPhase2+, EqualizationPhase3+, LinkEqualizationRequest-

Capabilities: [b0] MSI-X: Enable+ Count=256 Masked-

Vector table: BAR=0 offset=00100000

PBA: BAR=0 offset=00110000

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES+ TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- NonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- NonFatalErr+

AERCap: First Error Pointer: 00, GenCap+ CGenEn- ChkCap+ ChkEn-

Capabilities: [148 v1] #19

Capabilities: [178 v1] Transaction Processing Hints

Device specific mode supported

No steering table available

Kernel driver in use: hfi1

Kernel modules: hfi1
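For readers who want to check their own nodes, the fields that matter in the output above are the device header, the `LnkSta` line, and the `Kernel driver in use` line. The following is a minimal sketch of pulling those out of `lspci -vvv` text. The function name `parse_hfi_links` and the abbreviated sample string are hypothetical, and the regular expressions assume the line layout shown above, so other lspci versions may need adjustments.

```python
import re

# Device header, e.g. "7f:00.0 Fabric controller: Intel Corporation Omni-Path ..."
HEADER = re.compile(r"^((?:[0-9a-f]{4}:)?[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s+([^:]+):\s+(.*)$")
# Negotiated link state; "LnkSta:" with the colon does not match "LnkSta2:".
LNKSTA = re.compile(r"LnkSta:\s+Speed\s+([\d.]+)GT/s.*?Width\s+x(\d+)")
DRIVER = re.compile(r"Kernel driver in use:\s+(\S+)")


def parse_hfi_links(lspci_text):
    """Return one dict per Omni-Path device found in `lspci -vvv` style text."""
    devices = []
    current = None
    for raw in lspci_text.splitlines():
        line = raw.strip()
        header = HEADER.match(line)
        if header:
            current = {"address": header.group(1), "name": header.group(3),
                       "speed_gts": None, "width": None, "driver": None}
            devices.append(current)
            continue
        if current is None:
            continue  # ignore anything before the first device header
        link = LNKSTA.search(line)
        if link:
            current["speed_gts"] = float(link.group(1))
            current["width"] = int(link.group(2))
            continue
        driver = DRIVER.search(line)
        if driver:
            current["driver"] = driver.group(1)
    return [d for d in devices if "Omni-Path" in d["name"]]


# Abbreviated sample built from lines in the output above.
SAMPLE = """\
7f:00.0 Fabric controller: Intel Corporation Omni-Path HFI Silicon 100 Series [integrated] (rev 11)
LnkSta: Speed 8GT/s, Width x16, TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-
LnkSta2: Current De-emphasis Level: -3.5dB, EqualizationComplete+, EqualizationPhase1+
Kernel driver in use: hfi1
ff:00.0 Fabric controller: Intel Corporation Omni-Path HFI Silicon 100 Series [integrated] (rev 11)
LnkSta: Speed 8GT/s, Width x16, TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-
Kernel driver in use: hfi1
"""

if __name__ == "__main__":
    for dev in parse_hfi_links(SAMPLE):
        print(dev["address"], dev["speed_gts"], "GT/s", "x%d" % dev["width"], dev["driver"])
```

On a live system one could feed it the real output of `lspci -vvv`, which typically needs root privileges to show the full capability list. A device that reports a narrower width or no `hfi1` driver would be worth a closer look.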

Final Words

There is a lot of misperception in the market that the Omni-Path fabric Xeon SKUs will work in every server. That is false and for good reason. It allows platform vendors to lower BOM and therefore end customer costs. At the same time, it would have been nice to not have to make this distinction while shopping for servers. We acquired a 100Gbps Omni-Path switch for the lab and now have an additional consideration for using it with the inexpensive $155 upgrade F SKUs.

Omni-Path seems to be a “white elephant” of Intel creation.

– No support for RoCE/RDMA/iWARP

– No support for CPU offload

– No bridge to other ethernet switches via QSFP/QSFP+/QSFP28 cables

So, while the low cost of $155 to add a 100G NIC is attractive, a NIC that can only talk to a limited network space is not attractive.

@patrick, the embedded link “Omni-Path and Intel Optane/ P4800X” seems to point to the wrong article

Thunderbolt all over again.

@Misha Engel Thunderbolt Enterprise edition

@BinkyTO iWARP it does. I do agree on the need for an Ethernet gateway. If only they had a VPI like feature that would be killer.

@dbTH check out that first image. That is Optane over Omni-Path fabric.

And here I think the Xeon SP Generation was going to be uncomplicated…

This post is very informative. Is there anyone who can kindly teach me:

1. Can F processor be mixed with non-F processor assuming both are of the same sku?

2. If so, what is the benefit to adopt two F processors? To have two OPA ports?

Thank you.

According to TYAN, if both sockets support Skylake-F, you can indeed have two links for a total of 200Gbps.

http://www.tyan.com/Barebones%3DFT77DB7109%3DB7109F77DV14HR-2T-NF%3Ddescription%3DEN

Can somebody help me here:

I have 2 F processors and a motherboard supporting Omni-Path. Can I simply leave the Omni-Path connector unconnected?

I have not built the machine to test yet.