Getting to 400GbE Speeds

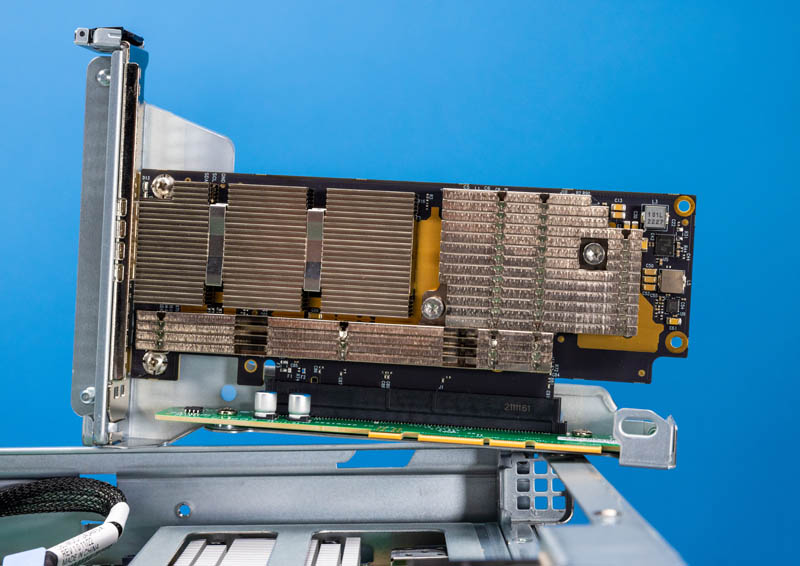

To get everything working, we used the Supermicro SYS-111C-NR and Supermicro SYS-221H-TNR systems and NVIDIA ConnectX-7 NICs from PNY. Since the demo required two servers plus a switch that can draw up to around 2.4kW, for around 4kW of total power headroom, we set everything up in the data center. The ConnectX-7 cards had to go back, so we JUST managed to get this working, thanks to an awesome STH reader who answered a Phone-a-Friend call. One of the biggest challenges is that this is an OSFP NIC.

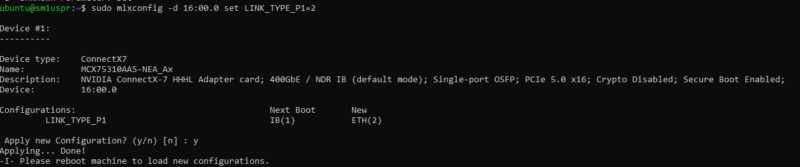

A cool feature is that the NVIDIA ConnectX-7 cards we had can run either InfiniBand or Ethernet. We tested these in InfiniBand mode as part of our ConnectX-7 piece. For this, we needed Ethernet.

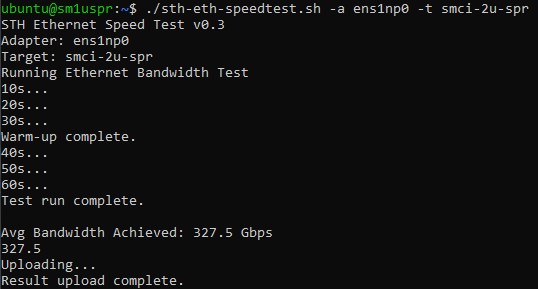

This is how far we got before having to have the NICs pulled and packed for FedEx’s impending pickup. We will quickly note that we were using Intel Xeon “Sapphire Rapids” here since those are typically easier to get running at higher speeds quickly, and we also needed a PCIe Gen5 capable processor.

While that is not a full 400GbE, we are over 200GbE by quite a bit. For some sense of what makes this challenging, 400GbE is in the same order of magnitude as the entire CPU memory bandwidth of an average desktop or notebook. With 400GbE, you can put that level of bandwidth over fiber optic cable and go much further than a few centimeters within a system.
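A rough sketch of that comparison in Python follows. The dual-channel DDR4-3200 desktop is an assumed example, not a system from the testing above, and the function names are only for illustration. Both figures are theoretical peaks that ignore protocol and controller overhead.

```python
# Back-of-the-envelope comparison of a 400GbE link and desktop memory bandwidth.
# ASSUMPTION: a dual-channel DDR4-3200 desktop with 64-bit (8-byte) channels.

def ethernet_gbytes_per_s(line_rate_gbps: float) -> float:
    """Convert an Ethernet line rate in Gbit/s to GB/s (no protocol overhead)."""
    return line_rate_gbps / 8


def dram_peak_gbytes_per_s(mega_transfers_per_s: int, channels: int,
                           bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth: transfers/s x bytes per transfer x channels."""
    return mega_transfers_per_s * 1e6 * bus_bytes * channels / 1e9


link = ethernet_gbytes_per_s(400)
desktop = dram_peak_gbytes_per_s(3200, channels=2)

print(f"400GbE line rate:            {link:.1f} GB/s")
print(f"Dual-channel DDR4-3200 peak: {desktop:.1f} GB/s")
```

Under those assumptions, the two numbers land within a few percent of each other, which is why moving this much data off the card and across a cable is not trivial.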

The challenge was not just ensuring that we were on a single NUMA node and on Intel Xeon CPUs. AMD got much better with Genoa but still usually has slightly lower I/O performance than Intel without tuning, and we did not have time to tune it. Our biggest challenge was just getting everything linked up. Folks may be familiar with QSFP+, QSFP28, and QSFP-DD; QSFP-DD is the form factor of the up to 12W modules we used in our switch.
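For readers who want to check NUMA placement on their own Linux systems, here is a minimal sketch that reads the NIC's NUMA node from sysfs and lists the CPUs local to that node. It is not the procedure used for this piece. The interface name `ens1f0np0` is hypothetical, and the `sysfs_root` parameter exists only so the helpers can be pointed at a test directory.

```python
# Sketch: find which NUMA node a NIC hangs off of and which CPUs are local to it.
# ASSUMPTIONS: Linux sysfs layout; "ens1f0np0" is a made-up interface name.
from pathlib import Path
from typing import Optional


def nic_numa_node(iface: str, sysfs_root: str = "/sys") -> Optional[int]:
    """Return the NUMA node of a network interface, or None if it cannot be read.

    The kernel reports -1 when it has no NUMA affinity information for the device.
    """
    path = Path(sysfs_root) / "class" / "net" / iface / "device" / "numa_node"
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


def numa_node_cpus(node: int, sysfs_root: str = "/sys") -> Optional[str]:
    """Return the CPU list string (for example "0-31") for a NUMA node, or None."""
    path = Path(sysfs_root) / "devices" / "system" / "node" / f"node{node}" / "cpulist"
    try:
        return path.read_text().strip()
    except OSError:
        return None


node = nic_numa_node("ens1f0np0")  # hypothetical interface name
if node is None or node < 0:
    print("No NUMA information found for that interface")
else:
    print(f"NIC is on NUMA node {node}, local CPUs: {numa_node_cpus(node)}")
```

With the node number in hand, one common approach is to pin the traffic generator to that node, for example with `numactl --cpunodebind=<node> --membind=<node>`, so packets do not cross the inter-socket link.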

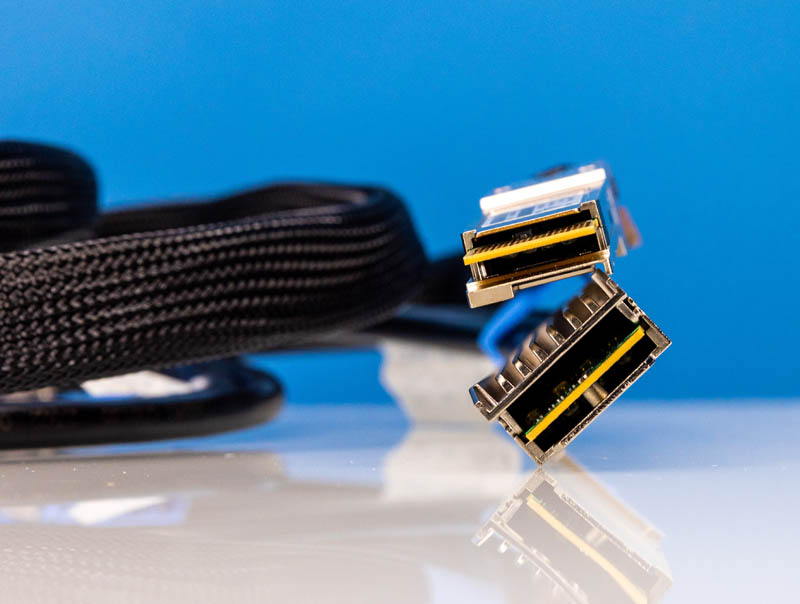

Our challenge was with the QSFP-DD and OSFP cables and optics. We thought we would be able to just get a DAC or two, but that was incorrect because the pool of optics and DACs we had accumulated used different signaling speeds. The one challenge that we were not expecting was just getting something to fit into the ConnectX-7’s OSFP slots. Most OSFP DACs and optics out there have heatsinks on the DAC/optic for cooling since the heatsink is not part of the cage. NVIDIA, however, uses a “flat” OSFP connector without the heatsink on the module.

NVIDIA’s reasoning is that it has huge heatsinks on the ConnectX-7 cage, so it does not need them.

We have been telling this story to folks for the past few weeks, and apparently, it is a big issue. Companies order ConnectX-7 cards and OSFP DACs or optics only to find that the DACs and optics have heatsinks. The NVIDIA-branded cables are hard to get, and at ISC 2023, STH learned of several installations where the cables were the long pole holding up deployment. Our Phone-a-Friend (thank you again) got this sorted, but the flat OSFP cables and optics are harder to get these days. We do not have a photo of this since it was in the data center, not the lab.

Power Consumption and Noise

On the subject of power consumption, there are four 1.3kW 80Plus Platinum power supplies. This is needed since the switch has a maximum power consumption of 2524W. Two power supplies give 2.6kW, and the two extra power supplies provide redundant power rails.
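The power supply math can be sketched quickly. The values below come from the paragraph above; the function name is just for illustration.

```python
# Sketch of the PSU sizing: how many 1.3kW supplies cover the switch's maximum draw?
PSU_WATTS = 1300          # per-supply rating from the article
PSU_COUNT = 4             # supplies installed
SWITCH_MAX_WATTS = 2524   # maximum switch power consumption from the article


def psus_needed(load_watts: int, psu_watts: int) -> int:
    """Smallest number of supplies whose combined rating covers the load."""
    return -(-load_watts // psu_watts)  # ceiling division on integers


needed = psus_needed(SWITCH_MAX_WATTS, PSU_WATTS)
spare = PSU_COUNT - needed
headroom = needed * PSU_WATTS - SWITCH_MAX_WATTS

print(f"Supplies needed at max load: {needed}")
print(f"Redundant supplies:          {spare}")
print(f"Headroom on the active set:  {headroom}W")
```

Two supplies cover the maximum load with only a small margin, which leaves the other two as the redundant pair.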

In our testing, we did not get the switch over 700W. Part of that is really just due to the fact we did not have enough optics. 62 more optics at up to 12W each would be 744W. Add another 20% for cooling, and that would add almost 900W without even stressing the management CPU or the switch chip.
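Here is that estimate as a short sketch, using only the figures in the paragraph above. The 20% cooling overhead is the article's rough allowance, not a measured value.

```python
# Sketch of the optics power estimate for the ports left unpopulated in testing.
EXTRA_OPTICS = 62         # additional modules needed to fill the switch
WATTS_PER_OPTIC = 12      # upper bound per module
COOLING_OVERHEAD = 0.20   # rough allowance for extra fan power (an estimate)

optics_watts = EXTRA_OPTICS * WATTS_PER_OPTIC
with_cooling_watts = optics_watts * (1 + COOLING_OVERHEAD)

print(f"Optics alone: {optics_watts}W")
print(f"With cooling: {with_cooling_watts:.0f}W")
```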

To be frank, we did not get to stress this unit too hard. A big part of that is that we had over $100,000 of gear to just get two servers connected over a 400GbE link. Those parts were almost made of unobtainium so even if we had more servers, it was not a given that we could get them working.

Final Words

This is just awesome. With 64x 400GbE ports, this is just a lot of bandwidth. For modern servers, 400GbE is enabled by PCIe Gen5 x16 slots as it was not possible with a PCIe Gen4 x16 slot. To put that into some perspective, this is so fast that a 3rd Gen Intel Xeon Scalable Platinum 8380H “Cooper Lake” CPU, which was the highest-end Xeon one could buy in Q1 2021, does not have enough PCIe bandwidth to feed a single 400GbE link.
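To see why the PCIe generation matters, here is a rough calculation of raw link bandwidth per direction. It accounts only for 128b/130b line encoding, so real-world throughput is lower once packet overhead is included. The 48-lane PCIe Gen3 figure for the Platinum 8380H is our assumption about that platform, not something stated above.

```python
# Sketch: raw PCIe bandwidth per direction versus a 400GbE link.
# ASSUMPTION: the Xeon Platinum 8380H exposes 48 PCIe Gen3 lanes.
GT_PER_S_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}  # transfer rate per lane by generation
ENCODING_EFFICIENCY = 128 / 130                 # 128b/130b encoding (Gen3 and later)


def pcie_gbps(gen: int, lanes: int) -> float:
    """Raw one-direction bandwidth in Gbit/s, before packet overhead."""
    return GT_PER_S_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY


LINK_GBPS = 400
for label, gen, lanes in [
    ("PCIe Gen4 x16", 4, 16),
    ("PCIe Gen5 x16", 5, 16),
    ("PCIe Gen3 x48 (assumed 8380H total)", 3, 48),
]:
    bw = pcie_gbps(gen, lanes)
    verdict = "enough" if bw >= LINK_GBPS else "not enough"
    print(f"{label}: {bw:.0f} Gbps, {verdict} for 400GbE")
```

A Gen4 x16 slot tops out at roughly 252Gbps before overhead, while Gen5 x16 is roughly 504Gbps. Under the lane-count assumption above, even every Gen3 lane on the older CPU combined falls short of 400Gbps.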

While many modern servers are being deployed with 100GbE, 400GbE is only 4x the speed of 100GbE. If an organization was deploying 100GbE with 24-core CPUs in previous generations, then that is the same bandwidth per core as deploying 400GbE with 96-core AMD EPYC Genoa parts today. For others, having this much bandwidth in a single switch means a smaller network radix and fewer switches when connecting downstream servers and 100GbE switches.
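The per-core comparison is simple arithmetic, sketched below along with the aggregate port bandwidth of the switch. All inputs come from the article; the helper name is illustrative only.

```python
# Sketch: NIC bandwidth per CPU core across generations, plus total switch port bandwidth.
import math


def gbps_per_core(nic_gbps: float, cores: int) -> float:
    """Network bandwidth available per CPU core, in Gbit/s."""
    return nic_gbps / cores


previous_gen = gbps_per_core(100, 24)   # 100GbE with a 24-core CPU
current_gen = gbps_per_core(400, 96)    # 400GbE with a 96-core CPU

print(f"100GbE / 24 cores: {previous_gen:.2f} Gbps per core")
print(f"400GbE / 96 cores: {current_gen:.2f} Gbps per core")
print(f"Same ratio: {math.isclose(previous_gen, current_gen)}")

PORTS = 64
PORT_GBPS = 400
aggregate_tbps = PORTS * PORT_GBPS / 1000
print(f"{PORTS} x {PORT_GBPS}GbE ports: {aggregate_tbps} Tbps of port bandwidth")
```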

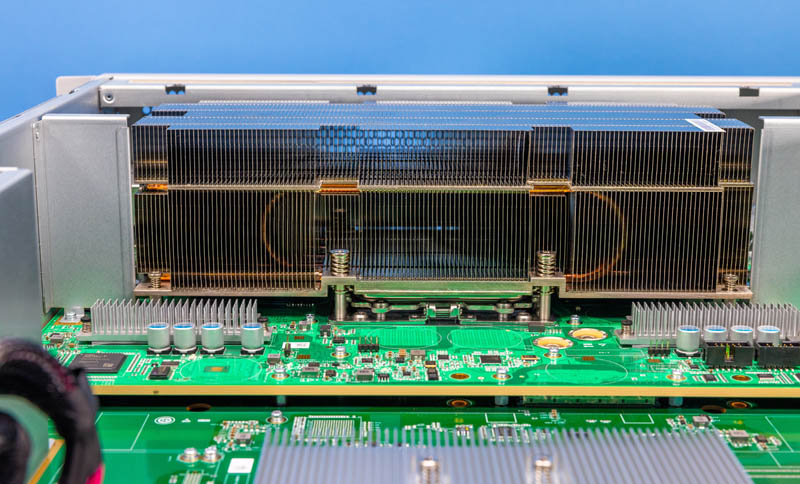

Of course, perhaps one of the most exciting parts was seeing the Broadcom Tomahawk 4 in action. This is a massive chip and we ended up getting one for the YouTube set just to have in the background after doing this piece.

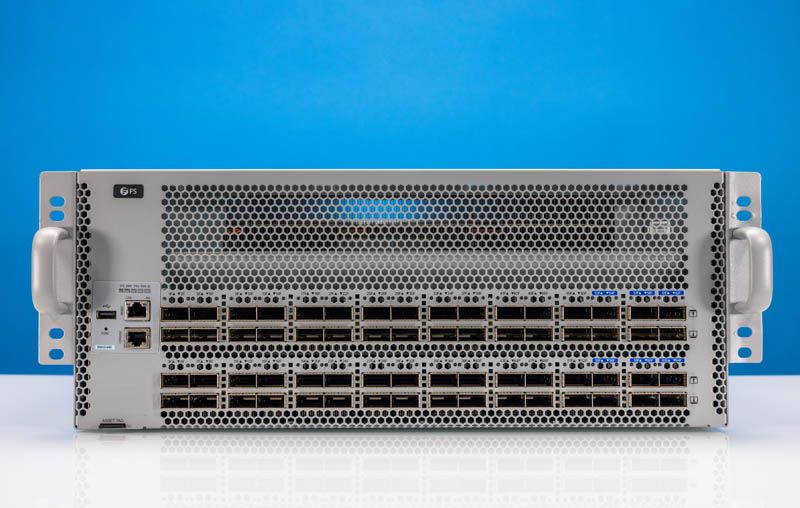

Hopefully, you liked this look at the FS N9510-64D. This is a massive switch and something that is just fun to show our readers.

That heatsink is crazy. Makes me wonder what is going to happen (en masse) first: co-packaged optics, or water-cooled switches?

Question: How much does the Tomahawk 4 weigh?

Just musing... but is it odd a 64 port 400GbE switch (and other enterprise or service provider level equipment) is presented on a site called “Serve the HOME”? Don’t get me wrong, I love the content here!

Yeah, that is an interesting switch. The thing is so big that it needs a standard server chassis just for that MASSIVE heatsink and 8,000-plus pin Broadcom Tomahawk 4 switch ASIC. Very cool. :-)

Page 1, paragraph 4: “The top 4U is used for cooling.” should read “The top 2U is used for cooling.”

Page 3, paragraph 3: “We will quickly note that while we were using Intel Xeon “Sapphire Rapids”” – missing end of sentence.

Sure hope this has a better warranty than “30 days then we send it back to China for a few months” I experienced with an obviously much less expensive switch.

as mentioned before, use the 2x200G cx-7 nic and a cheap ($150) QSFP-DD to 2 x QSFP56 DAC.

Don’t waste your time with the flat top OSFP weirdness!

@Kwisatz: It’s definitely a misleading site name and it causes so much confusion it’s an FAQ in the “about” section: https://www.servethehome.com/about/

“STH may say “home” in the title which confuses some. Home is actually a reference to a users [sic] /home/ directory in Linux. We scale from those looking to have in home or office servers all the way up to some of the largest hosting organizations in the world.”

I do wonder how many of us ended up here because we were after information about home server equipment. I guess judging by the number of non-enterprise mini-PC and 2.5 GbE articles, quite a few of us.

I wonder how often 400Gb ports are saturated in this context – aren’t latency and rate more interesting?

It would be useful if you would cover the management features of the switch. Sure, the heatsink is cool and all but that’s not really functional or useful to users, is it?