At FMS 2025, we stopped by the Kioxia booth. One major theme was a bigger focus on the silicon and memory side than on the drive side. There were three neat things we wanted to show. First, there was the 245TB Kioxia LC9 that will allow roughly 8PB or more in a 2U chassis. Second, there was a cutaway view of a 32-die stack BiCS 8 package. Third, we saw a Kioxia CM9 that was feeding five NVIDIA H100 GPUs.

Kioxia at FMS 2025

At NVIDIA GTC 2025, we showed the Kioxia LC9 122.88TB PCIe Gen5 NVMe SSD, and the capacity was upgraded to 245.76TB a few weeks ago. Here is the EDSFF E3.L form factor that will allow for massive storage. With 32-bay E3.L chassis, and some going up to 40 drives, that is roughly 8PB, or up to around 10PB, in existing infrastructure.
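As a quick sanity check on those chassis numbers, here is a minimal sketch of the arithmetic. It assumes decimal units (1PB = 1000TB) and raw capacity only, with no allowance for RAID, erasure coding, or file system overhead. The function name `chassis_capacity_pb` is just an illustrative helper.

```python
# Rough raw-capacity check for a chassis full of Kioxia LC9 drives.
# Assumption: decimal units (1 PB = 1000 TB), no redundancy overhead.
DRIVE_TB = 245.76  # per-drive capacity quoted in the article


def chassis_capacity_pb(bays: int, drive_tb: float = DRIVE_TB) -> float:
    """Return raw capacity in decimal petabytes for a given bay count."""
    return bays * drive_tb / 1000


for bays in (32, 40):
    print(f"{bays} bays: {chassis_capacity_pb(bays):.2f} PB")
# 32 bays: 7.86 PB
# 40 bays: 9.83 PB
```

So the "roughly 8PB" and "around 10PB" figures are the 32-bay and 40-bay results rounded up slightly.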

Of course, cooling that many drives is not negligible, but it takes a lot less power than modern CPUs and GPUs. That 8PB may sound like a lot, but we also saw a prototype-style 1PB single SSD at FMS 2025 that is still some time off. Still, high-capacity is hot right now.

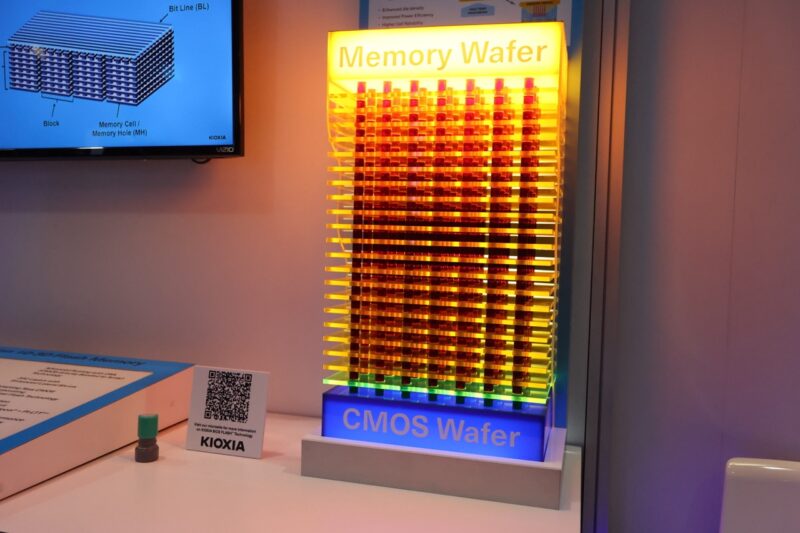

Part of the higher-capacity push is new BiCS 8 and BiCS 9 NAND that Kioxia showed. There was a bigger focus on this at FMS 2025, and there was even a little mock-up of BiCS 8’s structure.

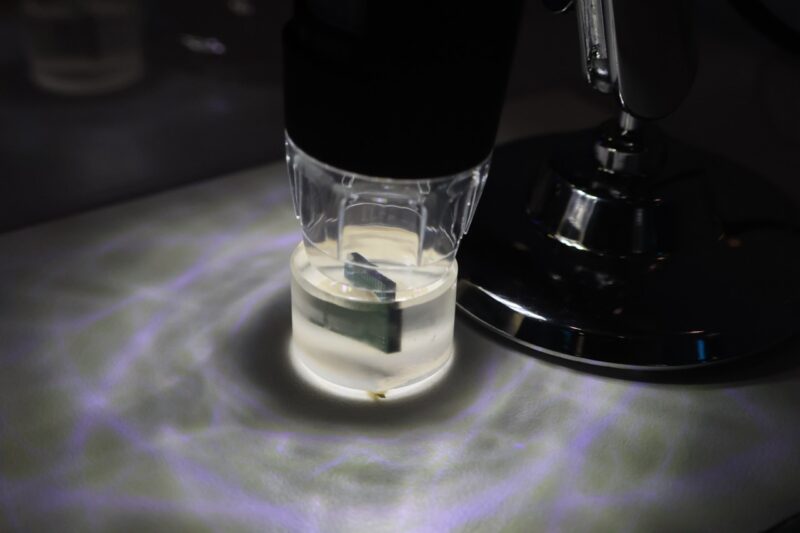

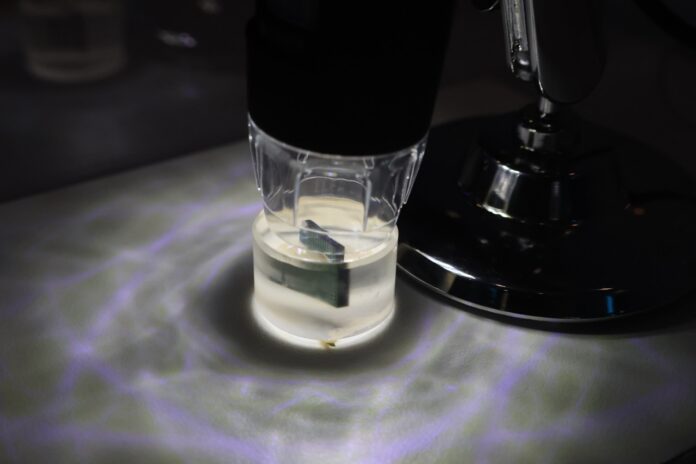

Then we turned around and saw this microscope with a package that was cross-sectioned.

This was showing a 32-die stack for massive capacity packages. These are the types of packages that make very high capacity SSDs possible.

We will also note that with MLPerf Storage coming out recently, Kioxia had its new Kioxia CM9 PCIe Gen5 NVMe SSDs feeding five NVIDIA H100 GPUs.

Most PCIe Gen5 performance SSDs top out at feeding four NVIDIA H100 GPUs, so this was a neat little performance demo that was run on-site.

Final Words

We thought these were pretty cool to see at FMS 2025. The show was much smaller this year, but there was a lot of focus, especially on CXL and the high-capacity SSD segments. Kioxia took a bit of time to get into the high-capacity SSD space, but drives like the LC9 should sell very well since the AI community has a voracious appetite for high-capacity SSDs.

The ability to pack 8 petabytes or more into a single 2U chassis is a breakthrough in data center density and performance.

Wouldn’t it be great if this actually made it into consumer SSDs? Too bad it probably won’t. All the evidence I’ve been seeing (and the couple of replies I’ve received to my grievances about this issue) suggests that nobody in the consumer space really wants bigger SSDs (too expensive, questions surrounding reliability, operational longevity, data retention, et cetera) and that those will likely forever and always be stuck at 4 or 8 TB. Bigger capacity NAND chips don’t mean anything if they are only ever used in enterprise hardware in drives exceeding 256 TBs or more capacity. I apologize if this comes off as “too negative”. I’m just miffed that regular “average Joe” SSDs aren’t benefitting from the stupidly fast growth that’s ending up in “rich corporations only” grade SSDs. Maybe I’m just too impatient. Or, maybe I need to give up on ever having anything larger than 4 TB in my laptop PC.

I think it’s long overdue to create a new form factor for solid-state/flash storage.

I never got why the heck they could not spare another 30mm for the M.2 22110 form factor in medium and bigger laptops. And even then I think there are better ways to design it. 22mm isn’t enough; a more rectangular shape is desirable. On the DRAM side, CAMM is a nice approach.

On larger workstations, a change to the EDSFF form factors (E1.S, E3.S, E3.L, E2…) would be one way, especially with passive heatsinks (backplane designs) that can effectively absorb the amount of heat four or more drives generate.

About the 32-die stack, it looks like they use four “sub-packages” with eight dies each and somehow connect them to each other, if you look at the spacing. That makes sense from a production/yield perspective.

This is the time to get back to SLC/MLC and still have enough space, less latency, no retention troubles, and quite high reliability. It could be a way to reduce the high power consumption of the controller’s LDPC engines. >10 cores sometimes? The P5800X used one core… but the memory itself draws a ton of power.

About prices: on M.2 2280 SSDs at maximum capacity you need to use the highest-capacity packages because of space constraints. On the other hand, U.2 drives can use a lot of smaller packages to get to 64Tbit.

@DarkServant. In reference to your comment regarding U.2 SSDs, the stupid thing is, one COULD currently do just that with SATA drives NOW. We COULD have 64 Tbit capacity 2.5″ SATA drives, but they just won’t do it. If I’m getting it right, one refrain about SATA drives not going larger than 4 or so TB has to do with transfer speeds at the interface. Which seems like a lame excuse since we have 20+ TB regular spinning-platter hard drives, all SATA-based. The difference between a hard disk drive and an SSD doesn’t change the I/O situation with SATA drives, yet we’ve got HUGE HDDs while SSDs are stuck at 4 TB. You’re still gonna wait forever to make any kind of backup whether it’s to (or from) a hard disk or flash memory because SATA is limited to something like 6 Gbit/second, which translates to around 540 MB/second. The companies making SSDs shouldn’t have given up on U.2 in consumer devices. They really should have made a better effort to promote that standard for consumer as well as enterprise systems, but we got what we got and here we are. It is claimed that hard disk tech is somehow “cheaper” than flash, but I’m not really buying that either. Today, I can buy a 512 GB USB 3.x THUMB DRIVE for under $40 direct from SanDisk. A freakin’ THUMB DRIVE, of half a terabyte capacity, for under $40! Please don’t tell me that they can’t get at least 16 TB in a 2.5″ consumer SSD for a bit under $300, be it SATA, SAS or U.2 NVMe.