Whenever we do a 4P server review, such as our recent Supermicro 2049P-TN8R Review, we inevitably get the question: why are all servers not 4-socket servers? We try to cover the pros and cons in each review, but we also wanted to publish an article that discusses some of the factors in one place. This is not an exhaustive list, but it frames some of the dynamics in the market. We also want to note that this is being written in Q1 2020, and subsequent CPU generations may change how these factors are viewed.

Why All Servers Are Not 4-Socket Servers Video

We took some video of this discussion. If you are on the go and prefer listening to learn about the market dynamics, this should help. Check that out here:

Of course, we have more detail in our piece here, so keep reading to dive in.

Why All Servers Are Not 4-Socket Servers

In our discussion, we are going to talk about some of the reasons that 4-socket servers can make a lot of sense. We will then discuss why they are still a relatively small part of the market by unit volume. Finally, we are going to discuss some of the regional variations.

Why 4-Socket Servers Make Sense

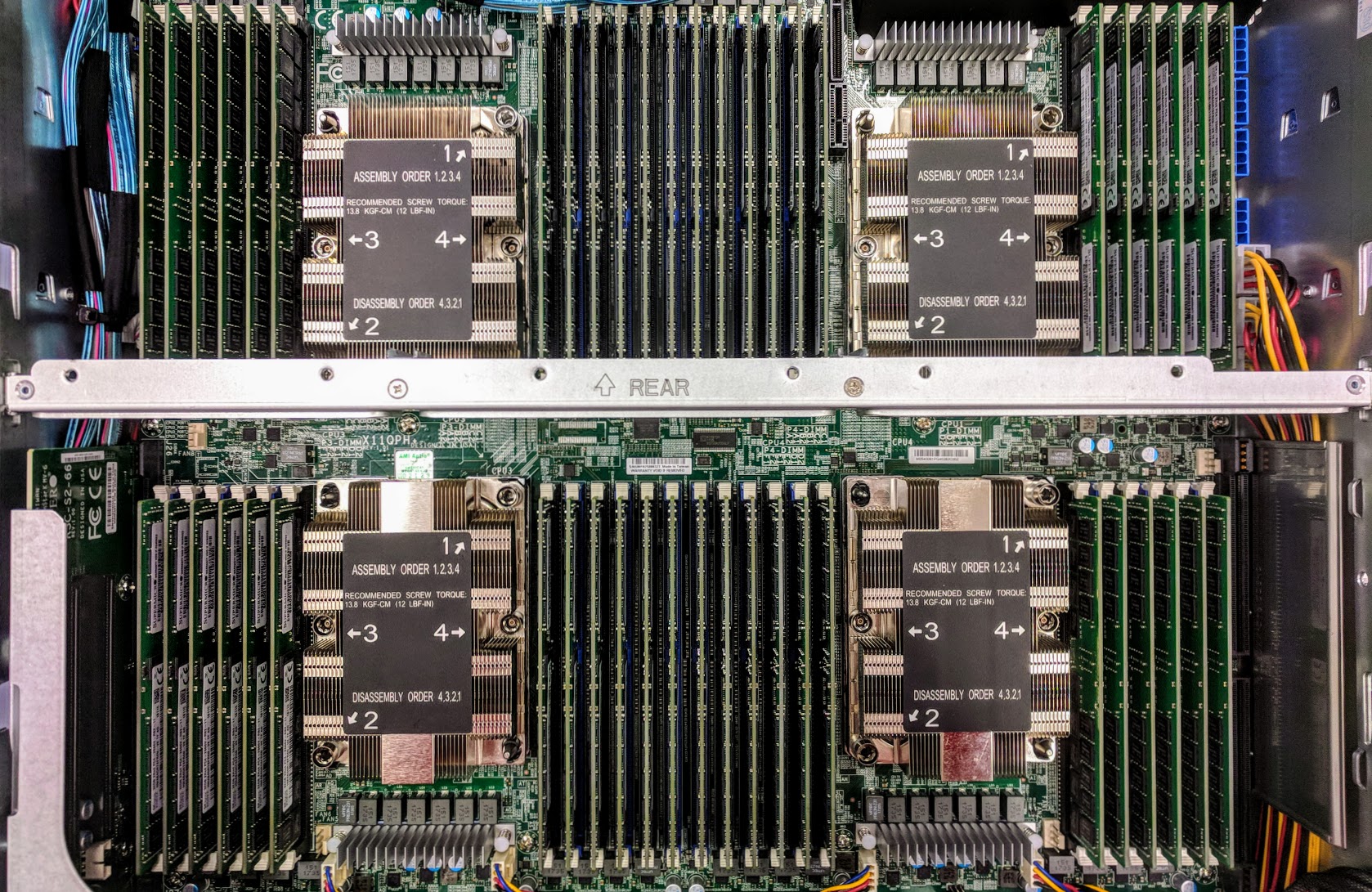

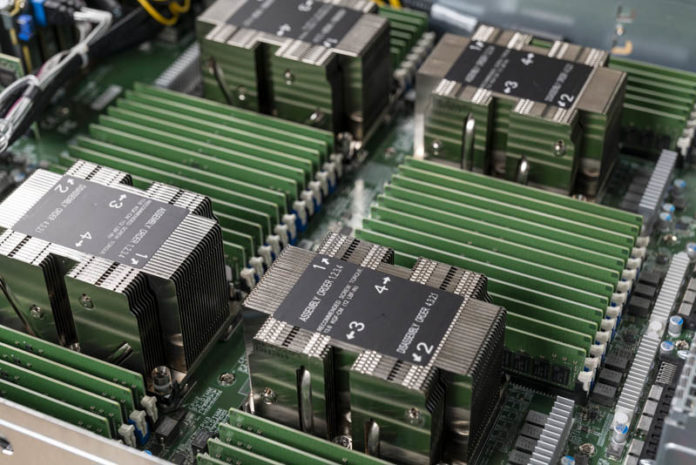

Perhaps the biggest reason to go to 4-socket servers is to scale up node sizes. This means more memory and more CPU power in a single node. As a result, one can scale up problem sizes without having to move off the node and onto network fabrics. Even for virtualized servers, larger nodes mean higher virtual machine density with fewer stranded resources.

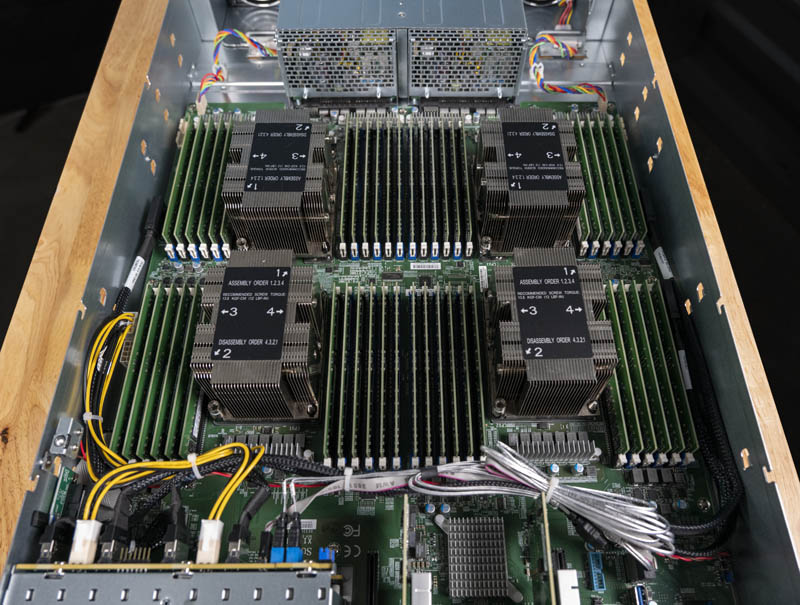

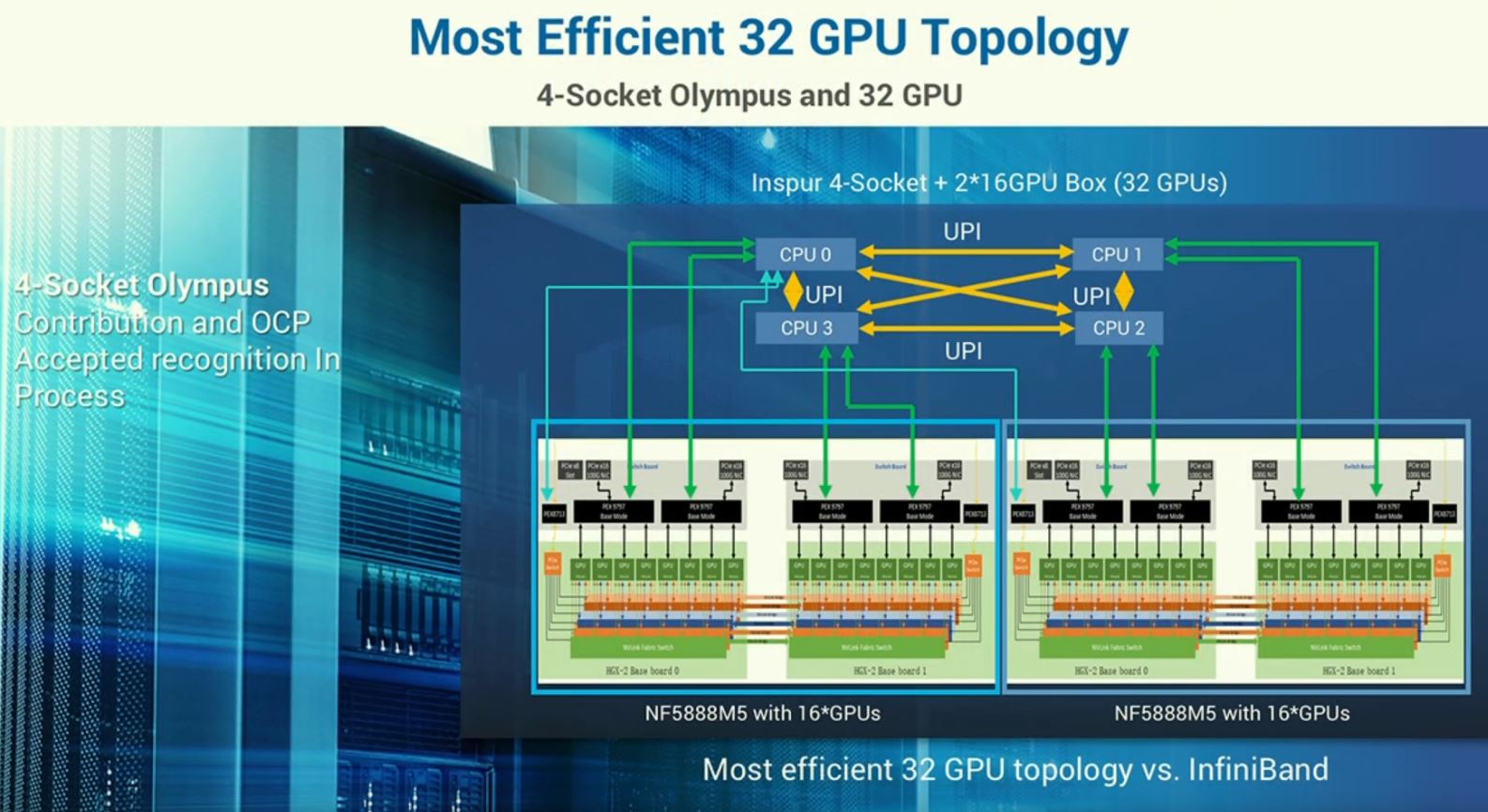

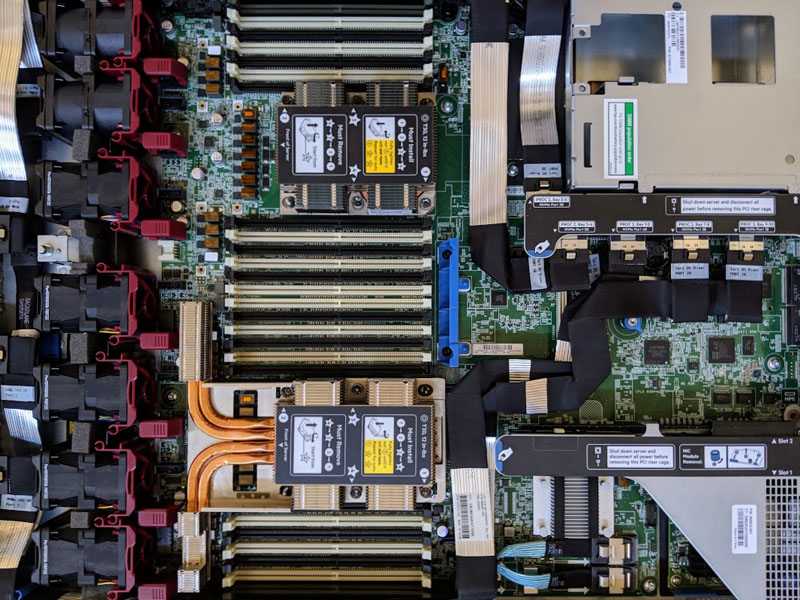

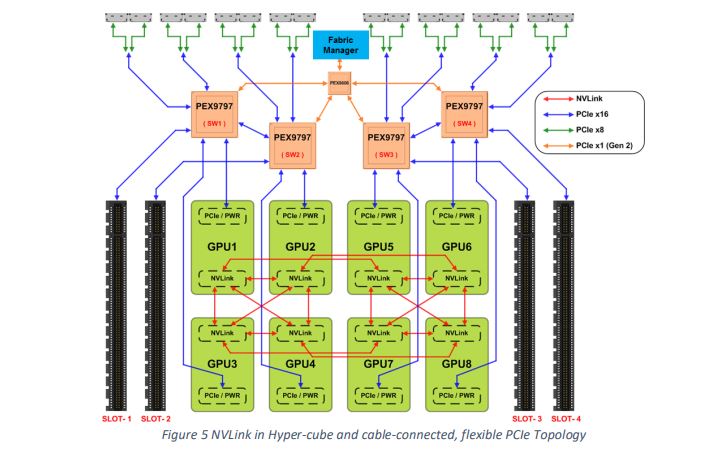

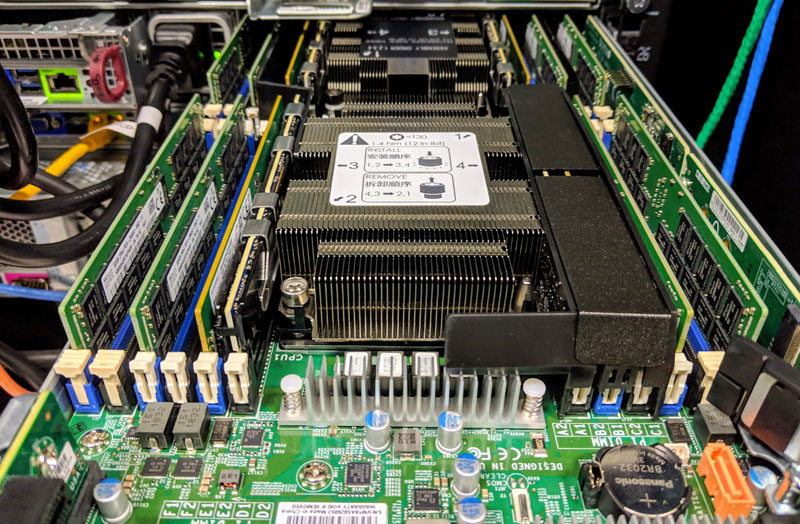

One can also get more expansion per node. In modern servers, PCIe lanes emanate from the CPU sockets. In four-socket servers, specifically the current generation Intel Xeon Scalable servers, doubling the number of CPUs effectively doubles the available PCIe lanes. We have seen storage systems use these additional PCIe lanes to connect more NVMe drives and add more SAS connectivity. We have even seen GPU servers where a set of GPUs is attached to each CPU in 4-socket configurations.
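Here is a minimal Python sketch that puts rough numbers on the lane doubling. It assumes 48 CPU-attached PCIe 3.0 lanes per 1st/2nd Gen Xeon Scalable socket and an x4 link per NVMe drive. The 16 lanes held back for networking are a hypothetical figure. Real motherboards route lanes differently, so treat the results as upper bounds.

```python
# Assumptions (not taken from a specific motherboard): 48 CPU-attached
# PCIe 3.0 lanes per Xeon Scalable socket and one x4 link per NVMe drive.
LANES_PER_CPU = 48
LANES_PER_NVME = 4


def cpu_pcie_lanes(sockets: int, lanes_per_cpu: int = LANES_PER_CPU) -> int:
    """Total CPU-attached PCIe lanes in a node; scales linearly with sockets."""
    return sockets * lanes_per_cpu


def max_x4_nvme(sockets: int, reserved_lanes: int = 0) -> int:
    """Upper bound on directly attached x4 NVMe drives after reserving lanes."""
    usable = max(cpu_pcie_lanes(sockets) - reserved_lanes, 0)
    return usable // LANES_PER_NVME


if __name__ == "__main__":
    # Hypothetical: hold back 16 lanes per node for NICs.
    for sockets in (2, 4):
        print(f"{sockets}P: {cpu_pcie_lanes(sockets)} lanes, "
              f"up to {max_x4_nvme(sockets, reserved_lanes=16)} x4 NVMe drives")
```

Under these assumptions, the 4P node has 192 lanes against 96 for 2P. The reserved lanes are a fixed per-node cost, so the drive count more than doubles: 44 drives versus 20.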

From a cost perspective, a number of factors can help make 4-socket servers sensible. For example, many infrastructure pieces are priced on a per-node model, so their costs can be reduced by consolidating into larger nodes. These include management software as well as simple items such as management switch ports, since fewer nodes need fewer ports.

Cost savings extend to the hardware side. Many servers can use a single NIC per node. If this is the case, doubling the capacity of each node means needing half the number of NICs. It also means half the switch ports and optics/cabling for the same amount of compute, greatly reducing network costs.

Inside the node itself, there are a number of cost efficiencies as well. For systems with one node per chassis, the cost of the chassis and two power supplies is split across twice as many CPU sockets and memory, which effectively lowers overhead costs. One needs a boot drive or set of boot drives no matter the size of the node, so this cost also scales with nodes, not sockets. On the motherboard, one needs half as many BMCs, PCHs, and basic networking components such as 1GbE management NICs as with dual-socket servers for the same number of sockets.

The easiest way to think about these savings is that some server infrastructure costs still scale per node rather than per socket or per core. As a result, using larger nodes can effectively yield 50% cost savings in some areas.
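One quick way to see the per-node versus per-socket effect is to spread a fixed bill of per-node items across the sockets in each node. In this minimal sketch every dollar figure is a hypothetical placeholder. The model also assumes the per-node items cost the same in a 2P and a 4P box. That overstates the savings, because 4-socket chassis and motherboards usually cost more.

```python
# Hypothetical per-node infrastructure costs in USD; placeholders, not quotes.
PER_NODE_COSTS: dict[str, float] = {
    "chassis_and_power_supplies": 1200.0,
    "boot_drives": 300.0,
    "data_nic_and_optics": 600.0,
    "switch_port": 400.0,
    "management_port_and_software": 250.0,
}


def per_socket_overhead(sockets_per_node: int,
                        costs: dict[str, float] = PER_NODE_COSTS) -> float:
    """Per-node infrastructure cost divided across the sockets in that node."""
    if sockets_per_node < 1:
        raise ValueError("sockets_per_node must be at least 1")
    return sum(costs.values()) / sockets_per_node


def overhead_savings(baseline_sockets: int = 2, larger_sockets: int = 4) -> float:
    """Fractional reduction in per-socket overhead when moving to larger nodes."""
    return 1 - per_socket_overhead(larger_sockets) / per_socket_overhead(baseline_sockets)


if __name__ == "__main__":
    print(per_socket_overhead(2), per_socket_overhead(4), overhead_savings())
```

The model treats every item as a fixed per-node cost, so the saving comes out at exactly 50% regardless of the placeholder values. Any item that grows with node size pulls that figure down.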

Running each node at higher power levels, because it carries more components, means its power supplies operate toward the higher end of their ranges. That can often yield higher power supply efficiency, further lowering TCO.

Aside from the benefits of scaling up a node and lower per-socket infrastructure costs, there is another benefit: upgrades. Some fairly large service providers had a strategy of deploying 4-socket servers with two CPUs and then upgrading them mid-lifecycle to four CPUs, getting twice the performance or more. Instead of buying more servers, they could just add CPUs. Many of these upgrade projects were put off indefinitely, but that is one reason some have gone 4-socket.

Why 4-Socket Servers Are Lower Volume

Despite the advantages laid out above, as well as some others, 4-socket servers are far from the dominant architecture today. Instead, 2-socket servers are dominant in terms of unit volume.

The obvious answer here is cost. Each node simply costs significantly more, even though one may be able to get per-node cost savings. For smaller installations of a rack or less, many organizations simply want more nodes for smaller failure domains. With fewer, more costly nodes, a node that is offline for any reason takes a larger proportion of a small installation's resources offline.
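The failure-domain argument is easy to quantify. This minimal sketch assumes a hypothetical 16-socket deployment built either from 2P nodes or from 4P nodes. It reports what fraction of the sockets go offline when a single node is down.

```python
def capacity_offline(total_sockets: int, sockets_per_node: int,
                     nodes_down: int = 1) -> float:
    """Fraction of a deployment's sockets that are offline when whole nodes fail."""
    if total_sockets % sockets_per_node:
        raise ValueError("total_sockets must be a multiple of sockets_per_node")
    nodes = total_sockets // sockets_per_node
    if not 0 <= nodes_down <= nodes:
        raise ValueError("nodes_down must be between 0 and the node count")
    return nodes_down / nodes


if __name__ == "__main__":
    # Hypothetical 16-socket deployment, roughly a small rack.
    for per_node in (2, 4):
        print(f"{per_node}P nodes: {capacity_offline(16, per_node):.1%} offline")
```

With the same socket count, losing one 4P node takes twice the share of capacity offline: 25% versus 12.5%.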

As we saw with Licenseageddon Rages as VMware Overhauls Per-Socket Licensing, modern software is increasingly licensed per-core rather than per-socket or per-node. In the enterprise space, where software costs often dwarf hardware costs, getting more performance per core is more important than driving scale-up performance in a node.
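To see why the licensing model matters, this minimal sketch counts license units for the same hypothetical capacity: 8 sockets with 28 cores each. That capacity is deployed either as four 2P nodes or as two 4P nodes. The per-node, per-socket, and per-core schemes here are simplified illustrations and do not reproduce any vendor's actual terms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fleet:
    """A group of identical nodes (hypothetical configuration)."""
    nodes: int
    sockets_per_node: int
    cores_per_socket: int

    @property
    def sockets(self) -> int:
        return self.nodes * self.sockets_per_node

    @property
    def cores(self) -> int:
        return self.sockets * self.cores_per_socket


def license_units(fleet: Fleet, model: str) -> int:
    """License units needed under a simplified per-node, per-socket, or per-core model."""
    if model == "per_node":
        return fleet.nodes
    if model == "per_socket":
        return fleet.sockets
    if model == "per_core":
        return fleet.cores
    raise ValueError(f"unknown licensing model: {model}")


two_socket = Fleet(nodes=4, sockets_per_node=2, cores_per_socket=28)
four_socket = Fleet(nodes=2, sockets_per_node=4, cores_per_socket=28)

if __name__ == "__main__":
    for model in ("per_node", "per_socket", "per_core"):
        print(model, license_units(two_socket, model), license_units(four_socket, model))
```

Only the per-node scheme rewards consolidation. Once software is priced per core, the 4P layout saves nothing on licensing, which leaves performance per core as the lever that matters.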

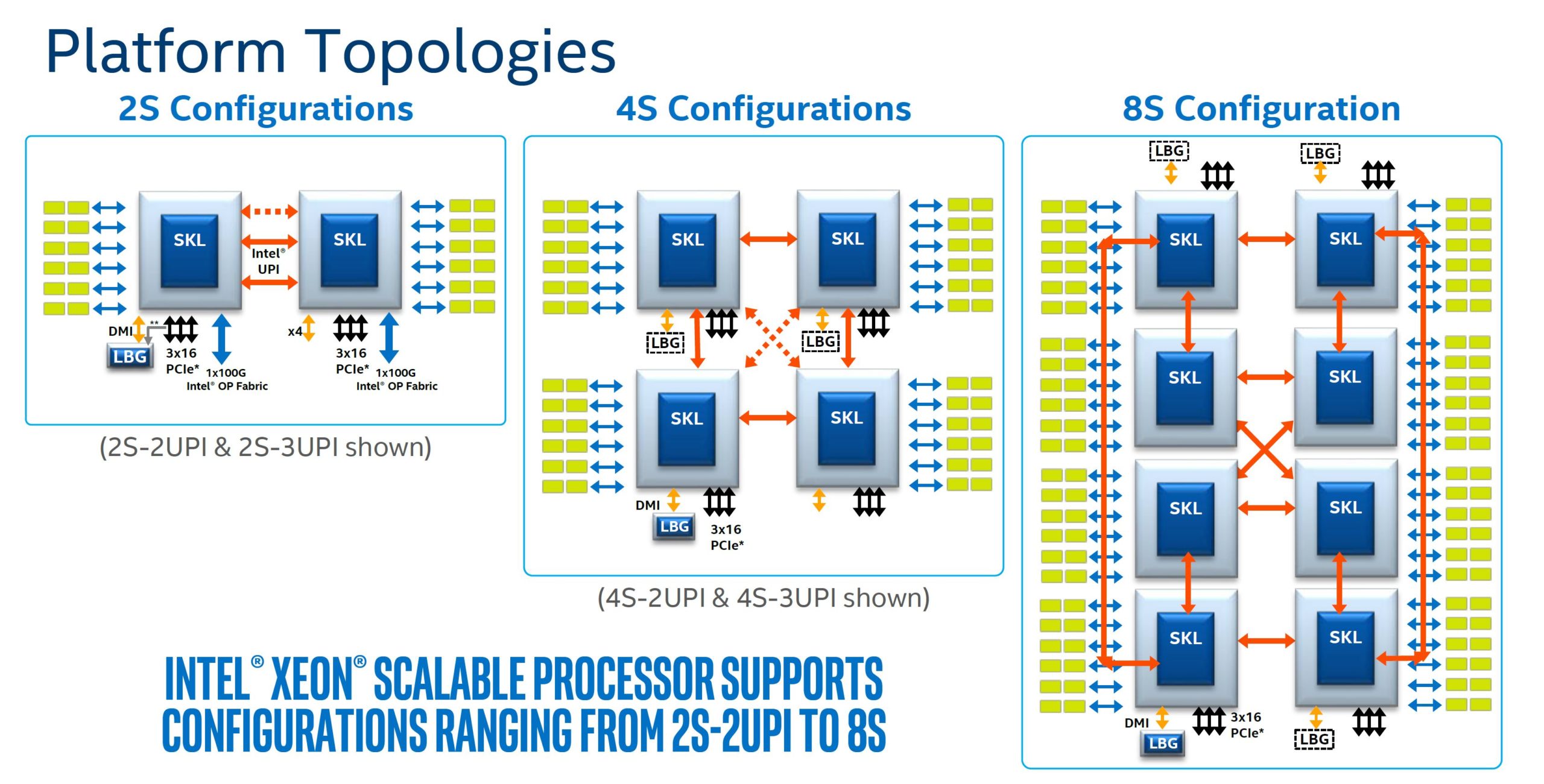

When it comes to performance, one of the biggest impacts comes from UPI link bandwidth. Intel Xeon Scalable CPUs in the Gold 6200 and Platinum 8200 SKUs each have 3 UPI links. In dual-socket servers, designers generally elect to wire 2 or 3 UPI links between the two CPUs. Lower-cost servers often use 2 UPI links to save on motherboard cost and power consumption. Higher-performance servers, often including those used by the cloud providers, use 3 UPI links, providing around 50% more bandwidth. The Supermicro BigTwin we reviewed has 3 UPI links even in a dense 2U 4-node configuration, which required design work to provide the higher-performance links.

With 4-socket servers, there is no more than a single UPI link between each pair of CPUs. We wrote this article before the Big 2nd Gen Intel Xeon Scalable Refresh, which was for 2-socket “R” SKUs. For the launch SKUs, the Gold 6200 and Platinum 8200 series had 3x UPI links that could directly attach a CPU to its three peers in a 4-socket server. Even with that direct connection, maximum direct CPU-to-CPU bandwidth is effectively 33%-50% of a dual-socket server's, even on higher-end parts.
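The 33%-50% range follows directly from link counts. This minimal sketch makes three assumptions. Every UPI link runs at the same speed. A 4P board uses all three links per CPU to reach its three peers directly. A 2P board wires either 2 or 3 links between its CPUs. The sketch ignores protocol overhead and multi-hop routing.

```python
from fractions import Fraction


def links_per_pair(upi_links_per_cpu: int, sockets: int) -> int:
    """UPI links between each CPU pair when every CPU links directly to every peer."""
    peers = sockets - 1
    if upi_links_per_cpu < peers:
        raise ValueError("too few links for every CPU to reach every peer directly")
    return upi_links_per_cpu // peers


def pair_bandwidth_vs_2p(links_2p: int, upi_links_per_cpu: int = 3) -> Fraction:
    """Direct 4P CPU-pair bandwidth relative to a 2P board wired with links_2p links."""
    return Fraction(links_per_pair(upi_links_per_cpu, 4), links_2p)


if __name__ == "__main__":
    for wired in (2, 3):
        print(f"2P with {wired} links: a 4P pair gets {pair_bandwidth_vs_2p(wired)}")
```

Against a 2-link 2P board, a 4P pair gets 1/2 the direct bandwidth. Against a 3-link board, it gets 1/3. Going from 2 to 3 links in a 2P design (3/2) is the roughly 50% uplift mentioned above.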

For the Intel Xeon Gold 5200 series CPUs, each CPU has only two UPI links. That means each CPU can talk directly to only two of the three other CPUs in a 4P system. If very little data crosses between sockets, this is OK. For many applications, however, this is a significantly worse 4-socket topology, since reaching the remaining CPU means making two hops instead of one. As a quick anecdote, this is similar to what we saw with the NVIDIA Tesla P100 SXM2 NVLink versus the Tesla V100 SXM2 NVLink topologies, with the P100 playing the role of the Xeon Gold 5200 here. That was a major innovation with the Volta generation of GPUs because more links enable better topologies.
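The two-hop problem can be shown with a breadth-first search over both 4P topologies. This is a minimal sketch. With three links per CPU, each CPU can reach all three peers directly, forming a full mesh. With two links per CPU, the connected layout that uses single links is a ring. Those are the two graphs modeled here. The sketch counts hops only and says nothing about latency or bandwidth per hop.

```python
from collections import deque
from itertools import combinations


def build_topology(edges, sockets: int = 4) -> dict[int, set[int]]:
    """Undirected adjacency sets for CPU sockets joined by UPI links."""
    adj: dict[int, set[int]] = {cpu: set() for cpu in range(sockets)}
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    return adj


def hops_from(adj: dict[int, set[int]], src: int) -> dict[int, int]:
    """Breadth-first search: minimum UPI hops from src to every reachable CPU."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        cpu = queue.popleft()
        for peer in adj[cpu]:
            if peer not in dist:
                dist[peer] = dist[cpu] + 1
                queue.append(peer)
    return dist


def hop_stats(adj: dict[int, set[int]]) -> tuple[int, float]:
    """Worst-case and average hop count over all CPU pairs."""
    pairs = list(combinations(sorted(adj), 2))
    hops = [hops_from(adj, a)[b] for a, b in pairs]
    return max(hops), sum(hops) / len(hops)


# Three UPI links per CPU (Gold 6200 / Platinum 8200 style): full mesh.
FULL_MESH = build_topology(combinations(range(4), 2))
# Two UPI links per CPU (Gold 5200 style): ring 0-1-2-3-0.
RING = build_topology([(0, 1), (1, 2), (2, 3), (3, 0)])

if __name__ == "__main__":
    print("full mesh:", hop_stats(FULL_MESH))
    print("ring:", hop_stats(RING))
```

In the ring, the two diagonal CPU pairs (two of six) each need two hops. That raises the worst case from one hop to two and the average from 1.0 to about 1.33 hops.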

Rounding out the Xeon discussion, we still need to address the Platinum 9200 and the Xeon Bronze and Silver. The Xeon Platinum 9200 series is not socketed and is limited to two packages. Underneath, it is essentially a 4-socket server in two “sockets,” so its chip-to-chip bandwidth will be more similar to that of a 4-socket server. The Intel Xeon Bronze and Silver lines cannot do 4-socket, so they are limited to single and dual-socket applications.

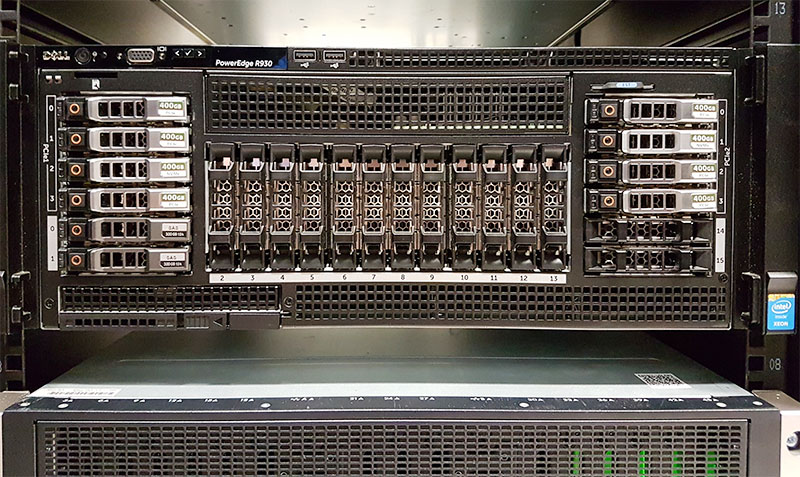

From a density standpoint, in the best case, these 4-socket servers, if they were 1U each, would have the same density as a 2U 4-node server. Most 4-socket servers are instead 2U to 4U, so they effectively offer at best half the density of modern 2-socket designs.
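The density comparison is simple arithmetic in sockets per rack unit. This minimal sketch compares a 2U 4-node dual-socket chassis with hypothetical single-node 4P chassis of 1U, 2U, and 4U.

```python
from fractions import Fraction


def sockets_per_u(sockets_per_node: int, nodes_per_chassis: int,
                  chassis_height_u: int) -> Fraction:
    """Socket density of a chassis, in sockets per rack unit."""
    return Fraction(sockets_per_node * nodes_per_chassis, chassis_height_u)


if __name__ == "__main__":
    baseline = sockets_per_u(2, 4, 2)  # 2U 4-node dual-socket chassis
    for label, height in (("1U 4P", 1), ("2U 4P", 2), ("4U 4P", 4)):
        density = sockets_per_u(4, 1, height)
        print(f"{label}: {density} sockets/U, {density / baseline} of 2U 4-node density")
```

Only the 1U case matches the 2U 4-node baseline of 4 sockets/U. A 2U 4P box reaches half that density, and a 4U box reaches a quarter.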

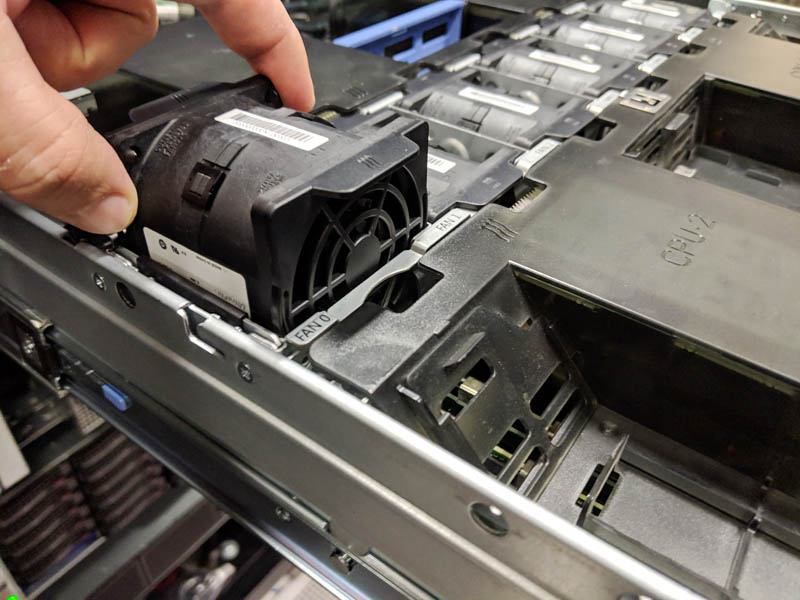

Complexity is also higher in 4-socket servers. More complex PCBs and system integration make these systems slightly harder to design. As a result, we see fewer vendors make 4-socket servers. Lower volumes reinforce this, creating both a volume and a complexity barrier to entering the market.

Another often overlooked aspect is 4-socket boot time. If you ever have to reboot a four-socket server, it can be very slow, taking minutes to POST and boot. Years ago, we raced two 4-socket servers from Dell and Supermicro, and they took minutes to boot. More recently, we used a server with several terabytes of DCPMM, and it took over 15 minutes to POST. This is actually a big reason we do not use 4P servers in the STH hosting infrastructure: they take so long to come online.

Regional Preferences

The world of 4-socket and even 8-socket servers has a fun quirk: a numeric regional preference. The number “4” is often considered unlucky in Chinese culture. The reason is that the word for four is pronounced very similarly to the word for “death.” I would totally mess this up if I were trying to say the Mandarin for it. This is similar to why in the US you do not often see elevators with 13th floors or airplanes with 13th rows of seats. If you travel in Asia, you will find many buildings without 4th floors. That is just a regional difference.

From what we have heard from both OEMs and Intel, the vast majority of 8-socket servers today are sold in China. The most common reason for this that we hear is not because of the scale-up benefits. Instead, it is because of the numerology of the 8. It just so happens that 8 in China is a lucky number, much like 7 is considered by many in the US to be a lucky number. That is an interesting tidbit in the event you thought that all server purchasing was done solely on completely rational performance merits. Humans still run the processes, and therefore things like this are more common than you think.

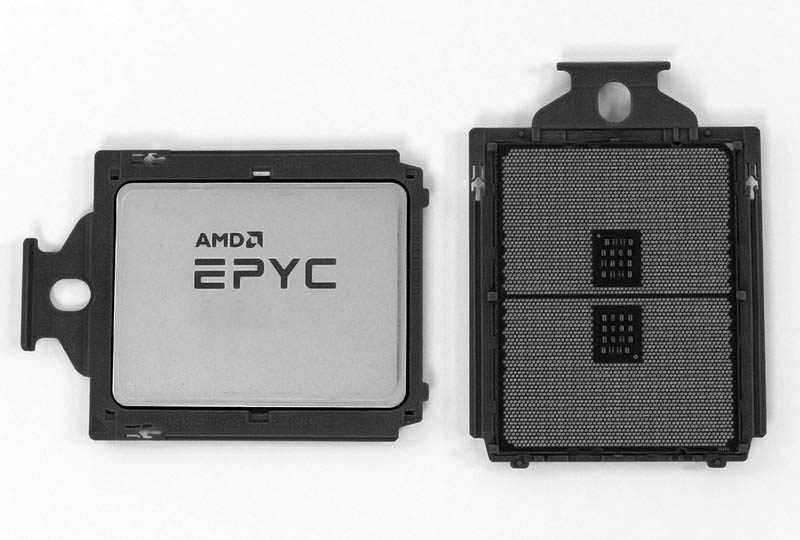

AMD EPYC Impact

Since we are likely to get comments about AMD’s focus on dual sockets being a factor here, we wanted to quickly address this. One could say that dual-socket popularity is partly driven by AMD EPYC “Rome.” With the EPYC 7002 series, one can get 128 cores in 2 sockets compared to a maximum of 112 in a 4-socket 2nd generation Intel Xeon Scalable server.
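The core-count comparison works out as follows. This minimal sketch assumes the top socketed part in each line: 64 cores for EPYC 7002 and 28 cores for 2nd Gen Xeon Scalable. It leaves out the BGA Platinum 9200, which is limited to two packages.

```python
EPYC_7002_MAX_CORES = 64
XEON_SCALABLE_GEN2_MAX_CORES = 28  # socketed parts only; excludes the Platinum 9200


def node_cores(sockets: int, cores_per_socket: int) -> int:
    """Physical cores in a node with identical CPUs in every socket."""
    return sockets * cores_per_socket


if __name__ == "__main__":
    epyc_2p = node_cores(2, EPYC_7002_MAX_CORES)
    xeon_4p = node_cores(4, XEON_SCALABLE_GEN2_MAX_CORES)
    print(epyc_2p, xeon_4p, epyc_2p - xeon_4p)
```

A dual-socket EPYC 7002 node therefore carries 16 more cores than the largest socketed 4P Xeon configuration, with half the sockets.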

Maybe that moves some small portion of the market, but realistically, the 4-socket market has been small for generations, and AMD, as of this writing, does not yet have 10% market share. This is largely a non-factor at this point.

Final Words

There are plenty of applications for which one wants to scale up a single node to 4 sockets. For example, for many business intelligence and analytics workloads, such as SAP HANA, you want big nodes with high memory capacity.

Even if you do not have an application like that, you can still see a lot of cost savings by moving to 4-socket infrastructure, both in the initial purchase and in some of the ongoing operational costs. You also get features like more PCIe expansion per node.

There are some negatives to going 4-socket. UPI communication and NUMA topology are not as strong as in dual-socket configurations, and that is one of the big factors holding 4-socket servers back.

Still, if you are aware of the pros and cons of four-socket servers, they can make a lot of sense for some organizations. There are probably segments of the market out there that could or should be using four-socket servers and are not at this point.

Is the 80 core Ampere benefiting from this lucky phonetic tradition as well?

“Eighty” sounds good in English, to my Celtic ears. It’s also been about the percentage thruput attained by most networking connections I’ve encountered in the wild. This thought has somehow prompted me to inquire if you’re in planning for any higher speed NIC reviews in the near future? Cray’s new switch would be of especial interest to my future, we’re going to need a new omnipath equivalent, for scalemp links and improved topology is simply killer c.p. for us. We have pooled HPE Z stations in our trading room for silly good improvements in performance while maintaining near silence. But that’s housing the switch under the floor tiles behind many inches of acoustic baffles. I have just begun reading about sound cancellation enclosures such as from Silentium, who claim 32dBa reduction. This would let us run standard TOR and hot aisle servers and reduce or eliminate our physical separation for soundproofing notably. We’ve tried remote graphics, but there’s something psychological about having your work under your desk and if you never saw the desks traders were seated at not a blink ago, space for half a dozen workstations per seat was the norm and our feet were toasty but they convinced us that management was conspiring to keep us from doing deals ducked under our desks, because they sure were audible across the line. London is a noise pollution hellhole, avg restaurant ambience is 94dBa, goodness… but the electricity grid can’t support better than 300W/230V in locations like Gresham St. So we need something like soundproof half racks with built in Tesla battery systems for overnight (I’m thinking physical, on a trolley, like we had tea delivered, if you’re old enough to remember pre Big Bang City.. delivery of power. One kilowatt hour or two, luv? How’s your programming getting along now? Mary said you were still stuck on the letter k, but don’t worry my popper, you’re still ahead of those sharp F lot and good for you! Now.. 
Extra TDP with your bun today, young man, or just a little lump of core turbo, dear?

That’s ~ 600W of American electric juice for every desk, reading the realtor sell sheet for a prime A grade new office right opposite Guildhall, that sold a few years ago. This was bad enough but the agency was spacing the desks so far apart we’d be on the hootnholler even for muttering swear words, sorry, each ways, under our breath…

More serious inquiries:

If Dell can do away with the mid plane, and anyway we know good solid wires make better PCI links than gazillion layer PCB, why can’t we have any installable daughter board 2C cards on risers above if trace length is sensitive?

For that matter, I’ve always wanted to be able to purchase AICs with machines Aluminum heatsink-cages that would plug in over high density cables to sockets instead of edge slots, to the rear of any box. I remember in electronics classes, edge connections were frowned upon from a great and intolerant height by our prof…

>> The world of 4-socket and even 8-socket servers have a fun quirk and that is a numeric regional preference. The number “4” is often referred to as unlucky in Chinese culture.

Is this a joke?

There are really two reasons why four socket servers aren’t more popular:

1) On the hardware side, Intel likes charging a premium for 4P/8P capable CPUs.

2) On the software side, a 2P setup is forgiving to software that isn’t NUMA friendly unlike 4P (on up).

imagine 4P epyc 7742…

I am from China. That is a “superstition,” but it is not one everyone shares.

I like that the white dude is the one bringing this perspective. Sure enough, I looked it up and it’s a thing https://en.wikipedia.org/wiki/Tetraphobia

It looks like how we have “lucky number 7” and Friday the 13th is unlucky.

Great write-up. New perspective for me

Actually, some servers are 4 socket servers.