Today we are going to take a look at something that has been a long time coming: running a ZFS server entirely from a PCIe card, the NVIDIA BlueField-2 DPU. This piece has been split into two based on our recent Ethernet SSDs Hands-on with the Kioxia EM6 NVMeoF SSD piece. In that piece, we looked at SSDs that did not require an x86 server. Here, we are going to show another way to do without the x86 server, which will hopefully start some discussion on the topic as we go through the basics of how this works. What we realized after the last piece is that this is a new enough concept that we could do a better job of explaining what is happening, and why. In this first part, we are going to use NVMe SSDs and ZFS to help folks understand exactly what is going on, and keep it as close to a NAS model as we possibly can before moving to a higher-level demo.

Video Version and Background

Here is the video version, where you can see the setup running along with some additional screen capture and a voiceover.

As always, we suggest opening this in a separate tab, browser, or app for a better viewing experience.

For some background:

These mostly have videos as well but they explain what a DPU is, and why it is different from traditional NICs. The AWS Nitro was really the first to implement this and we recently discussed the market in the AMD-Pensando video.

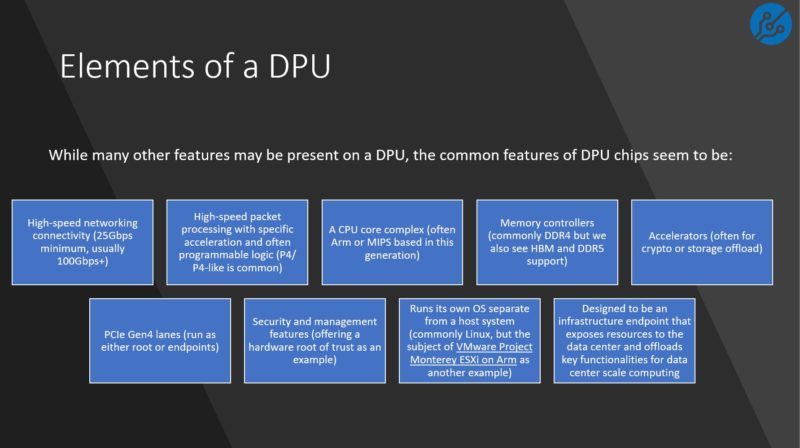

The short version, however, is that a DPU is a processor that combines a few key characteristics. Among them are:

- High-speed networking connectivity (usually multiple 100Gbps-200Gbps interfaces in this generation)

- High-speed packet processing with specific acceleration and often programmable logic (P4 or P4-like is common)

- A CPU core complex (often Arm or MIPS based in this generation)

- Memory controllers (commonly DDR4 but we also see HBM and DDR5 support)

- Accelerators (often for crypto or storage offload)

- PCIe Gen4 lanes (run as either root or endpoints)

- Security and management features (offering a hardware root of trust as an example)

- Runs its own OS separate from a host system (commonly Linux, with VMware Project Monterey's ESXi on Arm as another example)

Most of the coverage with DPUs has been DPUs in traditional x86 systems. The vision for DPUs goes well beyond that, and we will touch on that later. Still, it is correct to think of a DPU as a standalone processor that can connect to a host server or devices via PCIe and network fabric.

In this piece, we are going to show what happens when you do not use a host server, and run the DPU as a standalone mini-server by creating a ZFS-based solution with the card.

NVIDIA BlueField-2 DPU and Hardware Needed

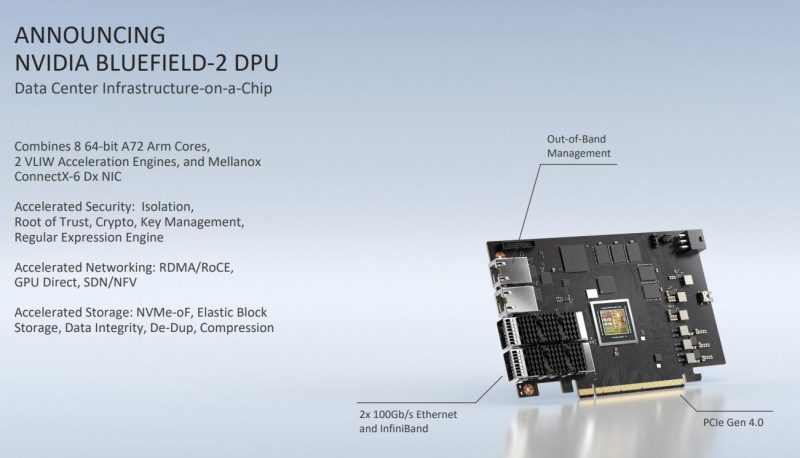

For this, we are using the NVIDIA BlueField-2 DPU. Here is the overview slide.

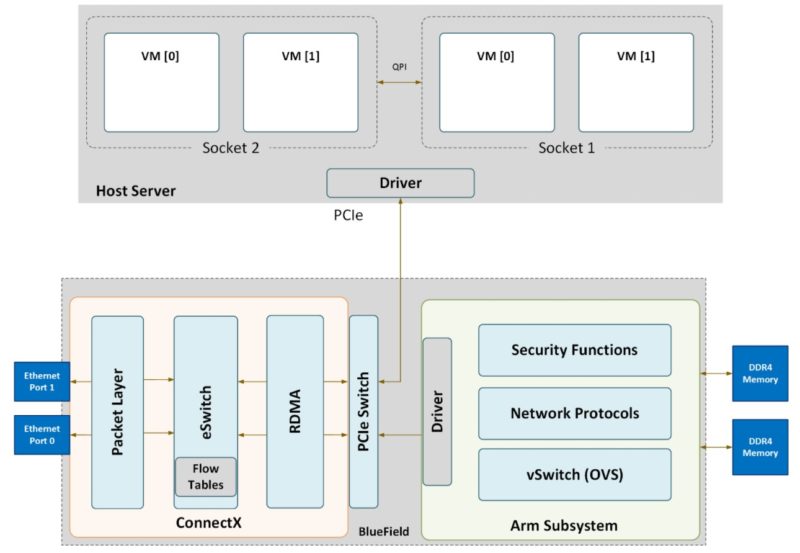

These are interesting because they are basically a ConnectX-6 NIC, a PCIe switch, and an Arm processor complex on the card.

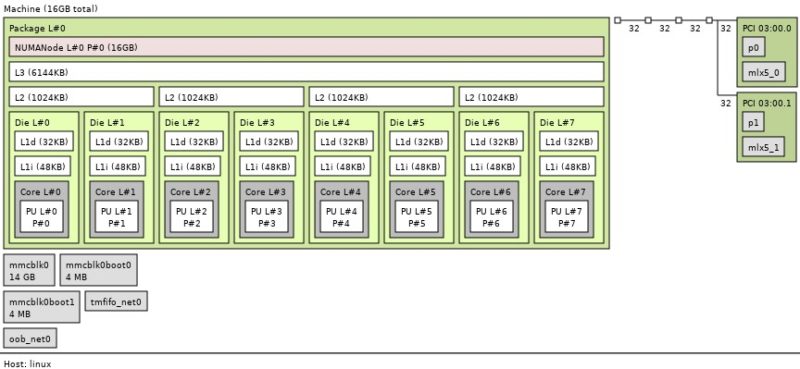

The Arm CPU complex has 16GB of single-channel DDR4 memory. NVIDIA has 32GB versions, but we have not been able to get one. We can see the ConnectX-6 NICs on the PCIe bus here. There is also eMMC storage that is running our Ubuntu 20.04 LTS OS.
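For readers who want to check the same thing from a shell on the card, here is a minimal Python sketch that walks the Linux sysfs PCI tree and picks out Mellanox Ethernet controllers and NVMe controllers. It is not the tooling we used for the screenshots. The function name `scan_pci` and the result keys are made up for illustration, and it assumes a Linux system that exposes `/sys/bus/pci/devices` with the usual `vendor` and `class` files.

```python
from pathlib import Path

MELLANOX_VENDOR_ID = 0x15B3     # PCI vendor ID used by Mellanox / NVIDIA networking
NVME_CLASS_CODE = 0x010802      # mass storage, non-volatile memory, NVMe interface
ETHERNET_CLASS_PREFIX = 0x0200  # network controller, Ethernet (class + subclass)


def read_hex(path):
    """Read a sysfs attribute such as '0x15b3' and return it as an int."""
    return int(path.read_text().strip(), 16)


def scan_pci(sysfs_root="/sys/bus/pci/devices"):
    """Return PCI addresses of Mellanox Ethernet and NVMe controllers.

    Hypothetical helper for illustration only; returns empty lists when
    the sysfs directory is not present.
    """
    found = {"mellanox_nics": [], "nvme": []}
    root = Path(sysfs_root)
    if not root.is_dir():
        return found
    for dev in sorted(root.iterdir()):
        try:
            vendor = read_hex(dev / "vendor")
            pci_class = read_hex(dev / "class")
        except (OSError, ValueError):
            continue  # skip entries without readable attributes
        if pci_class == NVME_CLASS_CODE:
            found["nvme"].append(dev.name)
        elif vendor == MELLANOX_VENDOR_ID and (pci_class >> 8) == ETHERNET_CLASS_PREFIX:
            found["mellanox_nics"].append(dev.name)
    return found


if __name__ == "__main__":
    for kind, addresses in scan_pci().items():
        print(kind, addresses)
```

On a card with no SSDs attached, one would expect only the NIC list to be populated; once the DPU is acting as a PCIe root for NVMe drives, those should appear in the second list.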

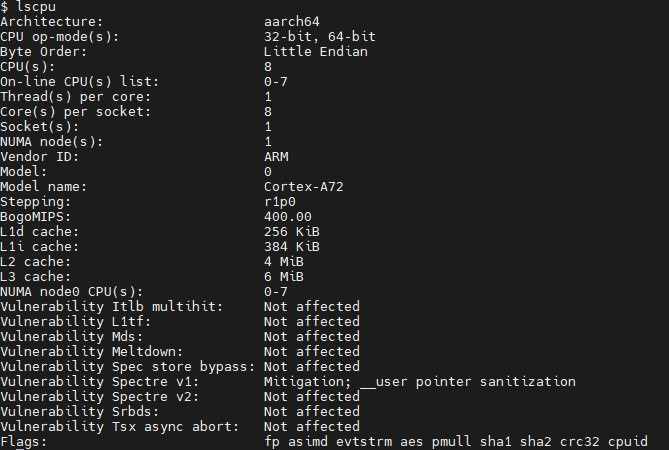

There are eight Arm Cortex-A72 cores with 1MB of L2 cache shared between two cores and a 6MB L3 cache.

Here is one of the BF2M516A cards that we have with dual 100GbE ports. We will note that these are the E-cores not the P or performance parts.

In the Building the Ultimate x86 and Arm Cluster-in-a-Box piece, we showed that each of these DPUs is an individual node running its own OS, leading to a really interesting little cluster.

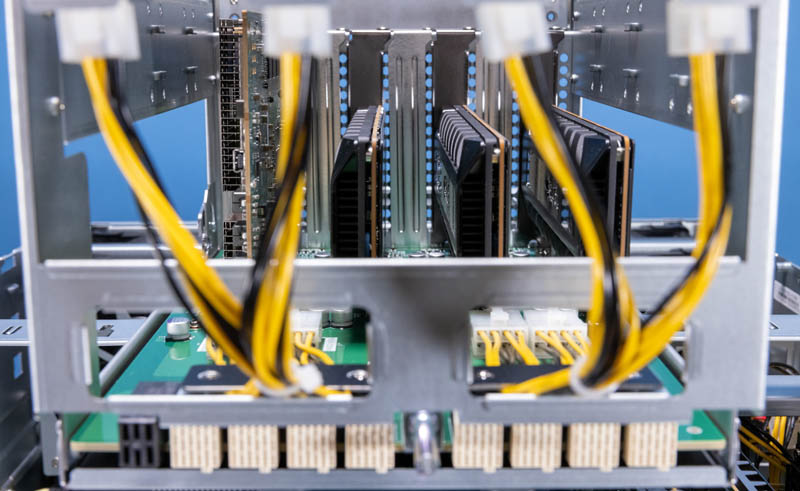

We are exploiting that property by utilizing the cards in the AIC JBOX that we recently reviewed.

The AIC JBOX is a PCIe Gen4 chassis that has two Broadcom PCIe switches and is solely meant to connect PCIe devices, power them, and cool them. There is no x86 processor in the system, so we can use the BlueField-2 DPU as a PCIe root and then use NVMe SSDs in the chassis to connect to the BlueField-2 DPU.
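To put rough numbers on the PCIe side versus the network side, here is a small back-of-the-envelope sketch in Python. It uses the standard PCIe Gen4 figures (16 GT/s per lane with 128b/130b encoding) and assumes each NVMe SSD sits on a Gen4 x4 link, which is typical but is our assumption here. These are raw link rates only; real throughput will be lower once PCIe packet overhead, the drives themselves, and the DPU's Arm cores are accounted for. The function names are hypothetical.

```python
import math

PCIE_GEN4_GT_PER_LANE = 16.0     # GT/s per lane for PCIe Gen4
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def pcie_gen4_gbps(lanes):
    """Raw usable link rate in Gbps for a PCIe Gen4 link of the given width."""
    return PCIE_GEN4_GT_PER_LANE * ENCODING_EFFICIENCY * lanes


def ssds_to_fill_network(ports, port_gbps, lanes_per_ssd=4):
    """Number of SSD links whose raw PCIe rate matches the network ports.

    lanes_per_ssd=4 is an assumption (typical NVMe SSD link width).
    """
    network_gbps = ports * port_gbps
    return math.ceil(network_gbps / pcie_gen4_gbps(lanes_per_ssd))


if __name__ == "__main__":
    print(f"Gen4 x4 link:  {pcie_gen4_gbps(4):.1f} Gbps")
    print(f"Gen4 x16 link: {pcie_gen4_gbps(16):.1f} Gbps")
    print(f"x4 SSDs to match 2x 100GbE: {ssds_to_fill_network(2, 100)}")
```

By this arithmetic a Gen4 x4 link is roughly 63 Gbps, so on paper it takes four such SSD links to exceed the 200 Gbps of the card's two 100GbE ports.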

With the hardware platform set, we are able to start setting up the system with ZFS.

very nice piece of writing.

now i understand the concept of DPU.

waiting for next part.

You’ve sold me on DPU for some network tasks, Patrick.

This article… two DPUs to export three SSDs. And, don’t look over there, the host server is just, uh, HOSTING. Nothing to see there.

How much does a DPU cost again?

Jen Sen should just bite the bullet and package these things as… servers. Or y’all should stop saying they do not require servers.

This is amazing. I’m one of those people that just thought of a DPU as a high-end NIC. It’s a contorted demo, but it’s relatable. I’d say you’ve done a great service

Okay, that was fun. Does nVidia have tools available to make their DPUs export storage to host servers via NVMe (or whatever) over PCIe? Could you, say, attach Ceph RBD volumes on the Bluefield and have them appear as hardware storage devices to host machines?

Chelsio is obviously touting something similar with their new boards.

SNAP is the tools and there’s DOCA. Don’t forget that this is only talkin’ about the storage, not all the firewalls that are running on this and it’s not using VMware Monty either.

Scott: Yup! one of the BlueField-2’s primary use cases is “NVMEoF storage target” – there are a couple of SKUs explicitly designed for that purpose, which don’t need a PCIe switch or any weird fiddly reconfiguration to directly connect to an endpoint device (though it’d be a bit silly to only attach 4 PCIe 4.0 x4 SSDs to it!)

Scott: Ah, just realized I misread – but yes, that’s one of the *other* primary use cases for BF2, taking remote NVMEoF / other block devices and presenting them to the host system as a local NVMe device. This is how AWS’s Nitro storage card works, they’re just using a custom card/SoC for it (the 2x25G Nitro network and storage cards use the same SoC as the MikroTik CCR2004-1G-2XS-PCIe, so that could be fun…)

I think that mikrotik uses an annapurna chip but its more like the ones they use for disk storage not the full Nitro cards being used today. It doesn’t have enough bandwidth for a new Nitro card.

I would like to see a test of the crypto acceleration – does it work with Linux LUKS?

Hope we will arrive at two DPUs both seeing the same SSDs. Then ZFS failover is possible and you could export ZFS block devices however you like or do an NFS export. Endless possibilities like, well, a server. The real power of a DPU only comes into play when the offload functionality comes into play.

Did you need to set any additional mlxconfig options to get this to work?

Any other tweaks required to get the BlueField to recognize the additional NVMe devices?

Thanks for the good article. I like the computer equipment of this company. It stands out for its reliability. If possible, write a few more articles about it. I think it would be interesting to read.