Today we have a fairly exciting piece. This is our first hands-on with a new class of SSDs designed for a different scale-out deployment model. The Kioxia EM6 is a NVMeoF SSD that uses Ethernet and RDMA instead of PCIe/ NVMe, SAS, or SATA to connect to the chassis. We get hands-on to show you why this is very cool technology.

Ethernet SSD Video Version

We have a video version of this one as well. In the video, we are able to show what the screens look like and show blinking videos and features like hot-swapping the drives.

As always, we suggest opening this in a new browser/ tab for the best viewing experience.

Kioxia EM6 NVMeoF SSD in the Ingrasys ES2000

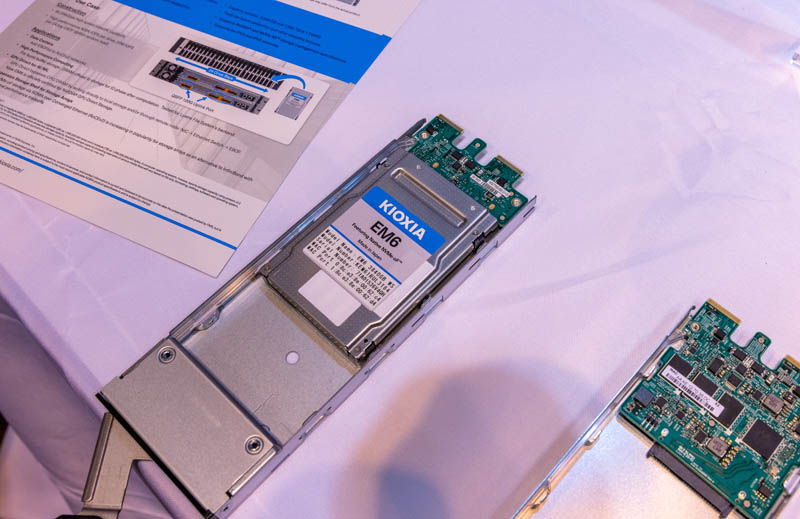

First off, the biggest change in this entire solution is the Kioxia EM6 SSD. This may look like a normal 2.5″ SSD, but there is a big difference, its I/O is Ethernet rather than traditional PCIe/ NVMe. One can see that the Ingrasys ES2000 sled has two EDSFF connectors on it. This is to maintain a common design with traditional NVMe products, but it highlights another feature. These are dual-port NVMeoF SSDs for redundancy. That is an important feature to allow them to compete in markets traditionally serviced by dual-ported SAS SSDs.

We looked at the Ingrasys ES2000 for Kioxia EM6 NVMe-oF SSDs at SC21. This is the first time that we got to actually use the system though.

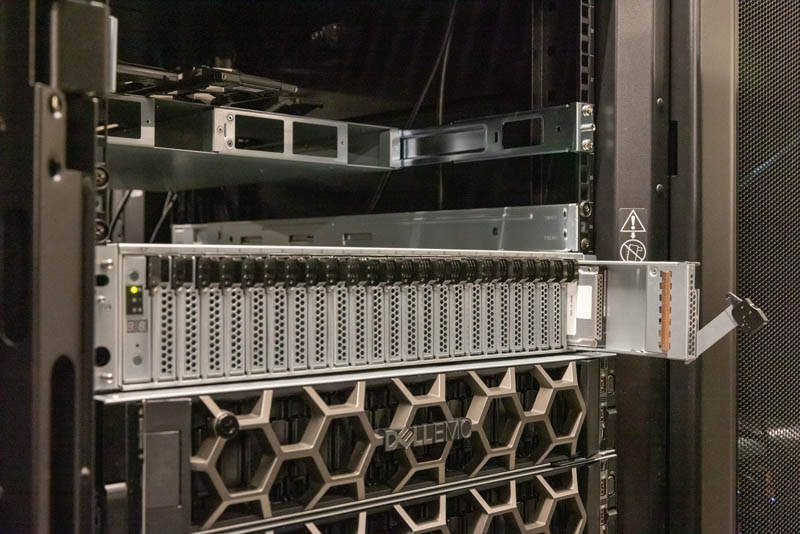

In the Kioxia lab, we got to use an older revision chassis but one can see it is a 24-bay 2U that looks like many other servers and storage shelves we have seen over the years.

We show this in the video, but we can actually hot-swap the EM6 SSDs. The EDSFF connectors are designed for hot-swap, and Ethernet networks, of course, are designed to handle devices going online/ offline.

Here is a look at the backplane that the drives plug into. As one can see, we have EDSFF connectors but only one set even though the drive trays have two connectors.

This is because there are two in each chassis. Here is a look at the switch node under a plexiglass cover.

Looking at the rear of the system, we can see the two switches. This particular system only has the top node connected. Also, as a fun note, since this is an Ethernet switch node, we can have 1-6 connections and in this system the one connection being used is 100GbE.

One can also see that each network switch tray has a USB port, an out-of-band management port, and a serial console port. While they may look like servers, these are switches.

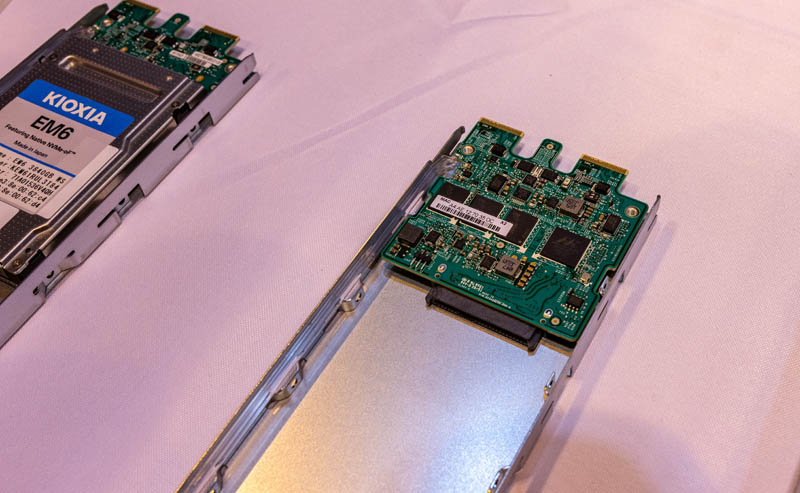

Inside the system, we can see the Marvell 98EX5630 switch chip covered by the big heatsink. Marvell also makes the NVMe to Ethernet controllers in the EM6 SSDs.

There is also a control plane for the switch under the black heatsink. This is powered with an Intel Atom C3538 CPU along with a M.2 SSD and 8GB of DRAM.

These switches, stacked one above the other allow each drive to access both switch networks simultaneously providing a redundant path to the drives.

For those wondering, there is a Marvell 88SN3400 adapter card that connects a traditional NVMe SSD to Ethernet so it can be used in this chassis.

Next, let us get hands-on and see some of the fun things we can do with the Kioxia EM6 SSDs.

Any indication on pricing and availability?

NVMeoF: This all sounds interesting, and perhaps the concrete poured to make my mind has fully cured (my 1st storage task was to write SASI (then SCSI) disk & tape drivers for a proprietary OS)…But how does one take this baseline physical architecture up to the level of enterprise storage array services? (file server, snapshotting, backup, security/managed file access, control head failover, etc)? Hmmmm…Maybe I should just follow the old 1980’s TV show “Soap” tag line “Confused? You won’t be after the next episode of Soap”.

This is really is a lot like SAS, but with Ethernet scale-out. The key thing is that you get the single drive small failure domain.

At one end you could do a 1000+ drive archival system with a few servers that are fully redundant (server failover). At the other end you could put more umph into “controllers” by adding more servers and use storage accelerators like DPUs.

Software defined systems based on plain servers have sizing issues. You spend to much on servers to maintain descent sized failure domains. Even if you decide to add a lot of space to each server, you need a lot of servers to keep the redundancy overhead down (erasure coding). Then you get into a multi PB system before it gets economical. Moving the software to the drives can solve this and given that this is already Ethernet it might not be that far out, but will have cost for on drive processing.

With the good old dual path drive you can start with two servers (controllers) and a 10 drive RAID6 and now scale as far as Ethernet or any other NVMeoF network will take you.

I think this system using erasure coding would be a great pair.

I have done tests of NVMEoF using QDR IB, x4 PCIe3 SSDs and Ryzen 3000 series PCs. Compared to locally attached NVME, NVMEoF had slightly slower POSIX open/close operations and essentially the same read/write bandwidth. Compared to NFS over RDMA, NVMEoF was substantially faster on every metric. So NVMEoF is pretty good, esp if you want to consolidate all your SSDs in one place.

Waiting for the price. Would be so nice to lose the Intel/AMD/ARM CPU tax on storage machines.

Can one NVMEoF device support concurrently access from multiple nodes?

Is each of these limited to 25 GbE (~3 GB/sec?) , with individual breakout cables from a 100 GbE connection? I can see where getting near PCI-e 3.0 NVME speeds from even a single storage drive without even needing host servers would prove interesting!

Very interesting! I wonder why they chose to run a full IP stack on the drives rather than keep it at the Ethernet layer? I suppose it does offer a lot more flexibility, allowing devices to be multiple hops away, but then you have a whole embedded OS and TCP stack on each device to worry about from a security standpoint. I suppose the idea is that you run these on a dedicated SAN, physically separate from the rest of the network?

@The Anh Tran – technically yes, you can mount an NVMEoF device on several hosts simultaneously. But it is in general a risky thing to do.

The different hosts see it as a local block device and assume exclusive access. You’ll run into problems where changes to the filesystem made by one host are not seen by other hosts – very bad stuff.

If all hosts were doing strictly read-only access then it would be safe. I did this to share ML training data amongst a couple of GPU compute boxes. It worked. Later, I tried updating the training data files from one host – the changes were not visible to the other hosts.

If, say, you were sharing DB data files amongst the members of a DBMS cluster via this method you would surely corrupt all your data as each node would have it’s own idea of file extents, record locations, etc. They’d trash each other’s changes.

This does sound interesting, I’m wondering what level of security / access control is provided. One would probably want to encrypt the data locally on the host (possibly using a DPU which could even do it transparently) before writing to the drive.

How much?

https://www.ingrasys.com/es2000

@Malvineous – The first RoCE version was plain ethernet, but they moved to UDP/IP in v2 to make routing possible. All this is handled by the NICs so it can RDMA directly to memory. There is also Soft-RoCE for NICs that does not support it in hardware.

@Hans: Last time I asked HPE for a quote on their J2000 JBOF, I suddenly got the urge to buy two servers with NVMe in the front Hoping that upcoming SAS4 JBODs will be tri-state so we at least can have dual-path over PCIe.

Access control and protocol latency will limit use cases, AC could be performed by the switch fabric eg by MAC or IP

This is truly exciting. I started working on distributed storage back in the days of Servenet, then infiniband, then RDMA over infiniband, then RDMA over ethernet,…

Kioxia has delivered an intelligent design that can be used to disaggregate storage with out the incredibly bad performance impact (latency, io rate, and throughput) of “Enterprise Storage Arrays”. It truly sucks that IT has been forced to put up with the inherent architectural flaws of storage arrays based on design principles from the 1980’s.

I wonder what the power consumption of those controllers ‘per drive’ is, nvme aren’t exactly friendly by themselves to the overall power budget per rack per-se.

Personally I’d like to see NVME get replaced with some of that new nextgen tech Intel were talking of a few years back, though never heard about it again since.

The principle with this tech is sound, the management sounds a real headache, looking forward to seeing how fully fleshed out the ‘software’ side becomes, as in, point-and-click for the sysadmin billing by the minute.

This is nuts. My drives will now run Linux and have dual Ethernet?? Also, makes sense.

Just noticed the trays are much deeper than the present drives. Does this hint at a future(?) drive form-factor?

Too many folks here are still looking at this in the old “x*controllers+disks presented to hosts” fashion. Look at the statement “imagine, if…the system instead used 100GB namespaces from 23 drives”. This is incredibly powerful – picture your VM managing a classic array built from namespace #1. Your next VM has a different array layout on namespace #2, and your DPU is running its own array on namespace #3. You can do old-school arrays, object-type arrays, distributed, or whatever you want all backed by a common set of drives which just need more disks added to the network in order to expand.

The only major issue I see here is all the testing is done using IPv4, which would swiftly run out of addresses if you scale. IPv6 is despised yes, but at least show us it works please.

When can we hope for some performance measures? Latency and rate would be the interesting measures, since they could be compared on the same system and SSD with direct PCIe.