The Magic – This is Actually a Multi-DPU ZFS and iSCSI Demo

Now for the more exciting part. This may be the mental model you have of the setup, but that would be incorrect.

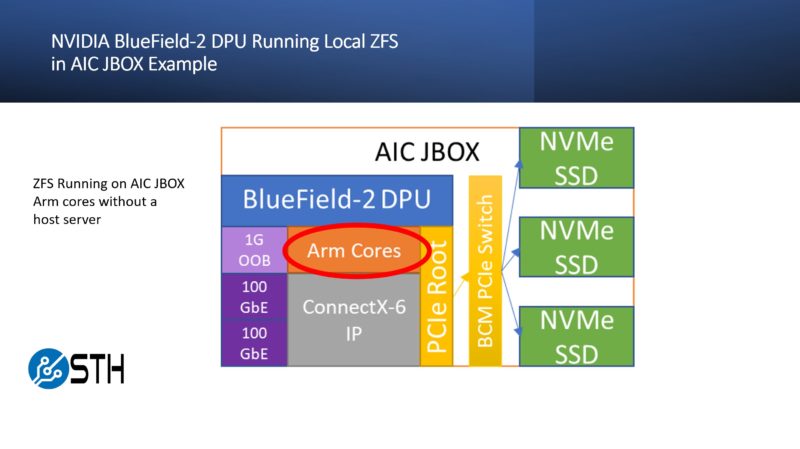

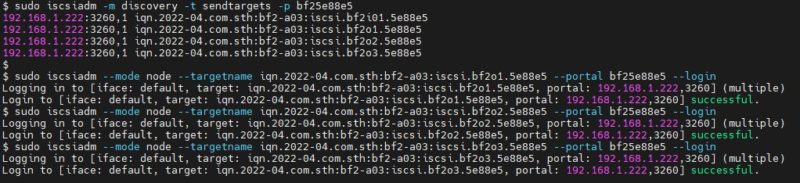

You will notice that the drives are showing up in the system as /dev/sda, /dev/sdb, and /dev/sdc. Normally an NVMe SSD, like the ones we are using, would be labeled as “nvme0n1” or something similar instead of “sda”. That is because there is something even more relevant to the DPU model going on here: there are actually two DPUs being used.
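To make the naming clue concrete, here is a minimal sketch that guesses which Linux block-layer driver a device sits behind based only on its name. The function name `guess_block_layer` and the regular expressions are our own illustration, not anything from the DPU tooling. Keep in mind that "sd" names cover anything routed through the SCSI disk driver (iSCSI LUNs, but also SAS, SATA, and USB disks), so this is a hint rather than proof.

```python
import re

# Linux naming conventions: the SCSI disk driver names whole disks sdX,
# while the NVMe driver names namespaces nvme<controller>n<namespace>.
_SCSI_DISK = re.compile(r"^sd[a-z]+$")
_NVME_NAMESPACE = re.compile(r"^nvme\d+n\d+$")


def guess_block_layer(device: str) -> str:
    """Return 'scsi', 'nvme', or 'unknown' for a whole-disk device name."""
    name = device.rsplit("/", 1)[-1]  # accept "/dev/sda" or plain "sda"
    if _SCSI_DISK.match(name):
        return "scsi"  # includes iSCSI LUNs, as in this demo
    if _NVME_NAMESPACE.match(name):
        return "nvme"  # locally attached NVMe namespace
    return "unknown"


for dev in ("/dev/sda", "/dev/sdb", "/dev/sdc", "/dev/nvme0n1"):
    print(dev, "->", guess_block_layer(dev))
```

Seeing "scsi" for drives that are physically NVMe SSDs is the hint that something sits between the SSDs and the system listing them.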

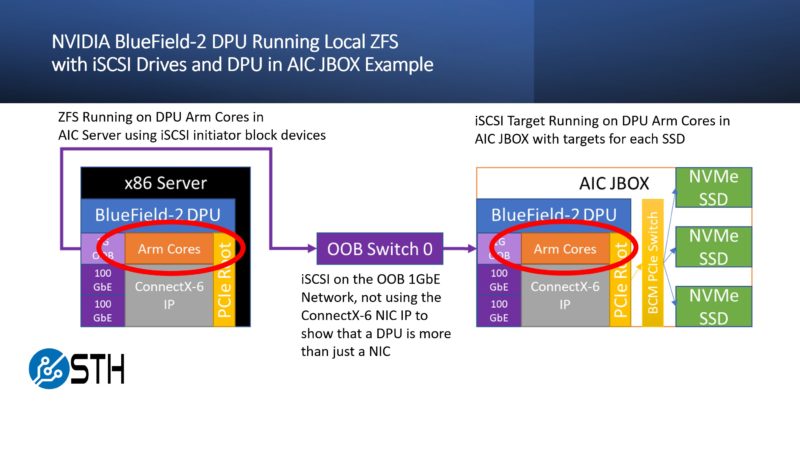

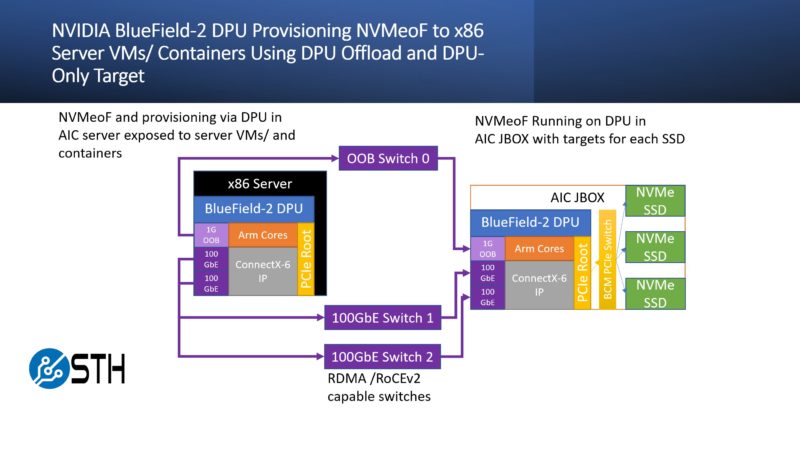

The first DPU is exporting the three 960GB NVMe SSDs as iSCSI targets from the AIC JBOX. The second DPU that we have been showing is in the host AIC server, and is serving as the iSCSI initiator and then handling ZFS duties. This is actually what the setup we are using looks like:

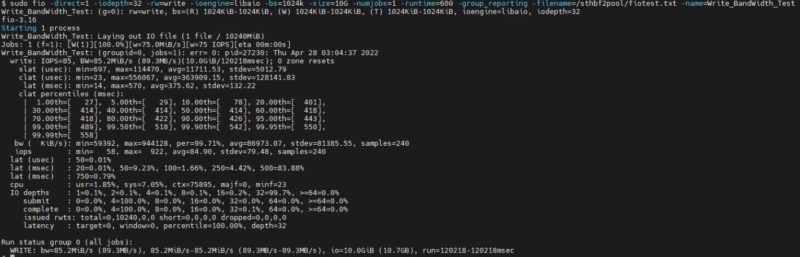

What we essentially have is two nodes doing iSCSI and ZFS without any x86 server involvement other than simply the second DPU getting power from the PCIe slot and cooling. Performance is pretty darn poor in this example because this is running totally on the out-of-band 1GbE management ports and not using the accelerators onboard the DPU.
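For a rough sense of why the 1GbE path is so limiting, here is a back-of-the-envelope calculation of the line-rate ceiling. It ignores Ethernet, TCP, and iSCSI overhead, so real throughput will be lower, and the helper name `line_rate_mb_per_s` is just for illustration.

```python
def line_rate_mb_per_s(gigabits_per_s: float) -> float:
    """Convert a link speed in Gbit/s to a ceiling in MB/s (decimal units)."""
    return gigabits_per_s * 1000 / 8  # 1000 Mbit per Gbit, 8 bits per byte


mgmt_port = line_rate_mb_per_s(1)     # out-of-band 1GbE management port
data_port = line_rate_mb_per_s(100)   # one 100GbE port on the DPU

print(f"1GbE ceiling:   {mgmt_port:.0f} MB/s")
print(f"100GbE ceiling: {data_port:.0f} MB/s")
print(f"Ratio:          {data_port / mgmt_port:.0f}x")
```

All three SSDs share that single 1GbE link in this demo, so the whole array is capped at the management port's ceiling no matter how fast the drives are.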

Still, it works. That is exactly the point. Without any x86 server in the path, we manage to mount SSDs on DPU1 in the AIC JBOX. We then use DPU1 to expose that storage as block devices to the network. Multiple block devices, exposed by DPU1, are then picked up by DPU2, and a ZFS RAID array is created. This ZFS RAID array is then able to provide storage. Again, this is network storage without a traditional server on either end.
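As a rough sketch of what the DPU2 side of that flow could look like, the snippet below only builds and prints the command lines rather than running them. The portal address, the IQNs, the pool name "tank", and the choice of raidz are all hypothetical placeholders, since we did not list the exact values here. The `iscsiadm` and `zpool create` invocations follow the standard open-iscsi and OpenZFS command forms. The DPU1 target side is left out because it depends on which iSCSI target software is in use.

```python
from typing import List


def initiator_commands(portal: str, target_iqns: List[str]) -> List[List[str]]:
    """Build open-iscsi commands to discover and log in to each target."""
    cmds = [["iscsiadm", "-m", "discovery", "-t", "sendtargets", "-p", portal]]
    for iqn in target_iqns:
        cmds.append(["iscsiadm", "-m", "node", "-T", iqn, "-p", portal, "--login"])
    return cmds


def zpool_command(pool: str, vdev_type: str, devices: List[str]) -> List[str]:
    """Build the OpenZFS command that creates a pool from the iSCSI disks."""
    return ["zpool", "create", pool, vdev_type, *devices]


# Hypothetical values: DPU1's portal address and one target per SSD.
PORTAL = "192.0.2.10"
IQNS = [f"iqn.2021-06.com.example:jbox-ssd{i}" for i in range(3)]

plan = initiator_commands(PORTAL, IQNS)
# After login, the three LUNs appear on DPU2 as SCSI disks (sda, sdb, sdc).
plan.append(zpool_command("tank", "raidz", ["/dev/sda", "/dev/sdb", "/dev/sdc"]))

for argv in plan:
    print(" ".join(argv))
```

Printing the plan instead of executing it keeps the sketch safe to run anywhere. On a real DPU you would run each line as root and confirm the device names before creating the pool.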

Final Words

The real purpose of this piece is to show the concepts behind next-generation infrastructure with DPUs. We purposefully stayed off the accelerators and the 100GbE networking in the NVIDIA BlueField-2 to show the stark contrast of DPU infrastructure using concepts like iSCSI and ZFS that have been around for a long time and that people generally understand well. With erasure coding offloads and more built into next-generation DPUs, many of the older concepts of having RAID controllers locally are going to go away as the network storage model offloads that functionality. Still, the goal was to show folks DPUs in a way that is highly accessible.

The next step for this series is showing DPUs doing NVMeoF storage using the accelerators and offloads as well as the 100GbE ConnectX-6 network ports. The reason we used this ZFS and iSCSI setup is that it showed many of the same concepts, just without going through the higher performance software and hardware. We needed something to bridge the model folks are accustomed to and the next-generation architecture, and this was the idea. For the next version, we will have this:

Not only are we looking at NVIDIA’s solution, but we are going to take a look at solutions across the industry.

very nice piece of writing.

now i understand the concept of DPU.

waiting for next part.

You’ve sold me on DPU for some network tasks, Patrick.

This article… two DPUs to export three SSDs. And, don’t look over there, the host server is just, uh, HOSTING. Nothing to see there.

How much does a DPU cost again?

Jen Sen should just bite the bullet and package these things as… servers. Or y’all should stop saying they do not require servers.

This is amazing. I’m one of those people that just thought of a DPU as a high-end NIC. It’s a contorted demo, but it’s relatable. I’d say you’ve done a great service

Okay, that was fun. Does nVidia have tools available to make their DPUs export storage to host servers via NVMe (or whatever) over PCIe? Could you, say, attach Ceph RBD volumes on the Bluefield and have them appear as hardware storage devices to host machines?

Chelsio is obviously touting something similar with their new boards.

SNAP is the tools and there’s DOCA. Don’t forget that this is only talkin’ about the storage, not all the firewalls that are running on this and it’s not using VMware Monty either.

Scott: Yup! one of the BlueField-2’s primary use cases is “NVMEoF storage target” – there are a couple of SKUs explicitly designed for that purpose, which don’t need a PCIe switch or any weird fiddly reconfiguration to directly connect to an endpoint device (though it’d be a bit silly to only attach 4 PCIe 4.0 x4 SSDs to it!)

Scott: Ah, just realized I misread – but yes, that’s one of the *other* primary use cases for BF2, taking remote NVMEoF / other block devices and presenting them to the host system as a local NVMe device. This is how AWS’s Nitro storage card works, they’re just using a custom card/SoC for it (the 2x25G Nitro network and storage cards use the same SoC as the MikroTik CCR2004-1G-2XS-PCIe, so that could be fun…)

I think that MikroTik uses an Annapurna chip, but it’s more like the ones they use for disk storage, not the full Nitro cards being used today. It doesn’t have enough bandwidth for a new Nitro card.

I would like to see a test of the crypto acceleration – does it work with Linux LUKS?

Hope we will arrive at two DPUs both seeing the same SSDs. Then ZFS failover is possible and you could export ZFS block devices however you like or do an NFS export. Endless possibilities like, well, a server. The real power of a DPU only comes into play when the offload functionality comes into play.

Did you need to set any additional mlxconfig options to get this to work?

Any other tweaks required to get the BlueField to recognize the additional NVMe devices?

Thanks for the good article. I like the computer equipment of this company. It stands out for its reliability. If possible, write a few more articles about it. I think it would be interesting to read.