The title may be a bit of a reach, but in our AIC JBOX review, we are going to look at a piece of hardware whose possibilities will make STH’ers salivate. Indeed, after this formal review, we have a number of very cool projects coming out over the next few weeks, since we have been using this JBOX not just for this review but also to showcase some really cool technology. The machine itself is, in many ways, very simple: there are no processors and no built-in networking. Instead, the AIC JBOX is designed to host PCIe devices, providing PCIe Gen4 switches, power supplies, and cooling for them. That simplicity yields great flexibility. Let us get to it.

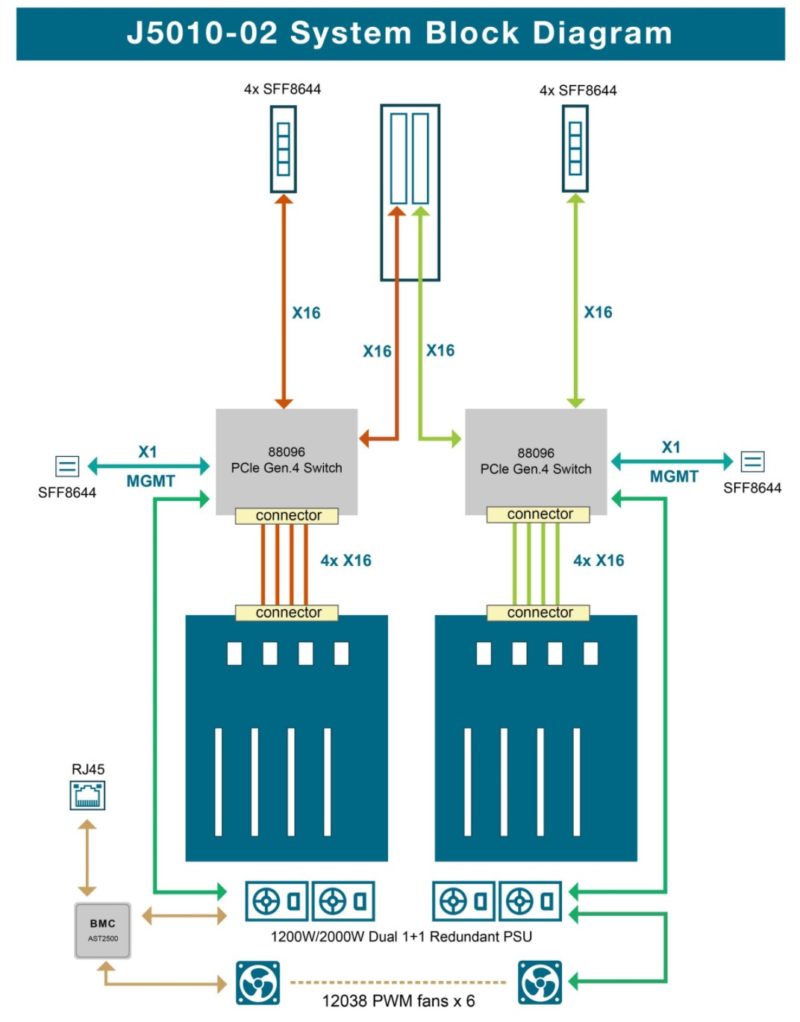

AIC JBOX (J5010-02) Block Diagram

For this overview, we are going to do something very different from our standard review format: we are going to start with the block diagram instead of the hardware overview. This review was originally written in the opposite order, but starting with the block diagram makes it much clearer what we are looking at once we get to the actual JBOX.

In many systems we review, we have two CPUs, then connections to PCIe slots. This is different. There are two largely independent PCIe Gen4 switches, both Broadcom Atlas PEX88096 parts. The 96 in the model number refers to the 96 primary PCIe lanes on each switch. A fun fact is that these switches actually have 98 PCIe lanes, with two lanes used for management. They even have a built-in Arm Cortex-R4 CPU, but that is there to manage the PCIe switch, not something you would want to use as a server CPU.

The key here is that instead of CPUs, the two PCIe Gen4 switches are the heart of the JBOX. One will also notice that there are effectively two different sides, each with its own Broadcom switch. That opens up several options. One can, for example, connect both sides to a single server with the same CPU acting as the root for both switches. One can instead connect each side to a different CPU in the same server to avoid socket-to-socket congestion. A third option is connecting one set of devices to one server and the other set to a second server. Perhaps the most unique option, and one that we will show in a follow-up piece, is not connecting this JBOX to a traditional server at all. Many of our STH readers will see what we are doing in a few of the photos.
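When each side is cabled to a different CPU, it helps to confirm which socket each device actually lands on. On Linux, every PCI device under `/sys/bus/pci/devices/` has a `numa_node` attribute for this. The sketch below is a minimal, hypothetical helper (`group_by_numa_node` is our own name, not an AIC or kernel tool) that groups PCI addresses by that attribute, assuming a Linux host; the sysfs root is a parameter so it can be pointed at a test directory.

```python
from collections import defaultdict
from pathlib import Path


def group_by_numa_node(sysfs_root="/sys/bus/pci/devices"):
    """Group PCI device addresses by the NUMA node the kernel reports.

    Assumes a Linux-style sysfs layout where each device directory holds a
    `numa_node` file; a value of -1 means the kernel reports no node affinity.
    """
    groups = defaultdict(list)
    for dev in sorted(Path(sysfs_root).iterdir()):
        node_file = dev / "numa_node"
        if not node_file.is_file():
            continue  # skip entries without NUMA information
        node = int(node_file.read_text().strip())
        groups[node].append(dev.name)
    return dict(groups)
```

Calling `group_by_numa_node()` on a host with both JBOX sides attached to different sockets should show the downstream devices split across two node IDs; if everything lands on one node, both switches share the same root CPU.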

With that, let us get to the hardware to see how this block diagram is implemented.

AIC JBOX (J5010-02) Hardware Overview

Originally this section was all just a large hardware overview, but it ended up with over two dozen photos, so we are splitting it into external and internal hardware overviews as we would do with our server reviews. Then, we are also going to show many different PCIe devices in the system.

AIC JBOX (J5010-02) External Hardware Overview

First off, let us talk dimensions. The chassis is 5U, so it is certainly tall, but it is relatively shallow at 450mm or 17.7″ deep. We get many folks asking about short-depth servers that can take add-in cards, so this is a really interesting option.

The front of the unit has a giant inlet filled with fans, then ~2U of blanked-out space. On the bottom, we can see a service tag, along with a power button, a serial port, and an RJ45 port.

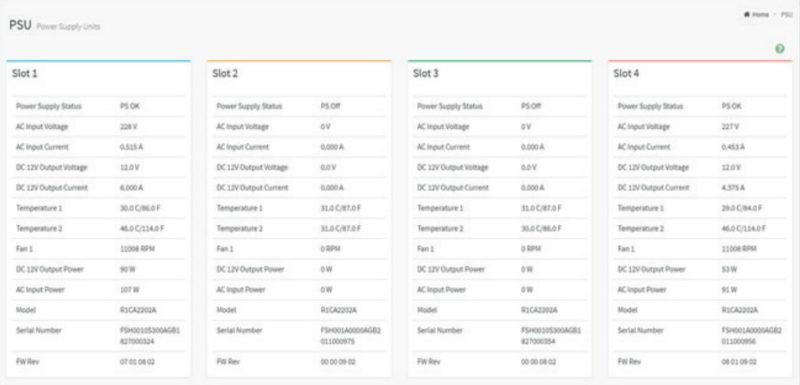

That RJ45 port provides MegaRAC SP-X management for sensor data, so one can see things like fan speeds, power supply status, and power consumption. We are going to do a bigger piece on AIC management in the next few weeks when we look at our first AIC server, but many readers will already be familiar with SP-X since it is widely used BMC firmware.
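MegaRAC SP-X firmware generally supports the DMTF Redfish API alongside IPMI, but we did not check which interfaces are enabled on this JBOX, so treat the following as an assumption. The sketch parses JSON shaped like the standard Redfish `Thermal` and `Power` resources (`Fans`, `PowerSupplies`, `PowerControl`). `summarize_sensors` is a hypothetical helper, and fetching the JSON (for example from a `/redfish/v1/Chassis/<id>/Thermal` URL) is deliberately left out so no credentials or chassis IDs are guessed.

```python
import json


def summarize_sensors(thermal, power):
    """Summarize fan speeds, PSU health, and power draw.

    `thermal` and `power` are dicts shaped like Redfish Thermal and Power
    resources; missing sections simply produce empty results.
    """
    fans = {
        fan.get("Name", "unknown"): (fan.get("Reading"), fan.get("ReadingUnits"))
        for fan in thermal.get("Fans", [])
    }
    psus = {
        psu.get("Name", "unknown"): psu.get("Status", {}).get("Health")
        for psu in power.get("PowerSupplies", [])
    }
    # PowerControl typically carries the chassis-level consumption reading.
    watts = sum(
        ctl.get("PowerConsumedWatts") or 0 for ctl in power.get("PowerControl", [])
    )
    return {"fans": fans, "psus": psus, "power_watts": watts}


if __name__ == "__main__":
    # Example payloads in Redfish shape; values are illustrative, not measured.
    thermal = json.loads('{"Fans": [{"Name": "FAN1", "Reading": 6000, "ReadingUnits": "RPM"}]}')
    power = json.loads(
        '{"PowerSupplies": [{"Name": "PSU1", "Status": {"Health": "OK"}}],'
        ' "PowerControl": [{"PowerConsumedWatts": 350}]}'
    )
    print(summarize_sensors(thermal, power))
```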

The fans have a cover, and loosening its two thumbscrews lets us pull it off. This cover appears to be a dust filter. Overall, the chassis is very easy to service: it uses thumbscrews, and the top cover has a simple push-tab release. We will see more as we go through the review.

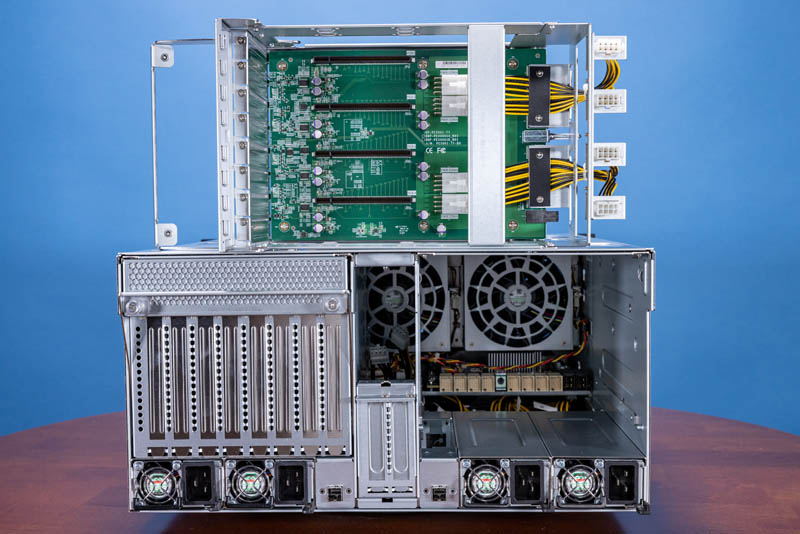

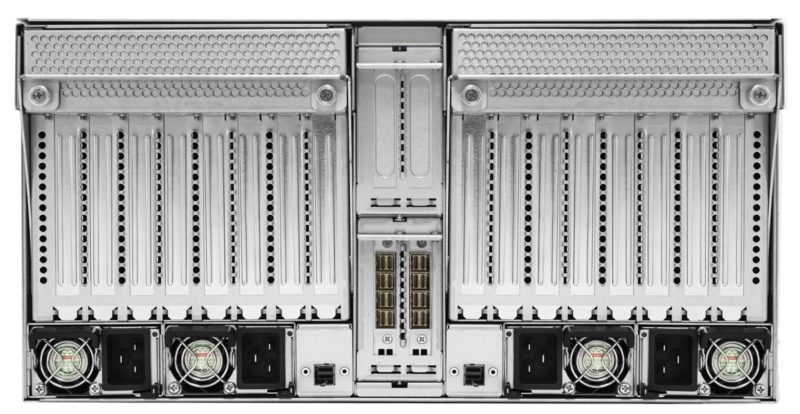

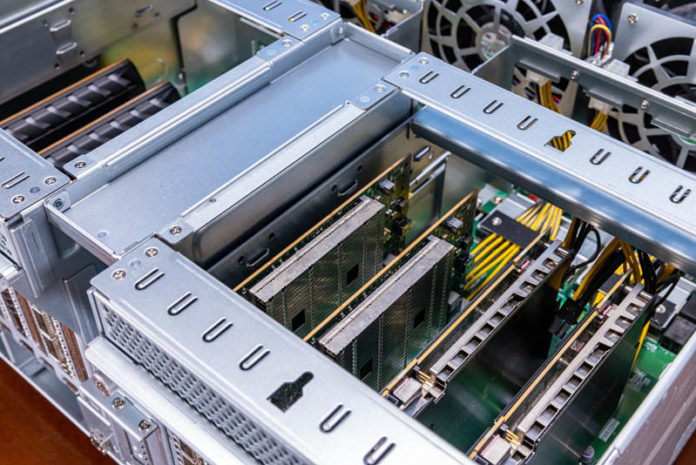

The rear of our unit had a large hole, which we will explain in a moment. For now, let us look at the big features. Each of the two sides of the chassis has a low-profile expansion slot in the middle and two power supplies. Above them are eight full-height expansion slots in total. We will discuss those more in our internal hardware overview, but they are the main feature of this JBOX.

The big hole in the middle is very interesting. In most configurations, it holds a block with eight SFF-8644 connectors, four per side. Each connector carries four PCIe lanes, so each side has sixteen PCIe lanes (32 in total) that can be connected to servers. This allows one to make a direct PCIe connection to the external chassis from one, two, or potentially more servers.

If you recall from the block diagram, each side of the chassis has four full-height double-width spaces, one x16 low-profile slot, and potentially the 4×4 lane external connections, for a total of six PCIe Gen4 x16 blocks per side (4x FH, 1x LP, 1x external). Here is a view with single-width cards to give you a sense of where the full-height PCIe slots are.
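The per-side layout lines up neatly with the 96 primary lanes of one PEX88096 switch. A quick tally, as a sketch that treats each block as a full x16 link and counts the two extra management lanes separately:

```python
# Per-side PCIe lane budget for one Broadcom PEX88096 switch, using the
# block counts from the block diagram; every block is treated as a x16 link.
BLOCKS_PER_SIDE = {
    "full-height slots": 4,
    "low-profile slot": 1,
    "external SFF-8644 (4 connectors x4 lanes)": 1,
}
LANES_PER_BLOCK = 16
MANAGEMENT_LANES = 2  # the two extra lanes that bring the switch to 98


def lane_budget(blocks=BLOCKS_PER_SIDE, lanes_per_block=LANES_PER_BLOCK):
    """Return the primary lanes used on one side of the JBOX."""
    return sum(count * lanes_per_block for count in blocks.values())


if __name__ == "__main__":
    primary = lane_budget()
    print(f"primary lanes: {primary}")                       # 96
    print(f"with management: {primary + MANAGEMENT_LANES}")  # 98
```

Six x16 blocks use exactly the 96 primary lanes, so no lanes on the switch go unused in this layout.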

Flanking the two low-profile slots in our system are two SFF-8644 ports. These are x1 ports from the Broadcom PCIe switches meant for management. You would not use these for primary connectivity to servers because of their limited bandwidth.
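To put that bandwidth gap in numbers: PCIe Gen4 runs at 16 GT/s per lane with 128b/130b encoding, which works out to roughly 1.97 GB/s per lane in each direction before protocol overhead. The sketch below computes that raw figure for an x1 management port, one x4 SFF-8644 connector, and a full x16 link; real-world throughput will be somewhat lower once packet overhead is included.

```python
# Raw per-direction PCIe Gen4 link bandwidth, ignoring packet/protocol overhead.
GEN4_GT_PER_S = 16.0             # gigatransfers per second per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def gen4_bandwidth_gbytes(lanes):
    """Approximate one-direction bandwidth in GB/s for a Gen4 link of `lanes` lanes."""
    return lanes * GEN4_GT_PER_S * ENCODING_EFFICIENCY / 8  # bits -> bytes


if __name__ == "__main__":
    for label, lanes in [("x1 management port", 1),
                         ("x4 SFF-8644 connector", 4),
                         ("x16 (four connectors)", 16)]:
        print(f"{label}: {gen4_bandwidth_gbytes(lanes):.2f} GB/s")
```

That is about 1.97 GB/s for the x1 port versus about 31.51 GB/s for a full x16 uplink, a 16x difference.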

We are only going to cover the two x16 low-profile slots here, but we did want to note that there are power connectors in this section as well.

In terms of power supplies, there are four 2kW 80 PLUS Platinum units. One small item we would have liked to see is PSUs that could operate on 110/120V with output restrictions. This configuration is aimed at GPUs, which is why we see the larger power supplies and no low-voltage support for edge racks in North America. If one really wanted to deploy these on 110/120V power, this is a fairly common PSU form factor, so AIC could likely arrange different PSUs for a sizable order, but we were not able to confirm that.
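A rough power budget helps when planning GPU loads. The sketch below uses the two-supplies-per-side layout from the rear of the chassis and assumes one supply per side is held in reserve for redundancy; we did not verify the actual redundancy policy, so `spares` is an assumption you can change. To model a hypothetical 110/120V unit, pass its low-line rating from the datasheet as `watts_per_psu`.

```python
def usable_watts(psus, watts_per_psu, spares=1):
    """Usable output when `spares` of `psus` supplies are held in reserve (N+spares)."""
    if spares >= psus:
        raise ValueError("need more supplies than spares")
    return (psus - spares) * watts_per_psu


if __name__ == "__main__":
    PSU_WATTS = 2000     # 2kW units, as shipped
    PSUS_PER_SIDE = 2    # two supplies per side of the chassis
    print("per side, N+1:", usable_watts(PSUS_PER_SIDE, PSU_WATTS))          # 2000
    print("per side, no spare:", usable_watts(PSUS_PER_SIDE, PSU_WATTS, 0))  # 4000
    print("chassis nameplate:", 2 * PSUS_PER_SIDE * PSU_WATTS)               # 8000
```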

Still, the exciting part is inside the chassis. With that, let us get to our internal hardware overview.
